python数据分析 生成词云图、jieba分词 从最简单的数据集入门

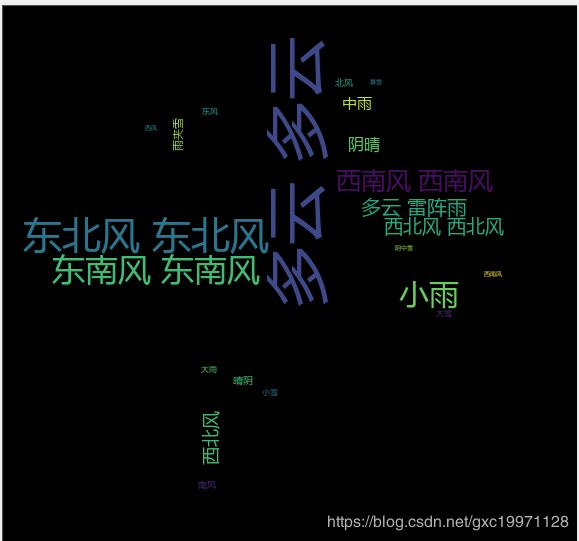

1.仅仅使用了一列文字数据进行生成词云图,我发现生成的图很丑,就当做是个简单的入门吧!哈哈

首先取了一列数据,并且转化为txt 文件

#词云图尝试

import numpy as np

import pandas as pd

from wordcloud import WordCloud

import PIL.Image as image

import csv

"""

没用到的东西

wind_sky = pd.read_csv('datadatadata1.csv',usecols = [5],header=None)

print(wind_sky)

print(type(wind_sky))

"""

#取数据

wind_sky = pd.read_csv('datadatadata1.csv',usecols = [5],header=None)

print(wind_sky)

print(type(wind_sky))

wind_sky_list = []

filename = 'datadatadata1.csv'

with open(filename,'r', encoding='UTF-8') as f:

reader = csv.reader(f)

header_row = next(reader)

column1 = [row[5] for row in reader]

wind_sky_list.append(column1)

print(wind_sky_list)

#转为txt文件(主要是自己不太会,所以就先这样子转一下)

def text_save(filename, data): # filename为写入txt文件的路径,data为要写入数据列表.

file = open(filename, 'a')

for i in range(len(data)):

s = str(data[i]).replace('[', '').replace(']', '') # 去除[],这两行按数据不同,可以选择

s = s.replace("'", '').replace(',', '') + '\n' # 去除单引号,逗号,每行末尾追加换行符

file.write(s)

file.close()

text_save('wind_sky_list.txt',wind_sky_list)

#正式开始画词云图

from wordcloud import WordCloud

import PIL.Image as image

import jieba

import numpy as np

def trans_cn(text):

word_list = jieba.cut(text)

result = " ".join(word_list)

return result;

with open("wind_sky_list5.txt") as fp:

text = fp.read()

text = trans_cn(text)

print(text)

#选了一张背景图片

mask = np.array(image.open("ciyun.png"))

WordCloud = WordCloud(

#背景图片

mask = mask,

#如果txt里面有中文的话一定要加这一行代码,不然出来的图会是空的框框!!!

font_path = "C:\\Windows\\Fonts\\msyh.ttc"

).generate(text)

image_produce = WordCloud.to_image()

image_produce.show()上面的图画完之后,感觉词太少了导致非常丑,所以现在我又加了一些数据,也就是又从我的数据集中多取了列数据。这里取数据的时候还发生了一些小插曲,就是我要取得那一列数据里面有波浪号,所以要去一下,相当于停用词吧,我知道有那更简单的方式,不过作为刚刚入门的小白,我这边还是写的比较啰嗦。

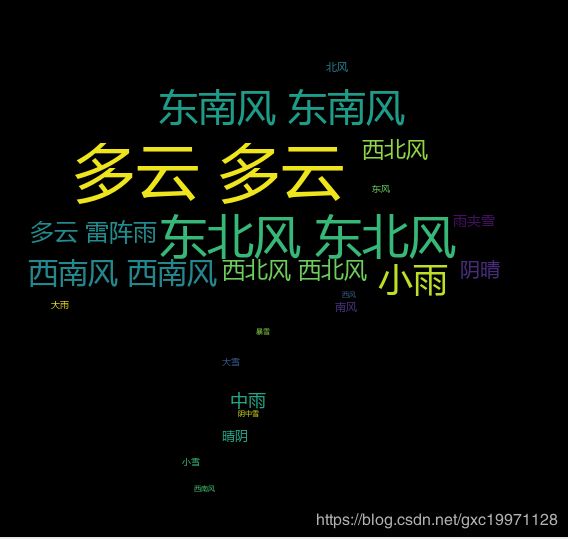

2.再次尝试画词云图(多加了一些数据)

2.1去多余符号

import csv

import numpy

list_no_bolang = []

import pandas as pd

list_no_bolang2 = []

with open('fy_tianqi_2019-2020.csv', 'rt',encoding='UTF-8') as f:

reader = csv.reader(f)

header_row = next(reader)

for row in reader:

for word in row:

str2 = word.replace('~', '')

list_no_bolang.append(str2)

for row1 in list_no_bolang:

#print(row1)

str3 = row1.replace('℃', '')

list_no_bolang2.append(str3)

print(list_no_bolang)

print(list_no_bolang2)

print(numpy.shape(list_no_bolang))

#print(numpy.shape(list_no_bolang2))

list_no_bolang_np=numpy.array(list_no_bolang2).reshape(345,9)

name = ["ymd","bWendu","yWendu","tianqi","fengxiang","fengli","aqi","aqiInfo","aqiLevel"]

csv_list = pd.DataFrame(columns=name, data=list_no_bolang_np)

csv_list.to_csv('for new ciyun//sky4.csv')

2.2取数据,转化为TXT文件

import numpy as np

import pandas as pd

import csv

#取4,5两列

wind_sky_list = []

filename = 'sky4.csv'

with open(filename,'r', encoding='UTF-8') as f:

reader = csv.reader(f)

header_row = next(reader)

column1 = [row[4] for row in reader]

wind_sky_list.append(column1)

print(np.shape(wind_sky_list))

with open(filename,'r', encoding='UTF-8') as f:

reader = csv.reader(f)

header_row = next(reader)

column2 = [row[5] for row in reader]

wind_sky_list.append(column2)

print(np.shape(wind_sky_list))

print(wind_sky_list)

#转为txt文件(主要是自己不太会,所以就先这样子转一下)

def text_save(filename, data): # filename为写入txt文件的路径,data为要写入数据列表.

file = open(filename, 'a')

for i in range(len(data)):

s = str(data[i]).replace('[', '').replace(']', '') # 去除[],这两行按数据不同,可以选择

s = s.replace("'", '').replace(',', '') + '\n' # 去除单引号,逗号,每行末尾追加换行符

file.write(s)

file.close()

text_save('wind_sky_list5.txt',wind_sky_list)2.3开始jieba分词并且画词云图

#正式开始画词云图

from wordcloud import WordCloud

import PIL.Image as image

import jieba

import numpy as np

def trans_cn(text):

word_list = jieba.cut(text)

result = " ".join(word_list)

return result;

with open("wind_sky_list5.txt") as fp:

text = fp.read()

text = trans_cn(text)

print(text)

#选了一张背景图片

mask = np.array(image.open("ciyun.png"))

WordCloud = WordCloud(

#背景图片

mask = mask,

#如果txt里面有中文的话一定要加这一行代码,不然出来的图会是空的框框!!!

font_path = "C:\\Windows\\Fonts\\msyh.ttc"

).generate(text)

image_produce = WordCloud.to_image()

image_produce.show()分词后的示例:

画出来的词云图:(还是有点丑 就是先练习练习:):两次运行结果不太一样:不过看起来字号大小是一样的