NIPS 2017 Deep Learning Paper Roundup (2)

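This is the second installment of the NIPS 2017 deep learning paper roundup. It continues the first installment, so the paper numbering picks up where that one left off.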

[21] Multi-Modal Imitation Learning from Unstructured Demonstrations using Generative Adversarial Nets

Karol Hausman, Yevgen Chebotar, Stefan Schaal, Gaurav Sukhatme, Joseph J. Lim

https://papers.nips.cc/paper/6723-multi-modal-imitation-learning-from-unstructured-demonstrations-using-generative-adversarial-nets.pdf

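This paper uses generative adversarial nets (GANs) for multi-modal imitation learning from unstructured demonstrations.

A related model is InfoGAN.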

[22] TernGrad: Ternary Gradients to Reduce Communication in Distributed Deep Learning

Wei Wen, Cong Xu, Feng Yan, Chunpeng Wu, Yandan Wang, Yiran Chen, Hai Li

https://papers.nips.cc/paper/6749-terngrad-ternary-gradients-to-reduce-communication-in-distributed-deep-learning.pdf

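This paper leans toward theoretical analysis and mainly optimizes the communication of parameters and gradients in distributed training.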
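To make the idea of ternary gradients concrete, here is a minimal sketch, assuming the commonly described scheme in which each gradient component is stochastically mapped to one of three values {-s, 0, +s}, with s the largest magnitude in the vector. The function name `ternarize` and the toy gradient are hypothetical and are not taken from the authors' code.

```python
import random

def ternarize(grad, rng):
    """Stochastically map each gradient component to one of {-s, 0, +s}."""
    s = max(abs(g) for g in grad)  # shared scale: largest magnitude in the vector
    if s == 0.0:
        return [0.0] * len(grad)
    out = []
    for g in grad:
        keep = rng.random() < abs(g) / s  # keep with probability |g| / s
        sign = 1.0 if g > 0 else -1.0
        out.append(sign * s if keep else 0.0)
    return out

rng = random.Random(0)
grad = [0.5, -0.25, 0.0, 1.0]  # toy gradient, an assumption for illustration
samples = [ternarize(grad, rng) for _ in range(20000)]
# Averaging many ternarized copies should approach the original gradient.
mean = [sum(col) / len(samples) for col in zip(*samples)]
print(mean)
```

Because each component is kept with probability proportional to its magnitude, the ternarized vector is an unbiased estimate of the original gradient, while only a scale and a sign-or-zero code per component would need to be communicated.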

The parallel framework is as follows.

Code:

https://github.com/wenwei202/terngrad

[23] Deep Learning with Topological Signatures

Christoph Hofer, Roland Kwitt, Marc Niethammer, Andreas Uhl

https://papers.nips.cc/paper/6761-deep-learning-with-topological-signatures.pdf

This paper incorporates topological information into deep learning.

Code:

https://github.com/c-hofer/nips2017

Related datasets:

http://www.mit.edu/~pinary/kdd/datasets.tar.gz

[24] Deep Dynamic Poisson Factorization Model

Chengyue Gong, Win-bin Huang

https://papers.nips.cc/paper/6764-deep-dynamic-poisson-factorization-model.pdf

This paper builds mainly on Poisson factor analysis.

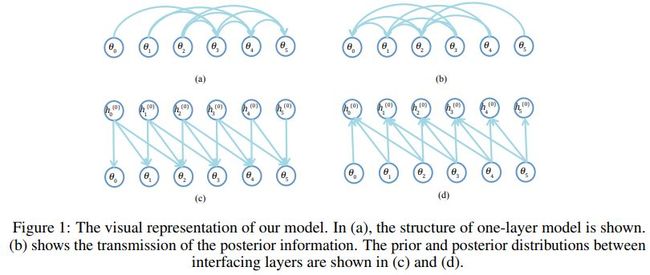

An example of the network structure is as follows.

Related datasets:

http://ai.stanford.edu/gal/data.html

https://github.com/cmrivers/ebola/blob/master/country_timeseries.csv

http://www.emdat.be/

https://datamarket.com/data/list/?q=provider:tsdl

[25] Convergent Block Coordinate Descent for Training Tikhonov Regularized Deep Neural Networks

Ziming Zhang, Matthew Brand

https://papers.nips.cc/paper/6769-convergent-block-coordinate-descent-for-training-tikhonov-regularized-deep-neural-networks.pdf

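This paper is mainly about a training algorithm, block coordinate descent, which is derived from the proximal point method in convex analysis.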

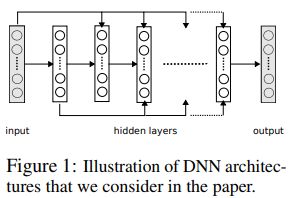

The network architecture used is as follows.

Code:

https://zimingzhang.wordpress.com/publications/

[26] Flexpoint: An Adaptive Numerical Format for Efficient Training of Deep Neural Networks

Urs Köster, Tristan Webb, Xin Wang, Marcel Nassar, Arjun K. Bansal, William Constable, Oguz Elibol, Scott Gray, Stewart Hall, Luke Hornof, Amir Khosrowshahi, Carey Kloss, Ruby J. Pai, Naveen Rao

https://papers.nips.cc/paper/6771-flexpoint-an-adaptive-numerical-format-for-efficient-training-of-deep-neural-networks.pdf

This paper speeds up the training of deep neural networks by changing the numerical data format.
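As a rough illustration of what a tensor-level numerical format can look like, the sketch below stores a list of values as integer mantissas that share a single exponent. This is a simplified assumption about the general idea, not the paper's exact format: the 16-bit mantissa width and the helper names `to_shared_exponent` and `from_shared_exponent` are hypothetical, and the adaptive exponent management suggested by the paper title is not modeled.

```python
import math

def to_shared_exponent(values, mantissa_bits=16):
    """Encode values as signed integer mantissas with one shared exponent."""
    limit = 2 ** (mantissa_bits - 1) - 1  # largest representable mantissa
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return [0] * len(values), 0
    # Smallest power-of-two scale that keeps the largest value within range.
    exponent = math.ceil(math.log2(max_abs / limit))
    scale = 2.0 ** exponent
    mantissas = [max(-limit, min(limit, round(v / scale))) for v in values]
    return mantissas, exponent

def from_shared_exponent(mantissas, exponent):
    """Decode integer mantissas back to floating-point values."""
    return [m * 2.0 ** exponent for m in mantissas]

values = [0.5, -1.25, 3.0, 0.001]  # toy tensor, an assumption for illustration
mantissas, exponent = to_shared_exponent(values)
decoded = from_shared_exponent(mantissas, exponent)
print(mantissas, exponent, decoded)
```

The design point this illustrates is that arithmetic on the mantissas can be done in integer hardware, with the exponent handled once per tensor rather than once per element.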

The framework of the key idea is as follows.

[27] The Numerics of GANs

Lars Mescheder, Sebastian Nowozin, Andreas Geiger

https://papers.nips.cc/paper/6779-the-numerics-of-gans.pdf

This paper is mainly a numerical analysis of GANs.

Code:

https://github.com/LMescheder/TheNumericsOfGANs

[28] PixelGAN Autoencoders

Alireza Makhzani, Brendan J. Frey

https://papers.nips.cc/paper/6793-pixelgan-autoencoders.pdf

The model in this paper is built from PixelCNN and a GAN.

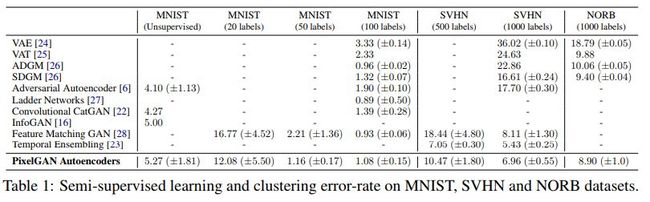

The network architecture is as follows.

A comparison of results across models is as follows.

[29] Stabilizing Training of Generative Adversarial Networks through Regularization

Kevin Roth, Aurelien Lucchi, Sebastian Nowozin, Thomas Hofmann

https://papers.nips.cc/paper/6797-stabilizing-training-of-generative-adversarial-networks-through-regularization.pdf

This paper addresses GAN training; its main method is regularization.

The algorithm is as follows.

Code:

https://github.com/rothk/Stabilizing_GANs

[30] Training Deep Networks without Learning Rates Through Coin Betting

Francesco Orabona, Tatiana Tommasi

https://papers.nips.cc/paper/6811-training-deep-networks-without-learning-rates-through-coin-betting.pdf

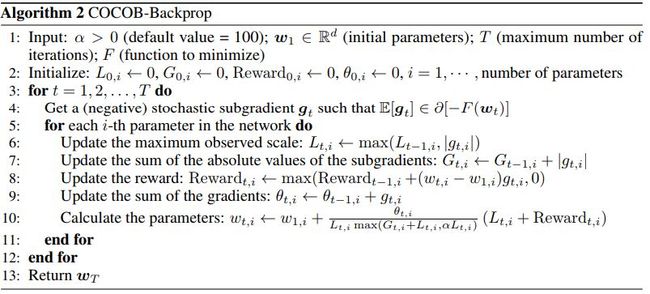

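This paper proposes a new stochastic gradient algorithm for training deep neural networks that does not require a learning rate.

The key procedure is as follows.

Code: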

https://github.com/bremen79/cocob

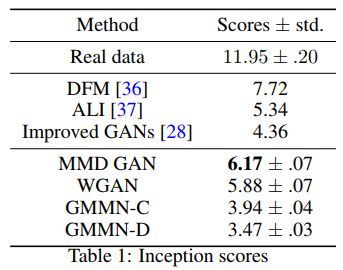

[31] MMD GAN: Towards Deeper Understanding of Moment Matching Network

Chun-Liang Li, Wei-Cheng Chang, Yu Cheng, Yiming Yang, Barnabas Poczos

https://papers.nips.cc/paper/6815-mmd-gan-towards-deeper-understanding-of-moment-matching-network.pdf

This paper proposes a new GAN, MMD GAN, which combines GMMN (generative moment matching network) with GAN; the central idea of GMMN is MMD (maximum mean discrepancy).
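For readers unfamiliar with MMD, the following is a minimal sketch of the standard biased estimate of squared MMD between two one-dimensional samples. The fixed Gaussian kernel, its bandwidth, and the function names are assumptions chosen for illustration; this is not the paper's training objective or code.

```python
import math
import random

def gaussian_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two scalars."""
    return math.exp(-((x - y) ** 2) / (2.0 * bandwidth ** 2))

def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased estimate of squared MMD between two 1-D samples."""
    def mean_kernel(a, b):
        total = sum(gaussian_kernel(u, v, bandwidth) for u in a for v in b)
        return total / (len(a) * len(b))
    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)

rng = random.Random(0)
a = [rng.gauss(0.0, 1.0) for _ in range(200)]
b = [rng.gauss(0.0, 1.0) for _ in range(200)]  # same distribution as a
c = [rng.gauss(3.0, 1.0) for _ in range(200)]  # shifted distribution
same = mmd_squared(a, b)
shifted = mmd_squared(a, c)
print(same, shifted)
```

Samples drawn from the same distribution give a value near zero, while the shifted sample gives a clearly larger value, which is what makes MMD usable as a discrepancy between generated and real data.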

The algorithm is as follows.

A comparison of the algorithms is as follows.

Code:

https://github.com/OctoberChang/MMD-GAN

[32] The Reversible Residual Network: Backpropagation Without Storing Activations

Aidan N. Gomez, Mengye Ren, Raquel Urtasun, Roger B. Grosse

https://papers.nips.cc/paper/6816-the-reversible-residual-network-backpropagation-without-storing-activations.pdf

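This paper proposes RevNet, a variant of ResNet that does not need to keep activations in memory; each layer's activations are instead reconstructed directly from those of the next layer.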
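The reconstruction idea can be sketched with an additive coupling block, assuming the input is split into two halves `x1` and `x2`. The residual functions `f` and `g` here are hypothetical stand-ins for learned sub-networks, chosen only so the example runs.

```python
import math

def f(v):
    """Hypothetical residual function F (stands in for a learned sub-network)."""
    return [math.tanh(a) for a in v]

def g(v):
    """Hypothetical residual function G (stands in for a learned sub-network)."""
    return [0.5 * a * a for a in v]

def forward(x1, x2):
    """Reversible block: compute the outputs from the two input halves."""
    y1 = [a + b for a, b in zip(x1, f(x2))]
    y2 = [a + b for a, b in zip(x2, g(y1))]
    return y1, y2

def inverse(y1, y2):
    """Recover the inputs from the outputs, so they need not be stored."""
    x2 = [a - b for a, b in zip(y2, g(y1))]
    x1 = [a - b for a, b in zip(y1, f(x2))]
    return x1, x2

x1, x2 = [0.3, -1.2], [2.0, 0.1]
y1, y2 = forward(x1, x2)
r1, r2 = inverse(y1, y2)
print(r1, r2)
```

Note that `f` and `g` themselves do not have to be invertible; the additive structure alone is what allows the inputs to be recomputed during the backward pass.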

The algorithm is as follows.

Code:

https://github.com/renmengye/revnet-public

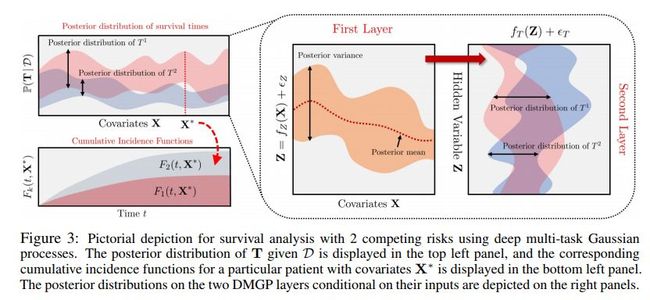

[33] Deep Multi-task Gaussian Processes for Survival Analysis with Competing Risks

https://papers.nips.cc/paper/6827-deep-multi-task-gaussian-processes-for-survival-analysis-with-competing-risks.pdf

This paper proposes a nonparametric Bayesian model for survival analysis, DMGP (deep multi-task Gaussian process).

The model is illustrated as follows.

Related code and datasets:

https://cran.r-project.org/web/packages/pec/index.html

https://cran.r-project.org/web/packages/threg/index.html

https://seer.cancer.gov/causespecific/

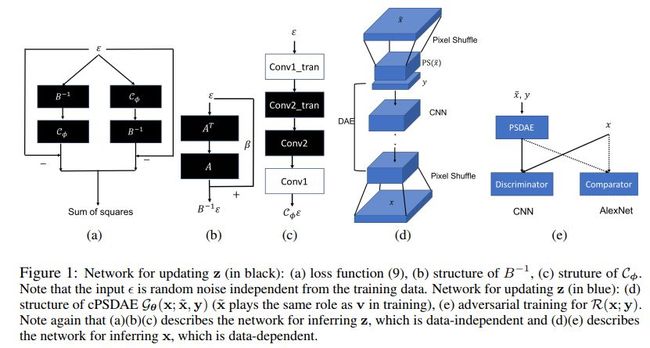

[34] An inner-loop free solution to inverse problems using deep neural networks

Kai Fan, Qi Wei, Lawrence Carin, Katherine A. Heller

https://papers.nips.cc/paper/6831-an-inner-loop-free-solution-to-inverse-problems-using-deep-neural-networks.pdf

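This paper applies deep learning to inverse problems and eliminates the inner loop. The approach is built mainly on conditional denoising autoencoders and convolutional neural networks.

The network architecture is as follows.

The algorithm steps are as follows.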

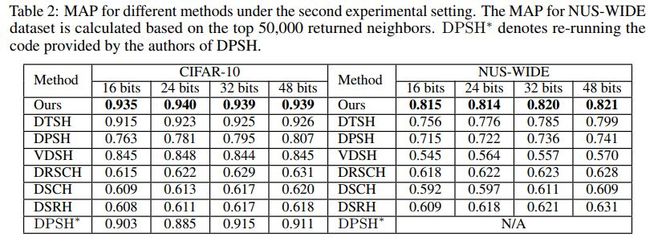

[35] Deep Supervised Discrete Hashing

Qi Li, Zhenan Sun, Ran He, Tieniu Tan

https://papers.nips.cc/paper/6842-deep-supervised-discrete-hashing.pdf

A comparison of the algorithms is as follows.

[36] Fisher GAN

Youssef Mroueh, Tom Sercu

https://papers.nips.cc/paper/6845-fisher-gan.pdf

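This paper proposes a new GAN based mainly on IPM (integral probability metrics).

A comparison of this algorithm with other algorithms is as follows.

An example of combining the Fisher IPM with a neural network is as follows.

The algorithm is as follows.

A comparison of the results of the algorithms is as follows.

Code: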

https://github.com/tomsercu/FisherGAN

[37] EX2: Exploration with Exemplar Models for Deep Reinforcement Learning

Justin Fu, John Co-Reyes, Sergey Levine

https://papers.nips.cc/paper/6851-ex2-exploration-with-exemplar-models-for-deep-reinforcement-learning.pdf

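This paper addresses exploration in deep reinforcement learning using exemplar models.

The algorithm is as follows.

The network architecture is as follows.

Code: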

https://github.com/justinjfu/exemplar_models

https://github.com/jcoreyes/ex2

[38] Nonlinear random matrix theory for deep learning

Jeffrey Pennington, Pratik Worah

https://papers.nips.cc/paper/6857-nonlinear-random-matrix-theory-for-deep-learning.pdf

This paper applies nonlinear random matrix theory to deep learning.

[39] Dual Discriminator Generative Adversarial Nets

Tu Nguyen, Trung Le, Hung Vu, Dinh Phung

https://papers.nips.cc/paper/6860-dual-discriminator-generative-adversarial-nets.pdf

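This paper proposes a new GAN with two discriminators.

The network architecture is as follows.

A comparison of the results of the models is as follows.

Code: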

https://github.com/tund/D2GAN

[40] #Exploration: A Study of Count-Based Exploration for Deep Reinforcement Learning

Haoran Tang, Rein Houthooft, Davis Foote, Adam Stooke, Xi Chen, Yan Duan, John Schulman, Filip DeTurck, Pieter Abbeel

https://papers.nips.cc/paper/6868-exploration-a-study-of-count-based-exploration-for-deep-reinforcement-learning.pdf

This paper studies exploration in deep reinforcement learning, based mainly on counting state visits.
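A minimal sketch of count-based exploration, assuming states are mapped to short binary codes by a random projection and the exploration bonus shrinks with the inverse square root of the visit count. The class name `HashCounter`, the code length, and the bonus coefficient `beta` are hypothetical choices for illustration, not values from the paper or its code.

```python
import math
import random
from collections import defaultdict

class HashCounter:
    """Counts visits to hashed states and returns an exploration bonus."""

    def __init__(self, state_dim, code_bits=8, beta=0.1, seed=0):
        rng = random.Random(seed)
        # Fixed random projection; the sign pattern of the projection is the hash code.
        self.proj = [[rng.gauss(0.0, 1.0) for _ in range(state_dim)]
                     for _ in range(code_bits)]
        self.beta = beta
        self.counts = defaultdict(int)

    def code(self, state):
        """Binary hash code of a state: one sign bit per projection row."""
        return tuple(sum(w * s for w, s in zip(row, state)) >= 0.0
                     for row in self.proj)

    def bonus(self, state):
        """Record one visit and return beta / sqrt(visit count)."""
        key = self.code(state)
        self.counts[key] += 1
        return self.beta / math.sqrt(self.counts[key])

counter = HashCounter(state_dim=3)
state = [1.0, 2.0, 3.0]  # toy state, an assumption for illustration
bonuses = [counter.bonus(state) for _ in range(4)]
print(bonuses)
```

In use, the bonus would be added to the environment reward so that rarely visited regions of the state space look more attractive to the agent; similar states tend to share a hash code and therefore share a count.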

The algorithm pseudocode is as follows.