Hybrid Composition with IdleBlock

Hybrid Composition with IdleBlock: More Efficient Networks for Image Recognition

Main idea

We propose a new building block, IdleBlock, which naturally prunes connections within the block.

It uses a novel hybrid composition with IdleBlock.

Background

Remarkable single branch backbones

Examples include Network in Network, VGGNet, ResNet, DenseNet, ResNeXt, MobileNet v1/v2/v3, and ShuffleNet v1/v2.

learn with multiple resolutions

design complex connections inside a block to handle information exchanges of different resolutions.

Examples: MultiGrid-Conv, OctaveConv, and HRNet.

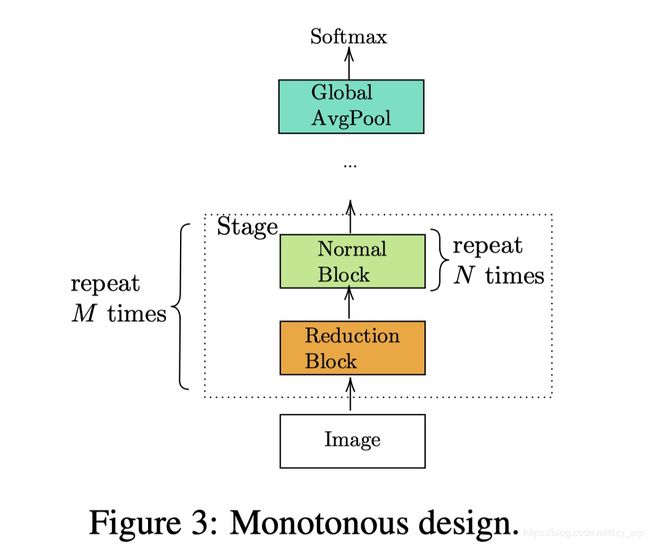

Monotonous Design

At the beginning of each stage, we insert a reduction block and then repeatedly stack the normal block. A network has multiple such stages, and each stage may have a different number of normal blocks.

For example

- ResNet monotonically repeats a Bottleneck block

- ShuffleNet monotonically repeats a ShuffleBlock

- MobileNet v2/v3 and EfficientNet monotonically repeat an Inverted Residual Block (MBBlock)
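The monotonous pattern can be sketched in a few lines. This is only an illustration, assuming blocks are represented by hypothetical string labels rather than real layers; `build_monotonic_stages` and its arguments are names invented for this sketch.

```python
def build_monotonic_stages(normal_block, reduction_block, blocks_per_stage):
    """Build a monotonous network layout.

    Each stage starts with one reduction block, followed by `n` copies of
    the same normal block. Blocks are plain string labels (hypothetical).
    """
    stages = []
    for n in blocks_per_stage:
        stages.append([reduction_block] + [normal_block] * n)
    return stages


# Three stages with 1, 2 and 3 normal blocks respectively.
stages = build_monotonic_stages("MBBlock", "MBBlock(stride=2)", [1, 2, 3])
for index, stage in enumerate(stages):
    print(index, stage)
```

Every stage uses the same normal block type; only the repeat count varies, which is the constraint that hybrid composition relaxes.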

design more efficient CNNs

Method 1: Neural Architecture Search (NAS)

given a constraint on computation resources, NAS attempts to automatically determine the best network connections, block designs and hyper-parameters.

Method 2: Network Pruning (NP)

given a pretrained network, an automatic algorithm is able to remove unimportant connections, to reduce computation and parameters.

Our work

We break the monotonous design constraint in state-of-the-art architectures. We call our non-monotonous composition method Hybrid Composition (HC).

- design a network architecture

- the blocks are guaranteed to have full information exchange

- prune connections from the network.

- it is not guaranteed that each block has full information exchange

By considering pruning in the network design step, we create a new block design methodology: Idle.

Results

Leveraging the computation budget pruned by IdleBlock to go deeper with hybrid composition achieves new state-of-the-art network architectures, without complex multi-resolution design or neural architecture search.

Motivation

Bottleneck Block & Inverted Residual Block

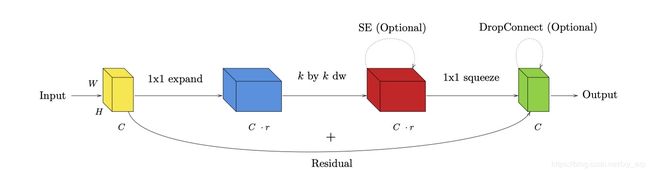

Inverted Residual Block (MBBlock)

**A pointwise convolution** expands the input dimension by a factor of r, a depthwise convolution is applied to the expanded features, and another pointwise convolution squeezes the high-dimensional features back into a lower dimension (usually the same as the input, to allow residual addition).

The expanded feature map helps improve a network’s expressive power.
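The channel counts through an MBBlock can be traced as follows. This is a small sketch that only tracks channel dimensions, not a real layer implementation; the function name and the tuple layout are assumptions made for illustration.

```python
def mbblock_channel_trace(c_in, r, c_out=None):
    """Return (operation, input channels, output channels) per step.

    `c_out` defaults to `c_in`, the case that allows residual addition.
    """
    c_out = c_in if c_out is None else c_out
    expanded = c_in * r
    return [
        ("pointwise expand", c_in, expanded),
        ("depthwise k x k", expanded, expanded),  # depthwise keeps the channel count
        ("pointwise project", expanded, c_out),
    ]


trace = mbblock_channel_trace(c_in=32, r=6)
for step in trace:
    print(step)
```

With 32 input channels and r = 6, the depthwise convolution operates on 192 channels before the block projects back to 32.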

Bottleneck

Bottleneck applies a spatial transformation (k×k convolution or k×k depthwise convolution) on a narrowed feature map

The Bottleneck design is proposed in ResNet, where 3×3 convolutions are the most computationally intensive component. With 3×3 convolutions, narrowed feature maps significantly reduce computation cost

“Idle” Design

design principles for an efficient block structure, attempting to reduce computation (MAdds)

reduce computation (MAdds)

- to reduce computation in a k × k spatial convolution, depthwise convolutions can be used to replace the original convolution operator

optimize directly for modern hardware platforms

Difficulty:

Post-training pruning reduces computation, but without very specialized implementations and high levels of sparsity, the reduction rarely translates into an actual speedup.

This paper:

We seek to introduce a structured pruned topology into our block design

With this insight, we create a new design pattern: Idle, which aims to pass a subspace of the input directly to the output tensor, without any transformations.

In the Idle design, we introduce an idle factor α ∈ (0, 1), which can also be viewed as a pruning factor.

Given an input tensor x with C channels, the tensor will be sliced to two branches:

- one active branch x₁ containing C · (1 − α) channels, which outputs a tensor y₁ with C · (1 − α) channels;

- one idle branch x₂ containing C · α channels, which is copied directly into the output tensor y with C channels.
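The split described above can be sketched with plain Python lists standing in for channels. This is a minimal illustration, not the authors' implementation: `idle_forward` and `transform` are hypothetical names, and placing the active branch in the first channels (rather than the last) is an assumption of this sketch.

```python
def idle_forward(x, alpha, transform):
    """Apply the Idle pattern to a list of C channels.

    The first C * (1 - alpha) channels form the active branch and go
    through `transform`; the remaining C * alpha channels are idle and
    are copied to the output unchanged.
    """
    c = len(x)
    c_active = round(c * (1 - alpha))
    x1, x2 = x[:c_active], x[c_active:]
    y1 = transform(x1)
    assert len(y1) == len(x1), "active branch must keep its channel count"
    return y1 + x2  # concatenation, so the output again has C channels


x = [1.0, 2.0, 3.0, 4.0]
# Hypothetical stand-in for the learned transformation: scale by 10.
y = idle_forward(x, alpha=0.5, transform=lambda chans: [10 * v for v in chans])
print(y)  # [10.0, 20.0, 3.0, 4.0]
```

With α = 0.5, half of the channels never enter the transformation, which is why α can be read as a pruning factor.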

Contrasted with Residual Connections

With residual connections, the input tensor x is added to the output tensor.

In the Idle design, C · α channels of the input tensor x are copied into the output tensor directly, without any elementwise addition.

Contrasted with Dense Connections

In a densely connected block, the entire input x is part of the output y, and the entire input x is used in the block transformation.

In the Idle design, only C · α channels of x are copied into the output tensor directly, and the other C · (1 − α) channels are used in the block transformation.
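The three connection patterns can be compared side by side on a toy input. This is a sketch using lists as channels; `transform` is a hypothetical stand-in for the block's learned transformation, and the choice of which channels are active in `idle_block` is an assumption.

```python
def transform(channels):
    # Hypothetical stand-in for the block transformation: scale by 10.
    return [10 * v for v in channels]


def residual_block(x):
    # Residual connection: the input is added elementwise to the output.
    return [a + b for a, b in zip(x, transform(x))]


def dense_block(x):
    # Dense connection: the whole input is transformed AND kept in the output.
    return x + transform(x)


def idle_block(x, alpha):
    # Idle: only the active channels are transformed; idle channels are copied.
    c_active = round(len(x) * (1 - alpha))
    return transform(x[:c_active]) + x[c_active:]


x = [1, 2, 3, 4]
residual_out = residual_block(x)   # [11, 22, 33, 44]
dense_out = dense_block(x)         # [1, 2, 3, 4, 10, 20, 30, 40]
idle_out = idle_block(x, 0.5)      # [10, 20, 3, 4]
print(residual_out, dense_out, idle_out)
```

Only the Idle variant both skips the transformation for part of the input and keeps the output channel count equal to the input channel count.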

Useful lessons from prior designs

- apply the depthwise convolution on an expanded feature map

- Grouped convolutions are not necessary

- The channel shuffle operation is not friendly to various accelerators, and should be avoided

IdleBlock

A key distinction of mixed composition is the enhanced receptive field of the stacked output.

Given an MBBlock with input tensor shape (1,C,H,W), expansion factor r, stride s, and depthwise convolution kernel k, the theoretical computation complexity is

2 · α · r · C^2 · (HW + HW/s^2)

The theoretical computation cost of one MBBlock is roughly equal to the cost of two IdleBlocks.
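The two-to-one relation can be checked with standard multiply-add counting (a convolution costs input channels × output channels × kernel area × output positions, biases ignored). The sketch below does not reproduce the expression in these notes. It assumes, without confirmation from the source, that the IdleBlock's active branch takes C · (1 − α) channels and expands to the same width r · C as an MBBlock, and it uses stride 1 so that the idle channels can be copied. The function names are hypothetical.

```python
def mbblock_madds(c, h, w, r, k, s=1):
    """Multiply-adds of an MBBlock: expand, depthwise, project."""
    expanded = r * c
    out_positions = (h // s) * (w // s)
    expand = c * expanded * h * w               # 1x1 conv on the input resolution
    depthwise = k * k * expanded * out_positions
    project = expanded * c * out_positions      # 1x1 conv back to C channels
    return expand + depthwise + project


def idleblock_madds(c, h, w, r, k, alpha):
    """Multiply-adds of an IdleBlock under the assumptions stated above."""
    c_active = round(c * (1 - alpha))
    expanded = r * c                            # ASSUMPTION: same width as MBBlock
    expand = c_active * expanded * h * w
    depthwise = k * k * expanded * h * w
    project = expanded * c_active * h * w
    return expand + depthwise + project


mb = mbblock_madds(c=64, h=14, w=14, r=6, k=3)
idle = idleblock_madds(c=64, h=14, w=14, r=6, k=3, alpha=0.5)
ratio = mb / idle
print(mb, idle, round(ratio, 2))
```

Under these assumptions the ratio is a little below 2 because the depthwise cost is not reduced; the pointwise convolutions dominate, so the "roughly two IdleBlocks per MBBlock" reading holds for α = 0.5.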

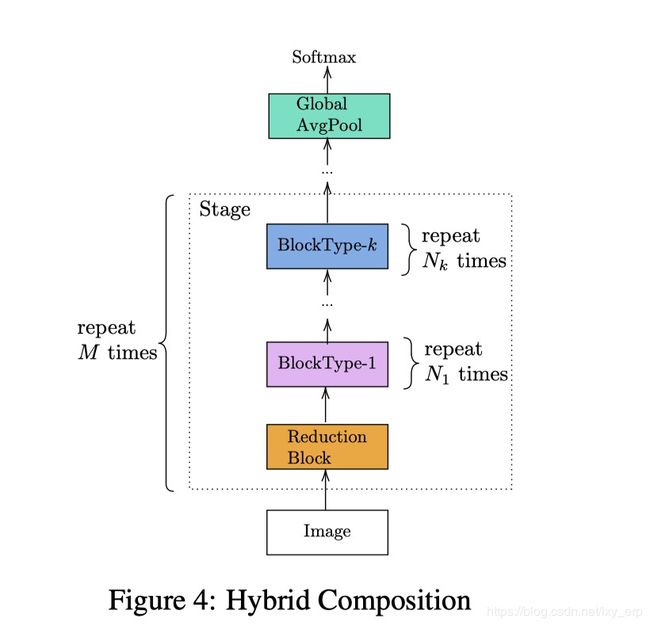

Hybrid Composition Networks

A non-monotonic composition of multiple types of building blocks.

This requires: different blocks have identical constraints on the input and output dimensions

Hybrid composition attempts to jointly utilize the different properties of multiple building blocks, which a monotonic design is not able to do.

For a monotonic MBBlock network, such as MobileNet v2/v3 and EfficientNet, the first pointwise convolution operator in MBBlock is only used to expand the input dimension for the depthwise operation.

In our case with IdleBlock,

both IdleBlock and MBBlock satisfy the input and output constraints for hybrid composition.

Moreover, once we hybridize IdleBlock with MBBlock, the first pointwise convolution operator in MBBlock will be able to help us ==exchange information of two branches== in IdleBlock, without an explicit channel shuffle operation as in ShuffleBlock.

hybrid composition introduces another challenge.

If a network stage contains n MBBlock units,

there are 2^n candidate combinations of MBBlock and IdleBlock placements in the Idle network,

but we seek to explore only a small subset of these candidates.
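The size of the search space is easy to confirm by enumeration. This sketch treats each of the n positions as an independent choice between the two block types, using string labels; `all_placements` is a name invented here.

```python
from itertools import product


def all_placements(n):
    """Enumerate every assignment of MBBlock/IdleBlock to n positions."""
    return list(product(("MBBlock", "IdleBlock"), repeat=n))


placements = all_placements(4)
print(len(placements))   # 16, i.e. 2 ** 4
print(placements[0])     # ('MBBlock', 'MBBlock', 'MBBlock', 'MBBlock')
```

The count doubles with every extra block in the stage, so exhaustive evaluation quickly becomes impractical.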

To solve the challenge,

we explore three configurations for the hybrid composition of MBBlock with IdleBlock:

- Maximum

use MBBlock as reduction block, or when the network changes the output dimension.

All other MBBlocks will be replaced by IdleBlock.

This is the lower bound on computation for hybrid composition with IdleBlock.

- None

No block in the stage is replaced with IdleBlock.

This is the upper bound on computation for hybrid composition with IdleBlock.

- Adjacent

Adjacent configuration is a greedy strategy

iteratively replace each MBBlock that has an MBBlock input with an IdleBlock,

stopping when we reach our specified computational budget.
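The three configurations can be sketched for a single stage as follows. This is one possible reading of the description, not the authors' code: it assumes the first block of the stage is the reduction MBBlock, that the Adjacent strategy makes a single left-to-right pass, and that costs are hypothetical units with one MBBlock equal to two IdleBlocks.

```python
COST = {"MBBlock": 2, "IdleBlock": 1}  # hypothetical cost units


def stage_cost(blocks):
    return sum(COST[b] for b in blocks)


def maximum_config(n):
    # Keep only the reduction block as MBBlock; replace every other block.
    return ["MBBlock"] + ["IdleBlock"] * (n - 1)


def none_config(n):
    # No block is replaced.
    return ["MBBlock"] * n


def adjacent_config(n, budget):
    # Greedy pass: replace an MBBlock whose input comes from an MBBlock,
    # and stop as soon as the stage fits the computational budget.
    blocks = ["MBBlock"] * n
    for i in range(1, n):
        if stage_cost(blocks) <= budget:
            break
        if blocks[i - 1] == "MBBlock" and blocks[i] == "MBBlock":
            blocks[i] = "IdleBlock"
    return blocks


print(maximum_config(6))
print(none_config(6))
print(adjacent_config(6, budget=10))
```

In this reading, every IdleBlock placed by the Adjacent strategy sits directly after an MBBlock, so replacements alternate with the blocks that are kept until the budget is met.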