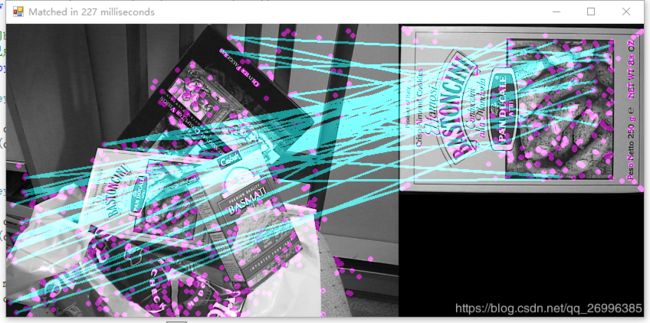

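Emgu CV Incomplete Image Segmentation Experiments (15): Extracting Matched Feature Points and Drawing the Connecting Lines Semi-Transparently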

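Someone asked for help drawing the connecting lines in Emgu CV's template-matching sample semi-transparently. I had not expected the sample code to be so tightly packaged: the matching and all of the drawing happen in just two calls:

```csharp
Mat mask;
FindMatch(modelImage, observedImage, out matchTime, out modelKeyPoints, out observedKeyPoints, matches,
    out mask, out homography);

//Draw the matched keypoints
Mat result = new Mat();
Features2DToolbox.DrawMatches(modelImage, modelKeyPoints, observedImage, observedKeyPoints,
    matches, result, new MCvScalar(255, 0, 255), new MCvScalar(255, 0, 255), mask);
```

Although `Features2DToolbox.DrawMatches()` lets you set the colors, there is no way to make them semi-transparent, so out of frustration I rewrote the drawing myself. The result is shown in the image at the top of this post.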
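Why a color alone cannot do it comes down to per-pixel arithmetic. OpenCV's drawing functions simply overwrite pixels (on a 4-channel image the fourth color component is copied into the pixel, not used as opacity), so translucency has to come from painting into a separate buffer and blending it with the photo. The rewrite below does this with `CvInvoke.AddWeighted`, which computes `dst = saturate(src1 * alpha + src2 * beta + gamma)` per channel. The plain C# sketch here reproduces that formula for one 8-bit channel next to a conventional alpha blend so the two can be compared; `BlendMath` and its methods are hypothetical names for illustration, and rounding of exact .5 ties may differ slightly from OpenCV.

```csharp
using System;

// Hypothetical helper for illustration: two per-channel overlay rules for 8-bit images.
public static class BlendMath
{
    // Per-channel rule of CvInvoke.AddWeighted (cv::addWeighted):
    // dst = saturate(src1 * alpha + src2 * beta + gamma),
    // i.e. round to the nearest integer and clamp to [0, 255].
    public static byte AddWeighted(byte src1, double alpha, byte src2, double beta, double gamma)
    {
        double v = src1 * alpha + src2 * beta + gamma;
        return Saturate(v);
    }

    // Conventional alpha ("over") blend of an overlay color, applied only
    // where something was drawn; elsewhere the background is returned as-is.
    public static byte AlphaBlend(byte background, byte overlay, bool drawn, double opacity)
    {
        if (!drawn)
            return background;
        return Saturate((1.0 - opacity) * background + opacity * overlay);
    }

    // Round (half to even, Math.Round's default) and clamp to the byte range.
    private static byte Saturate(double v) => (byte)Math.Clamp(Math.Round(v), 0.0, 255.0);
}
```

With the weights used in the rewrite (alpha = 1, beta = 0.5, gamma = 0), a black overlay pixel leaves the photo untouched, while a drawn pixel adds half of the line color on top. Lines therefore look like a light tint, lines over bright areas saturate toward white, and the photo underneath is never dimmed. If a "see-through ink" look is preferred, the `AlphaBlend` rule, applied only where the overlay is non-zero, is the usual alternative.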

Here is the code:

```csharp
using System;
using System.Drawing;
using Emgu.CV;
using Emgu.CV.Structure;
using Emgu.CV.Util;

// Replacement for Draw() in Emgu CV's DrawMatches sample. FindMatch is assumed to be
// that sample's helper (not shown): it fills matches with k = 2 neighbours per row and
// sets mask[i] != 0 for the rows that pass its filtering votes.
public static Mat Draw(Mat modelImage, Mat observedImage, out long matchTime)
{
    Mat homography;
    VectorOfKeyPoint modelKeyPoints;
    VectorOfKeyPoint observedKeyPoints;

    using (VectorOfVectorOfDMatch matches = new VectorOfVectorOfDMatch())
    {
        Mat mask;
        FindMatch(modelImage, observedImage, out matchTime, out modelKeyPoints, out observedKeyPoints, matches,
            out mask, out homography);

        // aa: as wide as both images together and as tall as the taller one;
        // holds the observed image (left) and the model image (right)
        int w = modelImage.Width + observedImage.Width;
        int h = Math.Max(modelImage.Height, observedImage.Height);
        Image<Bgr, byte> aa = new Image<Bgr, byte>(new Size(w, h));

        // Mind the order: set the destination ROI first, then CopyTo,
        // because CopyTo writes into the destination's current ROI
        Image<Bgr, byte> obImage = observedImage.ToImage<Bgr, byte>();
        aa.ROI = new Rectangle(Point.Empty, observedImage.Size);
        obImage.CopyTo(aa);

        Image<Bgr, byte> moImage = modelImage.ToImage<Bgr, byte>();
        aa.ROI = new Rectangle(new Point(observedImage.Width, 0), modelImage.Size);
        moImage.CopyTo(aa);

        // Restore the full-image ROI
        aa.ROI = new Rectangle(Point.Empty, new Size(w, h));

        // bb: overlay of the same size for the keypoints, match lines and projected
        // model outline; every pixel that is not drawn on stays black (0)
        Image<Bgr, byte> bb = new Image<Bgr, byte>(new Size(w, h));
        MKeyPoint[] mp = modelKeyPoints.ToArray();
        MKeyPoint[] op = observedKeyPoints.ToArray();

        foreach (MKeyPoint pp in op)
            bb.Draw(new CircleF(pp.Point, 2), new Bgr(255, 0, 255), 2);

        // Model keypoints are shifted right by the width of the observed image
        foreach (MKeyPoint pp in mp)
            bb.Draw(new CircleF(new PointF(pp.Point.X + observedImage.Width, pp.Point.Y), 2), new Bgr(255, 0, 255), 2);

        // Draw only the rows accepted by mask, using the best (first) neighbour of each.
        // QueryIdx indexes the observed keypoints and TrainIdx the model keypoints.
        if (matches.Size > 0)
        {
            byte[,,] keep = mask.ToImage<Gray, byte>().Data;
            for (int i = 0; i < matches.Size; i++)
            {
                if (keep[i, 0, 0] == 0 || matches[i].Size == 0)
                    continue;
                MDMatch best = matches[i][0];
                PointF from = op[best.QueryIdx].Point;
                PointF to = new PointF(mp[best.TrainIdx].Point.X + observedImage.Width, mp[best.TrainIdx].Point.Y);
                bb.Draw(new LineSegment2DF(from, to), new Bgr(255, 255, 0), 2);
            }
        }

        #region draw the projected region on the image
        // The observed image sits at x = 0, so the projected corners need no offset.
        // Drawing into bb makes the outline translucent as well; the 4th MCvScalar
        // component would be ignored on this 3-channel image.
        if (homography != null)
        {
            //draw a rectangle along the projected model
            Rectangle rect = new Rectangle(Point.Empty, modelImage.Size);
            PointF[] pts = new PointF[]
            {
                new PointF(rect.Left, rect.Bottom),
                new PointF(rect.Right, rect.Bottom),
                new PointF(rect.Right, rect.Top),
                new PointF(rect.Left, rect.Top)
            };
            pts = CvInvoke.PerspectiveTransform(pts, homography);
#if NETFX_CORE
            Point[] points = Extensions.ConvertAll<PointF, Point>(pts, Point.Round);
#else
            Point[] points = Array.ConvertAll<PointF, Point>(pts, Point.Round);
#endif
            using (VectorOfPoint vp = new VectorOfPoint(points))
            {
                CvInvoke.Polylines(bb, vp, true, new MCvScalar(255, 0, 0), 5);
            }
        }
        #endregion

        // cc: weighted sum (the semi-transparent effect), cc = aa * 1 + bb * 0.5 + 0
        Image<Bgr, byte> cc = new Image<Bgr, byte>(new Size(w, h));
        CvInvoke.AddWeighted(aa, 1, bb, 0.5, 0, cc);

        return cc.Mat;
    }
}
```

I've just started this blog to exchange ideas and collaborate. My QQ: 273651820.