Training Your Own Dataset with ssd-caffe on Ubuntu 16.04

I. Learn the training workflow by training on the VOC dataset (reference blog: https://blog.csdn.net/qq_40806289/article/details/89335651)

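1. Download the official VOC datasets as a reference (optional: you can skip the download as long as your own files follow the VOC directory layout)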

cd yourpath/caffe/data

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtrainval_06-Nov-2007.tar

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtest_06-Nov-2007.tar

tar -xvf VOCtrainval_11-May-2012.tar

tar -xvf VOCtrainval_06-Nov-2007.tar

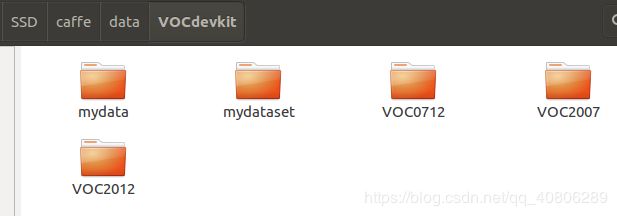

tar -xvf VOCtest_06-Nov-2007.tar2、建立VOC结构文件夹(或者将下载的VOC内的数据换成自己的,这里还是可以试着建立自己)

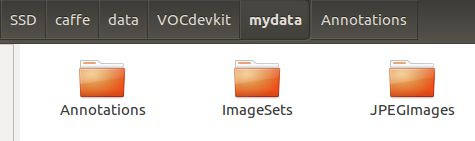

Under data/VOCdevkit, create a folder named mydata. Inside mydata, create Annotations, ImageSets, and JPEGImages, then create a Main folder inside ImageSets.

    mydata
    ├── Annotations
    ├── ImageSets
    │   └── Main
    └── JPEGImages

Annotations: holds the label files (.xml)

JPEGImages: holds the original images (.jpg)
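The folder layout described above can also be created with a few lines of Python. This is only a minimal sketch: the function name `make_voc_layout` is made up for illustration, and the devkit path you pass in is whatever your own data/VOCdevkit folder is.

```python
import os


def make_voc_layout(devkit_dir, dataset_name="mydata"):
    """Create the VOC-style folders for one dataset and return its root path."""
    root = os.path.join(devkit_dir, dataset_name)
    subfolders = ("Annotations", os.path.join("ImageSets", "Main"), "JPEGImages")
    for sub in subfolders:
        path = os.path.join(root, sub)
        # Skip folders that already exist so the call can be repeated safely.
        if not os.path.isdir(path):
            os.makedirs(path)
    return root
```

For example, calling make_voc_layout("data/VOCdevkit") from the caffe root would create data/VOCdevkit/mydata with the three subfolders and ImageSets/Main.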

3. Generate the training and test lists

Create generate.py under mydata, and create test.txt, trainval.txt, train.txt, and val.txt in the Main folder.

    mydata
    ├── generate.py
    ├── Annotations
    ├── ImageSets
    │   └── Main
    └── JPEGImages

Contents of generate.py:

    import os
    import random

    # Adjust these two paths to your own setup.
    xmlfilepath = r'/data_1/SSD/caffe/data/VOCdevkit/mydata/Annotations'
    saveBasePath = r'/data_1/SSD/caffe/data/VOCdevkit/mydata/ImageSets/Main'

    trainval_percent = 0.7  # share of all samples that go to train + val
    train_percent = 0.7     # share of trainval that goes to train

    total_xml = os.listdir(xmlfilepath)
    num = len(total_xml)
    indices = range(num)    # renamed from "list" to avoid shadowing the builtin
    tv = int(num * trainval_percent)
    tr = int(tv * train_percent)
    trainval = random.sample(indices, tv)
    train = random.sample(trainval, tr)

    print("train and val size", tv)
    print("train size", tr)

    ftrainval = open(os.path.join(saveBasePath, 'trainval.txt'), 'w')
    ftest = open(os.path.join(saveBasePath, 'test.txt'), 'w')
    ftrain = open(os.path.join(saveBasePath, 'train.txt'), 'w')
    fval = open(os.path.join(saveBasePath, 'val.txt'), 'w')

    for i in indices:
        # Strip the ".xml" extension and write one sample name per line.
        name = total_xml[i][:-4] + '\n'
        if i in trainval:
            ftrainval.write(name)
            if i in train:
                ftrain.write(name)
            else:
                fval.write(name)
        else:
            ftest.write(name)

    ftrainval.close()
    ftrain.close()
    fval.close()
    ftest.close()

4. Generate the LMDB files
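Before building the LMDB files, it may be worth checking that every annotation has a matching image, because the lists from step 3 are built from the file names in Annotations alone. The sketch below assumes images use the .jpg extension, as in the layout above; the function names are made up for illustration.

```python
import os


def find_unpaired(annotation_names, image_names):
    """Return (stems with .xml but no .jpg, stems with .jpg but no .xml)."""
    xml_stems = set(os.path.splitext(n)[0] for n in annotation_names
                    if n.lower().endswith(".xml"))
    jpg_stems = set(os.path.splitext(n)[0] for n in image_names
                    if n.lower().endswith(".jpg"))
    return sorted(xml_stems - jpg_stems), sorted(jpg_stems - xml_stems)


def check_dataset(root):
    """Run the pairing check on a VOC-style dataset folder such as mydata."""
    annotations = os.listdir(os.path.join(root, "Annotations"))
    images = os.listdir(os.path.join(root, "JPEGImages"))
    return find_unpaired(annotations, images)
```

Both returned lists should be empty for a clean dataset. A name in the first list is an annotation whose image is missing from JPEGImages.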

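Create a mydata folder under data, as marked in the tree below. The mydata folder under examples holds the links to the LMDB files and is generated automatically.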

    caffe
    ├── data
    │   ├── ...
    │   ├── mydata ............... the folder you need to create
    │   └── VOC0712
    ├── examples
    │   ├── ...
    │   ├── mydata ............... links to the LMDB files, generated automatically
    │   ├── ssd
    │   │   ├── ...
    │   │   └── ssd_pascal.py
    │   └── VOC0712
    ├── models
    │   ├── ...
    │   └── VGGNet
    │       └── VOC0712 ........... where the trained caffemodel files end up
    ...

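Copy the following three files from caffe/data/VOC0712 into data/mydata:

create_list.sh (reads the lists generated in the previous step)

create_data.sh (generates the LMDB files)

labelmap_voc.prototxt (defines the label classes)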

Then edit the three files:

(1) create_list.sh

Line 13: change "for name in VOC2007 VOC2012" to "for name in mydata"

(2) create_data.sh

Line 8: change dataset_name="VOC0712" to dataset_name="mydata"

(3) labelmap_voc.prototxt

    item {
      name: "none_of_the_above"
      label: 0
      display_name: "background"
    }
    item {
      name: "person"
      label: 1
      display_name: "person"
    }

Label 0 is always the background class and must stay unchanged. Add your own classes after it in the same format.
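With more than a handful of classes, writing the label map by hand gets error-prone. As a rough sketch, the text can be generated from a list of class names; `build_labelmap` is a made-up helper, and it assumes each display_name equals the class name, as in the person entry above.

```python
def build_labelmap(class_names):
    """Return labelmap text: label 0 is background, then one item per class."""
    entries = [("none_of_the_above", "background")]
    entries += [(name, name) for name in class_names]
    blocks = []
    for label, (name, display_name) in enumerate(entries):
        blocks.append(
            'item {\n'
            '  name: "%s"\n'
            '  label: %d\n'
            '  display_name: "%s"\n'
            '}\n' % (name, label, display_name))
    return "".join(blocks)


labelmap_text = build_labelmap(["person"])
# Number of items, background included; this is the value num_classes needs.
num_classes = labelmap_text.count("item {")
```

Writing labelmap_text to data/mydata/labelmap_voc.prototxt reproduces the two-item file shown above, and num_classes gives the class count (background included) that the training script asks for in step 5.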

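Return to the source root (caffe) and run both scripts:

    cd $CAFFE_ROOT
    ./data/mydata/create_list.sh
    ./data/mydata/create_data.sh

This generates the binary *_lmdb databases under data/VOCdevkit/mydata/lmdb (inside the source image folder you set up earlier) and creates the matching links under $CAFFE_ROOT/examples/mydata/.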
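A quick way to confirm that the scripts produced both databases is to look for the two folders by name. The sketch below assumes the naming used by the paths in the next step (mydata_trainval_lmdb and mydata_test_lmdb); `missing_lmdbs` is a made-up helper name.

```python
import os


def missing_lmdbs(lmdb_dir, dataset_name="mydata", splits=("trainval", "test")):
    """Return the expected LMDB folders that do not exist under lmdb_dir."""
    expected = [os.path.join(lmdb_dir, "%s_%s_lmdb" % (dataset_name, split))
                for split in splits]
    return [path for path in expected if not os.path.isdir(path)]
```

An empty result means both databases are in place. Any path returned is one that create_data.sh did not produce, which usually points back to a problem in the lists or paths from the earlier steps.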

5. Modify the training script

In examples/ssd/, copy ssd_pascal.py and rename the copy to ssd_pascal_mydata.py.

    caffe
    ├── data
    ├── examples
    │   ├── ...
    │   ├── mydata
    │   ├── ssd
    │   │   ├── ...
    │   │   ├── ssd_pascal.py
    │   │   └── ssd_pascal_mydata.py
    ...

Edit ssd_pascal_mydata.py as follows (the trailing comments give the line numbers in the script):

    ...
    # The database file for training data. Created by data/mydata/create_data.sh
    train_data = "/home/[yourname]/data/VOCdevkit/mydata/lmdb/mydata_trainval_lmdb"  # line 82
    # The database file for testing data. Created by data/mydata/create_data.sh
    test_data = "/home/[yourname]/data/VOCdevkit/mydata/lmdb/mydata_test_lmdb"  # line 84
    ...
    # A learning rate for batch_size = 1, num_gpus = 1. change into 0.001
    base_lr = 0.00004  # line 232: adjust the learning rate
    ...
    # The name of the model. Modify it if you want.
    model_name = "VGG_mydata_{}".format(job_name)  # line 237: change VOC0712 to mydata
    # Directory which stores the model .prototxt file
    save_dir = "models/VGGNet/mydata/{}".format(job_name)  # line 240: same change
    # Directory which stores the snapshot of models
    snapshot_dir = "models/VGGNet/mydata/{}".format(job_name)  # line 242: same change
    # Directory which stores the job script and log file.
    job_dir = "jobs/VGGNet/mydata/{}".format(job_name)  # line 244: same change
    # Directory which stores the detection results.
    output_result_dir = "{}/data/VOCdevkit/results/mydata/{}/Main".format(os.environ['HOME'], job_name)  # line 246: same change
    ...
    # Stores the test image names and sizes. Created by data/mydata/create_list.sh
    name_size_file = "data/mydata/test_name_size.txt"  # line 259
    ...
    # Stores LabelMapItem.
    label_map_file = "data/mydata/labelmap_voc.prototxt"  # line 263
    ...
    # MultiBoxLoss parameters.
    num_classes = 2  # line 266: number of classes to detect, including background
    ...
    # Defining which GPUs to use.
    gpus = "0"  # line 332: set the GPU IDs to match your machine
    ...
    # Divide the mini-batch to different GPUs.
    batch_size = 32  # lines 338-339: choose batch sizes your GPU memory can handle
    accum_batch_size = 32
    ...
    # Evaluate on whole test set.
    num_test_image = 32  # line 359: number of images in your test set
    test_batch_size = 8  # lines 360-361: set the test batch size
    ...
            prior_variance=prior_variance, kernel_size=3, pad=1, lr_mult=lr_mult, conf_postfix='_mydata')  # line 445: add conf_postfix
    ...
            prior_variance=prior_variance, kernel_size=3, pad=1, lr_mult=lr_mult, conf_postfix='_mydata')  # line 474: add conf_postfix
    ...

Some of the paths above can be replaced with absolute paths.
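The batch settings interact with each other, so it helps to see what they work out to before training. The sketch below assumes the script derives the per-GPU batch size, the gradient accumulation steps (iter_size), and the number of test iterations the way the stock ssd_pascal.py does, as far as I recall it; check your copy of the script, since the function name and exact formulas here are an illustration rather than a quote.

```python
import math


def derive_solver_sizes(batch_size, accum_batch_size, num_gpus,
                        num_test_image, test_batch_size):
    """Return (batch size per GPU, iter_size, test_iter) for the given settings."""
    batch_per_device = batch_size
    iter_size = int(math.ceil(float(accum_batch_size) / batch_size))
    if num_gpus > 0:
        # The mini-batch is split evenly across the GPUs.
        batch_per_device = int(math.ceil(float(batch_size) / num_gpus))
        # Gradients are accumulated until accum_batch_size samples are seen.
        iter_size = int(math.ceil(
            float(accum_batch_size) / (batch_per_device * num_gpus)))
    # Enough test iterations to cover every test image once.
    test_iter = int(math.ceil(float(num_test_image) / test_batch_size))
    return batch_per_device, iter_size, test_iter


# Values from the snippet above, one GPU.
sizes = derive_solver_sizes(32, 32, 1, 32, 8)  # (32, 1, 4)
```

If 32 images do not fit in GPU memory, lowering batch_size to 8 while keeping accum_batch_size at 32 gives (8, 4, 4) under the same assumptions: four accumulation steps per update, so the effective batch size stays at 32.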

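6. Train

Return to $CAFFE_ROOT and run:

    python examples/ssd/ssd_pascal_mydata.py

The script writes three kinds of files (prototxt, caffemodel, and solverstate) to $CAFFE_ROOT/models/VGGNet/mydata/SSD_300x300. This location comes from the settings on lines 237-263 above, which keep the output separate from the default VOC0712 model folder.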
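Caffe names its snapshots with an _iter_N suffix before the extension, so the most recent caffemodel can be picked out by iteration number. This is a small sketch; `latest_snapshot` is a made-up helper, and the file names in the example are illustrative rather than taken from a real run.

```python
import re


def latest_snapshot(filenames, suffix=".caffemodel"):
    """Return (iteration, filename) of the highest-iteration snapshot, or None."""
    pattern = re.compile(r"_iter_(\d+)" + re.escape(suffix) + r"$")
    best = None
    for name in filenames:
        match = pattern.search(name)
        if match:
            iteration = int(match.group(1))
            if best is None or iteration > best[0]:
                best = (iteration, name)
    return best


# Illustrative file names only.
example_files = [
    "VGG_mydata_SSD_300x300_iter_1000.caffemodel",
    "VGG_mydata_SSD_300x300_iter_1000.solverstate",
    "VGG_mydata_SSD_300x300_iter_20000.caffemodel",
    "train.prototxt",
]
newest = latest_snapshot(example_files)
```

In practice the list would come from os.listdir on the snapshot folder. Passing suffix=".solverstate" finds the latest state file for resuming training instead.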