Deep Learning Paper: LRNNet: a light-weighted network with efficient reduced non-local operation for real-time semantic segmentation, with a PyTorch Implementation

LRNNet: a light-weighted network with efficient reduced non-local operation for real-time semantic segmentation

PDF: https://arxiv.org/pdf/2006.02706.pdf

PyTorch: https://github.com/shanglianlm0525/PyTorch-Networks

1 Overview

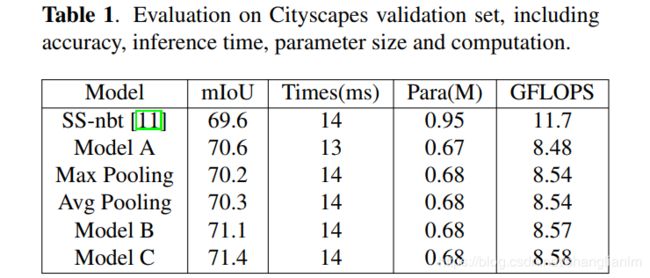

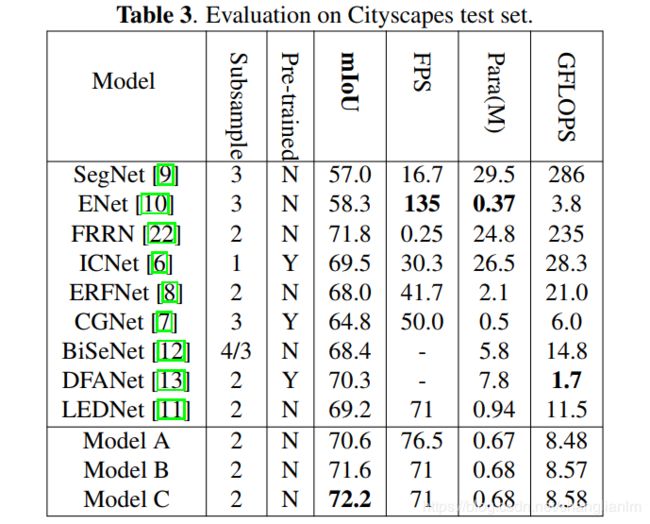

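This paper builds on LEDNet. It simplifies the non-local network with SVD and uses a Factorized Convolution Block (FCB) to handle long-range dependencies and short-range features in an appropriate way, producing a lightweight and efficient feature extraction network.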

LRNNet runs at 71 FPS on a GTX 1080Ti and reaches 72.2% mIoU on the Cityscapes test set, with only 0.68M parameters in the whole model.

2 LRNNet

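Broadly, the LRNNet encoder consists of three ResNet-style stages. Each stage begins with a downsampling unit that serves as the transition between the feature maps of successive stages. The core component of the encoder is the Factorized Convolution Block (FCB), which provides lightweight and efficient feature extraction. In addition, after the last downsampling unit, dilated convolutions are used so that the output feature map stays at 1/8 of the input resolution.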
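The 1/8 figure follows from plain convolution arithmetic. The sketch below is illustrative only: it models each downsampling unit as a hypothetical stride-2 3x3 convolution with padding 1 (the actual unit may combine a convolution with pooling), uses an arbitrary 512x1024 input, and picks arbitrary dilation rates to check that a 3x3 convolution with `padding = dilation` leaves the spatial size unchanged.

```python
def conv_out(size, kernel=3, stride=1, padding=0, dilation=1):
    """Output length along one spatial axis, per the nn.Conv2d output-shape formula."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def after_three_downsamplings(size):
    # Hypothetical downsampling unit: 3x3 conv, stride 2, padding 1 (halves even sizes)
    for _ in range(3):
        size = conv_out(size, kernel=3, stride=2, padding=1)
    return size


H, W = 512, 1024  # illustrative input size
h8, w8 = after_three_downsamplings(H), after_three_downsamplings(W)
print(h8, w8)  # 64 128, i.e. 1/8 of the input

# After the last downsampling, dilated 3x3 convs with padding=dilation keep the size at 1/8
for d in (1, 2, 4, 8):  # arbitrary dilation rates
    assert conv_out(h8, kernel=3, padding=d, dilation=d) == h8
```

This is why the encoder can enlarge its receptive field in the last stage without shrinking the feature map further.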

2-1 Factorized Convolution Block

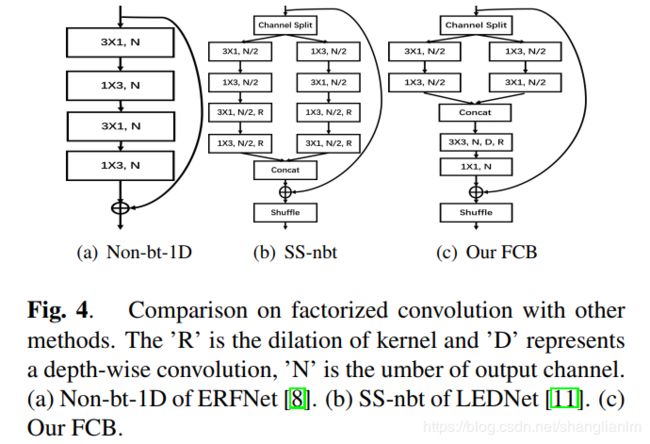

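A dilated convolution kernel with a large dilation rate captures complex, long-range spatial features and needs more parameters in the spatial dimension. A kernel with a small dilation rate captures short-range features that are simple or carry less information, for which fewer parameters are enough. FCB (panel (c) of the figure above) therefore first splits the channels into two groups and processes the short-range, less spatially informative features in each group with a pair of 1D convolutions (3x1 and 1x3), which greatly reduces parameters and computation. After the two groups are concatenated, FCB applies a dilated 2D (3x3) convolution to enlarge the receptive field and capture long-range features, implemented as a depthwise separable convolution to keep parameters and computation low. Finally, a channel shuffle is applied.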
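To make the savings concrete, the sketch below counts convolution weights (biases ignored) at an arbitrary width of C = 128 channels; the width is an illustrative assumption, not a value from the paper. It compares a plain 3x3 convolution per half against the 3x1 + 1x3 pair, and a standard 3x3 convolution against its depthwise separable counterpart.

```python
def conv_weights(c_in, c_out, kh, kw, groups=1):
    """Number of weights in a 2D convolution, ignoring the bias."""
    return (c_in // groups) * c_out * kh * kw


C = 128          # illustrative channel width
half = C // 2    # FCB splits the channels into two halves

# Short-range branches: a 3x3 conv per half vs. the factorized 3x1 + 1x3 pair per half
plain_branches = 2 * conv_weights(half, half, 3, 3)
factorized_branches = 2 * (conv_weights(half, half, 3, 1) + conv_weights(half, half, 1, 3))

# Long-range part: standard 3x3 conv vs. depthwise 3x3 + pointwise 1x1
standard = conv_weights(C, C, 3, 3)
depthwise_separable = conv_weights(C, C, 3, 3, groups=C) + conv_weights(C, C, 1, 1)

print(plain_branches, factorized_branches)   # 73728 49152
print(standard, depthwise_separable)         # 147456 17536
```

The factorized pair costs 6/9 = 2/3 of a 3x3 kernel regardless of width, while the depthwise separable version here needs about 12% of the standard convolution's weights; that ratio depends on the channel width.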

```python
import torch
import torch.nn as nn


def Conv1x1BN(in_channels, out_channels):
    # Stand-in for the repo's Conv1x1BN helper, which the original listing does not show;
    # assumed here to be a 1x1 convolution followed by BatchNorm.
    return nn.Sequential(
        nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=1),
        nn.BatchNorm2d(out_channels),
    )


class HalfSplit(nn.Module):
    """Split a tensor into two equal halves along `dim` (the channel axis by default)."""

    def __init__(self, dim=1):
        super(HalfSplit, self).__init__()
        self.dim = dim

    def forward(self, input):
        splits = torch.chunk(input, 2, dim=self.dim)
        return splits[0], splits[1]


class ChannelShuffle(nn.Module):
    def __init__(self, groups):
        super(ChannelShuffle, self).__init__()
        self.groups = groups

    def forward(self, x):
        '''Channel shuffle: [N,C,H,W] -> [N,g,C/g,H,W] -> [N,C/g,g,H,W] -> [N,C,H,W]'''
        N, C, H, W = x.size()
        g = self.groups
        return x.view(N, g, C // g, H, W).permute(0, 2, 1, 3, 4).contiguous().view(N, C, H, W)


class FCB(nn.Module):
    def __init__(self, channels, dilation=1, groups=4):
        super(FCB, self).__init__()
        mid_channels = channels // 2
        self.half_split = HalfSplit(dim=1)
        # Branch 1: 3x1 then 1x3 (factorized 3x3 for short-range features)
        self.first_bottleneck = nn.Sequential(
            nn.Conv2d(mid_channels, mid_channels, kernel_size=(3, 1), stride=1, padding=(1, 0)),
            nn.Conv2d(mid_channels, mid_channels, kernel_size=(1, 3), stride=1, padding=(0, 1)),
        )
        # Branch 2: 1x3 then 3x1
        self.second_bottleneck = nn.Sequential(
            nn.Conv2d(mid_channels, mid_channels, kernel_size=(1, 3), stride=1, padding=(0, 1)),
            nn.Conv2d(mid_channels, mid_channels, kernel_size=(3, 1), stride=1, padding=(1, 0)),
        )
        # Depthwise dilated 3x3 (groups=channels); padding=dilation keeps H and W unchanged
        self.conv3x3 = nn.Conv2d(channels, channels, kernel_size=3, stride=1,
                                 dilation=dilation, padding=dilation, groups=channels)
        # Pointwise 1x1 + BN; together with conv3x3 this is a depthwise separable conv
        self.conv1x1 = Conv1x1BN(in_channels=channels, out_channels=channels)
        self.channelShuffle = ChannelShuffle(groups)

    def forward(self, x):
        x1, x2 = self.half_split(x)
        x1 = self.first_bottleneck(x1)
        x2 = self.second_bottleneck(x2)
        out = torch.cat([x1, x2], dim=1)
        out = self.conv1x1(self.conv3x3(out))
        # Residual connection, then channel shuffle to mix the two halves.
        # Like the original listing, no ReLU is applied; the paper's block may differ.
        return self.channelShuffle(out + x)
```
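Why the shuffle matters can be seen on a toy tensor whose channel values equal their indices. The function below (its name is ours) re-implements the same view/permute trick as `ChannelShuffle`, using `groups=4`, the FCB default, on 8 channels.

```python
import torch


def channel_shuffle(x, groups):
    # [N, C, H, W] -> [N, g, C/g, H, W] -> swap the two channel axes -> [N, C, H, W]
    n, c, h, w = x.shape
    return x.view(n, groups, c // groups, h, w).transpose(1, 2).reshape(n, c, h, w)


# Channel i holds the value i, so the output lists the new channel order
x = torch.arange(8).view(1, 8, 1, 1)
order = channel_shuffle(x, groups=4).flatten().tolist()
print(order)  # [0, 2, 4, 6, 1, 3, 5, 7]
```

Before the shuffle, channels 0-3 come from the first branch and 4-7 from the second. Afterwards the first half holds channels 0, 2, 4, 6, so the `HalfSplit` of the next block receives features from both branches instead of one.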

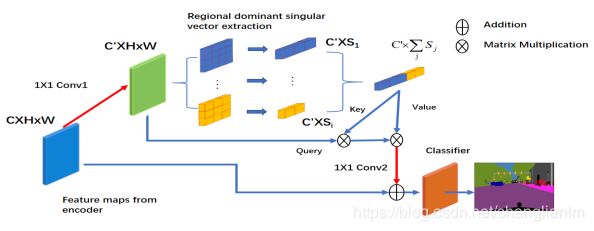

2-2 SVN module

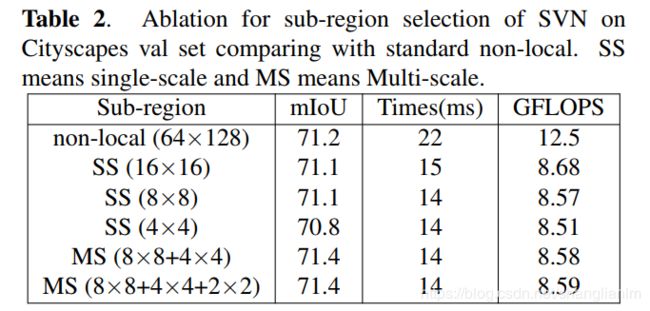

The SVN module simplifies the non-local block with SVD, so the whole model needs less computation, fewer parameters, and less memory:

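1. Two 1x1 convolutions, Conv1 and Conv2, reduce the number of channels used in the non-local computation;

2. The key and value are replaced with spatial regional dominant singular vectors, i.e. the dominant singular vector of each spatial region, so each query attends to one vector per region rather than to every pixel.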
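The two steps above can be sketched in PyTorch as follows. This is a hedged illustration, not the paper's or the repository's implementation: the region grid size, the channel reduction factor, which 1x1 convolution feeds the query and which feeds the singular vectors, the use of an exact `torch.linalg.svd` (the authors may use a cheaper approximation), the sign convention, and the output projection with residual are all assumptions. The names `regional_dominant_vectors` and `ReducedNonLocal` are hypothetical.

```python
import torch
import torch.nn as nn


def regional_dominant_vectors(feat, region=4):
    """Hypothetical helper: one dominant singular vector per spatial region.

    feat: [N, C, H, W] with H and W divisible by `region`; returns [N, region*region, C].
    """
    n, c, h, w = feat.shape
    assert h % region == 0 and w % region == 0
    rh, rw = h // region, w // region
    # Cut the map into a region x region grid: [N, R, C, rh*rw]
    x = feat.reshape(n, c, region, rh, region, rw).permute(0, 2, 4, 1, 3, 5)
    x = x.reshape(n, region * region, c, rh * rw)
    # Batched SVD of each C x (rh*rw) region matrix
    u, s, _ = torch.linalg.svd(x, full_matrices=False)
    v = u[..., 0] * s[..., :1]  # dominant left singular vector scaled by sigma_1
    # Singular vectors are defined up to sign; our convention makes each vector's sum >= 0
    sign = (v.sum(-1, keepdim=True) >= 0).to(v.dtype) * 2 - 1
    return v * sign


class ReducedNonLocal(nn.Module):
    """Hypothetical sketch of a non-local block whose key/value are regional singular vectors."""

    def __init__(self, channels, reduction=4, region=4):
        super().__init__()
        reduced = channels // reduction
        self.region = region
        self.conv1 = nn.Conv2d(channels, reduced, kernel_size=1)  # query (assumed role)
        self.conv2 = nn.Conv2d(channels, reduced, kernel_size=1)  # key/value source (assumed role)
        self.proj = nn.Conv2d(reduced, channels, kernel_size=1)   # back to `channels`

    def forward(self, x):
        n, _, h, w = x.shape
        q = self.conv1(x).flatten(2).transpose(1, 2)                # [N, HW, C']
        kv = regional_dominant_vectors(self.conv2(x), self.region)  # [N, R, C']
        attn = torch.softmax(q @ kv.transpose(1, 2), dim=-1)        # [N, HW, R]
        y = (attn @ kv).transpose(1, 2).reshape(n, -1, h, w)        # [N, C', H, W]
        return x + self.proj(y)                                     # residual connection


x = torch.randn(2, 32, 16, 16)
print(ReducedNonLocal(32)(x).shape)  # torch.Size([2, 32, 16, 16])
```

With a 4x4 grid on a 16x16 map, each of the 256 queries attends to 16 region vectors instead of 256 pixel keys, so the attention map shrinks from HW x HW to HW x R.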