ElasticSearch Installation

https://www.runaccepted.com/2020/03/29/ElasticSearch安装

Contents

- Installing ElasticSearch
- Installing in Docker on Linux
- ElasticSearch5.6.11
- Kibana5.6.11
- logstash5.6.11
- Linux version
- ElasticSearch: storing and retrieving data
- Logstash: collecting data and saving it to ES
- Kibana: visualization UI
- Raspberry Pi version
- elasticsearch-1.0.1
- Kibana-3.0.0
- logstash-2.0.0
- Runtime error: libjffi-1.2.so
- Adding the Maven dependency
- logback-spring.xml
- Running Spring Boot

Installing ElasticSearch

Installing in Docker on Linux

ElasticSearch5.6.11

Pull the image: docker pull elasticsearch:5.6.11

Create the local directories and configuration file:

mkdir -p /mydata/elasticsearch/config

mkdir -p /mydata/elasticsearch/data

echo "http.host: 0.0.0.0" >> /mydata/elasticsearch/config/elasticsearch.yml

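Create the container:

# --name: container name
# -p 9200: HTTP port exposed to clients; -p 9300: transport port for communication inside the cluster
# discovery.type=single-node: run as a one-node cluster
# ES_JAVA_OPTS: initial (-Xms) and maximum (-Xmx) heap size of Elasticsearch
# first -v: mount elasticsearch.yml from the host; second -v: keep the data directory on the host
docker run --name elasticsearch \
  -p 9200:9200 -p 9300:9300 \
  -e "discovery.type=single-node" \
  -e ES_JAVA_OPTS="-Xms128m -Xmx128m" \
  -v /mydata/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \
  -v /mydata/elasticsearch/data:/usr/share/elasticsearch/data \
  -d elasticsearch:5.6.11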

The commands as actually run:

[root@192 /]# docker pull elasticsearch:5.6.11

[root@192 /]#

[root@192 /]# mkdir -p /mydata/elasticsearch/config

[root@192 /]# mkdir -p /mydata/elasticsearch/data

[root@192 /]# echo "http.host: 0.0.0.0" >> /mydata/elasticsearch/config/elasticsearch.yml

[root@192 /]#

[root@192 /]# docker run --name elasticsearch -p 9200:9200 -p 9300:9300 \

> -e "discovery.type=single-node" \

> -e ES_JAVA_OPTS="-Xms128m -Xmx128m" \

> -v /mydata/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \

> -v /mydata/elasticsearch/data:/usr/share/elasticsearch/data -d elasticsearch:5.6.11

760ba5e8259c75eb577e493537b632ba4750cfae8f8e14ad361762d2e6a21d2d

[root@192 /]#

[root@192 /]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

760ba5e8259c elasticsearch:5.6.11 "/docker-entrypoint.…" 17 seconds ago Up 14 seconds 0.0.0.0:9200->9200/tcp, 0.0.0.0:9300->9300/tcp elasticsearch

[root@192 /]# systemctl stop firewalld

[root@192 /]#

Access it remotely at http://192.168.0.101:9200/, which returns:


{
  "name": "efBli3S",
  "cluster_name": "elasticsearch",
  "cluster_uuid": "RmnRPDI-T4G8hOH92bX5wQ",
  "version": {
    "number": "5.6.11",
    "build_hash": "bc3eef4",
    "build_date": "2018-08-16T15:25:17.293Z",
    "build_snapshot": false,
    "lucene_version": "6.6.1"
  },
  "tagline": "You Know, for Search"
}
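The same check can also be scripted. The Python 3 sketch below uses only the standard library. It fetches `GET /` and reads `version.number` from the body. The function names are illustrative, and the default base URL is the Docker host used in this section. The parsing part runs against a trimmed copy of the response above, so it works without a live node.

```python
import json
from urllib.request import urlopen


def fetch_root(base_url: str = "http://192.168.0.101:9200/", timeout: float = 5.0) -> str:
    """GET / on the node and return the raw JSON body (needs network access)."""
    with urlopen(base_url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def es_version(root_response: str) -> tuple:
    """Return (major, minor, patch) parsed from version.number, e.g. "5.6.11"."""
    number = json.loads(root_response)["version"]["number"]
    return tuple(int(part) for part in number.split(".")[:3])


def looks_like_es(root_response: str, expected_major: int) -> bool:
    """True if the body has the expected major version and the standard tagline."""
    body = json.loads(root_response)
    return (es_version(root_response)[0] == expected_major
            and body.get("tagline") == "You Know, for Search")


# Trimmed copy of the response shown above.
SAMPLE = """{
  "name": "efBli3S",
  "cluster_name": "elasticsearch",
  "version": {"number": "5.6.11", "lucene_version": "6.6.1"},
  "tagline": "You Know, for Search"
}"""

print(es_version(SAMPLE))        # (5, 6, 11)
print(looks_like_es(SAMPLE, 5))  # True
```

With the container running, `es_version(fetch_root())` should give `(5, 6, 11)`.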

Kibana5.6.11

[root@192 /]# docker pull kibana:5.6.11

[root@192 /]# docker run --name kibana -v /mydata/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml -p 5601:5601 -d kibana:5.6.11

25d1bd1a0531c0aec95e7885adf82dc14adbb71df43d4dbc15d6fee7cce58e34

docker: Error response from daemon: driver failed programming external connectivity on endpoint kibana (84808062bec07bb507621f089d0f31d73cc7c3f57402cb6c61444cffddbcc14d): (iptables failed: iptables --wait -t nat -A DOCKER -p tcp -d 0/0 --dport 5601 -j DNAT --to-destination 172.17.0.3:5601 ! -i docker0: iptables: No chain/target/match by that name.

(exit status 1)).

[root@192 /]#

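Restart Docker. The iptables error most likely appeared because running systemctl stop firewalld earlier flushed the DOCKER chain that Docker had set up, and restarting the Docker daemon recreates it. Restarting the daemon also stops the running containers (Exited (143) below means terminated by SIGTERM), so both containers are started again: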

[root@192 /]#

[root@192 /]# systemctl restart docker

[root@192 /]#

[root@192 /]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

25d1bd1a0531 kibana:5.6.11 "/docker-entrypoint.…" About a minute ago Created kibana

760ba5e8259c elasticsearch:5.6.11 "/docker-entrypoint.…" 21 minutes ago Exited (143) 7 seconds ago elasticsearch

[root@192 /]# docker start 25d1bd1a0531

25d1bd1a0531

[root@192 /]# docker start 760ba5e8259c

760ba5e8259c

[root@192 /]#

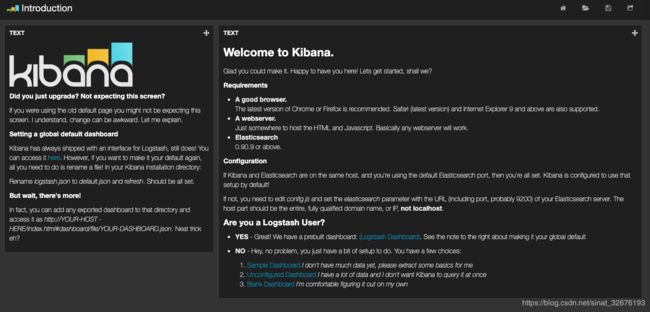

Open http://192.168.0.101:5601/

![kibana5](/kibana5.png)

Modify the configuration file. The original kibana.yml:

# Kibana is served by a back end server. This setting specifies the port to use.

#server.port: 5601

# Specifies the address to which the Kibana server will bind. IP addresses and host names are both valid values.

# The default is 'localhost', which usually means remote machines will not be able to connect.

# To allow connections from remote users, set this parameter to a non-loopback address.

#server.host: "localhost"

# Enables you to specify a path to mount Kibana at if you are running behind a proxy. This only affects

# the URLs generated by Kibana, your proxy is expected to remove the basePath value before forwarding requests

# to Kibana. This setting cannot end in a slash.

#server.basePath: ""

# The maximum payload size in bytes for incoming server requests.

#server.maxPayloadBytes: 1048576

# The Kibana server's name. This is used for display purposes.

#server.name: "your-hostname"

# The URL of the Elasticsearch instance to use for all your queries.

#elasticsearch.url: "http://localhost:9200"

# When this setting's value is true Kibana uses the hostname specified in the server.host

# setting. When the value of this setting is false, Kibana uses the hostname of the host

# that connects to this Kibana instance.

#elasticsearch.preserveHost: true

# Kibana uses an index in Elasticsearch to store saved searches, visualizations and

# dashboards. Kibana creates a new index if the index doesn't already exist.

#kibana.index: ".kibana"

# The default application to load.

#kibana.defaultAppId: "discover"

# If your Elasticsearch is protected with basic authentication, these settings provide

# the username and password that the Kibana server uses to perform maintenance on the Kibana

# index at startup. Your Kibana users still need to authenticate with Elasticsearch, which

# is proxied through the Kibana server.

#elasticsearch.username: "user"

#elasticsearch.password: "pass"

# Enables SSL and paths to the PEM-format SSL certificate and SSL key files, respectively.

# These settings enable SSL for outgoing requests from the Kibana server to the browser.

#server.ssl.enabled: false

#server.ssl.certificate: /path/to/your/server.crt

#server.ssl.key: /path/to/your/server.key

# Optional settings that provide the paths to the PEM-format SSL certificate and key files.

# These files validate that your Elasticsearch backend uses the same key files.

#elasticsearch.ssl.certificate: /path/to/your/client.crt

#elasticsearch.ssl.key: /path/to/your/client.key

# Optional setting that enables you to specify a path to the PEM file for the certificate

# authority for your Elasticsearch instance.

#elasticsearch.ssl.certificateAuthorities: [ "/path/to/your/CA.pem" ]

# To disregard the validity of SSL certificates, change this setting's value to 'none'.

#elasticsearch.ssl.verificationMode: full

# Time in milliseconds to wait for Elasticsearch to respond to pings. Defaults to the value of

# the elasticsearch.requestTimeout setting.

#elasticsearch.pingTimeout: 1500

# Time in milliseconds to wait for responses from the back end or Elasticsearch. This value

# must be a positive integer.

#elasticsearch.requestTimeout: 30000

# List of Kibana client-side headers to send to Elasticsearch. To send *no* client-side

# headers, set this value to [] (an empty list).

#elasticsearch.requestHeadersWhitelist: [ authorization ]

# Header names and values that are sent to Elasticsearch. Any custom headers cannot be overwritten

# by client-side headers, regardless of the elasticsearch.requestHeadersWhitelist configuration.

#elasticsearch.customHeaders: {}

# Time in milliseconds for Elasticsearch to wait for responses from shards. Set to 0 to disable.

#elasticsearch.shardTimeout: 0

# Time in milliseconds to wait for Elasticsearch at Kibana startup before retrying.

#elasticsearch.startupTimeout: 5000

# Specifies the path where Kibana creates the process ID file.

#pid.file: /var/run/kibana.pid

# Enables you specify a file where Kibana stores log output.

#logging.dest: stdout

# Set the value of this setting to true to suppress all logging output.

#logging.silent: false

# Set the value of this setting to true to suppress all logging output other than error messages.

#logging.quiet: false

# Set the value of this setting to true to log all events, including system usage information

# and all requests.

#logging.verbose: false

# Set the interval in milliseconds to sample system and process performance

# metrics. Minimum is 100ms. Defaults to 5000.

#ops.interval: 5000

# The default locale. This locale can be used in certain circumstances to substitute any missing

# translations.

#i18n.defaultLocale: "en"

Create /mydata/kibana/config/kibana.yml, the host-side copy of the Kibana configuration file that is mounted into the container:

[root@192 /]# mkdir -p /mydata/kibana/config

[root@192 /]# cd /mydata/kibana/config

[root@192 config]# nano kibana.yml

bash: nano: command not found

[root@192 config]# yum install nano

[root@192 config]# nano kibana.yml

#############################################

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.url: "http://192.168.0.101:9200"

elasticsearch.requestTimeout: 90000

i18n.defaultLocale: "zh-CN"

##################################################

[root@192 config]# docker rm 25d1bd1a0531

25d1bd1a0531

[root@192 config]# docker run --name kibana -v /mydata/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml -p 5601:5601 -d kibana:5.6.11

7122d84209e4d082dc6e0e18065567eb0bbfd5e4aa3017b3ff8d8457c217b66b

[root@192 config]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7122d84209e4 kibana:5.6.11 "/docker-entrypoint.…" 25 minutes ago Up 10 minutes 0.0.0.0:5601->5601/tcp kibana

760ba5e8259c elasticsearch:5.6.11 "/docker-entrypoint.…" About an hour ago Up 49 minutes 0.0.0.0:9200->9200/tcp, 0.0.0.0:9300->9300/tcp elasticsearch

[root@192 config]#

[root@192 config]#

[root@192 config]# docker logs 7122d84209e4

"tags":["status","ui settings","info"],"pid":9,"state":"green","message":"Status changed from yellow to green - Ready","prevState":"yellow","prevMsg":"Elasticsearch plugin is yellow"}

logstash5.6.11

[root@192 config]# docker pull logstash:5.6.11

[root@192 config]# mkdir -p /mydata/logstash

[root@192 config]# cd /mydata/logstash

[root@192 logstash]# nano logstash.conf

#################################################

input {
  tcp {
    port => 4560
    codec => json_lines
  }
}

output {
  elasticsearch {
    hosts => ["192.168.0.101:9200"]
    index => "applog"
    #user => "elastic"
    #password => "changeme"
  }
  stdout { codec => rubydebug }
}

####################################################

[root@192 logstash]#

[root@192 logstash]# pwd

/mydata/logstash

[root@192 logstash]# docker run --name logstash -p 4560:4560 -v /mydata/logstash/logstash.conf:/etc/logstash.conf --link elasticsearch:elasticsearch -d logstash:5.6.11 logstash -f /etc/logstash.conf

c7473e2145bc7478835f08b08caf8134b126899a4031b78baf5b234c732bd7fa

[root@192 logstash]#

[root@192 logstash]#

[root@192 logstash]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c7473e2145bc logstash:5.6.11 "/docker-entrypoint.…" 6 seconds ago Up 3 seconds 0.0.0.0:4560->4560/tcp logstash

7122d84209e4 kibana:5.6.11 "/docker-entrypoint.…" About an hour ago Up 49 minutes 0.0.0.0:5601->5601/tcp kibana

760ba5e8259c elasticsearch:5.6.11 "/docker-entrypoint.…" 2 hours ago Up 2 hours 0.0.0.0:9200->9200/tcp, 0.0.0.0:9300->9300/tcp elasticsearch

[root@192 logstash]#
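The tcp input with the json_lines codec can be exercised by hand before any Spring Boot application is wired up. The json_lines codec treats each newline-terminated line as one JSON event. The sketch below is a hypothetical Python 3 helper (standard library only) that encodes events that way and writes them to port 4560. The default host is the Docker host from this section; adjust it for other setups.

```python
import json
import socket


def encode_json_lines(events) -> bytes:
    """Serialize each event as one JSON object followed by a newline, UTF-8 encoded."""
    return b"".join(
        json.dumps(event, ensure_ascii=False).encode("utf-8") + b"\n" for event in events
    )


def send_to_logstash(events, host: str = "192.168.0.101", port: int = 4560,
                     timeout: float = 5.0) -> None:
    """Connect to the Logstash tcp input and write all encoded events in one go."""
    payload = encode_json_lines(events)
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(payload)
```

An example call is `send_to_logstash([{"message": "hello from python", "level": "INFO"}])`. If the pipeline works, the event should appear in the rubydebug output of `docker logs logstash` and in the `applog` index. `json.dumps` escapes newlines inside strings, so a multi-line message still travels as a single line.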

Linux version

ElasticSearch: storing and retrieving data

https://www.elastic.co/cn/downloads/elasticsearch

Elasticsearch refuses to start as root; it must be started by a non-root user.

Extract the archive and copy it to /usr/local/elasticsearch-7.6.1.

Edit elasticsearch.yml:

[root@192 Downloads]# tar zxvf elasticsearch-7.6.1-linux-x86_64.tar.gz

[root@192 Downloads]# ls

elasticsearch-7.6.1 elasticsearch-7.6.1-linux-x86_64.tar.gz tomcat-users.xml

[root@192 Downloads]# cp -rf elasticsearch-7.6.1 /usr/local/elasticsearch-7.6.1

[root@192 Downloads]# cd /usr/local/elasticsearch-7.6.1

[root@192 elasticsearch-7.6.1]# ls

bin config jdk lib LICENSE.txt logs modules NOTICE.txt plugins README.asciidoc

[root@192 elasticsearch-7.6.1]# cd config

[root@192 config]# ls

elasticsearch.yml jvm.options log4j2.properties role_mapping.yml roles.yml users users_roles

[root@192 config]# nano elasticsearch.yml

elasticsearch.yml

cluster.name: es-linux

#

# ------------------------------------ Node ------------------------------------

#

# Use a descriptive name for the node:

#

node.name: node-linux

#

# Add custom attributes to the node:

#

# node.attr.rack: r1

#

# ----------------------------------- Paths ------------------------------------

#

# Path to directory where to store the data (separate multiple locations by comma):

#

path.data: /usr/local/elasticsearch-7.6.1/data

#

# Path to log files:

#

path.logs: /usr/local/elasticsearch-7.6.1/logs

#

# ----------------------------------- Memory -----------------------------------

#

# Lock the memory on startup:

#

bootstrap.memory_lock: false

bootstrap.system_call_filter: false

# ---------------------------------- Network -----------------------------------

#

# Set the bind address to a specific IP (IPv4 or IPv6):

#

network.host: 0.0.0.0

#

# Set a custom port for HTTP:

#

http.port: 9200

#

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when this node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

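# (discovery.seed_hosts replaces the deprecated discovery.zen.ping.unicast.hosts in 7.x)

discovery.seed_hosts: ["127.0.0.1","192.168.0.100","192.168.0.112"]

#

# Bootstrap the cluster using an initial set of master-eligible nodes:

# (list node.name values here, not the cluster.name)

#

cluster.initial_master_nodes: ["node-linux"]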

#

# For more information, consult the discovery and cluster formation module documentation.

#
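Two discovery settings in a 7.x elasticsearch.yml are easy to get wrong:

- `cluster.initial_master_nodes` must contain node names (values of `node.name`), not the `cluster.name`.
- `discovery.zen.ping.unicast.hosts` is the deprecated pre-7.0 name of `discovery.seed_hosts`.

The Python sketch below is a rough linter for both. It assumes the file only uses flat `key: value` lines and JSON-style lists as above. It is not a YAML parser, and the function names are made up for illustration.

```python
import json


def read_flat_settings(text: str) -> dict:
    """Collect top-level `key: value` lines from elasticsearch.yml.

    Not a real YAML parser: comments and blank lines are skipped, values stay
    strings, and only JSON-style lists such as ["a","b"] become Python lists.
    """
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        settings[key] = json.loads(value) if value.startswith("[") else value
    return settings


def discovery_problems(settings: dict) -> list:
    """Flag the two discovery mistakes described above."""
    problems = []
    node_name = settings.get("node.name")
    initial = settings.get("cluster.initial_master_nodes", [])
    if initial and node_name not in initial:
        problems.append(f"node.name {node_name!r} is not in cluster.initial_master_nodes {initial}")
    if "discovery.zen.ping.unicast.hosts" in settings:
        problems.append("discovery.zen.ping.unicast.hosts is deprecated; use discovery.seed_hosts")
    return problems
```

To use it, run `discovery_problems(read_flat_settings(open("config/elasticsearch.yml").read()))`. An empty list means neither mistake was found.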

jvm.options

-Xms256m

-Xmx256m
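Elasticsearch recommends giving -Xms and -Xmx the same value. When it binds to a non-loopback address (as network.host: 0.0.0.0 does here), the heap-size bootstrap check refuses to start if they differ. Below is a small Python sketch that reads both flags from jvm.options and compares them. It only understands plain `-Xms<size>` and `-Xmx<size>` lines with an optional k/m/g suffix, and the helper names are hypothetical.

```python
import re

_MULTIPLIERS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_jvm_size(value: str) -> int:
    """Convert a JVM size such as "256m" or "1g" into bytes."""
    match = re.fullmatch(r"(\d+)([kKmMgG]?)", value.strip())
    if match is None:
        raise ValueError(f"unrecognised JVM size: {value!r}")
    digits, unit = match.groups()
    return int(digits) * _MULTIPLIERS[unit.lower()]


def heap_from_jvm_options(text: str) -> tuple:
    """Return (Xms, Xmx) in bytes; None for a flag that is missing."""
    xms = xmx = None
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("-Xms"):
            xms = parse_jvm_size(line[len("-Xms"):])
        elif line.startswith("-Xmx"):
            xmx = parse_jvm_size(line[len("-Xmx"):])
    return xms, xmx


xms, xmx = heap_from_jvm_options("-Xms256m\n-Xmx256m\n")
print(xms, xmx, xms == xmx)  # 268435456 268435456 True
```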

Create a user and set ownership

[root@192 bin]# adduser elasticsearch

[root@192 bin]# passwd elasticsearch

[root@192 local]# chown -R elasticsearch:elasticsearch elasticsearch-7.6.1

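If startup fails with:

ERROR Unable to invoke factory method in class org.apache.logging.log4j.core.appender.RollingFileAppender for element RollingFile

this usually means the elasticsearch user cannot write to the logs directory, for example because log files were created by an earlier run as root. The chown -R above fixes that. The author also installed log4j: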

[root@192 bin]# yum install -y log4j

max file descriptors [4096] for elasticsearch process likely too low, increase to at least [65536]

[root@192 bin]# nano /etc/security/limits.conf

* soft nofile 65536

* hard nofile 65536

* soft nproc 4096

* hard nproc 4096

max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

nano /etc/sysctl.conf

vm.max_map_count=262144

sysctl -p
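The two bootstrap-check failures above can be caught before starting Elasticsearch. This Python sketch is Linux only and uses the standard library. It compares the current process's soft open-files limit and /proc/sys/vm/max_map_count against the minimums quoted in the error messages. The thresholds are copied from those messages, and the function names are illustrative. limits.conf is typically applied at login (by pam_limits), so run the check in a fresh session as the elasticsearch user, for example after `su elasticsearch`.

```python
import math
import resource

MIN_NOFILE = 65536       # from the "max file descriptors" error message above
MIN_MAP_COUNT = 262144   # from the "vm.max_map_count" error message above


def limit_problems(nofile: float, map_count: int) -> list:
    """Return a description of every limit that is below the required minimum."""
    problems = []
    if nofile < MIN_NOFILE:
        problems.append(f"max file descriptors [{nofile}] too low, need at least [{MIN_NOFILE}]")
    if map_count < MIN_MAP_COUNT:
        problems.append(f"vm.max_map_count [{map_count}] too low, need at least [{MIN_MAP_COUNT}]")
    return problems


def check_this_process(map_count_path: str = "/proc/sys/vm/max_map_count") -> list:
    """Check this process's soft RLIMIT_NOFILE and the kernel's max_map_count."""
    soft, _hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    nofile = math.inf if soft == resource.RLIM_INFINITY else soft  # treat "unlimited" as infinite
    with open(map_count_path) as f:
        map_count = int(f.read().strip())
    return limit_problems(nofile, map_count)
```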

Run

[root@192 bin]# su elasticsearch

[elasticsearch@192 bin]$ ./elasticsearch

Then open http://192.168.0.113:9200/

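Logstash: collecting data and saving it to ES

Download Logstash from https://www.elastic.co/cn/downloads/logstash and extract it; the sample pipeline configuration is in the config folder.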

nano config/logstash-sample.conf

# Sample Logstash configuration for creating a simple
# Beats -> Logstash -> Elasticsearch pipeline.

input {
  beats {
    port => 5044
  }
}

output {
  elasticsearch {
    hosts => ["http://localhost:9200"]
    index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
    #user => "elastic"
    #password => "changeme"
  }
}
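The index line of the sample builds one index per Beat, version and day. The Python function below only mimics that sprintf pattern for illustration; it is not Logstash code. Logstash formats %{+YYYY.MM.dd} from the event's @timestamp in UTC. As a result, events logged shortly after local midnight in UTC+8 still land in the previous day's index. The beat and version values are example metadata, not taken from the original setup.

```python
from datetime import datetime, timedelta, timezone


def render_index(metadata: dict, timestamp: datetime) -> str:
    """Mimic "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}".

    `timestamp` must be timezone-aware; it is converted to UTC before the
    date part is formatted, matching how Logstash formats @timestamp.
    """
    day = timestamp.astimezone(timezone.utc).strftime("%Y.%m.%d")
    return f"{metadata['beat']}-{metadata['version']}-{day}"


CST = timezone(timedelta(hours=8))               # UTC+8, used only for the example below
meta = {"beat": "filebeat", "version": "7.6.1"}  # example values, not from the original setup
print(render_index(meta, datetime(2020, 3, 30, 1, 0, tzinfo=CST)))  # filebeat-7.6.1-2020.03.29
```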

Change the contents of logstash-sample.conf to:

input {
  tcp {
    port => 4560
    codec => json_lines
  }
}

output {
  elasticsearch {
    hosts => ["http://192.168.0.113:9200"]
    index => "applog"
    #user => "elastic"
    #password => "changeme"
  }
  stdout { codec => rubydebug }
}

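Install the json_lines codec plugin (run from the bin directory):

./logstash-plugin list | grep json_lines

./logstash-plugin install logstash-codec-json_lines

Run Logstash with the edited configuration:

./logstash -f ../config/logstash-sample.conf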

Kibana: visualization UI

https://www.elastic.co/cn/downloads/kibana

config/kibana.yml

server.port: 5601

# To allow connections from remote users

server.host: "192.168.0.100"

# The URLs of the Elasticsearch instances to use for all your queries.

elasticsearch.hosts: ["http://192.168.0.100:9200"]

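Running the whole ELK stack in the VM plus the IntelliJ project on the host froze my laptop completely... so I installed ELK on a Raspberry Pi instead.

Raspberry Pi version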

https://zhuanlan.zhihu.com/p/23111516

elasticsearch-1.0.1

https://www.elastic.co/cn/downloads/past-releases/elasticsearch-1-0-1

pi@pi:/usr/local $ cd es1

pi@pi:/usr/local/es1 $ ls

bin config lib LICENSE.txt NOTICE.txt README.textile

root@pi:/usr/local/es1# cd config

root@pi:/usr/local/es1/config# nano elasticsearch.yml

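# Cluster name. To build a cluster, give every Elasticsearch node on the same subnet the same cluster name; the nodes discover each other by broadcast and form a cluster automatically, which is very convenient.

cluster.name: es-pi

# Node name: identifies this node and distinguishes it from the other nodes in the same cluster.

node.name: "node-pi"

# Whether this node can be master. A cluster must have exactly one active master node. Once a master has been elected, the other nodes with master: true only act as standby masters; one of them takes over only if the current master goes down.

node.master: true

# Whether this is a data node. A cluster needs at least one data node to store data.

node.data: true

# Default number of shards per index in this cluster. All Elasticsearch data is stored in indices (not the same concept as an index in a SQL database), and each index is split into shards that are stored on different data nodes. This is what makes it a distributed document store.

index.number_of_shards: 1

# Default number of replicas per shard. Each shard gets this many replica copies, and a replica is normally not stored on the same host as its primary shard. When a host goes down, the shards on it are lost; if replicas are configured (effectively backups), the index stays complete. On a single node without a cluster, set this to 0.

index.number_of_replicas: 0

# Path where the node stores its data. This can be left out; by default data is stored inside the Elasticsearch folder.

# path.data: /path/to/data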
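The shard and replica comments above come down to simple arithmetic. An index with s primary shards and r replicas stores s * (1 + r) shard copies. A replica is never allocated to the same node as another copy of its shard, so all copies can only be assigned when there are at least 1 + r data nodes; otherwise the cluster reports yellow. The Python sketch below is simplified: it ignores other allocation rules such as disk watermarks, and the function names are illustrative. It also shows why index.number_of_replicas: 0 suits a single Raspberry Pi: with one replica, the index would stay yellow.

```python
def total_shard_copies(shards: int, replicas: int) -> int:
    """Primaries plus replicas: every primary shard has `replicas` extra copies."""
    return shards * (1 + replicas)


def expected_health(replicas: int, data_nodes: int) -> str:
    """Simplified health estimate for one index.

    Copies of the same shard must sit on different nodes, so all of them can be
    assigned only when there are at least 1 + replicas data nodes. Other
    allocation rules (disk watermarks, filtering) are ignored here.
    """
    if data_nodes < 1:
        return "red"      # no node can hold the primaries
    if data_nodes >= 1 + replicas:
        return "green"    # primaries and all replicas assigned
    return "yellow"       # primaries assigned, some replicas unassigned


print(total_shard_copies(5, 1), expected_health(replicas=1, data_nodes=1))  # 10 yellow
```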

root@pi:/usr/local/es1/bin# nano elasticsearch.in.sh

root@pi:/usr/local/es1/bin#

ES_MIN_MEM=128m

ES_MAX_MEM=128m

Kibana-3.0.0

https://www.elastic.co/cn/downloads/past-releases/kibana-3-0-0

root@pi:/home/pi# cd /usr/local/kibana

root@pi:/usr/local/kibana# ls

app build.txt config.js css favicon.ico font img index.html LICENSE.md README.md vendor

root@pi:/usr/local/kibana# nano config.js

elasticsearch: "http://192.168.0.112:9200",

Kibana 3 is a static web application, so it has to be served by Tomcat or nginx.

Copy the whole kibana folder into Tomcat's webapps directory:

root@pi:/usr/local/tomcat8/webapps# cp -rf /usr/local/kibana /usr/local/tomcat8/webapps


root@pi:/usr/local/tomcat8/webapps# ls

docs examples host-manager kibana manager ROOT

Start Tomcat and open http://192.168.0.112:8080/kibana/index.html

logstash-2.0.0

https://www.elastic.co/cn/downloads/past-releases/logstash-2-0-0

root@pi:/home/pi/Downloads# tar zxvf logstash-2.0.0.tar.gz

root@pi:/home/pi/Downloads# cp -rf logstash-2.0.0 /usr/local/logstash

root@pi:/home/pi# cd /usr/local/logstash

root@pi:/usr/local/logstash# ls

bin CHANGELOG.md CONTRIBUTORS Gemfile Gemfile.jruby-1.9.lock lib LICENSE logstash.conf NOTICE.TXT vendor

root@pi:/usr/local/logstash# cd bin

root@pi:/usr/local/logstash/bin# ls

logstash logstash.bat logstash.lib.sh plugin plugin.bat rspec rspec.bat setup.bat

root@pi:/usr/local/logstash/bin# ./logstash

Runtime error: libjffi-1.2.so

java.lang.UnsatisfiedLinkError: /usr/local/logstash/vendor/jruby/lib/jni/arm-Linux/libjffi-1.2.so: /usr/local/logstash/vendor/jruby/lib/jni/arm-Linux/libjffi-1.2.so: cannot open shared object file: No such file or directory

https://discuss.elastic.co/t/logstash-7-x-on-raspberry-pi-4/205348

Install jruby, texinfo and the build tools

root@pi:/usr/local/logstash/bin# apt-get install apt-transport-https jruby -y

root@pi:/usr/local/logstash/bin# apt-get install texinfo build-essential ant git -y

perl-base 5.24.1-3+deb9u6 [no candidate version]

Install perl

https://packages.debian.org/buster/arm64/perl/download

root@pi:/usr/local/logstash/bin# nano /etc/apt/sources.list

# mirror

deb http://ftp.cn.debian.org/debian buster main

# update the package lists

root@pi:/usr/local/logstash/bin# apt-get update

W: GPG error: http://ftp.us.debian.org/debian stretch Release: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY <<key>>

Import the missing key:

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys <<key>>

W: Target Packages (main/binary-armhf/Packages) is configured multiple times in /etc/apt/sources.list.d/elastic-7.x.list:1 and /etc/apt/sources.list.d/elastic-7.x.list:2

root@pi:/usr/local/logstash/bin# cd /etc/apt/sources.list.d

root@pi:/etc/apt/sources.list.d# ls

docker.list elastic-7.x.list raspi.list

root@pi:/etc/apt/sources.list.d# rm -r elastic-7.x.list

root@pi:/etc/apt/sources.list.d# sudo apt-get update

root@pi:/etc/apt/sources.list.d#

root@pi:/etc/apt/sources.list.d#

root@pi:/etc/apt/sources.list.d# apt-get install perl

Still: perl-base 5.24.1-3+deb9u6 [no candidate version]

Install it manually

root@pi:/home/pi/Downloads# wget http://www.cpan.org/src/5.0/perl-5.24.1.tar.gz

root@pi:/home/pi/Downloads# tar zxvf perl-5.24.1.tar.gz

root@pi:/home/pi/Downloads# cp -rf perl-5.24.1 /usr/local/perl-5.24.1

root@pi:/home/pi/Downloads# cd /usr/local/perl-5.24.1

root@pi:/usr/local/perl-5.24.1# ./Configure -des -Dprefix=/usr

root@pi:/usr/local/perl-5.24.1# make && make install

root@pi:/usr/local/perl-5.24.1# perl -v

Build the jffi library

root@pi:/usr/local# git clone https://github.com/jnr/jffi

root@pi:/usr/local# cd jffi/

root@pi:/usr/local/jffi# ant jar

Replace jffi

Replace libjffi-1.2.so in /usr/local/logstash/vendor/jruby/lib/jni/arm-Linux with the newly built libjffi-1.2.so from /usr/local/jffi/build/jni/ (the old file is kept as libjffi-1.2.so.old):

root@pi:/usr/local/jffi# cd /usr/local/logstash/vendor/jruby/lib/jni/arm-Linux

root@pi:/usr/local/logstash/vendor/jruby/lib/jni/arm-Linux# ls

libjffi-1.2.so

root@pi:/usr/local/logstash/vendor/jruby/lib/jni/arm-Linux# cd /usr/local/jffi/build/jni/

root@pi:/usr/local/jffi/build/jni# ls

com_kenai_jffi_Foreign.h com_kenai_jffi_Foreign_InValidInstanceHolder.h com_kenai_jffi_ObjectBuffer.h jffi libjffi-1.2.so

com_kenai_jffi_Foreign_InstanceHolder.h com_kenai_jffi_Foreign_ValidInstanceHolder.h com_kenai_jffi_Version.h libffi-arm-linux

root@pi:/usr/local/jffi/build/jni# cd /usr/local/logstash/vendor/jruby/lib/jni/arm-Linux

root@pi:/usr/local/logstash/vendor/jruby/lib/jni/arm-Linux# mv libjffi-1.2.so libjffi-1.2.so.old

root@pi:/usr/local/logstash/vendor/jruby/lib/jni/arm-Linux# cd /usr/local/jffi/build/jni/

root@pi:/usr/local/jffi/build/jni# cp libjffi-1.2.so /usr/local/logstash/vendor/jruby/lib/jni/arm-Linux/libjffi-1.2.so

root@pi:/usr/local/jffi/build/jni# cd /usr/local/logstash/vendor/jruby/lib/jni/arm-Linux

root@pi:/usr/local/logstash/vendor/jruby/lib/jni/arm-Linux# ls

libjffi-1.2.so libjffi-1.2.so.old

root@pi:/usr/local/logstash/vendor/jruby/lib/jni/arm-Linux#

root@pi:/usr/local/logstash/vendor/jruby/lib/jni/arm-Linux#

root@pi:/usr/local/logstash/vendor/jruby/lib/jni/arm-Linux#

root@pi:/usr/local/logstash/vendor/jruby/lib/jni/arm-Linux# cd /usr/local/logstash/bin

root@pi:/usr/local/logstash/bin# ./logstash

io/console not supported; tty will not be manipulated

No command given

Usage: logstash <command> [command args]

Run a command with the --help flag to see the arguments.

For example: logstash agent --help

Available commands:

agent - runs the logstash agent

version - emits version info about this logstash

root@pi:/usr/local/logstash/bin# ls

logstash logstash.bat logstash.lib.sh plugin plugin.bat rspec rspec.bat setup.bat

root@pi:/usr/local/logstash/bin# logstash

bash: logstash: command not found

root@pi:/usr/local/logstash/bin# ./logstash --help

io/console not supported; tty will not be manipulated

Usage:

/bin/logstash agent [OPTIONS]

Options:

-f, --config CONFIG_PATH Load the logstash config from a specific file

or directory. If a directory is given, all

files in that directory will be concatenated

in lexicographical order and then parsed as a

single config file. You can also specify

wildcards (globs) and any matched files will

be loaded in the order described above.

-e CONFIG_STRING Use the given string as the configuration

data. Same syntax as the config file. If no

input is specified, then the following is

used as the default input:

"input { stdin { type => stdin } }"

and if no output is specified, then the

following is used as the default output:

"output { stdout { codec => rubydebug } }"

If you wish to use both defaults, please use

the empty string for the '-e' flag.

(default: "")

-w, --filterworkers COUNT Sets the number of filter workers to run.

(default: 2)

-l, --log FILE Write logstash internal logs to the given

file. Without this flag, logstash will emit

logs to standard output.

-v Increase verbosity of logstash internal logs.

Specifying once will show 'informational'

logs. Specifying twice will show 'debug'

logs. This flag is deprecated. You should use

--verbose or --debug instead.

--quiet Quieter logstash logging. This causes only

errors to be emitted.

--verbose More verbose logging. This causes 'info'

level logs to be emitted.

--debug Most verbose logging. This causes 'debug'

level logs to be emitted.

-V, --version Emit the version of logstash and its friends,

then exit.

-p, --pluginpath PATH A path of where to find plugins. This flag

can be given multiple times to include

multiple paths. Plugins are expected to be

in a specific directory hierarchy:

'PATH/logstash/TYPE/NAME.rb' where TYPE is

'inputs' 'filters', 'outputs' or 'codecs'

and NAME is the name of the plugin.

-t, --configtest Check configuration for valid syntax and then exit.

-h, --help print help

root@pi:/usr/local/logstash/bin#

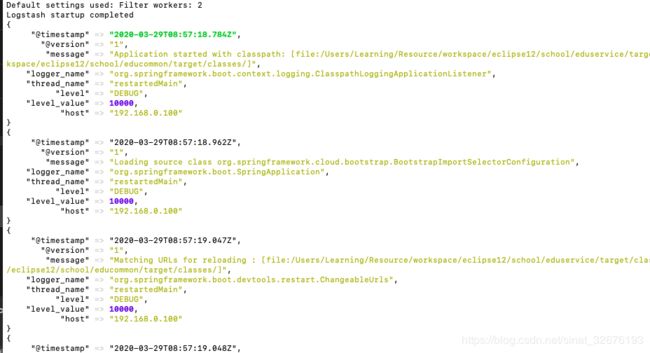

Run Logstash with an inline configuration (read from stdin, print with rubydebug)

root@pi:/usr/local/logstash/bin# ./logstash -e 'input{stdin{}}output{stdout{codec=>rubydebug}}'

io/console not supported; tty will not be manipulated

Default settings used: Filter workers: 2

Logstash startup completed

Hello World

{

"message" => "Hello World",

"@version" => "1",

"@timestamp" => "2020-03-29T08:28:52.594Z",

"host" => "pi"

}

Configuration file

root@pi:/usr/local/logstash/bin# cd /usr/local/logstash

root@pi:/usr/local/logstash# ls

bin CHANGELOG.md CONTRIBUTORS Gemfile Gemfile.jruby-1.9.lock lib LICENSE logstash.conf NOTICE.TXT vendor

root@pi:/usr/local/logstash# nano logstash.conf

input {
  tcp {
    port => 4560
    codec => json_lines
  }
}

output {
  elasticsearch {
    hosts => ["http://192.168.0.112:9200"]
    index => "applog"
    #user => "elastic"
    #password => "changeme"
  }
  stdout { codec => rubydebug }
}

Open port 4560

root@pi:/usr/local/logstash# iptables -I INPUT -i eth0 -p tcp --dport 4560 -j ACCEPT

root@pi:/usr/local/logstash# iptables -I OUTPUT -o eth0 -p tcp --sport 4560 -j ACCEPT
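After opening the port, reachability can be checked from the development machine before starting the Spring Boot application. Below is a minimal Python sketch. It only tests that a TCP connection to the given host and port can be opened, not that Logstash parses what it receives. The function name is illustrative.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection raises OSError (refused, unreachable, timed out) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Assuming the Pi address used elsewhere in this section, `port_open("192.168.0.112", 4560)` should return True once Logstash is listening.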

Adding the Maven dependency

<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>6.3</version>
</dependency>

logback-spring.xml

<configuration>
    <include resource="org/springframework/boot/logging/logback/base.xml"/>
    <include resource="org/springframework/boot/logging/logback/defaults.xml"/>
    <include resource="org/springframework/boot/logging/logback/console-appender.xml"/>

    <property name="APP_NAME" value="edu-service"/>
    <property name="LOG_FILE_PATH" value="edu-service"/>
    <contextName>${APP_NAME}</contextName>

    <!-- Local log file, rolled over daily, keeping 30 days of history -->
    <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_FILE_PATH}/${APP_NAME}-%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>30</maxHistory>
        </rollingPolicy>
        <encoder>
            <pattern>${FILE_LOG_PATTERN}</pattern>
        </encoder>
    </appender>

    <!-- Send log events as JSON over TCP to the Logstash tcp input (json_lines codec, port 4560) -->
    <appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <destination>192.168.0.100:4560</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder"/>
    </appender>

    <root level="DEBUG">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="FILE"/>
        <appender-ref ref="LOGSTASH"/>
    </root>
</configuration>

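Running Spring Boot

After starting the Spring Boot application, open the Logstash dashboard in Kibana:

http://192.168.0.112:8080/kibana/index.html#/dashboard/file/logstash.json

Raspberry Pi terminal

A single run produced more than two thousand log entries.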
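The number of stored entries can also be confirmed without Kibana by querying the index with the `_count` API. The Python sketch below assumes Elasticsearch on the Pi at http://192.168.0.112:9200 (the address used in logstash.conf) and the applog index. The helper names are hypothetical.

```python
import json
from urllib.request import urlopen


def count_url(base_url: str, index: str) -> str:
    """Build the URL of the count API for one index."""
    return f"{base_url.rstrip('/')}/{index}/_count"


def parse_count(body: str) -> int:
    """Extract the document count from a _count response body."""
    return json.loads(body)["count"]


def count_documents(base_url: str = "http://192.168.0.112:9200", index: str = "applog",
                    timeout: float = 5.0) -> int:
    """GET <base_url>/<index>/_count and return the number of documents (needs network access)."""
    with urlopen(count_url(base_url, index), timeout=timeout) as resp:
        return parse_count(resp.read().decode("utf-8"))
```

Calling `count_documents()` before and after a run of the application shows how many log events that run added.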