Training a face detector with yolov3-tiny

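On my way home for the Spring Festival holiday, while entering Beijing West Railway Station to catch a high-speed train, I noticed that there are now many self-service identity-verification machines at the gates. They match the ID card against a photo of the traveller to decide whether the two belong to the same person, so no manual check is needed, which saves time and effort. With nothing much to do at home over the holiday, I started thinking about face recognition, found it fun, and decided to begin by training a face detection model. The results here are not compared against current state-of-the-art models; I just wanted to walk through the whole pipeline for fun.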

1. Preparing the face detection data

I used the widerface dataset (http://mmlab.ie.cuhk.edu.hk/projects/WIDERFace/index.html)

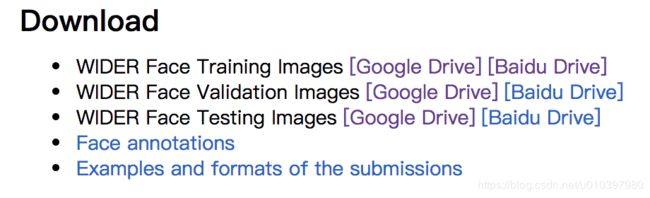

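Download the training set, the validation set, and the annotation labels (the positions of the face bounding boxes). This gives wider_face_split.zip, WIDER_train.zip and WIDER_val.zip, which unzip into the folders wider_face_split, WIDER_train and WIDER_val. wider_face_split holds the bounding-box annotations, with the labels stored in txt format, but the yolov3 training setup used here expects a dataset in VOC format. So a new VOC-format xml label has to be generated for every image.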
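
To make the txt layout concrete, here is a minimal sketch of how the bbx_gt file is organised: an image path, then the number of faces, then one row per face starting with x, y, w, h (the remaining columns are attributes such as blur and occlusion). The function name parse_wider_annotations and the sample rows are made up for illustration; they are not taken from the dataset or from my scripts.

```python
def parse_wider_annotations(lines):
    """Parse WIDER FACE bbx_gt lines into {image_path: [(x, y, w, h), ...]}."""
    annotations = {}
    i = 0
    while i < len(lines):
        name = lines[i].strip()
        if not name.endswith(".jpg"):
            # Not an image path (e.g. the placeholder row of a zero-face image).
            i += 1
            continue
        count = int(lines[i + 1].strip())
        boxes = []
        for row in lines[i + 2:i + 2 + count]:
            # Only the first four columns (x, y, w, h) are needed here.
            x, y, w, h = [int(v) for v in row.split()[:4]]
            boxes.append((x, y, w, h))
        annotations[name] = boxes
        i += 2 + count
    return annotations


# Hypothetical sample in the same layout as wider_face_train_bbx_gt.txt.
sample = """0--Parade/example_1.jpg
1
449 330 122 149 0 0 0 0 0 0
0--Parade/example_2.jpg
2
361 98 263 339 0 0 0 0 0 0
78 221 7 8 2 0 0 0 0 0
0--Parade/example_3.jpg
0
0 0 0 0 0 0 0 0 0 0
""".splitlines()

parsed = parse_wider_annotations(sample)
for path, boxes in sorted(parsed.items()):
    print(path, boxes)
```

Note that the boxes are stored as top-left corner plus width and height, while VOC xml wants xmin, ymin, xmax, ymax, which is why the conversion adds w and h to the corner.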

The script below converts the txt labels to xml. It is called txt_2_xml.py; remember to change the txt_label and img_path paths, then run python2 txt_2_xml.py

#coding:utf-8
import os
import cv2
from xml_writer import PascalVocWriter

# Uncomment these two lines (and comment out the next two) to convert the validation set.
#txt_label = "wider_face_split/wider_face_val_bbx_gt.txt"
#img_path = "WIDER_val/images"
txt_label = "wider_face_split/wider_face_train_bbx_gt.txt"
img_path = "WIDER_train/images"

def write_xml(img, res):
    # img: image path relative to img_path; res: annotation rows "x y w h ..."
    img_dir = os.path.join(img_path, img)
    print(img_dir)
    img = cv2.imread(img_dir)
    if img is None:
        # cv2.imread returns None when the file is missing or unreadable
        print("skip unreadable image: %s" % img_dir)
        return
    shape = img.shape
    img_h, img_w = shape[0], shape[1]
    writer = PascalVocWriter("./", img_dir, (img_h, img_w, 3), localImgPath="./", usrname="wider_face")
    for r in res:
        r = r.strip().split(" ")[:4]
        print(r)
        # WIDER FACE stores x, y, w, h; VOC needs xmin, ymin, xmax, ymax
        x_min, y_min = int(r[0]), int(r[1])
        x_max, y_max = x_min + int(r[2]), y_min + int(r[3])
        writer.verified = True
        writer.addBndBox(x_min, y_min, x_max, y_max, 'face', 0)
    # The xml is written next to the image, with the same base name
    writer.save(targetFile = img_dir[:-4] + '.xml')

with open(txt_label, "r") as f:
    line_list = f.readlines()

for index, line in enumerate(line_list):
    line = line.strip()
    if line[-4:] == ".jpg":
        print("----------------------")
        #print index, line
        # The line after the image path holds the number of faces
        label_number = int(line_list[index + 1].strip())
        print("label number: %d" % label_number)
        #print line_list[index: index + 2 + label_number]
        write_xml(line_list[index].strip(), line_list[index+2: index + 2 + label_number])

xml_writer.py is:

#!/usr/bin/env python
# -*- coding: utf8 -*-
import sys
from xml.etree import ElementTree
from xml.etree.ElementTree import Element, SubElement
from lxml import etree
import codecs

XML_EXT = '.xml'
ENCODE_METHOD = 'utf-8'

class PascalVocWriter:

    def __init__(self, foldername, filename, imgSize, databaseSrc='Unknown', localImgPath=None, usrname=None):
        self.foldername = foldername
        self.filename = filename
        self.databaseSrc = databaseSrc
        self.imgSize = imgSize  # (height, width, depth)
        self.boxlist = []
        self.localImgPath = localImgPath
        self.verified = False
        self.usr = usrname

    def prettify(self, elem):
        """
        Return a pretty-printed XML string for the Element.
        """
        rough_string = ElementTree.tostring(elem, 'utf8')
        root = etree.fromstring(rough_string)
        # lxml indents with two spaces per level; turn each level into a tab
        return etree.tostring(root, pretty_print=True, encoding=ENCODE_METHOD).replace("  ".encode(), "\t".encode())
        # minidom does not support UTF-8
        '''reparsed = minidom.parseString(rough_string)
        return reparsed.toprettyxml(indent="\t", encoding=ENCODE_METHOD)'''

    def genXML(self):
        """
        Return XML root
        """
        # Check conditions
        if self.filename is None or \
                self.foldername is None or \
                self.imgSize is None:
            return None

        top = Element('annotation')
        if self.verified:
            top.set('verified', 'yes')

        user = SubElement(top, 'usr')
        # print('usrname:' , self.usr)
        user.text = str(self.usr)

        folder = SubElement(top, 'folder')
        folder.text = self.foldername

        filename = SubElement(top, 'filename')
        filename.text = self.filename

        if self.localImgPath is not None:
            localImgPath = SubElement(top, 'path')
            localImgPath.text = self.localImgPath

        source = SubElement(top, 'source')
        database = SubElement(source, 'database')
        database.text = self.databaseSrc

        size_part = SubElement(top, 'size')
        width = SubElement(size_part, 'width')
        height = SubElement(size_part, 'height')
        depth = SubElement(size_part, 'depth')
        width.text = str(self.imgSize[1])
        height.text = str(self.imgSize[0])
        if len(self.imgSize) == 3:
            depth.text = str(self.imgSize[2])
        else:
            depth.text = '1'

        segmented = SubElement(top, 'segmented')
        segmented.text = '0'
        return top

    def addBndBox(self, xmin, ymin, xmax, ymax, name, difficult):
        bndbox = {'xmin': xmin, 'ymin': ymin, 'xmax': xmax, 'ymax': ymax}
        bndbox['name'] = name
        bndbox['difficult'] = difficult
        self.boxlist.append(bndbox)

    def appendObjects(self, top):
        for each_object in self.boxlist:
            object_item = SubElement(top, 'object')
            name = SubElement(object_item, 'name')
            try:
                name.text = unicode(each_object['name'])
            except NameError:
                # Py3: NameError: name 'unicode' is not defined
                name.text = each_object['name']
            pose = SubElement(object_item, 'pose')
            pose.text = "Unspecified"
            truncated = SubElement(object_item, 'truncated')
            if int(each_object['ymax']) == int(self.imgSize[0]) or (int(each_object['ymin']) == 1):
                truncated.text = "1"  # max == height or min
            elif (int(each_object['xmax']) == int(self.imgSize[1])) or (int(each_object['xmin']) == 1):
                truncated.text = "1"  # max == width or min
            else:
                truncated.text = "0"
            difficult = SubElement(object_item, 'difficult')
            difficult.text = str(bool(each_object['difficult']) & 1)
            bndbox = SubElement(object_item, 'bndbox')
            xmin = SubElement(bndbox, 'xmin')
            xmin.text = str(each_object['xmin'])
            ymin = SubElement(bndbox, 'ymin')
            ymin.text = str(each_object['ymin'])
            xmax = SubElement(bndbox, 'xmax')
            xmax.text = str(each_object['xmax'])
            ymax = SubElement(bndbox, 'ymax')
            ymax.text = str(each_object['ymax'])

    def save(self, targetFile=None):
        root = self.genXML()
        self.appendObjects(root)
        out_file = None
        if targetFile is None:
            out_file = codecs.open(
                self.filename + XML_EXT, 'w', encoding=ENCODE_METHOD)
        else:
            out_file = codecs.open(targetFile, 'w', encoding=ENCODE_METHOD)

        prettifyResult = self.prettify(root)
        out_file.write(prettifyResult.decode('utf8'))
        out_file.close()

class PascalVocReader:

    def __init__(self, filepath):
        # shapes type:
        # [label, [(x1,y1), (x2,y2), (x3,y3), (x4,y4)], color, color, difficult]
        self.shapes = []
        self.filepath = filepath
        self.verified = False
        try:
            self.parseXML()
        except:
            pass

    def getShapes(self):
        return self.shapes

    def addShape(self, label, bndbox, difficult):
        xmin = int(bndbox.find('xmin').text)
        ymin = int(bndbox.find('ymin').text)
        xmax = int(bndbox.find('xmax').text)
        ymax = int(bndbox.find('ymax').text)
        points = [(xmin, ymin), (xmax, ymin), (xmax, ymax), (xmin, ymax)]
        self.shapes.append((label, points, None, None, difficult))

    def parseXML(self):
        assert self.filepath.endswith(XML_EXT), "Unsupport file format"
        parser = etree.XMLParser(encoding=ENCODE_METHOD)
        xmltree = ElementTree.parse(self.filepath, parser=parser).getroot()
        filename = xmltree.find('filename').text
        try:
            verified = xmltree.attrib['verified']
            if verified == 'yes':
                self.verified = True
        except KeyError:
            self.verified = False

        for object_iter in xmltree.findall('object'):
            bndbox = object_iter.find("bndbox")
            label = object_iter.find('name').text
            # Add chris
            difficult = False
            if object_iter.find('difficult') is not None:
                difficult = bool(int(object_iter.find('difficult').text))
            self.addShape(label, bndbox, difficult)
        return True
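
Before training it is worth spot-checking a few of the generated xml files. The sketch below is one way to do that using only the standard library (no lxml): it reads the image size and the face boxes back out of a VOC-style annotation. The function name read_voc_boxes and the inline example_xml are made up for illustration; in practice you would pass the contents of one of the xml files written next to the images.

```python
import xml.etree.ElementTree as ET


def read_voc_boxes(xml_text):
    """Return ((width, height), [(name, (xmin, ymin, xmax, ymax)), ...])."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    width = int(size.find("width").text)
    height = int(size.find("height").text)
    boxes = []
    for obj in root.findall("object"):
        bndbox = obj.find("bndbox")
        coords = tuple(
            int(bndbox.find(tag).text) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((obj.find("name").text, coords))
    return (width, height), boxes


# Hypothetical annotation with the same tags that PascalVocWriter emits.
example_xml = """<annotation verified="yes">
  <size><width>1024</width><height>768</height><depth>3</depth></size>
  <object>
    <name>face</name>
    <difficult>0</difficult>
    <bndbox><xmin>449</xmin><ymin>330</ymin><xmax>571</xmax><ymax>479</ymax></bndbox>
  </object>
</annotation>"""

size, boxes = read_voc_boxes(example_xml)
print(size, boxes)
```

A quick check like xmin < xmax <= width and ymin < ymax <= height on the returned boxes catches most conversion mistakes.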

2. The darknet object detection framework for yolov3

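Download darknet from its official site (https://pjreddie.com/darknet/) and install it, and make sure it can train on the VOC dataset. Then replace the VOC data with our face data, modify the parameters in the config file, and start training. Here I only trained yolov3-tiny for 140,000 iterations. The model is available at this link: https://pan.baidu.com/s/109LU1GlCA-1o-l0CpZ0ZIw extraction code: mw5i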
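
I did not list the exact changes above, so take the following as a rough sketch of the two things that usually need attention when swapping VOC for a single-class dataset in darknet. First, darknet trains from one txt label file per image, each row being "class x_center y_center width height" normalised by the image size (the stock scripts/voc_label.py does this conversion from the VOC xml). Second, with classes=1 in each [yolo] section, the [convolutional] layer just before it needs filters = (classes + 5) * 3. The helper names voc_to_darknet and yolo_filters are hypothetical.

```python
def voc_to_darknet(img_w, img_h, xmin, ymin, xmax, ymax):
    """Convert a VOC corner box to darknet's normalised centre/size box."""
    x_center = (xmin + xmax) / 2.0 / img_w
    y_center = (ymin + ymax) / 2.0 / img_h
    width = (xmax - xmin) / float(img_w)
    height = (ymax - ymin) / float(img_h)
    return x_center, y_center, width, height


def yolo_filters(num_classes, anchors_per_layer=3):
    """Filters of the conv layer feeding a [yolo] layer: (classes + 5) * anchors."""
    return (num_classes + 5) * anchors_per_layer


# One face box in a 1024x768 image; class id 0 is the only class, 'face'.
box = voc_to_darknet(1024, 768, 449, 330, 571, 479)
label_row = "0 %.6f %.6f %.6f %.6f" % box
print(label_row)

filters = yolo_filters(num_classes=1)
print(filters)  # 18 for a single class
```

The .names file then contains the single line face, and the .data file points at it with classes = 1.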

3. Detecting faces

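We can now use the .weights model trained with darknet to detect faces in images. The model is called through the Python interface; the prediction code is here (https://github.com/zhangming8/face_detect_yolov3/tree/init), with a few small changes to the original source. Running python2 detect_all_img.py runs the predictions. There are three ways to predict: 1. predict on local images directly 2. open the camera with cv2 and predict on its frames 3. predict on a local video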
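
Whichever of the three inputs is used, the detections have to be turned into rectangles before they can be drawn. The sketch below assumes the output format of the detect() helper in darknet's stock python/darknet.py, that is a list of (label, confidence, (center_x, center_y, width, height)) tuples in pixels; detect_all_img.py in the repository may organise this differently. The function name to_corner_boxes, the min_conf threshold, and the fake detections are made up for illustration.

```python
def to_corner_boxes(detections, img_w, img_h, min_conf=0.5):
    """Filter detections by confidence and convert centre boxes to corners."""
    boxes = []
    for label, conf, (cx, cy, w, h) in detections:
        if conf < min_conf:
            continue
        # Clip to the image so the rectangle can be drawn safely.
        x_min = max(0, int(round(cx - w / 2.0)))
        y_min = max(0, int(round(cy - h / 2.0)))
        x_max = min(img_w - 1, int(round(cx + w / 2.0)))
        y_max = min(img_h - 1, int(round(cy + h / 2.0)))
        boxes.append((label, conf, (x_min, y_min, x_max, y_max)))
    return boxes


# Hypothetical detections for a 1024x768 frame.
fake_detections = [
    ("face", 0.92, (510.0, 404.5, 122.0, 149.0)),
    ("face", 0.30, (20.0, 20.0, 60.0, 60.0)),  # dropped: below min_conf
]

corner_boxes = to_corner_boxes(fake_detections, 1024, 768)
print(corner_boxes)
```

Each resulting (x_min, y_min, x_max, y_max) can be passed straight to cv2.rectangle as its two corner points.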

The results are saved in the results folder. Below are the prediction results on local images: