Installing Hadoop on Windows (Natively, Without a Virtual Machine)

Operating system: Windows 10

Hadoop version: hadoop-2.7.3

JDK version: jdk-8u181-windows-x64.exe

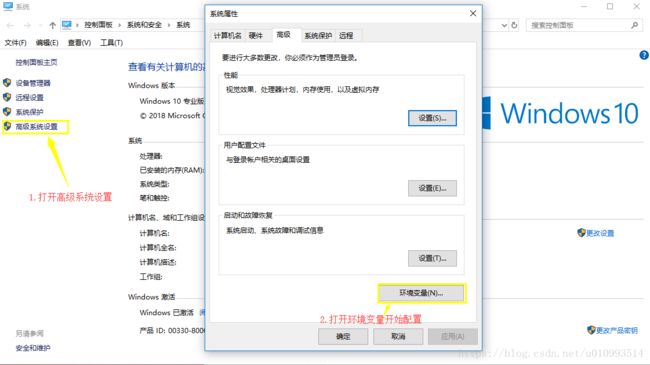

Configuring the Java Environment Variables

Hadoop is written in Java, so running it requires a Java runtime environment.

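Download the JDK and install it by following the installer prompts (not shown here). After installation, open a Command Prompt (run cmd) and enter:

java -version

If version information like the following appears, the installation succeeded:

java version "1.8.0_181"

Java(TM) SE Runtime Environment (build 1.8.0_181-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.181-b13, mixed mode)

Next, configure the Java environment variables manually: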

Variable name: JAVA_HOME

Variable value: C:\Program Files\Java\jdk1.8.0_181

The value is the JDK installation path.

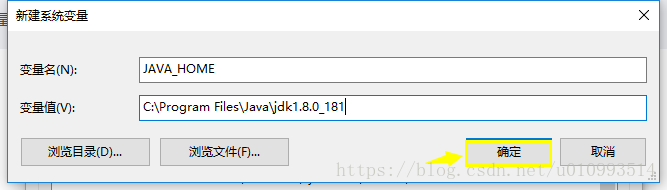

Variable name: CLASSPATH

Variable value: .;%JAVA_HOME%\lib\dt.jar;%JAVA_HOME%\lib\tools.jar;

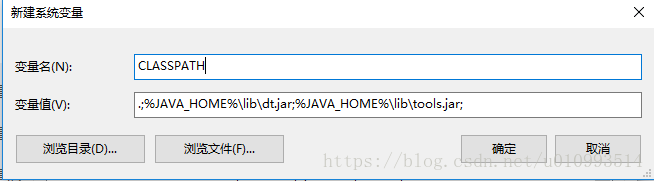

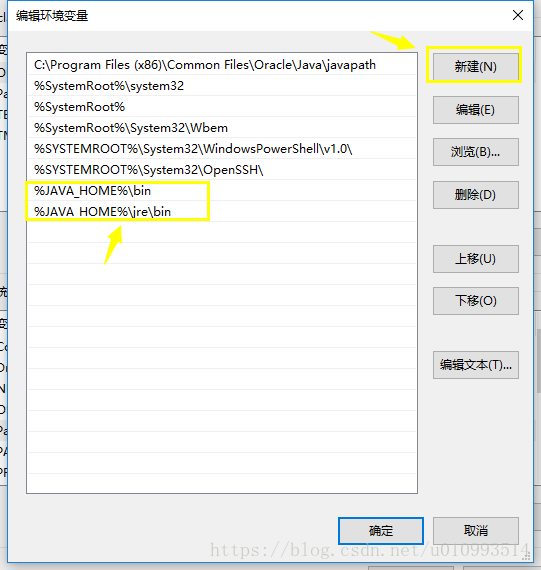

Edit the Path variable and add these new entries:

%JAVA_HOME%\bin

%JAVA_HOME%\jre\bin
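To double-check these settings without reopening the dialog, the Python sketch below inspects a mapping of environment variables (for example dict(os.environ) on the Windows machine) and reports obvious gaps. It is a hypothetical helper, not part of Hadoop or the JDK. It assumes the variable names used above (JAVA_HOME, CLASSPATH, Path), treats names case-insensitively as Windows does, and only imitates cmd.exe's %NAME% expansion.

```python
import re

# Matches cmd-style references such as %JAVA_HOME%.
_VAR_REF = re.compile(r"%([^%]+)%")


def expand_cmd_vars(value: str, env: dict) -> str:
    """Expand %NAME% like cmd.exe (env keys upper-case); unknown names are left as-is."""
    return _VAR_REF.sub(lambda m: env.get(m.group(1).upper(), m.group(0)), value)


def check_java_env(env: dict) -> list:
    """Return human-readable problems found in the Java environment variables."""
    env = {k.upper(): v for k, v in env.items()}  # Windows variable names ignore case
    java_home = env.get("JAVA_HOME")
    if not java_home:
        return ["JAVA_HOME is not set"]
    problems = []
    # Path entries are ';'-separated and may reference %JAVA_HOME%.
    path_entries = {expand_cmd_vars(p, env).rstrip("\\").lower()
                    for p in env.get("PATH", "").split(";") if p}
    for sub in ("bin", "jre\\bin"):
        wanted = java_home.rstrip("\\") + "\\" + sub
        if wanted.lower() not in path_entries:
            problems.append("Path is missing " + wanted)
    # The CLASSPATH value above starts with '.', the current directory.
    if "." not in expand_cmd_vars(env.get("CLASSPATH", ""), env).split(";"):
        problems.append("CLASSPATH does not contain '.'")
    return problems
```

An empty list means the three variables look consistent; a new Command Prompt (or Python process) is needed before changes made in the dialog become visible.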

Configuring Hadoop

1. Download the Hadoop installation package, hadoop-2.7.3:

hadoop-2.7.3.tar.gz

2. Download the Windows configuration files:

https://github.com/PengShuaixin/hadoop-2.7.3_windows.git

3. In the package downloaded from the official site in step 1, replace the bin and etc folders with the ones downloaded from GitHub.
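Step 3 can also be scripted. The Python sketch below is a hypothetical helper that assumes the GitHub repository has been cloned or downloaded locally and has top-level bin and etc folders (check this against your copy). It deletes each target folder in the extracted package and copies the repository's version in its place, so back up the extracted package first. The demo_overlay function only runs the helper in a throwaway temporary directory to show the effect.

```python
import shutil
import tempfile
from pathlib import Path


def overlay_windows_files(hadoop_dir, windows_repo_dir, folders=("bin", "etc")):
    """Replace the given folders of an extracted Hadoop package with the repo's copies."""
    hadoop_dir, windows_repo_dir = Path(hadoop_dir), Path(windows_repo_dir)
    for name in folders:
        src = windows_repo_dir / name
        dst = hadoop_dir / name
        if not src.is_dir():
            raise FileNotFoundError(f"{src} not found in the repository copy")
        if dst.exists():
            shutil.rmtree(dst)      # remove the official folder entirely
        shutil.copytree(src, dst)   # then copy the Windows version in its place
    return [hadoop_dir / name for name in folders]


def demo_overlay():
    """Run the overlay in a temporary sandbox and list what ends up in the package."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "hadoop" / "bin").mkdir(parents=True)
        (root / "hadoop" / "bin" / "original.cmd").write_text("official")
        (root / "repo" / "bin").mkdir(parents=True)
        (root / "repo" / "bin" / "patched.cmd").write_text("windows")
        (root / "repo" / "etc" / "hadoop").mkdir(parents=True)
        overlay_windows_files(root / "hadoop", root / "repo")
        return sorted(p.relative_to(root / "hadoop").as_posix()
                      for p in (root / "hadoop").rglob("*"))
```

On a real machine the call would look like overlay_windows_files(r"D:\hadoop-2.7.3", r"D:\hadoop-2.7.3_windows"), where the second path is a hypothetical location of the downloaded repository.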

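PS: I have also uploaded a fully configured package that can be used right after extraction, so you can skip steps 1 to 3. The download costs no CSDN points, but it still requires a CSDN account; if you don't have one, do steps 1 to 3 yourself. Downloading from Baidu Netdisk is too slow, so I haven't posted a link for it.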

https://download.csdn.net/download/u010993514/10627460

4. Open the \etc\hadoop directory inside the Hadoop folder and edit the following configuration files:

hadoop-env.cmd

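Find the following line (line 26) and change the path to your own JAVA_HOME:

set JAVA_HOME=C:\PROGRA~1\Java\jdk1.8.0_181

Note: a folder name that contains spaces (such as Program Files) must be replaced by its 8.3 short name (here PROGRA~1); otherwise the scripts report an error.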

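The following blog post explains how to fix common errors; refer to it if you run into errors after installation:

Fixing Common Errors When Installing Hadoop on Windows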
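The space problem is easy to check mechanically. The Python sketch below is a hypothetical helper: it reads the text of hadoop-env.cmd, takes the last set JAVA_HOME=... line (assuming no conditional logic, the last assignment in a batch file wins), and flags a value that still contains a space. To look up the short name of a folder on your own machine, dir /x lists 8.3 names next to the long names.

```python
import re

# Matches lines such as: set JAVA_HOME=C:\PROGRA~1\Java\jdk1.8.0_181
_SET_JAVA_HOME = re.compile(r"^\s*set\s+JAVA_HOME=(.*)$", re.IGNORECASE | re.MULTILINE)


def java_home_from_env_cmd(text: str):
    """Return the last JAVA_HOME value assigned in hadoop-env.cmd text, or None."""
    values = _SET_JAVA_HOME.findall(text)
    return values[-1].strip() if values else None  # strip() also drops a trailing \r


def has_space(java_home) -> bool:
    """True if the configured path contains a space, which this tutorial warns against."""
    return java_home is not None and " " in java_home
```

For example, has_space(java_home_from_env_cmd(Path(r"D:\hadoop-2.7.3\etc\hadoop\hadoop-env.cmd").read_text())) should be False after the edit (Path from pathlib; the install path is the example one used in this tutorial).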

hdfs-site.xml

Add the following properties (shown as name: value):

dfs.replication: 1

dfs.namenode.name.dir: file:/D:/hadoopdata/namenode

dfs.datanode.data.dir: file:/D:/hadoopdata/datanode

NameNode data path:

D:/hadoopdata/namenode

DataNode data path:

D:/hadoopdata/datanode

Adjust these paths to suit your machine.

core-site.xml

fs.defaultFS: hdfs://localhost:9000

mapred-site.xml

mapreduce.framework.name: yarn

yarn-site.xml

yarn.nodemanager.aux-services: mapreduce_shuffle

yarn.nodemanager.aux-services.mapreduce_shuffle.class: org.apache.hadoop.mapred.ShuffleHandler
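Each of these files is a Hadoop configuration element containing property elements, each with a name and a value, so the settings above can be generated instead of typed. The Python sketch below (Python 3.9+ for ET.indent) builds the four files from a dictionary of this tutorial's values and can read them back for checking. The helper names are hypothetical. The YARN class key is derived from the service name following the yarn.nodemanager.aux-services.SERVICE.class pattern, so it appears here as yarn.nodemanager.aux-services.mapreduce_shuffle.class.

```python
import xml.etree.ElementTree as ET


def aux_service_class_key(service: str) -> str:
    """Key that names the handler class of a NodeManager auxiliary service."""
    return f"yarn.nodemanager.aux-services.{service}.class"


# Values from this tutorial; adjust the D:/hadoopdata paths to your machine.
SITE_FILES = {
    "core-site.xml": {"fs.defaultFS": "hdfs://localhost:9000"},
    "hdfs-site.xml": {
        "dfs.replication": "1",
        "dfs.namenode.name.dir": "file:/D:/hadoopdata/namenode",
        "dfs.datanode.data.dir": "file:/D:/hadoopdata/datanode",
    },
    "mapred-site.xml": {"mapreduce.framework.name": "yarn"},
    "yarn-site.xml": {
        "yarn.nodemanager.aux-services": "mapreduce_shuffle",
        aux_service_class_key("mapreduce_shuffle"): "org.apache.hadoop.mapred.ShuffleHandler",
    },
}


def render_site_xml(props: dict) -> str:
    """Render name/value pairs as a Hadoop configuration document."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    ET.indent(root)  # pretty-print with two-space indentation (Python 3.9+)
    return '<?xml version="1.0"?>\n' + ET.tostring(root, encoding="unicode") + "\n"


def read_site_xml(text: str) -> dict:
    """Parse a *-site.xml document back into a name -> value dict."""
    root = ET.fromstring(text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}
```

Writing a file is then a one-liner such as Path(r"D:\hadoop-2.7.3\etc\hadoop\core-site.xml").write_text(render_site_xml(SITE_FILES["core-site.xml"])) (Path from pathlib), which overwrites any existing content.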

5. Copy hadoop.dll from the bin folder of the Hadoop directory to C:\Windows\System32.

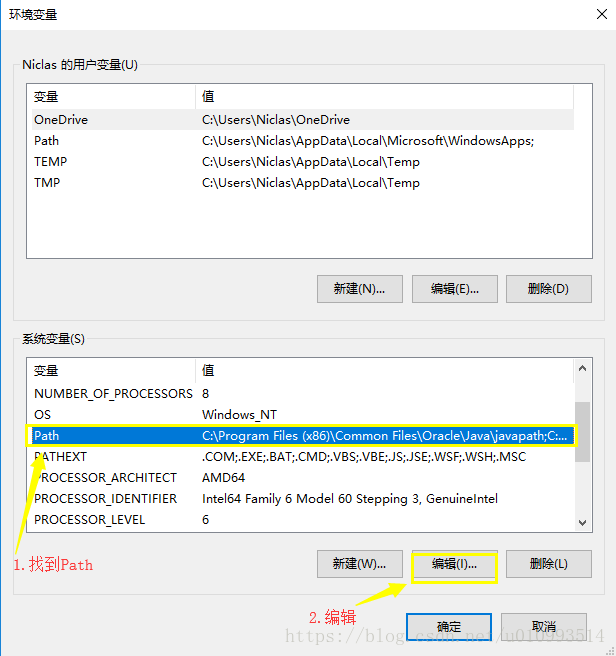

Configuring the Hadoop Environment Variables

The procedure is the same as for the Java environment variables above.

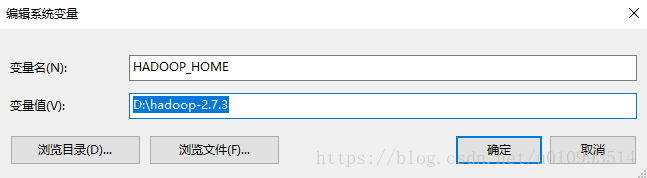

Create a new system environment variable:

Variable name: HADOOP_HOME

Variable value: D:\hadoop-2.7.3

(Set the value to your actual installation directory.)

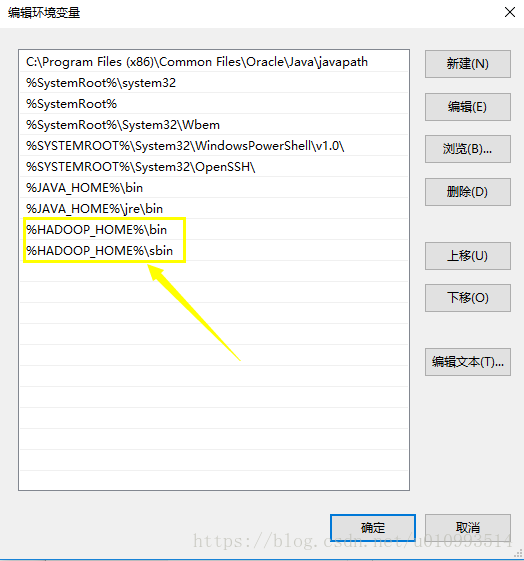

Edit the Path variable and add:

%HADOOP_HOME%\bin

%HADOOP_HOME%\sbin
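A quick way to confirm that HADOOP_HOME points at the right folder is to check for the files the later steps rely on. The Python sketch below is hypothetical, and its file list is an assumption drawn from this tutorial (the .cmd launchers in bin and sbin, the hadoop.dll from the Windows bin folder, and the edited configuration files); adjust it to your layout. The exists parameter is there only so the check can be exercised without a real installation.

```python
from pathlib import Path

# Files this tutorial relies on, relative to HADOOP_HOME (an assumed list; adjust as needed).
EXPECTED_FILES = [
    "bin/hadoop.cmd",
    "bin/hdfs.cmd",
    "bin/hadoop.dll",
    "sbin/start-all.cmd",
    "sbin/stop-all.cmd",
    "etc/hadoop/hadoop-env.cmd",
    "etc/hadoop/hdfs-site.xml",
]


def missing_hadoop_files(hadoop_home, exists=Path.is_file):
    """Return the expected files that are not present under hadoop_home."""
    home = Path(hadoop_home)
    return [rel for rel in EXPECTED_FILES if not exists(home / rel)]
```

On the Windows machine, missing_hadoop_files(os.environ["HADOOP_HOME"]) should return an empty list once steps 1 to 5 are done.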

Testing the Environment Variables

C:\Users\Niclas>hadoop

Usage: hadoop [--config confdir] [--loglevel loglevel] COMMAND

where COMMAND is one of:

fs run a generic filesystem user client

version print the version

jar run a jar file

note: please use "yarn jar" to launch YARN applications, not this command.

checknative [-a|-h] check native hadoop and compression libraries availability

distcp copy file or directories recursively

archive -archiveName NAME -p * create a hadoop archive

classpath prints the class path needed to get the Hadoop jar and the required libraries

credential interact with credential providers

key manage keys via the KeyProvider

daemonlog get/set the log level for each daemon

or

CLASSNAME run the class named CLASSNAME

Most commands print help when invoked w/o parameters.

C:\Users\Niclas>hadoop -version

java version "1.8.0_181"

Java(TM) SE Runtime Environment (build 1.8.0_181-b13)

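Java HotSpot(TM) 64-Bit Server VM (build 25.181-b13, mixed mode)

If entering hadoop and hadoop -version in a command window prints output like the above, the Hadoop environment variables are configured correctly. (hadoop -version reports the JVM that Hadoop runs on; hadoop version, without the dash, prints the Hadoop version.)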
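Because the hadoop -version output above is the JVM's version banner, it can be parsed to confirm which Java release Hadoop picked up. The Python sketch below is a hypothetical helper. It understands the pre-Java 9 scheme, where "1.8.0_181" means Java 8, and newer strings such as "11.0.2", where the major version comes first.

```python
import re

# Matches the quoted version, e.g. java version "1.8.0_181" or openjdk version "11.0.2".
_VERSION = re.compile(r'version "([^"]+)"')


def java_version(output: str):
    """Return (major, full_version_string) from java -version style output, or None."""
    m = _VERSION.search(output)
    if not m:
        return None
    full = m.group(1)
    nums = re.findall(r"\d+", full)
    if not nums:
        return None
    # Before Java 9 the scheme was 1.<major>.0_<update>; afterwards the major comes first.
    major = int(nums[1]) if nums[0] == "1" and len(nums) > 1 else int(nums[0])
    return major, full
```

Applied to the output above, java_version returns (8, "1.8.0_181").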

Initializing HDFS

Run the following command:

hdfs namenode -format

Output like the following is printed:

18/10/01 11:20:24 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = DESKTOP-28UR3P0/192.168.17.1
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 2.7.3
STARTUP_MSG: classpath = D:\hadoop-2.7.3\etc\hadoop;D:\hadoop-2.7.3\share\hadoop\common\lib\activation-1.1.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\apacheds-i18n-2.0.0-M15.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\apacheds-kerberos-codec-2.0.0-M15.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\api-asn1-api-1.0.0-M20.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\api-util-1.0.0-M20.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\asm-3.2.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\avro-1.7.4.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-beanutils-1.7.0.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-beanutils-core-1.8.0.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-cli-1.2.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-codec-1.4.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-collections-3.2.2.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-compress-1.4.1.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-configuration-1.6.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-digester-1.8.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-httpclient-3.1.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-io-2.4.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-lang-2.6.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-logging-1.1.3.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-math3-3.1.1.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\commons-net-3.1.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\curator-client-2.7.1.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\curator-framework-2.7.1.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\curator-recipes-2.7.1.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\gson-2.2.4.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\guava-11.0.2.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\hadoop-annotations-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\hadoop-auth-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\hamcrest-core-1.3.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\htrace-core-3.1.0-incubating.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\httpclient-4.2.5.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\httpcore-4.2.5.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jackson-core-asl-1.9.13.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jackson-jaxrs-1.9.13.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jackson-mapper-asl-1.9.13.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jackson-xc-1.9.13.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\java-xmlbuilder-0.4.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jaxb-api-2.2.2.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jaxb-impl-2.2.3-1.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jersey-core-1.9.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jersey-json-1.9.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jersey-server-1.9.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jets3t-0.9.0.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jettison-1.1.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jetty-6.1.26.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jetty-util-6.1.26.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jsch-0.1.42.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jsp-api-2.1.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\jsr305-3.0.0.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\junit-4.11.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\log4j-1.2.17.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\mockito-all-1.8.5.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\netty-3.6.2.Final.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\paranamer-2.3.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\protobuf-java-2.5.0.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\servlet-api-2.5.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\slf4j-api-1.7.10.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\slf4j-log4j12-1.7.10.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\snappy-java-1.0.4.1.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\stax-api-1.0-2.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\xmlenc-0.52.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\xz-1.0.jar;D:\hadoop-2.7.3\share\hadoop\common\lib\zookeeper-3.4.6.jar;D:\hadoop-2.7.3\share\hadoop\common\hadoop-common-2.7.3-tests.jar;D:\hadoop-2.7.3\share\hadoop\common\hadoop-common-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\common\hadoop-nfs-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\hdfs;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\asm-3.2.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\commons-cli-1.2.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\commons-codec-1.4.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\commons-daemon-1.0.13.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\commons-io-2.4.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\commons-lang-2.6.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\commons-logging-1.1.3.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\guava-11.0.2.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\htrace-core-3.1.0-incubating.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\jackson-core-asl-1.9.13.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\jackson-mapper-asl-1.9.13.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\jersey-core-1.9.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\jersey-server-1.9.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\jetty-6.1.26.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\jetty-util-6.1.26.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\jsr305-3.0.0.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\leveldbjni-all-1.8.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\log4j-1.2.17.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\netty-3.6.2.Final.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\netty-all-4.0.23.Final.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\protobuf-java-2.5.0.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\servlet-api-2.5.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\xercesImpl-2.9.1.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\xml-apis-1.3.04.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\lib\xmlenc-0.52.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\hadoop-hdfs-2.7.3-tests.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\hadoop-hdfs-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\hdfs\hadoop-hdfs-nfs-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\activation-1.1.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\aopalliance-1.0.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\asm-3.2.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\commons-cli-1.2.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\commons-codec-1.4.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\commons-collections-3.2.2.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\commons-compress-1.4.1.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\commons-io-2.4.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\commons-lang-2.6.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\commons-logging-1.1.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\guava-11.0.2.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\guice-3.0.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\guice-servlet-3.0.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jackson-core-asl-1.9.13.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jackson-jaxrs-1.9.13.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jackson-mapper-asl-1.9.13.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jackson-xc-1.9.13.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\javax.inject-1.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jaxb-api-2.2.2.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jaxb-impl-2.2.3-1.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jersey-client-1.9.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jersey-core-1.9.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jersey-guice-1.9.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jersey-json-1.9.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jersey-server-1.9.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jettison-1.1.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jetty-6.1.26.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jetty-util-6.1.26.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\jsr305-3.0.0.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\leveldbjni-all-1.8.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\log4j-1.2.17.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\netty-3.6.2.Final.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\protobuf-java-2.5.0.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\servlet-api-2.5.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\stax-api-1.0-2.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\xz-1.0.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\zookeeper-3.4.6-tests.jar;D:\hadoop-2.7.3\share\hadoop\yarn\lib\zookeeper-3.4.6.jar;D:\hadoop-2.7.3\share\hadoop\yarn\hadoop-yarn-api-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\hadoop-yarn-applications-distributedshell-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\hadoop-yarn-applications-unmanaged-am-launcher-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\hadoop-yarn-client-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\hadoop-yarn-common-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\hadoop-yarn-registry-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\hadoop-yarn-server-applicationhistoryservice-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\hadoop-yarn-server-common-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\hadoop-yarn-server-nodemanager-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\hadoop-yarn-server-resourcemanager-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\hadoop-yarn-server-sharedcachemanager-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\hadoop-yarn-server-tests-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\yarn\hadoop-yarn-server-web-proxy-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\aopalliance-1.0.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\asm-3.2.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\avro-1.7.4.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\commons-compress-1.4.1.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\commons-io-2.4.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\guice-3.0.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\guice-servlet-3.0.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\hadoop-annotations-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\hamcrest-core-1.3.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\jackson-core-asl-1.9.13.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\jackson-mapper-asl-1.9.13.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\javax.inject-1.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\jersey-core-1.9.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\jersey-guice-1.9.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\jersey-server-1.9.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\junit-4.11.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\leveldbjni-all-1.8.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\log4j-1.2.17.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\netty-3.6.2.Final.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\paranamer-2.3.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\protobuf-java-2.5.0.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\snappy-java-1.0.4.1.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\lib\xz-1.0.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\hadoop-mapreduce-client-app-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\hadoop-mapreduce-client-common-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\hadoop-mapreduce-client-core-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\hadoop-mapreduce-client-hs-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\hadoop-mapreduce-client-hs-plugins-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\hadoop-mapreduce-client-jobclient-2.7.3-tests.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\hadoop-mapreduce-client-jobclient-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\hadoop-mapreduce-client-shuffle-2.7.3.jar;D:\hadoop-2.7.3\share\hadoop\mapreduce\hadoop-mapreduce-examples-2.7.3.jar
STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r baa91f7c6bc9cb92be5982de4719c1c8af91ccff; compiled by 'root' on 2016-08-18T01:41Z
STARTUP_MSG: java = 1.8.0_181
************************************************************/
18/10/01 11:20:24 INFO namenode.NameNode: createNameNode [-format]
Formatting using clusterid: CID-3518aff7-1d9d-48a2-87f5-451ff0b750a5
18/10/01 11:20:25 INFO namenode.FSNamesystem: No KeyProvider found.
18/10/01 11:20:25 INFO namenode.FSNamesystem: fsLock is fair:true
18/10/01 11:20:25 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
18/10/01 11:20:25 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
18/10/01 11:20:25 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
18/10/01 11:20:25 INFO blockmanagement.BlockManager: The block deletion will start around 2018 Oct 01 11:20:25
18/10/01 11:20:25 INFO util.GSet: Computing capacity for map BlocksMap
18/10/01 11:20:25 INFO util.GSet: VM type = 64-bit
18/10/01 11:20:25 INFO util.GSet: 2.0% max memory 889 MB = 17.8 MB
18/10/01 11:20:25 INFO util.GSet: capacity = 2^21 = 2097152 entries
18/10/01 11:20:25 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
18/10/01 11:20:25 INFO blockmanagement.BlockManager: defaultReplication = 1
18/10/01 11:20:25 INFO blockmanagement.BlockManager: maxReplication = 512
18/10/01 11:20:25 INFO blockmanagement.BlockManager: minReplication = 1
18/10/01 11:20:25 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
18/10/01 11:20:25 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
18/10/01 11:20:25 INFO blockmanagement.BlockManager: encryptDataTransfer = false
18/10/01 11:20:25 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
18/10/01 11:20:25 INFO namenode.FSNamesystem: fsOwner = Niclas (auth:SIMPLE)
18/10/01 11:20:25 INFO namenode.FSNamesystem: supergroup = supergroup
18/10/01 11:20:25 INFO namenode.FSNamesystem: isPermissionEnabled = true
18/10/01 11:20:25 INFO namenode.FSNamesystem: HA Enabled: false
18/10/01 11:20:25 INFO namenode.FSNamesystem: Append Enabled: true
18/10/01 11:20:26 INFO util.GSet: Computing capacity for map INodeMap
18/10/01 11:20:26 INFO util.GSet: VM type = 64-bit
18/10/01 11:20:26 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB
18/10/01 11:20:26 INFO util.GSet: capacity = 2^20 = 1048576 entries
18/10/01 11:20:26 INFO namenode.FSDirectory: ACLs enabled? false
18/10/01 11:20:26 INFO namenode.FSDirectory: XAttrs enabled? true
18/10/01 11:20:26 INFO namenode.FSDirectory: Maximum size of an xattr: 16384
18/10/01 11:20:26 INFO namenode.NameNode: Caching file names occuring more than 10 times
18/10/01 11:20:26 INFO util.GSet: Computing capacity for map cachedBlocks
18/10/01 11:20:26 INFO util.GSet: VM type = 64-bit
18/10/01 11:20:26 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB
18/10/01 11:20:26 INFO util.GSet: capacity = 2^18 = 262144 entries
18/10/01 11:20:26 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
18/10/01 11:20:26 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
18/10/01 11:20:26 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
18/10/01 11:20:26 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10
18/10/01 11:20:26 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10
18/10/01 11:20:26 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25
18/10/01 11:20:26 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
18/10/01 11:20:26 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
18/10/01 11:20:26 INFO util.GSet: Computing capacity for map NameNodeRetryCache
18/10/01 11:20:26 INFO util.GSet: VM type = 64-bit
18/10/01 11:20:26 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB
18/10/01 11:20:26 INFO util.GSet: capacity = 2^15 = 32768 entries
18/10/01 11:20:26 INFO namenode.FSImage: Allocated new BlockPoolId: BP-301588983-192.168.17.1-1538364026657
18/10/01 11:20:26 INFO common.Storage: Storage directory D:\hadoopdata\namenode has been successfully formatted.
18/10/01 11:20:26 INFO namenode.FSImageFormatProtobuf: Saving image file D:\hadoopdata\namenode\current\fsimage.ckpt_0000000000000000000 using no compression
18/10/01 11:20:26 INFO namenode.FSImageFormatProtobuf: Image file D:\hadoopdata\namenode\current\fsimage.ckpt_0000000000000000000 of size 353 bytes saved in 0 seconds.
18/10/01 11:20:27 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
18/10/01 11:20:27 INFO util.ExitUtil: Exiting with status 0
18/10/01 11:20:27 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at DESKTOP-28UR3P0/192.168.17.1
************************************************************/

The following line indicates that initialization succeeded:

18/10/01 11:20:26 INFO common.Storage: Storage directory D:\hadoopdata\namenode has been successfully formatted.
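When formatting is scripted, the same check can be automated. The Python sketch below is a hypothetical helper that scans a captured log for the success line above and for the "Exiting with status 0" line near the end of the output. When capturing the log yourself, redirect stderr too (hdfs namenode -format 2>&1), since Hadoop's console logging usually goes there.

```python
import re

# Captures the directory from: Storage directory <dir> has been successfully formatted.
_FORMATTED = re.compile(r"Storage directory (.+?) has been successfully formatted")


def formatted_dirs(log_text: str) -> list:
    """Return every storage directory the log reports as successfully formatted."""
    return _FORMATTED.findall(log_text)


def format_succeeded(log_text: str) -> bool:
    """True if at least one directory was formatted and the tool exited with status 0."""
    return bool(formatted_dirs(log_text)) and "Exiting with status 0" in log_text
```

For the log above, formatted_dirs returns ["D:\\hadoopdata\\namenode"] (Python's representation of the single path D:\hadoopdata\namenode).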

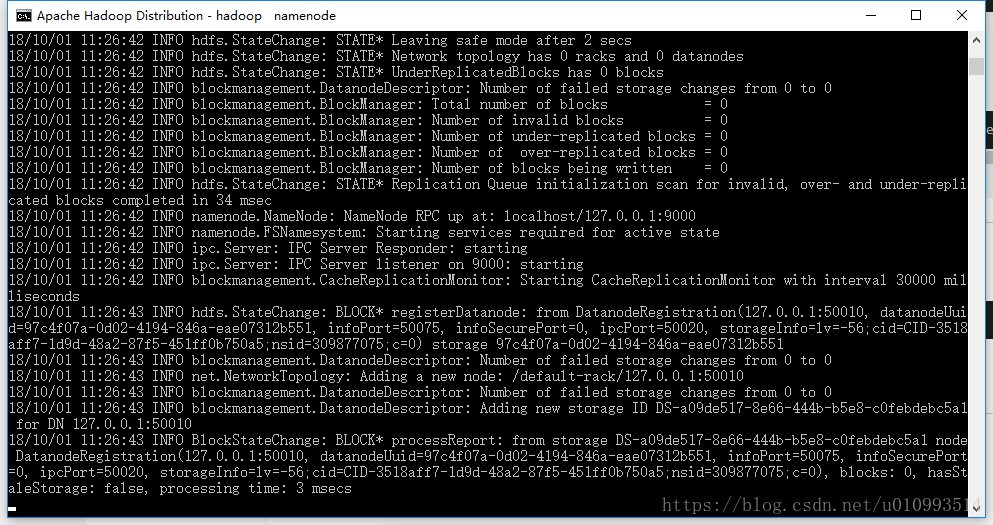

Starting Hadoop


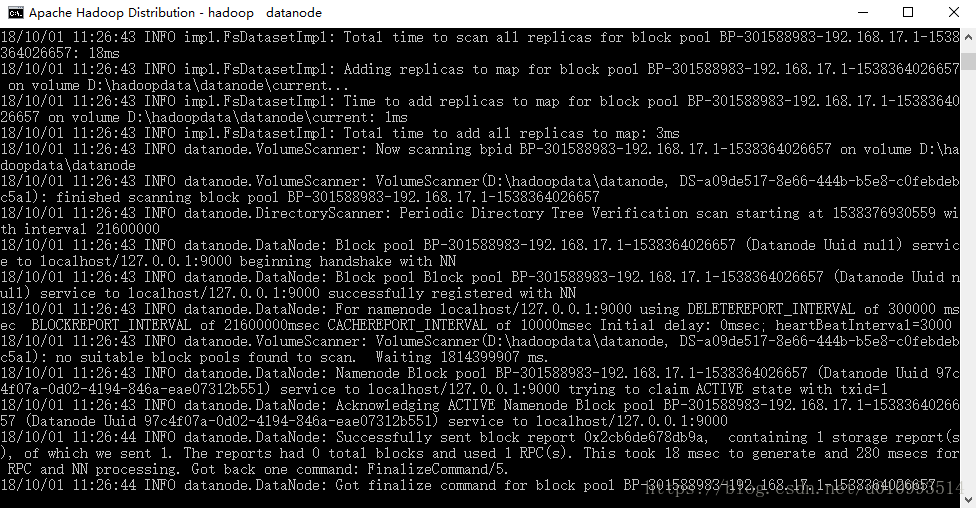

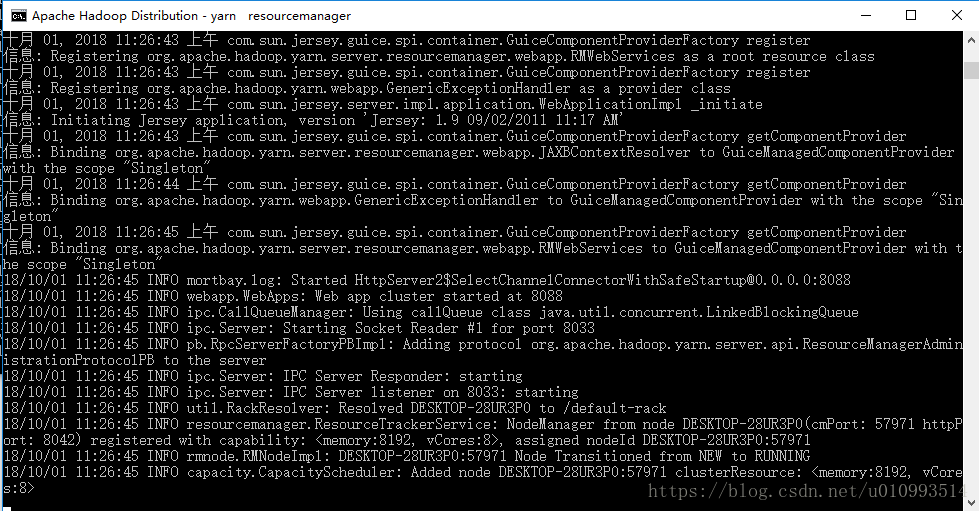

Run start-all. If four console windows open, one for each of the following processes, Hadoop has started successfully:

namenode

datanode

resourcemanager

nodemanager

The jps command lists the running Java processes:

C:\Users\Niclas>jps

9392 DataNode

30148 NameNode

35156 Jps

5284 ResourceManager

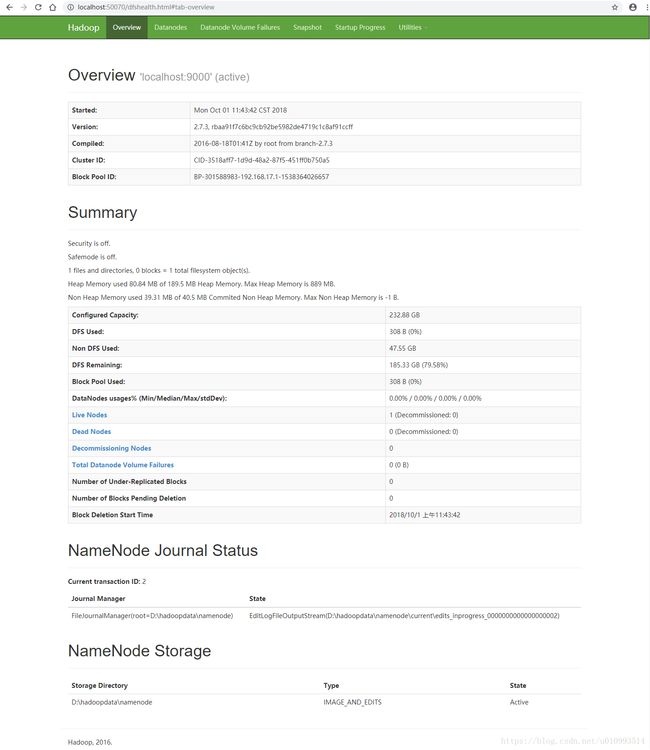

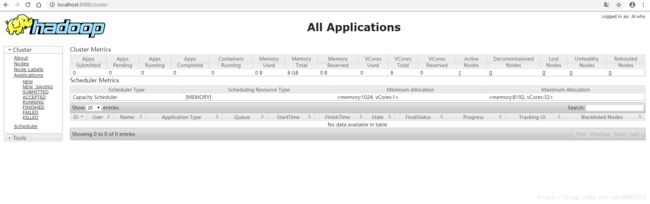

31324 NodeManager进入网页查看:

localhost:50070 (HDFS NameNode web UI)

localhost:8088 (YARN ResourceManager web UI)
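The jps check from earlier can be scripted as well. The Python sketch below is a hypothetical helper that parses jps output (one PID and class name per line) and reports which of the four daemons started by start-all are missing. On the Windows machine the text could come from subprocess.run(["jps"], capture_output=True, text=True).stdout, assuming the JDK's bin folder is on Path.

```python
# The four daemons start-all is expected to launch in this single-node setup.
EXPECTED_DAEMONS = {"NameNode", "DataNode", "ResourceManager", "NodeManager"}


def running_processes(jps_output: str) -> dict:
    """Map process name -> PID from jps lines such as '9392 DataNode'."""
    procs = {}
    for line in jps_output.splitlines():
        parts = line.split()
        # Skip prompts, blank lines and "-- process information unavailable" entries.
        if len(parts) == 2 and parts[0].isdigit():
            procs[parts[1]] = int(parts[0])
    return procs


def missing_daemons(jps_output: str) -> set:
    """Return the expected daemons that do not appear in the jps output."""
    return EXPECTED_DAEMONS - set(running_processes(jps_output))
```

With the jps listing shown above, missing_daemons returns an empty set; an absent DataNode, for instance, would show up as {"DataNode"}.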

Stopping Hadoop

Run stop-all. Example output:

C:\Users\Niclas>stop-all

This script is Deprecated. Instead use stop-dfs.cmd and stop-yarn.cmd

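SUCCESS: Sent termination signal to the process with PID 15300.

SUCCESS: Sent termination signal to the process with PID 31276.

stopping yarn daemons

SUCCESS: Sent termination signal to the process with PID 7032.

SUCCESS: Sent termination signal to the process with PID 32596.

INFO: No tasks running with the specified criteria.

Fixing Common Errors

Fixing Common Errors When Installing Hadoop on Windows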