Notes on Scraping a Novel with Go

Notes on a Targeted Crawler in Go

- Original requirement
- Analysis
- Hands-on
  - Workers
    - BookerWorker
    - DirectoryWorker
    - ChapterWorker
    - PageWorker
  - main.go
- Execution result

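Original requirement

The original requirement: download the novel at https://m.999xs.com/files/article/html/69/69208/index.html

Reading it online is inconvenient, and the browser also shows forced ads.

ps: During the hands-on run, the crawler sent abnormal traffic to m.999xs.com. Sorry for the server load and any problems this caused.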

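Analysis

Opening the link shows that the book is split into many chapters, and the table of contents lists 20 chapters per page.

Each chapter is in turn split into several sub-pages.

The hierarchy is as follows:

page1, page2, … -> Chapter

Chapter1, Chapter2, … (≤ 20) -> Directory

Directory1, Directory2, … -> Book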
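This three-level hierarchy maps onto the nested slice type `[][][]byte` used for BookWorker.Contents: the outer index is the directory page, the inner index is the chapter's position on that page. A minimal sketch, using a hypothetical `item` type that mirrors PageContentItem, shows why indexing by position is enough to restore reading order when chapters arrive out of order:

```go
package main

import "fmt"

// item is a hypothetical stand-in for PageContentItem:
// one finished chapter plus its position in the book.
type item struct {
	Content    []byte
	PageNum    int // index of the directory page (Book -> Directory)
	SubPageNum int // index of the chapter on that page (Directory -> Chapter)
}

// assemble places every chapter into book[PageNum][SubPageNum], growing the
// slices as needed, then concatenates the slots in index order.
func assemble(items []item) []byte {
	var book [][][]byte
	for _, it := range items {
		for len(book) <= it.PageNum {
			book = append(book, nil)
		}
		for len(book[it.PageNum]) <= it.SubPageNum {
			book[it.PageNum] = append(book[it.PageNum], nil)
		}
		book[it.PageNum][it.SubPageNum] = it.Content
	}
	var out []byte
	for _, dir := range book {
		for _, chapter := range dir {
			out = append(out, chapter...) // empty slots add nothing
		}
	}
	return out
}

func main() {
	// Chapters delivered out of order, as concurrent workers would deliver them.
	items := []item{
		{[]byte("C"), 1, 0},
		{[]byte("B"), 0, 1},
		{[]byte("A"), 0, 0},
	}
	fmt.Println(string(assemble(items))) // ABC
}
```

Walking the slots by index rather than in arrival order is what lets the chapter workers run concurrently without scrambling the book.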

Hands-on

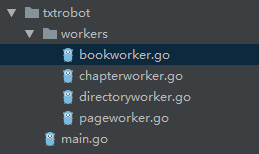

Workers

Create a workers directory and, following the hierarchy from the analysis, add the following four workers.

BookerWorker

```go
package workers

import (
	"io"
	"log"
	"net/http"
	"os"
	"regexp"
	"strings"
)

/*
Booker

Responsibilities:
 1. Get the title; the book title becomes the name of the output text file.
 2. Initialize the DirectoryWorkers.
 3. Receive the content parsed by the DirectoryWorkers and PageWorkers and write the text file.
*/

/*
Layout of BookWorker.Contents:

[]byte     ========> Chapter   ========> text of one chapter; a chapter spans several pages merged in the chapter: chapter = chapter title + page 1 + page 2 + ...
[][]byte   ========> Directory ========> one table-of-contents page, [chapter 1, chapter 2, ...]
[][][]byte ========> Book      ========> the whole book, [directory 1, directory 2, ...]
*/

type BookWorker struct {
	DefaultUrl      string               // initial URL, e.g. /files/article/html/69/69208/index.html
	Contents        [][][]byte           // collected chapter texts, indexed [directory][chapter]
	FileName        string               // book title, used as the file name
	PageContentChan chan PageContentItem // receives finished chapters
	Host            string
}

type PageContentItem struct {
	Content    []byte
	PageNum    int // index of the directory page
	SubPageNum int // index of the chapter within that directory page
}

func (b *BookWorker) Run() error {
	defer log.Println("booker worker down")

	// Start the receiver before any chapter can be sent.
	downchan := make(chan int)
	go b.ReceiveContents(downchan)

	resp, err := http.DefaultClient.Get(b.Host + b.DefaultUrl)
	if err != nil {
		return err
	}
	buf, err := io.ReadAll(resp.Body)
	resp.Body.Close()
	if err != nil {
		return err
	}

	b.getTitle(buf)

	if err := b.StartDirectoryWorker(buf, b.DefaultUrl); err != nil {
		return err
	}

	// Every directory worker has returned, so nothing sends any more:
	// close the channel and wait until the receiver has written the file.
	close(b.PageContentChan)
	<-downchan
	return nil
}

func (b *BookWorker) getTitle(contents []byte) {
	// The title is the text after id="bqgmb_h1"> up to the next tag.
	m := regexp.MustCompile(`id="bqgmb_h1">([^<]*)`).FindSubmatch(contents)
	title := ""
	if m != nil {
		title = strings.TrimSpace(string(m[1]))
	}
	b.FileName = title + ".txt"
	log.Println("get book title:", b.FileName)
}

func (b *BookWorker) StartDirectoryWorker(currentContents []byte, url string) error {
	log.Println("start directory workers")
	defer log.Println("directory workers all done")

	for directorynum := 0; currentContents != nil; directorynum++ {
		d := DirectoryWorker{
			IndexUrl:     url,
			Contents:     currentContents,
			Host:         b.Host,
			DirectoryNum: directorynum,
		}
		d.Run(b.PageContentChan) // blocks until every chapter on this page is sent

		nexturl, err := d.GetNextDirectoryUrl()
		if err != nil {
			return err
		}
		if nexturl == "#" || nexturl == "" {
			break // last directory page
		}

		// The next-page link is relative (e.g. index_27.html).
		nexturl = strings.ReplaceAll(b.DefaultUrl, "index.html", nexturl)
		resp, err := http.DefaultClient.Get(b.Host + nexturl)
		if err != nil {
			return err
		}
		buf, err := io.ReadAll(resp.Body)
		resp.Body.Close()
		if err != nil {
			return err
		}
		url = nexturl
		currentContents = buf
	}
	return nil
}

func (b *BookWorker) ReceiveContents(downchan chan int) {
	log.Println("start ReceiveContents")
	defer log.Println("ReceiveContents done")

	for item := range b.PageContentChan {
		// Each [directory][chapter] slot must be filled exactly once.
		if b.Contents[item.PageNum] != nil && b.Contents[item.PageNum][item.SubPageNum] != nil {
			log.Fatalf("duplicate chapter %d/%d: %s", item.PageNum, item.SubPageNum, item.Content)
		}
		pagecont := b.Contents[item.PageNum]
		if pagecont == nil {
			pagecont = make([][]byte, 100) // room for up to 100 chapters per directory page
		}
		pagecont[item.SubPageNum] = item.Content
		b.Contents[item.PageNum] = pagecont
	}

	b.Flush()
	downchan <- 1
}

func (b *BookWorker) Flush() {
	// os.Create truncates an existing file, so no separate Remove is needed.
	file, err := os.Create(b.FileName)
	if err != nil {
		panic(err.Error())
	}
	defer file.Close()

	// Write chapters in [directory][chapter] order; empty slots write nothing.
	for _, c := range b.Contents {
		for _, sc := range c {
			if n, err := file.Write(sc); err != nil {
				log.Fatal(err, n)
			}
		}
	}
}
```

DirectoryWorker

```go
package workers

import (
	"log"
	"regexp"
	"strings"
	"time"
)

/*
Directory: one page of the paginated table of contents

Responsibilities:
 1. Get the chapter links on this page.
 2. Initialize a ChapterWorker for each of them.
*/

type DirectoryWorker struct {
	IndexUrl     string
	Contents     []byte
	Host         string
	DirectoryNum int
}

func getPageUrls(contents []byte) []string {
	// The chapter list follows the text 正文 ("main text").
	bytes := regexp.MustCompile(`正文[\S\s]*`).Find(contents)

	// Capture up to 100 href values after it; group 1 is the bare URL.
	lis := regexp.MustCompile(`href="([\w=\./]*)"`).FindAllSubmatch(bytes, 100)

	pages := make([]string, len(lis))
	for index, li := range lis {
		pages[index] = string(li[1])
	}
	return pages
}

func (d *DirectoryWorker) Run(bookcontentchan chan PageContentItem) {
	log.Println("directory worker run", d.IndexUrl)
	defer log.Println("directory worker down", d.IndexUrl)

	pages := getPageUrls(d.Contents)
	subdownchan := make(chan int)

	// One goroutine per chapter, about 20 per directory page.
	for index, p := range pages {
		// Pass index and p as arguments so each goroutine gets its own copy;
		// before Go 1.22 loop variables are shared across iterations.
		go func(index int, pu string) {
			chapter := ChapterWorker{
				Host:            d.Host,
				PageNum:         d.DirectoryNum,
				SubPageNum:      index,
				DownChan:        subdownchan,
				BookContentChan: bookcontentchan,
				IndexUrl:        pu,
			}
			chapter.Run() // signals on subdownchan when it finishes
		}(index, p)
		time.Sleep(time.Millisecond * 10) // stagger the requests a little
	}

	// Wait for every chapter worker to finish.
	for range pages {
		<-subdownchan
	}
}

func (d *DirectoryWorker) GetNextDirectoryUrl() (string, error) {
	// Narrow the search to the pager block. These region patterns need the
	// surrounding HTML of the target page: `[\s\S]*` alone matches the whole page.
	bytes := regexp.MustCompile(`[\s\S]*`).Find(d.Contents)
	bytes = regexp.MustCompile(`[\s\S]*`).Find(bytes)

	// The link is "#" or empty on the last page, otherwise e.g. index_27.html.
	bytes = regexp.MustCompile(`href="[#\w=\d_\.]*"`).Find(bytes)
	lll := strings.TrimPrefix(string(bytes), `href="`)
	lll = strings.TrimSuffix(lll, `"`)
	return lll, nil
}
```

ChapterWorker

```go
package workers

import (
	"io"
	"log"
	"net/http"
	"strings"
	"time"
)

/*
Chapter

Responsibilities:
 1. Parse the HTML page content and the chapter title.
 2. Parse the URL of the next page.
 3. Get the text of each page, merge the pages into the chapter text (including the title), and send it to BookWorker.
*/

type ChapterWorker struct {
	Host            string
	PageNum         int
	SubPageNum      int
	IndexUrl        string
	DownChan        chan int
	BookContentChan chan PageContentItem
}

func (c *ChapterWorker) Run() {
	defer func() {
		c.DownChan <- 1 // tell DirectoryWorker this goroutine has finished
	}()
	log.Println("start chapter worker ", c.IndexUrl)
	defer log.Println("chapter worker done ", c.IndexUrl)

	if c.IndexUrl == "" {
		panic("Invalid Index Url")
	}

	url := c.IndexUrl
	chapcontent := make([]byte, 0)
	for {
		// Sub-pages of this chapter contain "_" in their URL; a URL without
		// it (or an empty one) belongs to the next chapter, so stop.
		if !strings.Contains(url, "_") && url != c.IndexUrl {
			break
		}

		resp, err := http.DefaultClient.Get(c.Host + url)
		if err != nil {
			panic(err.Error())
		}
		buf, err := io.ReadAll(resp.Body)
		resp.Body.Close()
		if err != nil {
			panic(err)
		}

		page := PageWorker{Contents: buf}
		con, err := page.GetPageContent()
		if err != nil {
			panic(err)
		}

		// Prefix the chapter title on the chapter's first page.
		if url == c.IndexUrl {
			title, err := page.GetPageTitle()
			if err != nil {
				panic(err)
			}
			con = append([]byte("\r\n\r\n【"+string(title)+"】\r\n\r\n"), con...)
		}
		chapcontent = append(chapcontent, con...)

		next, err := page.GetNextPageUrl()
		if err != nil {
			panic(err.Error())
		}
		url = next
		time.Sleep(time.Millisecond * 10)
	}

	c.BookContentChan <- PageContentItem{
		Content:    chapcontent,
		PageNum:    c.PageNum,
		SubPageNum: c.SubPageNum,
	}
}
```
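ChapterWorker.Run keeps following the "next" link while the URL still contains `_` and stops at the first link without it. This assumes sub-pages are named like `48867262_2.html`, which is what the `_` check implies. The sketch below isolates that termination rule, with a hypothetical in-memory `links` map standing in for the HTTP fetch plus GetNextPageUrl:

```go
package main

import (
	"fmt"
	"strings"
)

// chapterPages follows next links from first and returns every URL that
// belongs to the same chapter. next stands in for fetching a page and
// calling GetNextPageUrl; it returns "" when a page has no next link.
func chapterPages(first string, next func(string) string) []string {
	var pages []string
	for url := first; url != ""; url = next(url) {
		// Same rule as ChapterWorker.Run: after the first page, only URLs
		// containing "_" are sub-pages of this chapter.
		if url != first && !strings.Contains(url, "_") {
			break
		}
		pages = append(pages, url)
	}
	return pages
}

func main() {
	// Hypothetical link graph: chapter 100 has three sub-pages, then chapter 101.
	links := map[string]string{
		"100.html":   "100_2.html",
		"100_2.html": "100_3.html",
		"100_3.html": "101.html",
		"101.html":   "101_2.html",
	}
	next := func(u string) string { return links[u] }
	fmt.Println(chapterPages("100.html", next)) // [100.html 100_2.html 100_3.html]
}
```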

PageWorker

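```go
package workers

import (
	"regexp"
	"strings"
)

/*
Page: one sub-page of a chapter

Responsibilities:
 1. Parse the HTML page content and get the text.
*/

type PageWorker struct {
	Contents []byte
}

func (p *PageWorker) GetPageContent() ([]byte, error) {
	// Narrow to the element that holds the text. This region pattern needs the
	// surrounding HTML of the target page: `[\S\s]*` alone matches the whole page.
	bytes := regexp.MustCompile(`[\S\s]*`).Find(p.Contents)

	// Replace simple tags such as <p>, </div> or <br> with a newline.
	sbytes := regexp.MustCompile(`<[/]?[\s\w\"=]*>`).ReplaceAll(bytes, []byte("\n"))

	// Remove the site's watermark ("remember m.999xs.com ...") before the spaces,
	// since the watermark itself contains a space, then strip injected junk strings.
	all := strings.ReplaceAll(string(sbytes), `手机\端 一秒記住『m.999xs.com』為您提\供精彩小說\閱讀`, "")
	all = strings.ReplaceAll(all, " ", "")
	all = strings.ReplaceAll(all, "sthuojia", "")
	all = strings.ReplaceAll(all, "travefj", "")
	all = strings.TrimSpace(all)
	return []byte(all), nil
}

func (p *PageWorker) GetPageTitle() ([]byte, error) {
	// Everything after the title element's opening tag ...
	bytes := regexp.MustCompile(`nr_title">[\S\s]*`).Find(p.Contents)
	title := strings.TrimPrefix(string(bytes), `nr_title">`)

	// ... cut at the next tag.
	t := make([]rune, 0)
	for _, r := range title {
		if r == '<' {
			break
		}
		t = append(t, r)
	}
	return []byte(string(t)), nil
}

func (p *PageWorker) GetNextPageUrl() (string, error) {
	// Narrow to the navigation block (same caveat as above: `[\S\s]*`
	// alone matches the whole page).
	bytes := regexp.MustCompile(`[\S\s]*`).Find(p.Contents)

	// Isolate the link whose text is 下一章 ("next chapter"), then read its href.
	// Assumes the href and the link text sit in the same <a> element.
	bytes = regexp.MustCompile(`<a[^>]*>\s*下一章`).Find(bytes)
	bytes = regexp.MustCompile(`href="[^"]*"`).Find(bytes)

	result := strings.TrimPrefix(string(bytes), `href="`)
	result = strings.TrimSuffix(result, `"`)
	return result, nil // "" when there is no such link
}
```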

main.go

```go
package main

import (
	"txtrobot/workers"
)

func main() {
	book := workers.BookWorker{
		DefaultUrl:      "/files/article/html/69/69208/index.html", // must be the novel's index page
		PageContentChan: make(chan workers.PageContentItem, 1000), // buffered so the crawling goroutines rarely block on the receiver
		Host:            `https://m.999xs.com`,
		Contents:        make([][][]byte, 1000), // room for up to 1000 directory pages
	}

	if err := book.Run(); err != nil {
		panic(err)
	}
}
```

Execution result

```
2019/10/19 17:08:23 start ReceiveContents
2019/10/19 17:08:23 get book title: 秦苒程隽.txt
2019/10/19 17:08:23 start directory workers
2019/10/19 17:08:23 directory worker run /files/article/html/69/69208/index.html
....
2019/10/19 17:09:07 chapter worker done /files/article/html/69/69208/48867262.html
2019/10/19 17:09:07 directory worker down /files/article/html/69/69208/index_27.html
2019/10/19 17:09:07 directory workers all done
2019/10/19 17:09:07 ReceiveContents done
2019/10/19 17:09:07 booker worker down

Process finished with exit code 0
```