Netty - Hand-Writing a Simple Netty Modeled on Its Source, and Extended Applications


1. Simulating Netty's Threading Model to Build a Simple Network Server

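When learning an open-source framework, making the effort to read and understand its source code helps enormously, both for understanding the framework and for applying it. Setting breakpoints and inspecting call stacks are effective ways to do that. Here we follow the ideas behind Netty's threading model and hand-write a simple network server: a boss thread accepts connections and hands each new client to one of several worker threads, which then handle all reads and writes for that client.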

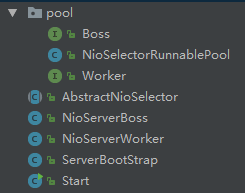

Directory structure:

AbstractNioSelector.java

```java
package com.proto.nio;

import com.proto.nio.pool.NioSelectorRunnablePool;

import java.io.IOException;
import java.nio.channels.Selector;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedDeque;
import java.util.concurrent.Executor;
import java.util.concurrent.atomic.AtomicBoolean;

/**
 * Abstract selector thread
 * @author hzk
 * @date 2018/8/20
 */
public abstract class AbstractNioSelector implements Runnable {

    /** Thread pool that runs this selector loop */
    private final Executor executor;

    /** Selector */
    protected Selector selector;

    /** Whether the selector has already been woken up in this loop iteration */
    protected final AtomicBoolean wakenUp = new AtomicBoolean();

    /** Task queue; tasks are always executed on the selector thread */
    private final Queue<Runnable> taskQueue = new ConcurrentLinkedDeque<Runnable>();

    /** Thread name */
    private String threadName;

    /** Thread manager */
    protected NioSelectorRunnablePool nioSelectorRunnablePool;

    public AbstractNioSelector(Executor executor, String threadName, NioSelectorRunnablePool nioSelectorRunnablePool) {
        this.executor = executor;
        this.threadName = threadName;
        this.nioSelectorRunnablePool = nioSelectorRunnablePool;
        openSelector();
    }

    /**
     * Open the selector and start the loop thread
     */
    private void openSelector() {
        try {
            this.selector = Selector.open();
        } catch (IOException e) {
            throw new RuntimeException("Failed to create a selector.", e);
        }
        executor.execute(this);
    }

    @Override
    public void run() {
        Thread.currentThread().setName(this.threadName);
        while (true) {
            try {
                wakenUp.set(false);
                // wait for I/O events (or a wakeup from registerTask)
                select(this.selector);
                // run tasks submitted by other threads
                processTaskQueue();
                // handle the selected keys
                process(this.selector);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

    /**
     * Register a task and wake up the selector
     * @param task
     */
    protected final void registerTask(Runnable task) {
        taskQueue.add(task);
        Selector selector = this.selector;
        if (null != selector) {
            // call wakeup() at most once per loop iteration
            if (wakenUp.compareAndSet(false, true)) {
                selector.wakeup();
            }
        } else {
            taskQueue.remove(task);
        }
    }

    /**
     * Run all tasks currently in the queue
     */
    private void processTaskQueue() {
        for (;;) {
            final Runnable task = taskQueue.poll();
            if (null == task) {
                break;
            }
            task.run();
        }
    }

    /**
     * Get the thread manager
     * @return
     */
    public NioSelectorRunnablePool getNioSelectorRunnablePool() {
        return nioSelectorRunnablePool;
    }

    /**
     * Abstract select method
     * @param selector
     * @return number of ready keys
     * @throws IOException
     */
    protected abstract int select(Selector selector) throws IOException;

    /**
     * Business handling for the selected keys
     * @param selector
     * @throws IOException
     */
    protected abstract void process(Selector selector) throws IOException;
}
```
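The key trick in AbstractNioSelector is that other threads never touch the selector directly: they enqueue a task and call `selector.wakeup()`, and the selector thread runs the task once `select()` returns. The JDK-only sketch below isolates that handoff. A loop thread blocks in `select()` with no registered channels, so only `wakeup()` (or an interrupt) can release it. The class `WakeupDemo` and its method `submitAndWake` are hypothetical names used for illustration, not part of the project.

```java
import java.io.IOException;
import java.nio.channels.ClosedSelectorException;
import java.nio.channels.Selector;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

public class WakeupDemo {

    /**
     * Starts a loop thread blocked in select(), hands it a task from the calling
     * thread, and returns the name of the thread that ran the task
     * (null if the task did not run within timeoutMs).
     */
    public static String submitAndWake(long timeoutMs) throws IOException, InterruptedException {
        Selector selector = Selector.open();
        Queue<Runnable> tasks = new ConcurrentLinkedQueue<>();
        CountDownLatch done = new CountDownLatch(1);
        AtomicReference<String> ranOn = new AtomicReference<>();

        Thread loop = new Thread(() -> {
            try {
                while (!Thread.currentThread().isInterrupted()) {
                    selector.select();          // no timeout: only wakeup() or interrupt ends the wait
                    Runnable task;
                    while ((task = tasks.poll()) != null) {
                        task.run();             // tasks always execute on the selector thread
                    }
                }
            } catch (IOException | ClosedSelectorException e) {
                // selector closed during shutdown; let the thread end
            }
        }, "selector-loop");
        loop.setDaemon(true);
        loop.start();

        // Same order as registerTask(): enqueue first, then wake the selector.
        tasks.add(() -> {
            ranOn.set(Thread.currentThread().getName());
            done.countDown();
        });
        selector.wakeup();  // if select() has not started yet, the next select() returns immediately

        boolean ran = done.await(timeoutMs, TimeUnit.MILLISECONDS);
        loop.interrupt();   // interrupting a thread blocked in select() makes it return
        loop.join(timeoutMs);
        selector.close();
        return ran ? ranOn.get() : null;
    }

    public static void main(String[] args) throws Exception {
        System.out.println(submitAndWake(2000)); // selector-loop
    }
}
```

Without the `wakeup()` call the task would sit in the queue forever, because the loop thread never leaves `select()`. That is why the boss, whose `select()` has no timeout, depends on `registerTask()` to get the server channel registered.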

Boss.java

```java
package com.proto.nio.pool;

import java.nio.channels.ServerSocketChannel;

/**
 * Boss interface
 * @author hzk
 * @date 2018/8/20
 */
public interface Boss {

    /**
     * Register a new server socket channel for accepting connections
     * @param serverSocketChannel
     */
    public void regiserAcceptChannelTask(ServerSocketChannel serverSocketChannel);
}
```

NioServerBoss.java

```java
package com.proto.nio;

import com.proto.nio.pool.Boss;
import com.proto.nio.pool.NioSelectorRunnablePool;
import com.proto.nio.pool.Worker;

import java.io.IOException;
import java.nio.channels.*;
import java.util.Iterator;
import java.util.Set;
import java.util.concurrent.Executor;

/**
 * Boss implementation
 * @author hzk
 * @date 2018/8/20
 */
public class NioServerBoss extends AbstractNioSelector implements Boss {

    public NioServerBoss(Executor executor, String threadName, NioSelectorRunnablePool nioSelectorRunnablePool) {
        super(executor, threadName, nioSelectorRunnablePool);
    }

    @Override
    public void regiserAcceptChannelTask(final ServerSocketChannel serverSocketChannel) {
        final Selector selector = this.selector;
        registerTask(new Runnable() {
            @Override
            public void run() {
                try {
                    // register the server channel with this boss's selector
                    serverSocketChannel.register(selector, SelectionKey.OP_ACCEPT);
                } catch (ClosedChannelException e) {
                    e.printStackTrace();
                }
            }
        });
    }

    @Override
    protected int select(Selector selector) throws IOException {
        return selector.select();
    }

    @Override
    protected void process(Selector selector) throws IOException {
        Set<SelectionKey> selectionKeys = selector.selectedKeys();
        if (selectionKeys.isEmpty()) {
            return;
        }
        for (Iterator<SelectionKey> iterator = selectionKeys.iterator(); iterator.hasNext(); ) {
            SelectionKey key = iterator.next();
            iterator.remove();
            ServerSocketChannel server = (ServerSocketChannel) key.channel();
            // new client; accept() on a non-blocking channel may return null
            SocketChannel channel = server.accept();
            if (channel == null) {
                continue;
            }
            // switch the client channel to non-blocking mode
            channel.configureBlocking(false);
            // pick a worker
            Worker worker = getNioSelectorRunnablePool().nextWorkers();
            // hand the new client over to that worker
            worker.registerNewChannelTask(channel);
            System.out.println("New client connected...");
        }
    }
}
```

Worker.java

```java
package com.proto.nio.pool;

import java.nio.channels.SocketChannel;

/**
 * Worker interface
 * @author hzk
 * @date 2018/8/20
 */
public interface Worker {

    /**
     * Add a new client session
     * @param socketChannel
     */
    public void registerNewChannelTask(SocketChannel socketChannel);
}
```

NioServerWorker.java

```java
package com.proto.nio;

import com.proto.nio.pool.NioSelectorRunnablePool;
import com.proto.nio.pool.Worker;

import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.*;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;
import java.util.Set;
import java.util.concurrent.Executor;

/**
 * Worker implementation
 * @author hzk
 * @date 2018/8/20
 */
public class NioServerWorker extends AbstractNioSelector implements Worker {

    public NioServerWorker(Executor executor, String threadName, NioSelectorRunnablePool nioSelectorRunnablePool) {
        super(executor, threadName, nioSelectorRunnablePool);
    }

    /**
     * Add a new client socket
     * @param socketChannel
     */
    @Override
    public void registerNewChannelTask(final SocketChannel socketChannel) {
        final Selector selector = this.selector;
        registerTask(new Runnable() {
            @Override
            public void run() {
                try {
                    // register the client socketChannel with this worker's selector
                    socketChannel.register(selector, SelectionKey.OP_READ);
                } catch (ClosedChannelException e) {
                    e.printStackTrace();
                }
            }
        });
    }

    @Override
    protected int select(Selector selector) throws IOException {
        return selector.select(500);
    }

    @Override
    protected void process(Selector selector) throws IOException {
        Set<SelectionKey> selectionKeys = selector.selectedKeys();
        if (selectionKeys.isEmpty()) {
            return;
        }
        Iterator<SelectionKey> iterator = selectionKeys.iterator();
        while (iterator.hasNext()) {
            SelectionKey key = iterator.next();
            // remove the key so it is not processed twice
            iterator.remove();
            // the socket channel the event occurred on
            SocketChannel channel = (SocketChannel) key.channel();
            // number of bytes read
            int ret = 0;
            boolean failure = true;
            ByteBuffer buffer = ByteBuffer.allocate(1024);
            // read data
            try {
                ret = channel.read(buffer);
                failure = false;
            } catch (IOException e) {
                e.printStackTrace();
            }
            // read() returns -1 at end of stream, i.e. the client has disconnected
            if (ret < 0 || failure) {
                key.cancel();
                channel.close();
                System.out.println("Client disconnect...");
            } else if (ret > 0) {
                // decode only the ret bytes actually read, not the whole 1024-byte array
                System.out.println("Receive msg:" + new String(buffer.array(), 0, ret, StandardCharsets.UTF_8));
                // write a reply back to the client
                ByteBuffer wrap = ByteBuffer.wrap("Receive success!".getBytes(StandardCharsets.UTF_8));
                channel.write(wrap);
            }
        }
    }
}
```
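Decoding a `ByteBuffer` after `channel.read()` is easy to get wrong: `new String(buffer.array())` decodes the entire 1024-byte backing array, including the unused capacity, which is all zero bytes. This JDK-only sketch (the class and method names are illustrative) contrasts that with decoding only the bytes that were read, here by calling `flip()` first; `new String(array, 0, ret, charset)` achieves the same thing when the read count is known.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

public class BufferDecodeDemo {

    /** Simulates a channel.read() that filled only part of a 1024-byte buffer. */
    static ByteBuffer partiallyFilled(String text) {
        ByteBuffer buffer = ByteBuffer.allocate(1024);
        buffer.put(text.getBytes(StandardCharsets.UTF_8));
        return buffer;
    }

    /** Decodes the whole backing array, including the unused zero bytes. */
    static String decodeWholeArray(ByteBuffer buffer) {
        return new String(buffer.array(), StandardCharsets.UTF_8);
    }

    /** Decodes only bytes [0, position): flip() sets limit = position and position = 0. */
    static String decodeReadBytes(ByteBuffer buffer) {
        buffer.flip();
        return StandardCharsets.UTF_8.decode(buffer).toString();
    }

    public static void main(String[] args) {
        System.out.println(decodeWholeArray(partiallyFilled("hello")).length()); // 1024
        System.out.println(decodeReadBytes(partiallyFilled("hello")));           // hello
    }
}
```

Each zero byte decodes to a `\u0000` character, so the first version yields a 1024-character string whose trailing NULs show up as garbage in many consoles.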

NioSelectorRunnablePool.java

```java
package com.proto.nio.pool;

import com.proto.nio.NioServerBoss;
import com.proto.nio.NioServerWorker;

import java.util.concurrent.Executor;
import java.util.concurrent.atomic.AtomicInteger;

/**
 * Selector thread manager
 * @author hzk
 * @date 2018/8/20
 */
public class NioSelectorRunnablePool {

    /** Boss thread array and its round-robin counter */
    private final AtomicInteger bossIndex = new AtomicInteger();
    private Boss[] bosses;

    /** Worker thread array and its round-robin counter */
    private final AtomicInteger workerIndex = new AtomicInteger();
    private Worker[] workers;

    public NioSelectorRunnablePool(Executor boss, Executor worker) {
        initBoss(boss, 1);
        initWorker(worker, Runtime.getRuntime().availableProcessors() * 2);
    }

    /**
     * Initialize the boss threads
     * @param boss
     * @param count
     */
    private void initBoss(Executor boss, int count) {
        this.bosses = new NioServerBoss[count];
        for (int i = 0; i < bosses.length; i++) {
            bosses[i] = new NioServerBoss(boss, "Boss_Thread_" + (i + 1), this);
        }
    }

    /**
     * Initialize the worker threads
     * @param worker
     * @param count
     */
    private void initWorker(Executor worker, int count) {
        this.workers = new NioServerWorker[count];
        for (int i = 0; i < workers.length; i++) {
            workers[i] = new NioServerWorker(worker, "Worker_Thread_" + (i + 1), this);
        }
    }

    /**
     * Get the next Boss (round robin)
     * @return
     */
    public Boss nextBosses() {
        return bosses[Math.abs(bossIndex.getAndIncrement() % bosses.length)];
    }

    /**
     * Get the next Worker (round robin)
     * @return
     */
    public Worker nextWorkers() {
        return workers[Math.abs(workerIndex.getAndIncrement() % workers.length)];
    }
}
```
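`nextBosses()` and `nextWorkers()` pick threads round robin with `Math.abs(counter.getAndIncrement() % length)`. The sketch below extracts that expression into a generic, hypothetical `RoundRobinChooser` so it can be tried in isolation. The package-private constructor taking a start value exists only to show what happens when the `int` counter overflows: the index stays in bounds because the remainder is taken before `Math.abs`, although the rotation is briefly uneven at the wraparound.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

public class RoundRobinChooser<T> {
    private final List<T> items;
    private final AtomicInteger index;

    public RoundRobinChooser(List<T> items) {
        this(items, 0);
    }

    /** The start value is only for demonstrating counter overflow. */
    RoundRobinChooser(List<T> items, int start) {
        if (items.isEmpty()) {
            throw new IllegalArgumentException("no items to choose from");
        }
        this.items = new ArrayList<>(items);
        this.index = new AtomicInteger(start);
    }

    /** Same expression as nextWorkers(): thread-safe, and always in [0, size). */
    public T next() {
        return items.get(Math.abs(index.getAndIncrement() % items.size()));
    }

    public static void main(String[] args) {
        RoundRobinChooser<String> normal = new RoundRobinChooser<>(Arrays.asList("w1", "w2", "w3"));
        System.out.println(normal.next() + " " + normal.next() + " " + normal.next() + " " + normal.next()); // w1 w2 w3 w1

        // Counter passes Integer.MAX_VALUE and wraps to Integer.MIN_VALUE.
        RoundRobinChooser<String> wrapping =
                new RoundRobinChooser<>(Arrays.asList("w1", "w2", "w3"), Integer.MAX_VALUE - 1);
        System.out.println(wrapping.next() + " " + wrapping.next() + " " + wrapping.next() + " " + wrapping.next()); // w1 w2 w3 w2
    }
}
```

The repeated `w2` after the wrap is harmless for load spreading. Netty itself additionally uses a bit mask when the pool size is a power of two, which avoids both the modulo and the uneven step.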

ServerBootStrap.java

```java
package com.proto.nio;

import com.proto.nio.pool.Boss;
import com.proto.nio.pool.NioSelectorRunnablePool;

import java.net.SocketAddress;
import java.nio.channels.ServerSocketChannel;

/**
 * Server bootstrap
 * @author hzk
 * @date 2018/8/20
 */
public class ServerBootStrap {

    private NioSelectorRunnablePool nioSelectorRunnablePool;

    public ServerBootStrap(NioSelectorRunnablePool nioSelectorRunnablePool) {
        this.nioSelectorRunnablePool = nioSelectorRunnablePool;
    }

    public void bind(final SocketAddress socketAddress) {
        try {
            // open a server socket channel
            ServerSocketChannel serverSocketChannel = ServerSocketChannel.open();
            // set non-blocking mode
            serverSocketChannel.configureBlocking(false);
            // bind the port
            serverSocketChannel.bind(socketAddress);
            // get a boss thread
            Boss boss = nioSelectorRunnablePool.nextBosses();
            // register the channel with the boss
            boss.regiserAcceptChannelTask(serverSocketChannel);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

Start.java

```java
package com.proto.nio;

import com.proto.nio.pool.NioSelectorRunnablePool;

import java.net.InetSocketAddress;
import java.util.concurrent.Executors;

/**
 * @author hzk
 * @date 2018/8/20
 */
public class Start {

    public static void main(String[] args) {
        // create the boss and worker threads
        NioSelectorRunnablePool nioSelectorRunnablePool = new NioSelectorRunnablePool(Executors.newCachedThreadPool(), Executors.newCachedThreadPool());
        // create the server bootstrap
        ServerBootStrap serverBootStrap = new ServerBootStrap(nioSelectorRunnablePool);
        // bind the port
        serverBootStrap.bind(new InetSocketAddress(8888));
        System.out.println("Start success...");
    }
}
```
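To try the hand-rolled server without Netty, a plain blocking JDK client is enough. This is a minimal sketch: `BlockingTestClient` and `sendOnce` are hypothetical names, and the client reads only the first chunk of the reply (at most 1024 bytes), which suffices for the short `Receive success!` response the worker writes back.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;

public class BlockingTestClient {

    /** Sends one message and returns the first chunk of the reply ("" if the server closed first). */
    public static String sendOnce(String host, int port, String msg) throws IOException {
        // SocketChannel.open(address) connects in blocking mode
        try (SocketChannel ch = SocketChannel.open(new InetSocketAddress(host, port))) {
            ch.write(ByteBuffer.wrap(msg.getBytes(StandardCharsets.UTF_8)));
            ByteBuffer reply = ByteBuffer.allocate(1024);
            int n = ch.read(reply);   // blocks until some bytes arrive or the stream ends
            if (n < 0) {
                return "";
            }
            reply.flip();             // decode only the bytes that were read
            return StandardCharsets.UTF_8.decode(reply).toString();
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumes the Start server above is listening on port 8888.
        System.out.println(sendOnce("127.0.0.1", 8888, "hello"));
    }
}
```

With `Start` running, the server console should show the received message and the client should print the worker's reply.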

2. Basic Netty5 Usage

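Netty 5.x replaces manually created threads with NioEventLoopGroup event-loop groups. Building a server or a client differs somewhat from earlier versions, but the overall setup flow is largely the same.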

pom.xml

```xml
<dependency>
    <groupId>io.netty</groupId>
    <artifactId>netty-all</artifactId>
    <version>5.0.0.Alpha2</version>
</dependency>
```

ServerHandler.java

```java
package com.proto.server;

import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.SimpleChannelInboundHandler;

/**
 * @author hzk
 * @date 2018/9/10
 */
public class ServerHandler extends SimpleChannelInboundHandler<String> {

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        System.out.println("exceptionCaught");
        super.exceptionCaught(ctx, cause);
    }

    /**
     * Client connected
     */
    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        System.out.println("channelActive");
        super.channelActive(ctx);
    }

    /**
     * Client disconnected
     */
    @Override
    public void channelInactive(ChannelHandlerContext ctx) throws Exception {
        System.out.println("channelInactive");
        super.channelInactive(ctx);
    }

    @Override
    protected void messageReceived(ChannelHandlerContext channelHandlerContext, String msg) throws Exception {
        System.out.println("msg:" + msg);
        // channel().writeAndFlush() starts at the tail of the pipeline
        channelHandlerContext.channel().writeAndFlush("receive");
        // ctx.writeAndFlush() starts at this handler and travels toward the head
        channelHandlerContext.writeAndFlush("get");
    }
}
```

NettyServer.java

```java
package com.proto.server;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.Channel;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.handler.codec.string.StringDecoder;
import io.netty.handler.codec.string.StringEncoder;

/**
 * @author hzk
 * @date 2018/9/10
 */
public class NettyServer {

    public static void main(String[] args) {
        ServerBootstrap serverBootstrap = new ServerBootstrap();
        NioEventLoopGroup boss = new NioEventLoopGroup();
        NioEventLoopGroup worker = new NioEventLoopGroup();
        try {
            // 1. set the thread pools (event loop groups)
            serverBootstrap.group(boss, worker);
            // 2. set the socket (channel) factory
            serverBootstrap.channel(NioServerSocketChannel.class);
            // 3. set the pipeline factory
            serverBootstrap.childHandler(new ChannelInitializer<Channel>() {
                @Override
                protected void initChannel(Channel channel) throws Exception {
                    channel.pipeline().addLast(new StringDecoder());
                    channel.pipeline().addLast(new StringEncoder());
                    channel.pipeline().addLast(new ServerHandler());
                }
            });
            // TCP parameters
            // Netty3 style:
            // serverBootstrap.setOption("backlog", 1024);
            // serverBootstrap.setOption("tcpNoDelay", true);
            // serverBootstrap.setOption("keepAlive", true);
            serverBootstrap.option(ChannelOption.SO_BACKLOG, 2048);        // ServerSocketChannel: length of the pending-connection queue
            serverBootstrap.childOption(ChannelOption.SO_KEEPALIVE, true); // SocketChannel: TCP keep-alive probes detect dead connections
            serverBootstrap.childOption(ChannelOption.TCP_NODELAY, true);  // SocketChannel: disable Nagle's algorithm (no delayed sending)
            // 4. bind the port and wait until the bind has completed
            ChannelFuture channelFuture = serverBootstrap.bind(8888).sync();
            System.out.println("Netty Server Start...");
            // 5. wait until the server channel is closed
            channelFuture.channel().closeFuture().sync();
        } catch (InterruptedException e) {
            e.printStackTrace();
        } finally {
            // release resources
            boss.shutdownGracefully();
            worker.shutdownGracefully();
        }
    }
}
```
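The three options set above correspond to standard socket settings that plain JDK NIO exposes as well: `SO_BACKLOG` is the accept-queue length, which the JDK takes as the second argument of `ServerSocketChannel.bind()`, while `SO_KEEPALIVE` and `TCP_NODELAY` are per-connection options. This sketch, with the hypothetical class `SocketOptionsDemo`, applies them on a loopback connection and reads them back. It does not use Netty and only shows what each option controls.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.StandardSocketOptions;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

public class SocketOptionsDemo {

    /** Returns {SO_KEEPALIVE, TCP_NODELAY} as read back from an accepted connection. */
    public static boolean[] acceptWithOptions() throws IOException {
        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            // Backlog 2048 = queue of connections not yet accept()ed; the OS may cap this value.
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0), 2048);
            try (SocketChannel client = SocketChannel.open(server.getLocalAddress());
                 SocketChannel accepted = server.accept()) {
                // Per-connection options, the equivalent of childOption(...) in the Netty server
                accepted.setOption(StandardSocketOptions.SO_KEEPALIVE, true);
                accepted.setOption(StandardSocketOptions.TCP_NODELAY, true);
                return new boolean[] {
                        accepted.getOption(StandardSocketOptions.SO_KEEPALIVE),
                        accepted.getOption(StandardSocketOptions.TCP_NODELAY)
                };
            }
        }
    }

    public static void main(String[] args) throws IOException {
        boolean[] opts = acceptWithOptions();
        System.out.println("keepAlive=" + opts[0] + " tcpNoDelay=" + opts[1]);
    }
}
```

This also explains the `option` versus `childOption` split: the backlog belongs to the listening channel, whereas keep-alive and no-delay apply to each accepted connection.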

ClientHandler.java

```java
package com.proto.client;

import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.SimpleChannelInboundHandler;

/**
 * @author hzk
 * @date 2018/9/14
 */
public class ClientHandler extends SimpleChannelInboundHandler<String> {

    @Override
    protected void messageReceived(ChannelHandlerContext channelHandlerContext, String s) throws Exception {
        System.out.println("Client receive:" + s);
    }
}
```

NettyClient.java

```java
package com.proto.client;

import io.netty.bootstrap.Bootstrap;
import io.netty.channel.Channel;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioSocketChannel;
import io.netty.handler.codec.string.StringDecoder;
import io.netty.handler.codec.string.StringEncoder;

import java.io.BufferedReader;
import java.io.InputStreamReader;

/**
 * Netty5 client
 * @author hzk
 * @date 2018/9/14
 */
public class NettyClient {

    public static void main(String[] args) throws InterruptedException {
        Bootstrap bootstrap = new Bootstrap();
        NioEventLoopGroup worker = new NioEventLoopGroup();
        try {
            // set the thread pool
            bootstrap.group(worker);
            // set the socket factory
            bootstrap.channel(NioSocketChannel.class);
            // set the pipeline
            bootstrap.handler(new ChannelInitializer<Channel>() {
                @Override
                protected void initChannel(Channel channel) throws Exception {
                    channel.pipeline().addLast(new StringDecoder());
                    channel.pipeline().addLast(new StringEncoder());
                    channel.pipeline().addLast(new ClientHandler());
                }
            });
            // wait until the connection is established before writing
            ChannelFuture channelFuture = bootstrap.connect("127.0.0.1", 8888).sync();
            BufferedReader bufferedReader = new BufferedReader(new InputStreamReader(System.in));
            while (true) {
                System.out.println("Please input:");
                String s = bufferedReader.readLine();
                if (s == null) {
                    // end of standard input
                    break;
                }
                channelFuture.channel().writeAndFlush(s);
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            worker.shutdownGracefully().sync();
        }
    }
}
```

3. Multiple Connections from a Single Client

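The earlier examples all used a single connection per client. Here we wrap the client a little so that one client holds several connections: when the client is initialized it opens several Channels up front, and each time we send data it takes a different Channel from the pool (round robin) to handle the request.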

MultiClient.java

```java
package com.proto.multiclient;

import com.proto.client.ClientHandler;
import io.netty.bootstrap.Bootstrap;
import io.netty.channel.Channel;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioSocketChannel;
import io.netty.handler.codec.string.StringDecoder;
import io.netty.handler.codec.string.StringEncoder;

import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/**
 * Multi-connection client
 * @author hzk
 * @date 2018/9/18
 */
public class MultiClient {

    /** Bootstrap */
    private Bootstrap bootstrap = new Bootstrap();

    /** Sessions (channels) */
    private List<Channel> channels = new ArrayList<>();

    /** Round-robin counter */
    private final AtomicInteger index = new AtomicInteger();

    public MultiClient(String address, int port, int count) {
        init(address, port, count);
    }

    /**
     * Initialize
     * @param address server address
     * @param port    port
     * @param count   number of connections to open
     */
    private void init(String address, int port, int count) {
        NioEventLoopGroup worker = new NioEventLoopGroup();
        // set the thread pool
        bootstrap.group(worker);
        // set the channel factory
        bootstrap.channel(NioSocketChannel.class);
        // set the pipeline
        bootstrap.handler(new ChannelInitializer<Channel>() {
            @Override
            protected void initChannel(Channel channel) throws Exception {
                channel.pipeline().addLast(new StringDecoder());
                channel.pipeline().addLast(new StringEncoder());
                channel.pipeline().addLast(new ClientHandler());
            }
        });
        for (int i = 1; i <= count; i++) {
            ChannelFuture connect = bootstrap.connect(address, port);
            channels.add(connect.channel());
        }
    }

    /**
     * Return the index of the given channel in the pool
     * @param channel
     * @return
     */
    public int indexChannel(Channel channel) {
        return channels.indexOf(channel);
    }

    /**
     * Get the next active session
     * @param address
     * @param port
     * @return
     */
    public Channel nextChannel(String address, int port) {
        return getFirstActiveChannel(address, port, 0);
    }

    private Channel getFirstActiveChannel(String address, int port, int count) {
        Channel channel = channels.get(Math.abs(index.getAndIncrement() % channels.size()));
        if (!channel.isActive()) {
            // reconnect this slot; the new connection completes asynchronously
            reconnect(channel, address, port);
            if (count >= channels.size()) {
                throw new RuntimeException("No active channel available!");
            }
            return getFirstActiveChannel(address, port, ++count);
        }
        return channel;
    }

    private void reconnect(Channel channel, String address, int port) {
        synchronized (channel) {
            if (channels.indexOf(channel) == -1) {
                return;
            }
            ChannelFuture connect = bootstrap.connect(address, port);
            channels.set(channels.indexOf(channel), connect.channel());
        }
    }
}
```
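`getFirstActiveChannel` combines round robin with a bounded number of attempts and lazy reconnection. The generic sketch below expresses the same idea iteratively and without Netty, so the selection logic can be checked on its own. `RoundRobinPool`, the `isActive` predicate and the `reconnect` supplier are hypothetical stand-ins for `Channel.isActive()` and `bootstrap.connect(...).channel()`. As in the original, a freshly reconnected slot is not returned on the same call, since a real connection would still be in progress.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;
import java.util.function.Supplier;

public class RoundRobinPool<T> {
    private final List<T> slots;
    private final Predicate<T> isActive;   // stands in for Channel.isActive()
    private final Supplier<T> reconnect;   // stands in for bootstrap.connect(...).channel()
    private int index;                     // round-robin counter, guarded by "this"

    public RoundRobinPool(List<T> initial, Predicate<T> isActive, Supplier<T> reconnect) {
        if (initial.isEmpty()) {
            throw new IllegalArgumentException("pool must not be empty");
        }
        this.slots = new ArrayList<>(initial);
        this.isActive = isActive;
        this.reconnect = reconnect;
    }

    /** Returns the next active element, trying each slot at most once per call. */
    public synchronized T next() {
        int size = slots.size();
        for (int attempt = 0; attempt < size; attempt++) {
            int i = Math.floorMod(index++, size);   // stays non-negative even after overflow
            T candidate = slots.get(i);
            if (isActive.test(candidate)) {
                return candidate;
            }
            // Replace the dead slot; the replacement is only handed out on a later call.
            slots.set(i, reconnect.get());
        }
        throw new IllegalStateException("No active connection available");
    }

    public static void main(String[] args) {
        // "b" is dead; reconnecting it yields "b2", which counts as active.
        RoundRobinPool<String> pool =
                new RoundRobinPool<>(Arrays.asList("a", "b", "c"), s -> !s.equals("b"), () -> "b2");
        System.out.println(pool.next() + " " + pool.next() + " " + pool.next() + " " + pool.next()); // a c a b2
    }
}
```

Making `next()` synchronized also protects the slot list, which in the original `MultiClient` is a plain `ArrayList` shared between callers.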

StartClient.java

```java
package com.proto.multiclient;

import io.netty.channel.Channel;

import java.io.BufferedReader;
import java.io.InputStreamReader;

/**
 * @author hzk
 * @date 2018/9/18
 */
public class StartClient {

    private static final String ADDRESS = "127.0.0.1";
    private static final int PORT = 8888;

    public static void main(String[] args) {
        // open 5 connections to the server
        MultiClient multiClient = new MultiClient(ADDRESS, PORT, 5);
        BufferedReader bufferedReader = new BufferedReader(new InputStreamReader(System.in));
        while (true) {
            try {
                System.out.println("Please input:");
                String msg = bufferedReader.readLine();
                if (msg == null) {
                    // end of standard input
                    break;
                }
                // multiClient.nextChannel(ADDRESS, PORT).writeAndFlush(msg);
                Channel channel = multiClient.nextChannel(ADDRESS, PORT);
                // append the pool index so the server output shows which connection was used
                channel.writeAndFlush(msg + ":" + multiClient.indexChannel(channel));
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
}
```

4. Netty Heartbeats

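Typically, when the client and server have exchanged no reads or writes within a specified time, the client, once it has been write-idle, proactively sends a heartbeat request; the server answers with a heartbeat response, which keeps the connection between them alive. Netty is very capable here: it ships with built-in idle detection in the form of IdleStateHandler.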
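On the client side, the write-idle rule described above can be approximated with a scheduled check. The sketch below is an illustration under stated assumptions, not Netty's mechanism: `WriteIdleHeartbeat` is a hypothetical class, `transport` stands in for whatever actually writes to the connection (it must be thread-safe, since the timer thread also writes), and `"PING"` is an arbitrary heartbeat payload. Every outgoing message goes through `write()` so the idle clock is reset.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.Consumer;

public class WriteIdleHeartbeat {
    private final Consumer<String> transport;   // hypothetical: sends one message on the connection
    private final AtomicLong lastWriteMs = new AtomicLong(System.currentTimeMillis());
    private final ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();

    public WriteIdleHeartbeat(Consumer<String> transport) {
        this.transport = transport;
    }

    /** All outgoing messages go through here so the write-idle clock is reset. */
    public void write(String msg) {
        transport.accept(msg);
        lastWriteMs.set(System.currentTimeMillis());
    }

    /** Every checkMs, sends "PING" if nothing has been written for at least writerIdleMs. */
    public void start(long writerIdleMs, long checkMs) {
        timer.scheduleAtFixedRate(() -> {
            if (System.currentTimeMillis() - lastWriteMs.get() >= writerIdleMs) {
                write("PING");
            }
        }, checkMs, checkMs, TimeUnit.MILLISECONDS);
    }

    public void stop() {
        timer.shutdownNow();
    }
}
```

In Netty itself, the usual approach is to add an IdleStateHandler on the client as well and send the heartbeat from `userEventTriggered` when a WRITER_IDLE event arrives, so no separate timer thread is needed.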

Below we show how to use the heartbeat feature in Netty3 and in Netty5.

Netty3

NettyServer.java

```java
package com.proto.server;

import org.jboss.netty.bootstrap.ServerBootstrap;
import org.jboss.netty.channel.ChannelPipeline;
import org.jboss.netty.channel.ChannelPipelineFactory;
import org.jboss.netty.channel.Channels;
import org.jboss.netty.channel.socket.nio.NioServerSocketChannelFactory;
import org.jboss.netty.handler.codec.string.StringDecoder;
import org.jboss.netty.handler.codec.string.StringEncoder;
import org.jboss.netty.handler.timeout.IdleStateHandler;
import org.jboss.netty.util.HashedWheelTimer;

import java.net.InetSocketAddress;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/**
 * Netty server
 * @author hzk
 * @date 2018/9/19
 */
public class NettyServer {

    public static void main(String[] args) {
        // server bootstrap
        ServerBootstrap serverBootstrap = new ServerBootstrap();
        // boss threads accept connections, worker threads handle reads and writes
        ExecutorService boss = Executors.newCachedThreadPool();
        ExecutorService worker = Executors.newCachedThreadPool();
        // set the NIO socket factory
        serverBootstrap.setFactory(new NioServerSocketChannelFactory(boss, worker));
        // set the pipeline
        final HashedWheelTimer hashedWheelTimer = new HashedWheelTimer();
        serverBootstrap.setPipelineFactory(new ChannelPipelineFactory() {
            @Override
            public ChannelPipeline getPipeline() throws Exception {
                ChannelPipeline pipeline = Channels.pipeline();
                // reader idle 5 s, writer idle 5 s, all idle 10 s
                pipeline.addLast("idle", new IdleStateHandler(hashedWheelTimer, 5, 5, 10));
                pipeline.addLast("decoder", new StringDecoder());
                pipeline.addLast("encoder", new StringEncoder());
                // pipeline.addLast("heart", new ServerHeartHandler());
                pipeline.addLast("heart", new ServerHeartHandler2());
                return pipeline;
            }
        });
        serverBootstrap.bind(new InetSocketAddress(8888));
        System.out.println("Netty Server start...");
    }
}
```

ServerHeartHandler.java

```java
package com.proto.server;

import org.jboss.netty.channel.ChannelHandler;
import org.jboss.netty.channel.ChannelHandlerContext;
import org.jboss.netty.handler.timeout.IdleStateAwareChannelHandler;
import org.jboss.netty.handler.timeout.IdleStateEvent;

import java.text.SimpleDateFormat;
import java.util.Date;

/**
 * @author hzk
 * @date 2018/9/19
 */
public class ServerHeartHandler extends IdleStateAwareChannelHandler implements ChannelHandler {

    @Override
    public void channelIdle(ChannelHandlerContext ctx, IdleStateEvent e) throws Exception {
        SimpleDateFormat simpleDateFormat = new SimpleDateFormat("ss");
        // idle state of the session, followed by the current second
        System.out.println(e.getState() + ":" + simpleDateFormat.format(new Date()));
    }
}
```

ServerHeartHandler2.java

```java
package com.proto.server;

import org.jboss.netty.channel.*;
import org.jboss.netty.handler.timeout.IdleState;
import org.jboss.netty.handler.timeout.IdleStateEvent;

import java.util.Date;

/**
 * @author hzk
 * @date 2018/9/19
 */
public class ServerHeartHandler2 extends SimpleChannelHandler {

    @Override
    public void messageReceived(ChannelHandlerContext ctx, MessageEvent e) throws Exception {
        System.out.println(e.getMessage());
    }

    @Override
    public void handleUpstream(final ChannelHandlerContext ctx, ChannelEvent e) throws Exception {
        if (e instanceof IdleStateEvent) {
            System.out.println(new Date(System.currentTimeMillis()) + ":" + ((IdleStateEvent) e).getState());
            if (((IdleStateEvent) e).getState() == IdleState.ALL_IDLE) {
                System.out.println("Kicking user offline!");
                // notify the client, then close the session (kick the player) once the write completes
                ChannelFuture channelFuture = ctx.getChannel().write("Time out,you will close!");
                channelFuture.addListener(new ChannelFutureListener() {
                    @Override
                    public void operationComplete(ChannelFuture channelFuture) throws Exception {
                        ctx.getChannel().close();
                    }
                });
            }
        } else {
            super.handleUpstream(ctx, e);
        }
    }
}
```

Output

```
Netty Server start...
Mon Nov 05 16:11:06 CST 2018:READER_IDLE
Mon Nov 05 16:11:06 CST 2018:WRITER_IDLE
Mon Nov 05 16:11:11 CST 2018:ALL_IDLE
Kicking user offline!
```

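As we can see, extending either SimpleChannelHandler or IdleStateAwareChannelHandler achieves heartbeat detection. Note, however, that these handlers only receive idle events if an IdleStateHandler, configured with the read/write timeouts, has been added ahead of them in the pipeline.

Looking at the source, IdleState defines three states:

- ALL_IDLE: no data received or sent for a period of time

- READER_IDLE: no data received for a period of time

- WRITER_IDLE: no data sent for a period of time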
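The three states can be summarized as a rule over the last read and write timestamps. The following stateless check is a simplification, assuming millisecond timestamps and that a threshold of 0 disables a state; the real IdleStateHandler runs timers and fires each event once per idle period instead of re-evaluating on demand. `IdleCheck` and its nested `IdleState` enum are illustrative and are not Netty's own types.

```java
import java.util.EnumSet;
import java.util.Set;

public class IdleCheck {

    /** Illustrative enum mirroring the three state names; not Netty's IdleState. */
    public enum IdleState { READER_IDLE, WRITER_IDLE, ALL_IDLE }

    /** Returns every idle state whose threshold has elapsed at nowMs; a threshold <= 0 disables it. */
    public static Set<IdleState> idleStates(long nowMs, long lastReadMs, long lastWriteMs,
                                            long readerIdleMs, long writerIdleMs, long allIdleMs) {
        Set<IdleState> states = EnumSet.noneOf(IdleState.class);
        if (readerIdleMs > 0 && nowMs - lastReadMs >= readerIdleMs) {
            states.add(IdleState.READER_IDLE);   // nothing received for readerIdleMs
        }
        if (writerIdleMs > 0 && nowMs - lastWriteMs >= writerIdleMs) {
            states.add(IdleState.WRITER_IDLE);   // nothing sent for writerIdleMs
        }
        long lastActivity = Math.max(lastReadMs, lastWriteMs);
        if (allIdleMs > 0 && nowMs - lastActivity >= allIdleMs) {
            states.add(IdleState.ALL_IDLE);      // neither received nor sent for allIdleMs
        }
        return states;
    }

    public static void main(String[] args) {
        // Connection opened at t=0 with the 5 s / 5 s / 10 s settings used above.
        System.out.println(idleStates(5_000, 0, 0, 5_000, 5_000, 10_000));  // [READER_IDLE, WRITER_IDLE]
        System.out.println(idleStates(10_000, 0, 0, 5_000, 5_000, 10_000)); // [READER_IDLE, WRITER_IDLE, ALL_IDLE]
    }
}
```

With the 5/5/10-second settings, a silent connection reports READER_IDLE and WRITER_IDLE after 5 seconds and ALL_IDLE 5 seconds later, which is the same spacing as in the output above.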

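The timeout package contains many other classes you can use, which are worth exploring on your own; most of what I share here is what I picked up while learning it myself.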

Netty5

Netty5's structure and usage are mostly similar to Netty3's; only a few methods differ.

NettyServer.java

```java
package com.proto.heart;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.Channel;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.handler.codec.string.StringDecoder;
import io.netty.handler.codec.string.StringEncoder;
import io.netty.handler.timeout.IdleStateHandler;

/**
 * Server (heartbeat)
 * @author hzk
 * @date 2018/9/19
 */
public class NettyServer {

    public static void main(String[] args) {
        ServerBootstrap serverBootstrap = new ServerBootstrap();
        NioEventLoopGroup boss = new NioEventLoopGroup();
        NioEventLoopGroup worker = new NioEventLoopGroup();
        try {
            // set the thread pools
            serverBootstrap.group(boss, worker);
            // set the socket factory
            serverBootstrap.channel(NioServerSocketChannel.class);
            // set the pipeline
            serverBootstrap.childHandler(new ChannelInitializer<Channel>() {
                @Override
                protected void initChannel(Channel channel) throws Exception {
                    // reader idle 5 s, writer idle 5 s, all idle 10 s
                    channel.pipeline().addLast(new IdleStateHandler(5, 5, 10));
                    channel.pipeline().addLast(new StringDecoder());
                    channel.pipeline().addLast(new StringEncoder());
                    channel.pipeline().addLast(new ServerHeartHandler());
                }
            });
            // TCP parameters
            // Netty3 style:
            // serverBootstrap.setOption("backlog", 1024);
            // serverBootstrap.setOption("tcpNoDelay", true);
            // serverBootstrap.setOption("keepAlive", true);
            serverBootstrap.option(ChannelOption.SO_BACKLOG, 2048);        // ServerSocketChannel: length of the pending-connection queue
            serverBootstrap.childOption(ChannelOption.SO_KEEPALIVE, true); // SocketChannel: TCP keep-alive probes detect dead connections
            serverBootstrap.childOption(ChannelOption.TCP_NODELAY, true);  // SocketChannel: disable Nagle's algorithm (no delayed sending)
            // bind the port and wait until the bind has completed
            ChannelFuture channelFuture = serverBootstrap.bind(8888).sync();
            System.out.println("Netty Server Start..");
            channelFuture.channel().closeFuture().sync();
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            boss.shutdownGracefully();
            worker.shutdownGracefully();
        }
    }
}
```

ServerHeartHandler.java

```java
package com.proto.heart;

import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelFutureListener;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.SimpleChannelInboundHandler;
import io.netty.handler.timeout.IdleState;
import io.netty.handler.timeout.IdleStateEvent;

import java.util.Date;

/**
 * @author hzk
 * @date 2018/9/19
 */
public class ServerHeartHandler extends SimpleChannelInboundHandler<String> {

    @Override
    protected void messageReceived(ChannelHandlerContext channelHandlerContext, String s) throws Exception {
        System.out.println("Receive:" + s);
        channelHandlerContext.writeAndFlush("get:" + new Date());
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        System.out.println("exceptionCaught");
    }

    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        System.out.println("channelActive");
    }

    @Override
    public void channelInactive(ChannelHandlerContext ctx) throws Exception {
        System.out.println("channelInactive");
    }

    @Override
    public void userEventTriggered(final ChannelHandlerContext ctx, Object evt) throws Exception {
        if (evt instanceof IdleStateEvent) {
            IdleStateEvent idleStateEvent = (IdleStateEvent) evt;
            if (idleStateEvent.state() == IdleState.ALL_IDLE) {
                // clear the timed-out session: notify the client, then close once the write completes
                ChannelFuture channelFuture = ctx.writeAndFlush("Time out,you will close!");
                channelFuture.addListener(new ChannelFutureListener() {
                    @Override
                    public void operationComplete(ChannelFuture channelFuture) throws Exception {
                        ctx.channel().close();
                    }
                });
            }
        } else {
            super.userEventTriggered(ctx, evt);
        }
    }
}
```

Output

```
Netty Server Start..
channelActive
channelInactive
```

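A heartbeat is simply a way to check the state of a session. It is an ordinary request whose data and business logic are both simple.

For the server, heartbeats can be used to periodically clean up idle sessions (observed as channelInactive in Netty5 and channelClosed in Netty3).

For the client, heartbeats can be used to detect whether the session has dropped and whether to reconnect, and to measure network latency.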