Timing test of classification models (decision tree, logistic regression, LinearSVC, SVC)

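When you first learn data-mining algorithms, it is often unclear which algorithm to pick for a concrete task. This article compares a few basic ones by running time: DecisionTreeClassifier, LogisticRegression, LinearSVC, and SVC (Gaussian kernel). SVC(kernel = linear) is not included in the comparison; LinearSVC is enough to cover the linear case.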

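The dataset comes from make_moons in sklearn.datasets; the figure below shows the data with 10% noise added. In terms of classification quality, logistic regression and LinearSVC are bound to do poorly on this kind of nonlinear problem, but this test is mainly about running time, so classification quality is set aside for now.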
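For readers who have not used the dataset, here is a minimal sketch of generating it. The sample count of 1000 is an arbitrary choice for illustration and is not one of the benchmark sizes; noise = 0.2 and random_state = 42 are the values used in the benchmark code.

```python
from sklearn.datasets import make_moons

# 1000 samples is an illustrative size, not one used in the benchmark.
X, y = make_moons(n_samples=1000, noise=0.2, random_state=42)

print(X.shape)                  # (n_samples, 2): two features per sample
print(sorted(set(y.tolist())))  # the two class labels
```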

Here is the code, which also documents the procedure:

```python
import time

import numpy as np
from sklearn.datasets import make_moons
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC, LinearSVC
from sklearn.tree import DecisionTreeClassifier

# Sample sizes: 10k to 1000k in steps of 10k (100 values).
n_samples = np.linspace(10000, 1000000, 100)
# n_features = np.linspace(10, 100, 10)

k = 0  # running count of fitted models
list_DecisionTreeClassifier = []
list_LogisticRegression = []
list_LinearSVC = []
list_SVC = []

for n_sample in n_samples:
    # for n_feature in n_features:
    # time.clock() was removed in Python 3.8; time.perf_counter() is used instead.
    # The timer starts once per sample size and is not reset per classifier,
    # so each recorded time also covers data generation, the classifiers
    # fitted earlier in the list, and the predict/score calls.
    start = time.perf_counter()
    data = make_moons(n_samples=int(n_sample), noise=0.2, random_state=42)
    x = data[0]
    y = data[1]
    x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=42)

    tree_clf = DecisionTreeClassifier()
    log_clf = LogisticRegression()
    linearsvc_clf = LinearSVC()
    svc_clf = SVC()  # default kernel is 'rbf' (Gaussian)

    for clf in [tree_clf, log_clf, linearsvc_clf, svc_clf]:
        clf.fit(x_train, y_train)
        y_pred = clf.predict(x_test)
        k = k + 1
        print(clf.__class__.__name__, ' n_sample =', int(n_sample),
              '\ntrain_score: ', clf.score(x_train, y_train),
              'test_score: ', clf.score(x_test, y_test),
              '\naccuracy_score: ', accuracy_score(y_test, y_pred),
              '\nrecall_score: ', recall_score(y_test, y_pred, average='micro'),
              '\ntime: ', time.perf_counter() - start,
              '\nk =', k,
              '\n---------------------------------------------------------------')
        # Store the elapsed time in the list that belongs to this classifier.
        if clf is tree_clf:
            list_DecisionTreeClassifier.append(time.perf_counter() - start)
        elif clf is log_clf:
            list_LogisticRegression.append(time.perf_counter() - start)
        elif clf is linearsvc_clf:
            list_LinearSVC.append(time.perf_counter() - start)
        elif clf is svc_clf:
            list_SVC.append(time.perf_counter() - start)

print(list_DecisionTreeClassifier)
print(list_LogisticRegression)
print(list_LinearSVC)
print(list_SVC)
```

The data are as follows:

The first column is the number of samples, from 10k to 1000k (one million); every sample has two features.

The whole run took 20.24 hours: 0.047 h for the decision tree, 0.065 h for logistic regression, 0.387 h for LinearSVC, and 19.74 h for SVC (Gaussian kernel).

| n_samples (k) | DecisionTreeClassifier (s) | LogisticRegression (s) | LinearSVC (s) | SVC (s) |
| --- | --- | --- | --- | --- |
| 10 | 0.02883747 | 0.049836921 | 0.078581678 | 0.327533809 |
| 20 | 0.058209357 | 0.072491098 | 0.12397223 | 0.959392353 |
| 30 | 0.055744011 | 0.076332479 | 0.152550657 | 2.102193433 |
| 40 | 0.075380437 | 0.102246571 | 0.203200135 | 3.242318279 |
| 50 | 0.096630075 | 0.12982895 | 0.268338368 | 4.742749603 |
| 60 | 0.123576192 | 0.163956345 | 0.328781705 | 6.687300061 |
| 70 | 0.168099112 | 0.213670662 | 0.441686996 | 9.074958383 |
| 80 | 0.168602809 | 0.220825094 | 0.428130358 | 11.48451644 |
| 90 | 0.211460762 | 0.272062956 | 0.523074432 | 14.64752711 |
| 100 | 0.24415954 | 0.313279468 | 0.654271114 | 17.69263482 |
| 110 | 0.244321442 | 0.325576588 | 0.778064836 | 20.76720159 |
| 120 | 0.27956278 | 0.378553588 | 0.935907076 | 49.65587547 |
| 130 | 0.32607641 | 0.460912716 | 1.16475368 | 29.29933011 |
| 140 | 0.346625301 | 0.48400057 | 1.315402189 | 34.02656613 |
| 150 | 0.372370017 | 0.586220849 | 1.584742415 | 40.06493316 |
| 160 | 0.423706959 | 0.646293885 | 1.848321679 | 44.7496937 |
| 170 | 0.440558938 | 0.63655953 | 1.787725574 | 49.81503784 |
| 180 | 0.486027535 | 0.673445079 | 2.250058327 | 58.382703 |
| 190 | 0.557732921 | 0.834294278 | 2.64545725 | 96.63282664 |
| 200 | 0.55375261 | 0.852491846 | 2.658757889 | 94.75976404 |
| 210 | 0.660044253 | 0.941345617 | 2.768064517 | 80.91920565 |
| 220 | 0.626086788 | 0.966818007 | 3.005038905 | 86.18817032 |
| 230 | 0.670140606 | 0.993128967 | 3.212830983 | 122.7775426 |
| 240 | 0.73641105 | 1.067132817 | 3.021734793 | 98.89337542 |
| 250 | 0.692669689 | 0.992732375 | 3.949486686 | 104.3569378 |
| 260 | 0.706089611 | 0.998963824 | 3.100860021 | 113.7740898 |
| 270 | 0.793118174 | 1.090411634 | 4.163498036 | 124.1380248 |
| 280 | 0.812848696 | 1.137654793 | 3.530820015 | 134.5863551 |
| 290 | 0.840621207 | 1.202555292 | 3.611334561 | 165.7891099 |
| 300 | 0.820008109 | 1.187318188 | 3.697400011 | 180.7568949 |
| 310 | 0.915756437 | 1.289138554 | 3.965521402 | 227.2264197 |
| 320 | 0.938713664 | 1.328560853 | 4.29018248 | 202.5930091 |
| 330 | 0.984258369 | 1.357701372 | 4.529406067 | 188.1415734 |
| 340 | 1.0649243 | 1.481109295 | 4.639771011 | 252.7613858 |
| 350 | 1.041193263 | 1.430132414 | 4.824420714 | 247.1732288 |
| 360 | 1.123670843 | 1.524292161 | 5.725227875 | 264.2904282 |
| 370 | 1.152559513 | 1.615813865 | 6.322684206 | 247.99097 |
| 380 | 1.142039723 | 1.637767849 | 8.954775502 | 266.9802446 |
| 390 | 1.190608269 | 1.66227408 | 5.425929867 | 319.2140473 |
| 400 | 1.214198991 | 1.729258832 | 9.016491641 | 321.7064201 |
| 410 | 1.26459413 | 1.793089668 | 6.083544475 | 299.8074605 |
| 420 | 1.319481026 | 1.837493583 | 8.346132674 | 319.218243 |
| 430 | 1.341065817 | 1.880541944 | 6.638102447 | 329.2450695 |
| 440 | 1.359091242 | 1.96505313 | 7.579508121 | 349.2920359 |
| 450 | 1.430822091 | 2.01616922 | 10.7825815 | 377.9132338 |
| 460 | 1.481463267 | 2.074451365 | 7.030453175 | 562.6923841 |
| 470 | 1.524301847 | 2.081178761 | 8.532552788 | 480.4078591 |
| 480 | 1.549834016 | 2.171983666 | 10.97452927 | 495.310479 |
| 490 | 1.605759025 | 2.22234255 | 9.559583592 | 440.4521703 |
| 500 | 1.6389734 | 2.281242692 | 9.421958412 | 465.9535545 |
| 510 | 1.591427192 | 2.251642757 | 10.1713034 | 481.42287 |
| 520 | 1.688606351 | 2.307748213 | 9.904361544 | 511.5490605 |
| 530 | 1.736909211 | 2.396495156 | 10.64585661 | 546.4557019 |
| 540 | 1.689089291 | 2.347317468 | 10.10210958 | 599.3554054 |
| 550 | 1.716026275 | 2.431734004 | 10.73117288 | 640.8862102 |
| 560 | 1.843366078 | 2.547667842 | 11.00812391 | 656.5034435 |
| 570 | 1.864082683 | 2.600270391 | 12.9047205 | 605.5532614 |
| 580 | 1.906778458 | 2.62118294 | 14.64065912 | 697.0465515 |
| 590 | 1.878746626 | 2.672614256 | 15.51130785 | 663.513101 |
| 600 | 1.947375588 | 2.750074517 | 13.53774197 | 675.039494 |
| 610 | 2.027375089 | 2.838580405 | 12.67999563 | 710.3224443 |
| 620 | 2.033808294 | 2.862703883 | 13.61235801 | 898.6646633 |
| 630 | 2.067229959 | 2.814968374 | 15.87992865 | 835.0988546 |
| 640 | 2.076654347 | 2.791250898 | 14.76721681 | 764.5906833 |
| 650 | 2.131187271 | 2.987456843 | 14.93312678 | 810.0983294 |
| 660 | 2.241519836 | 3.128689821 | 16.9502807 | 1130.963952 |
| 670 | 2.239337611 | 3.057659719 | 16.46323553 | 845.722558 |
| 680 | 2.275467891 | 3.105867099 | 18.35621547 | 1154.157348 |
| 690 | 2.291907503 | 3.190526626 | 16.15837223 | 991.0283706 |
| 700 | 2.3328265 | 3.260851552 | 16.92598729 | 1060.819834 |
| 710 | 2.489256729 | 3.366372152 | 17.3391335 | 977.3716089 |
| 720 | 2.55171679 | 3.479403092 | 17.81736425 | 1005.078345 |
| 730 | 2.540897272 | 3.498850215 | 17.90919011 | 1069.855728 |
| 740 | 2.612989841 | 3.515815386 | 22.03596312 | 1944.74973 |
| 750 | 2.620192428 | 3.612667973 | 22.28870128 | 1642.03376 |
| 760 | 2.628637376 | 3.509083839 | 25.85574401 | 1309.324554 |
| 770 | 2.795656584 | 3.805254498 | 27.374286 | 1723.238744 |
| 780 | 2.739056842 | 3.651658533 | 26.68898966 | 1251.787643 |
| 790 | 2.700334737 | 3.697969576 | 23.75833155 | 1300.57802 |
| 800 | 2.851554748 | 3.932474465 | 30.10339926 | 1288.789001 |
| 810 | 2.840837354 | 3.897600937 | 22.98218657 | 1345.440995 |
| 820 | 2.874908843 | 3.907148204 | 27.86596795 | 1301.799378 |
| 830 | 2.902940953 | 3.994063297 | 24.41901289 | 1361.743071 |
| 840 | 2.98235124 | 4.111643837 | 26.81874638 | 1404.069808 |
| 850 | 3.030584081 | 4.174068749 | 30.87201563 | 1454.968759 |
| 860 | 3.107563339 | 4.081504226 | 27.35487237 | 1490.286797 |
| 870 | 3.122779963 | 4.183902183 | 28.26201365 | 1696.073407 |
| 880 | 3.063565703 | 4.260023773 | 35.9357651 | 1633.272899 |
| 890 | 3.217063697 | 4.306676612 | 30.60561677 | 1660.043353 |
| 900 | 3.180678247 | 4.403967858 | 29.99422963 | 1621.002932 |
| 910 | 3.347478541 | 4.560222623 | 44.98514177 | 1696.784396 |
| 920 | 3.366416156 | 4.629918758 | 34.08945046 | 1743.880592 |
| 930 | 3.472418035 | 4.718100011 | 32.78220483 | 1740.061516 |
| 940 | 3.459947942 | 4.658998114 | 34.14549835 | 1863.307061 |
| 950 | 3.541337038 | 4.823270514 | 36.36540129 | 1887.806115 |
| 960 | 3.608922629 | 4.856454168 | 47.56512038 | 1925.553855 |
| 970 | 3.689194182 | 5.011694898 | 37.64121789 | 1994.018002 |
| 980 | 3.640454324 | 4.900210474 | 49.82976018 | 2009.114227 |
| 990 | 3.63392038 | 4.985876288 | 40.58021385 | 1957.2254 |
| 1000 | 3.665191647 | 5.039182628 | 46.99966042 | 2033.99081 |

The picture is clear at a glance. A line chart is hard to draw because the SVC times dwarf all the others.
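The benchmark script starts one timer per sample size and never resets it, so each model's figure also includes whatever ran before it in the loop. If the goal is to isolate the fit time of each classifier, a variant along the following lines could be used. This is only a sketch: the helper `time_fit` and the sample size of 5000 are illustrative assumptions, not part of the original test.

```python
import time

from sklearn.datasets import make_moons
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVC, LinearSVC
from sklearn.tree import DecisionTreeClassifier


def time_fit(clf, X, y):
    """Return the wall-clock seconds spent inside clf.fit(X, y) only."""
    start = time.perf_counter()
    clf.fit(X, y)
    return time.perf_counter() - start


# Hypothetical small size so the sketch finishes quickly.
X, y = make_moons(n_samples=5000, noise=0.2, random_state=42)

fit_times = {}
for clf in [DecisionTreeClassifier(), LogisticRegression(), LinearSVC(), SVC()]:
    # The timer is restarted for every classifier, so times are not cumulative.
    fit_times[type(clf).__name__] = time_fit(clf, X, y)

for name, seconds in fit_times.items():
    print(f"{name}: {seconds:.4f} s")
```

Timing prediction and scoring separately would follow the same pattern, with the timer wrapped around `clf.predict` or `clf.score` instead.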

I also fitted a function for each model, with sample size as the independent variable and time as the dependent variable:

DecisionTreeClassifier (linear fit): R² = 0.9937

LogisticRegression (linear fit): R² = 0.9953

LinearSVC (quadratic fit): R² = 0.9639

SVC (quadratic fit): R² = 0.9675

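For the decision tree and logistic regression the fit is a linear function: the time spent fitting the model is proportional to the sample size.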

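The two support vector machines are another story: both fits are quadratic. They still differ, though. The slope of a quadratic $ax^2 + bx + c$ is its derivative $2ax + b$, and the $a$ for SVC is two orders of magnitude larger than the one for LinearSVC.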
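The fitting step can be sketched with NumPy. The arrays below take every tenth row of the timing table (100k, 200k, ..., 1000k) for the decision tree and SVC columns, so the coefficients and R² values will not match the figures quoted above, which were fitted on all 100 rows. The helper name `fit_poly` is an assumption made for this example.

```python
import numpy as np

# Every tenth row of the timing table: n_samples in thousands, time in seconds.
n = np.array([100, 200, 300, 400, 500, 600, 700, 800, 900, 1000], dtype=float)
tree_t = np.array([0.24415954, 0.55375261, 0.820008109, 1.214198991, 1.6389734,
                   1.947375588, 2.3328265, 2.851554748, 3.180678247, 3.665191647])
svc_t = np.array([17.69263482, 94.75976404, 180.7568949, 321.7064201, 465.9535545,
                  675.039494, 1060.819834, 1288.789001, 1621.002932, 2033.99081])


def fit_poly(x, y, degree):
    """Least-squares polynomial fit; returns (coefficients, R^2)."""
    coeffs = np.polyfit(x, y, degree)  # highest-order coefficient first
    residuals = y - np.polyval(coeffs, x)
    r2 = 1.0 - np.sum(residuals ** 2) / np.sum((y - y.mean()) ** 2)
    return coeffs, r2


tree_coeffs, tree_r2 = fit_poly(n, tree_t, 1)  # linear: t = b*n + c
svc_coeffs, svc_r2 = fit_poly(n, svc_t, 2)     # quadratic: t = a*n^2 + b*n + c

print("decision tree:", tree_coeffs, "R^2 =", tree_r2)
print("SVC:", svc_coeffs, "R^2 =", svc_r2)
```

`svc_coeffs[0]` is the quadratic coefficient a discussed above; repeating the fit on the LinearSVC column gives the value to compare it with.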

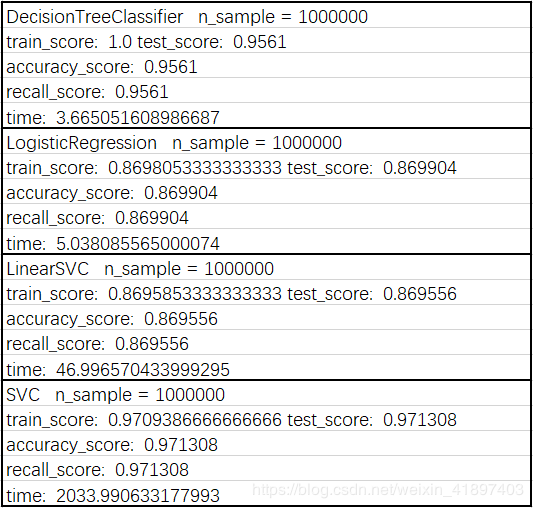

Here, too, is each model's accuracy at one million samples:

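On nonlinear data, logistic regression and the linear support vector machine both perform relatively poorly, while the decision tree and SVC (Gaussian kernel) classify well.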
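The gap is easy to reproduce at a small scale. The sketch below uses 2000 samples (an arbitrary size chosen so it runs in seconds) and also includes a polynomial-feature logistic regression, the variant suggested in the recommendations that follow. The degree of 3, the scaling step, and max_iter = 1000 are assumptions made for this example and were not part of the original test.

```python
from sklearn.datasets import make_moons
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Illustrative size; the original test went up to one million samples.
X, y = make_moons(n_samples=2000, noise=0.2, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

models = {
    "LogisticRegression": LogisticRegression(),
    # Assumed setup: cubic features, scaled, then a plain logistic regression.
    "PolynomialLogisticRegression": make_pipeline(
        PolynomialFeatures(degree=3, include_bias=False),
        StandardScaler(),
        LogisticRegression(max_iter=1000),
    ),
    "DecisionTreeClassifier": DecisionTreeClassifier(random_state=42),
    "SVC": SVC(),  # default RBF (Gaussian) kernel
}

test_accuracy = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    test_accuracy[name] = model.score(X_test, y_test)
    print(f"{name}: {test_accuracy[name]:.3f}")
```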

From this, a few rules of thumb for choosing a model:

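1. If you just want to try something first, start with logistic regression and a decision tree.

2. When the data are nonlinear, avoid linear models such as logistic regression (polynomial logistic regression is worth a try, though).

3. When both the sample count and the feature count are small, the running times are similar, so try several models and keep the one that works best.

4. With many samples or features and little patience, do not pick an SVM! If you must use one, prefer a linear SVM.

5. Before using an ensemble method, estimate the fitting time from the base_model.

6. A small tip on SVMs: fit models on random subsamples, the way a random forest does. Each model then handles less data, so the total time drops sharply, and the fits can run in parallel. This takes far less time than fitting a single model on all the samples.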
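One possible way to implement the subsampling idea in item 6 is scikit-learn's BaggingClassifier wrapped around SVC. This is a sketch under assumptions: the original does not say which tool was used, and the values of n_estimators, max_samples, and the sample size are illustrative.

```python
from sklearn.datasets import make_moons
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Illustrative size; larger datasets are where the saving matters.
X, y = make_moons(n_samples=5000, noise=0.2, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

# Each of the 10 SVCs is fitted on a bootstrap sample of 10% of the training
# data, so every individual fit sees far fewer rows than the full set.
bagged_svc = BaggingClassifier(
    SVC(),
    n_estimators=10,
    max_samples=0.1,
    n_jobs=None,  # set to -1 to fit the estimators in parallel
    random_state=42,
)
bagged_svc.fit(X_train, y_train)

bagged_accuracy = bagged_svc.score(X_test, y_test)
print(f"bagged SVC test accuracy: {bagged_accuracy:.3f}")
```

Because SVC fitting time grows roughly quadratically with the sample count in the measurements above, ten fits on a tenth of the data each should cost much less than one fit on everything, before any gain from parallelism.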