NIST 2019 Speaker Recognition Evaluation: CTS Challenge

https://www.nist.gov/itl/iad/mig/nist-2019-speaker-recognition-evaluation

https://www.nist.gov/sites/default/files/documents/2019/07/22/2019_nist_speaker_recognition_challenge_v8.pdf

Contents

- 1 Introduction

- 2 Task Description

- 2.1 Task Definition

- 2.2 Training Condition

- 2.3 Enrollment Conditions

- 2.4 Test Conditions

- 3 Performance Measurement

- 3.1 Primary Metric

- 4 Data Description

- 4.1 Data Organization

- 4.2 Trial File

- 4.3 Development Set

- 4.4 Training Set

- 5 Evaluation Rules and Requirements

- 6 Evaluation Protocol

- 6.1 Evaluation Account

- 6.2 Evaluation Registration

- 6.3 Data License Agreement

- 6.4 Submission Requirements

- 6.4.1 System Output Format

- 6.4.2 System Description Format

- 7 Schedule

1 Introduction

The 2019 speaker recognition evaluation (SRE19) is the next in an ongoing series of speaker recognition evaluations conducted by the US National Institute of Standards and Technology (NIST) since 1996.

The objectives of the evaluation series are (1) for NIST to effectively measure system-calibrated performance of the current state of technology, (2) to provide a common test bed that enables the research community to explore promising new ideas in speaker recognition, and (3) to support the community in their development of advanced technology incorporating these ideas.

The evaluations are intended to be of interest to all researchers working on the general problem of text-independent speaker recognition.

To this end, the evaluations are designed to focus on core technology issues and to be simple and accessible to those wishing to participate.

SRE19 will consist of two separate activities: 1) a leaderboard-style challenge using conversational telephone speech (CTS) extracted from the unexposed portions of the Call My Net 2 (CMN2) corpus, and 2) a regular evaluation using audio-visual material extracted from the unexposed portions of the Video Annotation for Speech Technology (VAST) corpus.

This document describes the task, the performance metric, data, and the evaluation protocol as well as rules/requirements for Part 1 (i.e., the CTS Challenge).

The evaluation plan for Part 2 will be described in another document.

Note that in order to participate in the regular evaluation (i.e., Part 2), one must first complete Part 1.

The SRE19 will be organized in a similar manner to SRE18, except that for this year's evaluation only the open training condition will be offered (see Section 2.2).

Participation in the SRE19 CTS Challenge is open to all who find the evaluation of interest and are able to comply with the evaluation rules set forth in this plan.

There is no cost to participate in the SRE19 CTS Challenge and the evaluation web platform, data, and the scoring software will be available free of charge.

Participating teams in the SRE19 CTS Challenge will have the option to attend the post-evaluation workshop, to be co-located with the IEEE ASRU workshop in Sentosa, Singapore, on December 12-13, 2019. Workshop registration is required for attendance.

2 Task Description

2.1 Task Definition

The task for the SRE19 CTS Challenge is speaker detection: given a segment of speech and the target speaker enrollment data, automatically determine whether the target speaker is speaking in the segment.

A segment of speech (test segment) along with the enrollment speech segment(s) from a designated target speaker constitutes a trial.

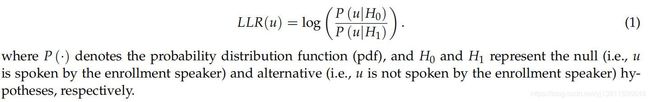

The system is required to process each trial independently and to output a log-likelihood ratio (LLR), using natural (base e) logarithm, for that trial.

The LLR for a given trial including a test segment u is defined as follows:
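For reference, the usual NIST SRE form of this definition is sketched below. It assumes that H0 is the hypothesis that the target speaker is present in u, H1 is the hypothesis that the target speaker is not present, and P(·) is a probability density function. Image 1 above should be checked for the exact notation used in this plan.

```latex
\mathrm{LLR}(u) = \log\left(\frac{P(u \mid H_0)}{P(u \mid H_1)}\right)
```

With the natural logarithm, positive values favor the target hypothesis and negative values favor the non-target hypothesis.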

2.2 Training Condition

The training condition is defined as the amount of data/resources used to build a Speaker Recognition (SR) system.

Unlike SRE16 and SRE18, this year’s evaluation only offers the open training condition that allows the use of any publicly available and/or proprietary data for system training and development.

The motivation behind this decision is twofold.

First, results from the most recent NIST SREs (i.e., SRE16 and SRE18) indicate limited performance improvements, if any, from unconstrained training compared to fixed training.

Participants cited lack of time and/or resources during the evaluation period as the reason for not demonstrating significant improvement with open vs. fixed training.

Second, the number of publicly available large-scale data resources for speaker recognition has dramatically increased over the past few years (e.g., see VoxCeleb and SITW).

Therefore, removing the fixed training condition will allow more in-depth exploration into the gains that can be achieved with the availability of unconstrained resources, given the success of data-hungry neural-network-based approaches in the most recent evaluation (i.e., SRE18).

For the sake of convenience, in particular for new and first-time participants, NIST will also provide an in-domain Development set that can be used for system training and development purposes:

• 2019 NIST Speaker Recognition Evaluation CTS Challenge Development Set (LDC2019E59)

This Development set simply combines the SRE18 CTS Dev and Test sets into one package.

Participants can obtain this dataset through the evaluation web platform (https://sre.nist.gov) after they have signed the LDC data license agreement.

Although SRE19 allows unconstrained system training and development, participating teams must provide a sufficient description of the speech and non-speech data resources, as well as the pre-trained models, used during the training and development of their systems (see Section 6.4.2).

2.3 Enrollment Conditions

The enrollment condition is defined as the number of speech segments provided to create a target speaker model.

As in SRE16 and SRE18, gender labels will not be provided.

There are two enrollment conditions for the SRE19 CTS Challenge:

• One segment: the system is given only one segment, containing approximately 60 seconds of speech (as determined by speech activity detector (SAD) output), to build the model of the target speaker.

• Three segments: the system is given three segments, each containing approximately 60 seconds of speech and all from the same phone number, to build the model of the target speaker.

The three-segment condition only involves Public Switched Telephone Network (PSTN) data.
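The sketch below shows one way to handle both enrollment conditions. It averages length-normalized speaker embeddings into a single model and scores a test embedding by cosine similarity. This is a common technique chosen here only for illustration; the plan does not prescribe it. Embedding extraction is outside the sketch, and the function names are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]


def l2_normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit Euclidean length (an all-zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def build_speaker_model(enroll_embeddings: Sequence[Sequence[float]]) -> Vector:
    """Average the length-normalized embeddings of the one or three enrollment segments."""
    if len(enroll_embeddings) not in (1, 3):
        raise ValueError("SRE19 CTS enrollment uses either one or three segments")
    normed = [l2_normalize(e) for e in enroll_embeddings]
    dim = len(normed[0])
    mean = [sum(e[i] for e in normed) / len(normed) for i in range(dim)]
    return l2_normalize(mean)


def cosine_score(model: Sequence[float], test_embedding: Sequence[float]) -> float:
    """Cosine similarity between a unit-length speaker model and a test embedding."""
    t = l2_normalize(test_embedding)
    return sum(m * x for m, x in zip(model, t))
```

A cosine score is not itself a log-likelihood ratio. A calibration step, for example a linear mapping fitted on the Development set, would still be needed before submitting LLRs.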

2.4 Test Conditions

For the SRE19 CTS Challenge, the trials will be divided into two subsets: a progress subset and an evaluation subset.

The progress subset will comprise 30% of the trials and will be used to monitor progress in the leaderboard.

The remaining 70% of the trials will form the evaluation subset, which will be used to generate the official final results at the end of the challenge.
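The plan does not say how NIST assigns trials to the two subsets. To mimic a 30%/70% progress/evaluation split on the Development set for internal experiments, a seeded random partition is one simple option. The function name and default seed below are illustrative assumptions.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_progress_evaluation(trials: Sequence[T], progress_fraction: float = 0.3,
                              seed: int = 2019) -> Tuple[List[T], List[T]]:
    """Randomly assign about progress_fraction of the trials to a 'progress' subset, the rest to 'evaluation'."""
    if not 0.0 < progress_fraction < 1.0:
        raise ValueError("progress_fraction must lie strictly between 0 and 1")
    indices = list(range(len(trials)))
    random.Random(seed).shuffle(indices)  # a fixed seed makes the split reproducible
    n_progress = round(progress_fraction * len(trials))
    # Sorting the chosen indices keeps the original trial order inside each subset.
    progress_idx = sorted(indices[:n_progress])
    evaluation_idx = sorted(indices[n_progress:])
    return [trials[i] for i in progress_idx], [trials[i] for i in evaluation_idx]
```

Keeping the seed fixed lets scores on the "progress" part be tracked across runs like a local leaderboard, while the "evaluation" part is held back for a final check.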

The challenge test conditions are as follows:

• The speech duration of the test segments will be uniformly sampled, ranging approximately from 10 to 60 seconds.

• Trials will be conducted with test segments from both the same and different phone numbers as the enrollment segment(s).

• There will be no cross-gender trials.

3 Performance Measurement

3.1 Primary Metric

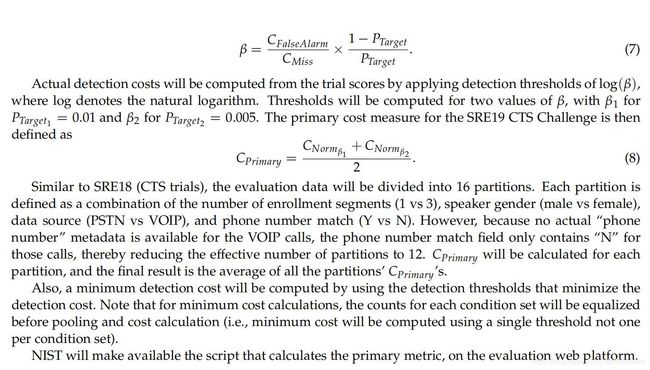

A basic cost model is used to measure the speaker detection performance and is defined as a weighted sum of false-reject (missed detection) and false-alarm error probabilities for some decision threshold θ as follows:

(The formulas for this part are given in the image above.)
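The sketch below implements the cost model described above. It assumes the form used in recent NIST SRE plans: C_Det(θ) = C_Miss · P_Target · P_Miss(θ) + C_FalseAlarm · (1 − P_Target) · P_FalseAlarm(θ), normalized by C_Miss · P_Target. It also assumes the decision threshold θ = log(β), with β = C_FalseAlarm · (1 − P_Target) / (C_Miss · P_Target), applied directly to the submitted LLRs. The defaults C_Miss = C_FalseAlarm = 1 and P_Target = 0.01 are assumptions. The image above gives the parameters actually used, and the official primary metric may combine more than one operating point.

```python
import math
from typing import Sequence


def detection_cost(target_llrs: Sequence[float], nontarget_llrs: Sequence[float],
                   threshold: float, c_miss: float = 1.0, c_fa: float = 1.0,
                   p_target: float = 0.01) -> float:
    """Normalized detection cost C_Det(threshold) / (c_miss * p_target)."""
    if not target_llrs or not nontarget_llrs:
        raise ValueError("need at least one target and one non-target trial")
    # Miss: a target trial whose LLR falls below the threshold.
    p_miss = sum(s < threshold for s in target_llrs) / len(target_llrs)
    # False alarm: a non-target trial whose LLR is at or above the threshold.
    p_fa = sum(s >= threshold for s in nontarget_llrs) / len(nontarget_llrs)
    c_det = c_miss * p_target * p_miss + c_fa * (1.0 - p_target) * p_fa
    return c_det / (c_miss * p_target)


def actual_cost(target_llrs: Sequence[float], nontarget_llrs: Sequence[float],
                c_miss: float = 1.0, c_fa: float = 1.0, p_target: float = 0.01) -> float:
    """Normalized cost at the Bayes threshold log(beta) implied by the cost parameters."""
    beta = c_fa * (1.0 - p_target) / (c_miss * p_target)
    return detection_cost(target_llrs, nontarget_llrs, math.log(beta), c_miss, c_fa, p_target)
```

The cost parameters fix the threshold, so actual_cost also penalizes poor calibration. LLRs that separate targets from non-targets well but are shifted by an offset still cross log(β) in the wrong places.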

4 Data Description

The data collected by the LDC as part of the CMN2 corpus will be used to compile the SRE19 CTS Challenge Development and Test sets.

The CMN2 data are composed of PSTN and VOIP data collected outside North America, spoken in Tunisian Arabic.

Recruited speakers (called claque speakers) made multiple calls to people in their social network (e.g., family, friends).

Claque speakers were encouraged to use different telephone instruments (e.g., cell phone, landline) in a variety of settings (e.g., noisy cafe, quiet office) for their initiated calls and were instructed to talk for at least 8 minutes on a topic of their choice.

All CMN2 segments will be encoded as a-law, sampled at 8 kHz, in SPHERE-formatted files.
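The audio is a-law coded inside NIST SPHERE files, so a front end has to expand it to linear PCM before feature extraction. The pure-Python sketch below assumes the usual SPHERE layout: an ASCII header beginning with `NIST_1A`, the header size in bytes on the second line, `name -type value` fields, and an `end_head` terminator. It decodes samples with the ITU-T G.711 A-law expansion. Multi-channel handling is simplified, and production pipelines would normally use an existing converter such as LDC's sph2pipe instead.

```python
from typing import Dict, List, Tuple


def alaw_to_linear(a_val: int) -> int:
    """Expand one 8-bit G.711 A-law code into a 16-bit linear PCM sample."""
    a_val ^= 0x55                     # undo the even-bit inversion applied by the encoder
    t = (a_val & 0x0F) << 4           # 4-bit mantissa (step within a segment)
    seg = (a_val & 0x70) >> 4         # 3-bit segment (exponent) number
    if seg == 0:
        t += 8
    elif seg == 1:
        t += 0x108
    else:
        t = (t + 0x108) << (seg - 1)
    return t if a_val & 0x80 else -t  # bit 7 set means a positive sample


def parse_sphere_header(data: bytes) -> Tuple[Dict[str, str], int]:
    """Return the SPHERE header fields (name -> value string) and the header size in bytes."""
    if not data.startswith(b"NIST_1A"):
        raise ValueError("not a NIST SPHERE file")
    header_size = int(data.split(b"\n", 2)[1].decode("ascii").strip())
    fields: Dict[str, str] = {}
    for line in data[:header_size].decode("ascii", errors="replace").split("\n")[2:]:
        line = line.strip()
        if line == "end_head":
            break
        parts = line.split(None, 2)   # e.g. "sample_coding -s4 alaw" -> name, type, value
        if len(parts) == 3:
            fields[parts[0]] = parts[2]
    return fields, header_size


def read_alaw_sphere(data: bytes) -> List[List[int]]:
    """Decode an A-law SPHERE file into one list of linear samples per channel."""
    fields, header_size = parse_sphere_header(data)
    if not fields.get("sample_coding", "").startswith("alaw"):
        raise ValueError("expected A-law sample coding")
    channels = int(fields.get("channel_count", "1"))
    samples = [alaw_to_linear(b) for b in data[header_size:]]  # one byte per A-law sample
    # Multi-channel samples are interleaved (ch0, ch1, ch0, ch1, ...).
    return [samples[c::channels] for c in range(channels)]
```

To use it on a real file, read the file in binary mode and pass the resulting bytes to read_alaw_sphere.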

The Development and Test sets will be distributed by NIST via the online evaluation platform (https://sre.nist.gov).

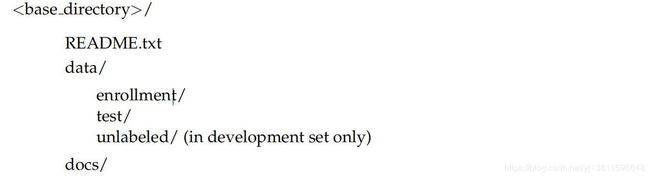

4.1 Data Organization

The Development and Test sets follow a similar directory structure:

4.2 Trial File

The trial file, named sre19_cts_challenge_trials.tsv and located in the docs directory, is composed of a header and a set of records, where each record describes a given trial.

Each record is a single line containing three fields separated by a tab character, in the following format:
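The three fields themselves are not reproduced above. As an illustration only, the sketch below reads a tab-separated trial file (a header line plus records) with Python's standard csv module. The column names in TRIAL_FIELDS are hypothetical placeholders; replace them with the header found in the actual file.

```python
import csv
from typing import Dict, Iterable, List

# Hypothetical column names for the three trial fields; replace them with the
# header actually found in docs/sre19_cts_challenge_trials.tsv.
TRIAL_FIELDS = ("modelid", "segmentid", "side")


def read_trials(lines: Iterable[str]) -> List[Dict[str, str]]:
    """Parse a header line plus tab-separated records into one dict per trial, in file order."""
    reader = csv.DictReader(lines, delimiter="\t", quoting=csv.QUOTE_NONE)
    missing = set(TRIAL_FIELDS) - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"trial file header lacks columns: {sorted(missing)}")
    return [dict(row) for row in reader]
```

For a real file, open it with `newline=""` and pass the file handle to read_trials. The list it returns preserves the trial order, which the system output file must follow.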

4.3 Development Set

Participants in the SRE19 CTS Challenge will receive data for development experiments that will mirror the evaluation conditions.

The development data will simply combine the SRE18 CTS Dev and Test sets, and will include:

• 213 speakers from the CMN2 portion of SRE18

• Associated metadata, which will be listed in a file located in the docs directory as outlined in Section 4.1.

The file contains information about the segments and speakers from the CMN2 portion of SRE18, and includes the following fields:

- segmentid (segment identifier)

- subjectid (LDC speaker ID)

- gender (male or female)

- partition (enrollment, test, or unlabeled)

- phone_number (anonymized phone number)

- speech duration (segment speech duration)

- data source (CMN2)
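The sketch below loads this metadata and separates the partitions. It assumes the file is tab-separated, with a header line whose column names match the field names listed above; only segmentid, subjectid, and partition are used. The function names are hypothetical.

```python
import csv
from collections import defaultdict
from typing import Dict, Iterable, List


def load_segment_metadata(lines: Iterable[str]) -> Dict[str, List[Dict[str, str]]]:
    """Group metadata rows by their 'partition' value (enrollment, test, or unlabeled)."""
    by_partition: Dict[str, List[Dict[str, str]]] = defaultdict(list)
    for row in csv.DictReader(lines, delimiter="\t"):
        by_partition[row["partition"]].append(dict(row))
    return dict(by_partition)


def segments_per_speaker(rows: Iterable[Dict[str, str]]) -> Dict[str, List[str]]:
    """Map each labeled subjectid to its segmentids, skipping unlabeled rows (their IDs are not speakers)."""
    speakers: Dict[str, List[str]] = defaultdict(list)
    for row in rows:
        if row["partition"] != "unlabeled":
            speakers[row["subjectid"]].append(row["segmentid"])
    return dict(speakers)
```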

As part of the SRE19 CTS Challenge dev set, an unlabeled (i.e., no speaker ID, gender, or language labels) set of 2332 segments from the CMN2 collection, with speech duration uniformly distributed in the 10 s to 60 s range, will also be made available.

The segments are extracted from the non-claque (i.e., callee) side of the PSTN/VOIP calls.

NIST will provide phone number metadata for the unlabeled segments, with the caveat that the phone numbers for these segments are unaudited and may not necessarily be reliable indications of speaker IDs, because one phone number may be associated with multiple callees, and one callee may be associated with multiple phone numbers.

Also, note that for the unlabeled cuts, the subjectid field in the segment key file simply provides call IDs (not speaker IDs) prepended with the number 9.
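Given these caveats, the sketch below shows one way to handle the unlabeled rows. It recovers the call ID by dropping the leading 9 from subjectid, and it groups segments by phone_number only as noisy pseudo-labels, for example for unsupervised adaptation. The grouping strategy is an illustrative assumption, not part of the plan. The row dicts are assumed to use the metadata field names listed in this section.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def call_id_from_subjectid(subjectid: str) -> str:
    """For unlabeled cuts, subjectid is a call ID with the digit 9 prepended; strip that prefix."""
    if not subjectid.startswith("9"):
        raise ValueError(f"unexpected unlabeled subjectid: {subjectid!r}")
    return subjectid[1:]


def group_unlabeled_by_phone(rows: Iterable[Dict[str, str]]) -> Dict[str, List[str]]:
    """Group unlabeled segmentids by phone_number as noisy pseudo-speaker clusters.

    One phone number may cover several callees and one callee may use several
    numbers, so these groups must not be treated as true speaker labels.
    """
    groups: Dict[str, List[str]] = defaultdict(list)
    for row in rows:
        if row["partition"] == "unlabeled":
            groups[row["phone_number"]].append(row["segmentid"])
    return dict(groups)
```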

The development data may be used for any purpose.

4.4 Training Set

Section 2.2 describes the training condition for the SRE19 CTS Challenge (open training condition).

Participants are allowed to use any publicly available or proprietary data they have access to for system training and development purposes.

The SRE19 CTS Challenge participants will also receive a Development set (described in the previous section) that they can use for system training.

To obtain this Development data, participants must sign the LDC data license agreement which outlines the terms of the data usage.

5 Evaluation Rules and Requirements

The SRE19 CTS Challenge is conducted as an open evaluation where the test data is sent to the participants to process locally and submit the output of their systems to NIST for scoring.

As such, the participants have agreed to process the data in accordance with the following rules:

• The participants agree to make at least one valid submission for the open training condition.

• The participants agree to process each trial independently.

• That is, each decision for a trial is to be based only upon the specified test segment and target speaker enrollment data.

• The use of information about other test segments or other target speaker data is not allowed.

• The participants agree not to probe the enrollment or test segments via manual/human means, such as listening to the data or producing manual transcripts of the speech.

• The participants are allowed to use any automatically derived information for training, development, enrollment, or test segments.

• The participants are allowed to use information available in the SPHERE header.

• The participants may make multiple challenge submissions (up to 3 per day).

A leaderboard will be maintained by NIST indicating the best submission performance results thus far received and processed.

In addition to the above data processing rules, participants agree to comply with the following general requirements:

• The participants agree to the following guidelines governing the publication of the results:

- Participants are free to publish results for their own system but must not publicly compare their results with other participants (ranking, score differences, etc.) without explicit written consent from the other participants.

- While participants may report their own results, participants may not make advertising claims about their standing in the evaluation, regardless of rank, or winning the evaluation, or claim NIST endorsement of their system(s).

The following language in the U.S. Code of Federal Regulations (15 C.F.R. § 200.113) shall be respected (see http://www.ecfr.gov/cgi-bin/ECFR?page=browse): "No reference shall be made to NIST, or to reports or results furnished by NIST in any advertising or sales promotion which would indicate or imply that NIST approves, recommends, or endorses any proprietary product or proprietary material, or which has as its purpose an intent to cause directly or indirectly the advertised product to be used or purchased because of NIST test reports or results."

- At the conclusion of the evaluation, NIST generates a report summarizing the system results for conditions of interest, but these results/charts do not contain the participant names of the systems involved.

Participants may publish or otherwise disseminate these charts, unaltered and with appropriate reference to their source.

- The report that NIST creates should not be construed or represented as an endorsement of any participant's system or commercial product, or as official findings on the part of NIST or the U.S. Government.

Sites failing to meet the above-noted rules and requirements will be excluded from future evaluation participation, and their registrations will not be accepted until they commit to fully participate.

6 Evaluation Protocol

To facilitate information exchange between the participants and NIST, all evaluation activities are conducted over a web interface.

6.1 Evaluation Account

Participants must sign up for an evaluation account where they can perform various activities such as registering for the evaluation, signing the data license agreement, as well as uploading the submission and system description.

To sign up for an evaluation account, go to https://sre.nist.gov.

The password must be at least 12 characters long and must contain a mix of upper- and lower-case letters, numbers, and symbols.
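The function below is a quick local check of the stated password rules. It treats "symbols" as ASCII punctuation (Python's string.punctuation); that is an assumption, and the site's own validator may accept a different set.

```python
import string


def meets_password_policy(password: str) -> bool:
    """Check the stated rules: at least 12 characters, with upper case, lower case, digit, and symbol."""
    return (
        len(password) >= 12
        and any(c.isupper() for c in password)
        and any(c.islower() for c in password)
        and any(c.isdigit() for c in password)
        and any(c in string.punctuation for c in password)  # "symbol" assumed to mean ASCII punctuation
    )
```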

After the evaluation account is confirmed, the participant is asked to join a site or create one if it does not exist.

The participant is also asked to associate their site with a team, or to create one if it does not exist.

This allows multiple members with their individual accounts to perform activities on behalf of their site and/or team (e.g., make a submission) in addition to performing their own activities (e.g., requesting workshop invitation letter).

• A participant is defined as a member or representative of a site who takes part in the evaluation (e.g., John Doe)

• A site is defined as a single organization (e.g., NIST)

• A team is defined as a group of organizations collaborating on a task (e.g., Team1 consisting of NIST and LDC)

6.2 Evaluation Registration

One participant from a site must formally register the site to participate in the evaluation by agreeing to the terms of participation.

For more information about the terms of participation, see Section 5.

6.3 Data License Agreement

One participant from each site must sign the LDC data license agreement to obtain the development/training data for the SRE19 CTS Challenge.

6.4 Submission Requirements

Each team must make at least one valid submission for the challenge, processing all test segments.

Submissions with missing test segments will not pass the validation step and hence will be rejected.
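Incomplete submissions are rejected, so it can help to run a local pre-check before uploading. NIST's actual validation step is not described here. The checks below (missing, unexpected, duplicate, and non-finite scores) are assumptions about what a reasonable pre-check covers, and trial keys are assumed to be tuples of the trial-file fields.

```python
import math
from typing import Dict, Iterable, List, Tuple

TrialKey = Tuple[str, ...]


def validate_scores(trial_keys: List[TrialKey],
                    scored: Iterable[Tuple[TrialKey, float]]) -> List[str]:
    """Return problems that would likely make a submission fail; an empty list means none were found."""
    problems: List[str] = []
    seen: Dict[TrialKey, float] = {}
    for key, llr in scored:
        if key in seen:
            problems.append(f"duplicate trial {key}")
        if not math.isfinite(llr):
            problems.append(f"non-finite LLR for {key}")
        seen[key] = llr
    expected = set(trial_keys)
    problems += [f"missing trial {k}" for k in trial_keys if k not in seen]
    problems += [f"unexpected trial {k}" for k in seen if k not in expected]
    return problems
```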

Each team is required to submit a system description at the designated time (see Section 7). The final evaluation results (on the 70% evaluation subset) will be made available only after the system description report has been received by NIST and confirmed to comply with guidelines described in Section 6.4.2.

6.4.1 System Output Format

The system output file is composed of a header and a set of records, where each record contains a trial given in the trial file (see Section 4.2) and the log-likelihood ratio output by the system for that trial.

The order of the trials in the system output file must follow the same order as the trial list.

Each record is a single line containing 4 fields separated by a tab character, in the following format:
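The four fields are not reproduced above. The description implies the three trial-file fields followed by the system's LLR. Under that assumption, the sketch below writes the header and the records in trial-list order. The `LLR` column name and the six-decimal formatting are assumptions, and `score_trial` stands for a hypothetical per-trial scoring function.

```python
import csv
import io
from typing import Callable, Dict, Sequence, TextIO


def write_system_output(trials: Sequence[Dict[str, str]], trial_fields: Sequence[str],
                        score_trial: Callable[[Dict[str, str]], float], out: TextIO) -> None:
    """Write a header and one tab-separated record per trial, preserving trial-file order."""
    writer = csv.writer(out, delimiter="\t", lineterminator="\n")
    writer.writerow(list(trial_fields) + ["LLR"])  # "LLR" column name is an assumption
    for trial in trials:  # same order as the trial list
        llr = score_trial(trial)  # hypothetical scorer; it sees only this one trial
        writer.writerow([trial[f] for f in trial_fields] + [f"{llr:.6f}"])


if __name__ == "__main__":
    buffer = io.StringIO()
    demo = [{"modelid": "m1", "segmentid": "s1", "side": "a"},
            {"modelid": "m1", "segmentid": "s2", "side": "a"}]
    write_system_output(demo, ["modelid", "segmentid", "side"], lambda t: 0.25, buffer)
    print(buffer.getvalue(), end="")
```

Because score_trial receives a single trial dict, this structure also matches the rule that each trial is processed independently.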

6.4.2 System Description Format

Each team is required to submit a system description.

The system description must include the following items:

• a complete description of the system components, including front-end (e.g., speech activity detection, features, normalization) and back-end (e.g., background models, i-vector/embedding extractor, LDA/PLDA) modules, along with their configurations (i.e., filterbank configuration, dimensionality and type of the acoustic feature parameters, as well as the acoustic model and backend model configurations)

• a complete description of the data partitions used to train the various models (as mentioned above). Teams are encouraged to report how having access to the Development set (labeled and unlabeled) impacted the performance.

• performance of the submission systems (primary and secondary) on the SRE19 Development set (or a derivative/custom dev set), using the scoring software provided via the web platform (https://sre.nist.gov). Teams are encouraged to quantify the contribution of the major system components that they believe resulted in significant performance gains.

• a report of the CPU (single-threaded) and GPU execution times, as well as the amount of memory used to process a single trial (i.e., the time and memory used for creating a speaker model from enrollment data as well as processing a test segment to compute the LLR).
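The sketch below shows one way to collect these numbers for a single trial with Python's standard time and tracemalloc modules. It measures wall-clock time, process CPU time, and peak memory allocated through Python's allocator only. Memory held by native libraries that bypass that allocator is not counted, nor is GPU memory, and GPU timing would need framework-specific tools. For single-threaded CPU numbers, the process would typically be started with thread pools limited (for example, OMP_NUM_THREADS=1). The function name is hypothetical.

```python
import time
import tracemalloc
from typing import Callable, Dict, Tuple, TypeVar

R = TypeVar("R")


def profile_call(fn: Callable[[], R]) -> Tuple[R, Dict[str, float]]:
    """Run fn once and report wall-clock seconds, process CPU seconds, and peak Python heap in MiB."""
    tracemalloc.start()
    try:
        wall0, cpu0 = time.perf_counter(), time.process_time()
        result = fn()
        wall1, cpu1 = time.perf_counter(), time.process_time()
        _, peak = tracemalloc.get_traced_memory()  # (current, peak) bytes since start()
    finally:
        tracemalloc.stop()
    return result, {
        "wall_s": wall1 - wall0,
        "cpu_s": cpu1 - cpu0,
        "peak_python_mib": peak / 2**20,
    }
```

In practice, fn would wrap enrollment-model creation plus scoring of one test segment, for example a closure around a system's own (hypothetical) enroll-and-score routine.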

The system description should follow the latest IEEE ICASSP conference proceedings template.