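- Python Network Programming 05: How Django Interacts with Databases

翻滚吧挨踢男

Python python network programming

Introduction: Django provides a unified API across multiple database backends, so with Django we do not have to write SQL statements directly. Django maps a relational table to a class, and each record is an object of that class. We can therefore manipulate a relational database through object-based methods. Setting up the database: configuring the database requires editing settings.py. If the database is MySQL: [python]viewpla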

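- Python Crawler: A Practical Guide to Efficiently Fetching 1688 Product Details

数据小爬虫@

python crawler programming language

In e-commerce, data is the core resource merchants use to set strategy and optimize operations. 1688 is a leading B2B e-commerce platform in China and holds a huge volume of product information. With a Python crawler we can fetch this product detail data efficiently and use it to support business decisions. 1. Why choose a Python crawler? With its concise, readable syntax and strong library support, Python is one of the preferred languages for crawler development. A Python crawler can quickly fetch product details from 1688, including product title, price, images, description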

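- Installation Tutorial for the Python Module triton

2401_85863780

1024 Programmers' Day triton whl

Triton is an open-source library for high-performance computing, particularly suited to deep learning and scientific computing. Installing Triton from a prebuilt whl file simplifies installation, especially when compiling would run into dependency problems. The detailed steps follow. Before installing: Python environment: make sure Python is installed and its version is compatible with the whl file. pip: make sure pip, Python's package manager for installing external libraries, is installed. Download the whl file: from a reliable source, download the one suited to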

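- Installation Tutorial for the Python Module mediapipe

2401_85863780

python programming language mediapipe

Installing MediaPipe from a .whl file works much like installing any other Python library. The steps below walk you through it. 1. Confirm that Python and pip are installed. First, make sure Python and pip are installed on your system. You can check by opening a command line (CMD or PowerShell on Windows; a terminal on macOS and Linux) and running the following command: python --v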

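- [whl Files] A Roundup of whl Download Locations for Each Python Version

2401_85863780

python linux programming language

A whl file, short for wheel file, is a standard format for distributing Python packages. It is a prebuilt binary package containing the Python modules in compressed form (such as .py files and compiled .pyd files) together with their metadata, usually compressed with the Zip algorithm. Wheel files make installing Python packages simpler and more efficient, because users can quickly install a package and its dependencies without compiling from source. In addition, whl files offer good cross-platform compatibility and can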

- Switching Python Versions on Ubuntu

Tobey袁

Ubuntu shell ubuntu linux

In an Ubuntu development environment, Python 2 and Python 3 are incompatible in many ways, so we often need to switch Python versions manually. sudo update-alternatives --install /usr/bin/python python /usr/bin/python2 100 sudo update-alternatives --install /usr/bin/python python /usr/bin/python3 15

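- Using set in Python

weixin_39876645

Using set in Python

As in other languages, Python's set type is an unordered collection of unique elements (I do not recall seeing this type in the other languages I learned earlier). Its basic uses include membership testing and removing duplicate elements. Set objects also support mathematical operations such as union, intersection, difference, and symmetric difference, much like the sets we learned about in middle-school math. 1. First, look at the uniqueness property of Python sets; for deduplication this is very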
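The operations named in this excerpt can be sketched briefly. The variable names and sample values are illustrative assumptions, not taken from the original post.

```python
# Two small sets (illustrative values, not from the original post).
a = {1, 2, 3, 4}
b = {3, 4, 5}

union = a | b                 # same as a.union(b)
intersection = a & b          # same as a.intersection(b)
difference = a - b            # same as a.difference(b)
symmetric = a ^ b             # same as a.symmetric_difference(b)

# Deduplication: building a set drops repeated elements.
# Sets are unordered, so sort if a stable order is needed.
deduped = sorted(set([11, 11, 12, 13, 14, 14, 15]))

print(union, intersection, difference, symmetric, deduped)
```

The operator forms require both operands to be sets, while the method forms such as `a.union(...)` accept any iterable.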

- A Short Summary of Python set Usage

Super_Meredith

pandas set

1. Creating a set with set() >>> set('python') {'o','p','h','n','t','y'} >>> set(['python']) {'python'} # deduplication >>> list1 = [11,11,12,13,14,14,15] >>> set(list1) {11,12,13,14,15} 2. Adding with add(), update() # add(): adds the given element to the set as a single item >>> set1 = set('p

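- Python Set Concepts and set Usage

shuwenting

python basics

Using set in Python: as in other languages, Python's set type is an unordered collection of unique elements (I do not recall seeing this type in the other languages I learned earlier). Its basic uses include membership testing and removing duplicate elements. Set objects also support mathematical operations such as union, intersection, difference, and symmetric difference, much like the sets we learned about in middle-school math. 1. First, look at the uniqueness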

- python set operation

screaming

Python Set

Set can be converted to list by list(set). add(elem): Add element elem to the set. remove(elem): Remove element elem from the set. Raises KeyError if elem is not contained in the set. discard(elem): Remove element elem from the set if it is pres

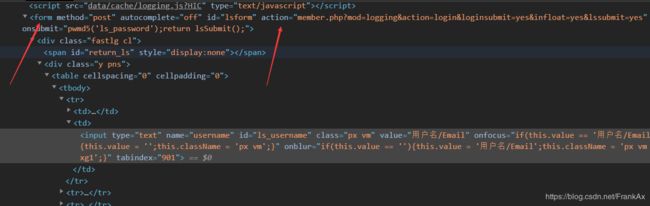

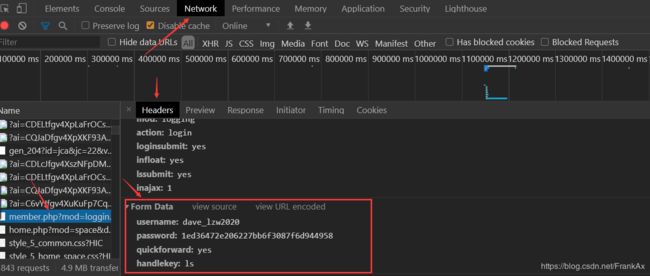

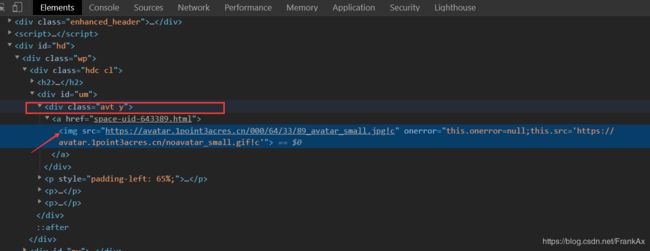

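- Python Web Development Log Day 12: Django Part 6, User Login

Code_流苏

#---Python Web Development---# Django Project Exploration Lab python frontend django

Quote: "Sunrise in the east, rain in the west; you say there is no sunshine, yet there is." (Liu Yuxi, "Bamboo Branch Song") Author: Code_流苏 (CSDN) (a coder who loves classical poetry and programming) Contents: 1. Login page 2. Username and password validation 3. Cookie and session configuration (1) cookies and sessions (2) configuration 4. Login verification 5. Logging out 6. Image CAPTCHA (1) the Pillow library (2) implementing the image CAPTCHA 7. Addendum: purpose and extensions of image CAPTCHAs (1) purpose (2) other CAPTCHA types 8. CAPTCHA validation. In the previous post we implemented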

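- How to Use pip on Ubuntu to Create Virtual Environments for Different Python Versions

挪威的深林

[Linux] commands linux troubleshooting python tutorial pip virtualenv python

1. Preface: Lately I have been running into a real headache. When running different projects on Ubuntu, or projects downloaded from GitHub, each one needs a different Python version and different package versions. Creating a separate virtual environment per project is therefore a convenient approach. The tools I currently know for this are conda and pip. Creating a virtual environment with conda requires installing Anaconda or Miniconda. Only after installing Anaconda can you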

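- 代码随想录 Day 3

mvufi

python programming language

203. Remove Linked List Elements. Dummy head node: makes both insertion and deletion easy. Python does not need new; just write = ListNode(...) # Definition for singly-linked list. # class ListNode: # def __init__(self, val=0, next=None): # self.val = val # self.next = next class Solution: def removeElements(self, h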

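- [LeetCode, Python Edition] Hot 100 (2/100): 128. Longest Consecutive Sequence

古希腊掌管学习的神

LeetCode-Python leetcode python algorithms

Problem: Given an unsorted integer array nums, find the length of the longest sequence of consecutive numbers (the elements need not be adjacent in the original array). Design and implement an algorithm that solves this in O(n) time. Example 1: Input: nums = [100,4,200,1,3,2] Output: 4 Explanation: the longest consecutive sequence is [1,2,3,4], and its length is 4. Example 2: Input: nums = [0,3,7,2,5,8,4,6,0,1] Output: 9. Problem link. Approach: because the problem requires O(n) time complexity,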
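The excerpt breaks off before the author's approach, so the following is only a sketch of one common O(n) technique, a hash set that starts counting only from sequence beginnings. It is not necessarily the post's solution, and the function name `longest_consecutive` is an assumption.

```python
def longest_consecutive(nums):
    """Return the length of the longest run of consecutive integers in nums."""
    num_set = set(nums)          # O(1) average membership tests
    best = 0
    for n in num_set:
        # Only start counting at the beginning of a run, so each
        # number is visited a constant number of times overall.
        if n - 1 not in num_set:
            length = 1
            while n + length in num_set:
                length += 1
            best = max(best, length)
    return best


print(longest_consecutive([100, 4, 200, 1, 3, 2]))
print(longest_consecutive([0, 3, 7, 2, 5, 8, 4, 6, 0, 1]))
```

Skipping every number that has a predecessor in the set is what keeps the total work linear rather than quadratic.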

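- [LeetCode, Python Edition] Hot 100 (1/100): 49. Group Anagrams

古希腊掌管学习的神

LeetCode-Python leetcode python algorithms

Problem: Given an array of strings, group the anagrams together. The result list may be returned in any order. An anagram is a new word formed by rearranging all the letters of a source word. Example 1: Input: strs = ["eat","tea","tan","ate","nat","bat"] Output: [["bat"],["nat","tan"],["ate","eat","tea"]] Example 2: Input: strs = [""] Output: [[""]] Example 3: Input: strs=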
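A minimal sketch of one standard way to solve this problem, keying each word by its sorted letters. The excerpt does not show the author's own code, so both the technique choice and the name `group_anagrams` are assumptions.

```python
from collections import defaultdict


def group_anagrams(strs):
    """Group words that are anagrams of each other."""
    groups = defaultdict(list)
    for s in strs:
        # Anagrams share the same multiset of letters, so the sorted
        # letters form a canonical key for the group.
        key = "".join(sorted(s))
        groups[key].append(s)
    return list(groups.values())


print(group_anagrams(["eat", "tea", "tan", "ate", "nat", "bat"]))
```

The order of the groups follows first appearance in the input, which is acceptable because the problem allows any order.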

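- How to Switch Python Versions on Ubuntu

lkasi

Ubuntu ubuntu linux operations

1. List all Python versions: in a terminal, enter ls /usr/bin/python* and view the result. 2. Switch versions: in a terminal, enter sudo update-alternatives --config python and, in the result, enter the number of the desired selection to switch the Python version.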

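- Upgrading the Python Version

HiSiri~

python python programming language

Upgrading Python. Background: after upgrading Python from 3.6 to 3.10 on a CentOS machine, pip installs ran into some problems [fixing the "pip is configured with locations that require TLS/SSL" problem]. Procedure. Download: find a suitable version on the official site and download it: https://www.python.org/ftp/python/ wget https://www.python.org/ftp/python/3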

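- Python Collections: A Detailed Guide to Using set()

lmseo5hy

python training python collections

A set is an unordered collection of unique elements. It comes in two kinds, mutable (set()) and immutable (frozenset). Sets support creation, adding, removing, intersection, union, and difference, which makes them very practical. Specific usage follows. 1. Creating a set: in older Python the set class lived in the sets module; newer Python versions can create sets directly without importing sets. Usage: 1. set('old') 2. set(['o','l','d']) 2.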

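- [LeetCode, Python Edition] Dynamic Programming: A Summary of the 0-1 Knapsack and Unbounded Knapsack Problems

古希腊掌管学习的神

LeetCode-Python leetcode python dynamic programming

0-1 knapsack: there are n items; item i has volume w_i and value v_i, and each item may be chosen at most once. Find the maximum total value when the total volume does not exceed capacity. State transition: dfs(i, c) = max(dfs(i-1, c), dfs(i-1, c-w[i]) + v[i])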
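The state transition above can be sketched as a memoized recursion. The function name `zero_one_knapsack`, the base case, and the capacity guard are assumptions added to make the recurrence runnable; the excerpt shows only the transition itself.

```python
from functools import lru_cache


def zero_one_knapsack(capacity, w, v):
    """Max total value with total volume <= capacity, each item used at most once."""

    @lru_cache(maxsize=None)
    def dfs(i, c):
        if i < 0:                      # no items left to consider
            return 0
        if c < w[i]:                   # item i does not fit: must skip it
            return dfs(i - 1, c)
        # Either skip item i, or take it and pay its volume.
        return max(dfs(i - 1, c), dfs(i - 1, c - w[i]) + v[i])

    return dfs(len(w) - 1, capacity)


print(zero_one_knapsack(5, [1, 2, 3], [6, 10, 12]))
```

Memoization bounds the work at O(n * capacity) states, each computed once.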

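- ipykernel-4.10.0-py2-none-any.whl: File Walkthrough and Installation Guide

嗹国学长

Summary: PyPI is Python's package repository, and this article walks through one specific Python package, version 4.10.0 of ipykernel. ipykernel is a core component of the Jupyter project, used to create and run interactive Python kernels. This guide covers its features, characteristics, and installation, and stresses its importance for cross-language support, asynchronous I/O handling, debugging, and interactive communication. Users can install this version with pip to support, in a Python 2 environment, J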

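- [Fixing ERROR] Running an ipynb File in VScode Reports That ipykernel Is Missing

又困又爱睡

vscode jupyter

[Fixing ERROR] Running an ipynb file in VScode reports that ipykernel is missing. 1. Add the Jupyter extension in VScode. 2. For now, I recommend not installing a Python version above 3.9. 3. Install the jupyter package in your environment, which also pulls in the ipykernel package. 4. If you are unlucky enough to find that pyzmq is already installed at version 23.3.1 or later, resulting in "Failed to start the Kernel". 5. Closing remarks. Preface: recently, while helping people set up ana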

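- Nested Dictionaries in Python

计算机小白的爬坑之路

python basics python

Nesting and printing dictionaries. Exercise (cities): create a dictionary named cities that uses three city names as keys. For each city, create a dictionary containing the country the city belongs to, its approximate population, and one fact about the city. Each city's dictionary should contain keys such as country, population, and fact. Print each city's name along with all the information about it. The code is as follows: cities = {'北京': {'country': 'China', 'populatio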
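The post's own code is cut off, so here is a minimal sketch of the exercise as described. The city entries, their rough population figures, and the helper name `describe` are illustrative assumptions, not the author's data.

```python
# Illustrative data only: the populations are rough approximations.
cities = {
    "Beijing": {"country": "China", "population": "about 21 million",
                "fact": "capital of China"},
    "Tokyo": {"country": "Japan", "population": "about 14 million",
              "fact": "capital of Japan"},
    "Paris": {"country": "France", "population": "about 2 million",
              "fact": "capital of France"},
}


def describe(cities):
    """Return one formatted line per city from a dict of dicts."""
    lines = []
    for name, info in cities.items():     # outer dict: city name -> info dict
        details = ", ".join(f"{key}: {value}" for key, value in info.items())
        lines.append(f"{name} ({details})")
    return lines


for line in describe(cities):
    print(line)
```

Iterating with `.items()` at both levels avoids repeated key lookups such as `cities[name]['country']`.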

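- w224 Design and Implementation of an Epidemic Management System

卓怡学长

computer science graduation project java spring springboot database intellij-idea

About the author: many years of front-line development experience and an original-content team, sharing technical code to help students learn and complete their own website projects independently. See the contact details at the end of the article to get the code, and please state your purpose. Included free: an Excel file of 600 graduation project topics to help students pick one, and a proposal report template to help with writing it. The author's full code catalog to choose from: "Springboot website projects" (400 sets), "ssm website projects" (800 sets), "mini-program projects" (300 sets), "App projects" (500 sets), "Python website projects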

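- Python: Nested Dictionaries

lcqin111

python

You can nest dictionaries in a list, lists in a dictionary, and even dictionaries in a dictionary. alien_0 = {'color': 'green', 'points': 5} alien_1 = {'color': 'yellow', 'points': 10} alien_2 = {'color': 'red', 'points': 15} aliens = [alien_0, alien_1, alien_2] for alien in aliens: print(alien) This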

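- Adding a New Kernel with Conda

_TFboy

conda python programming language

Official documentation: https://ipython.readthedocs.io/en/stable/install/kernel_install.html To add a new kernel to Conda, follow these steps. Make sure you have activated the Conda environment you want to add the kernel to. Run the following command to activate the environment: conda activate your_environment_name Replace "your_environment_n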

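- Exploring IPykernel: The Core Engine of Interactive Computing in Python

解然嫚Keegan

Exploring IPykernel: the core engine of interactive computing in Python. Project URL: https://gitcode.com/gh_mirrors/ip/ipykernel IPykernel is an open-source project. It is the driving force behind IPython Notebook and Jupyter Notebook and makes interactive computing in a Python environment possible. This article takes a close look at IPykernel's technical features, applications, and advantages, so that you can use it more effectively to improve your development productivity. Project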

- [Master Python in 20 Days] Day 20: Building an HTTP Server by Hand

菜鸟进阶站

Python backend development programming python http server

Demo code: import re import socket from multiprocessing import Process class WSGIServer(): def __init__(self, server, port, root): self.server = server self.port = port self.root = root self.server_s

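- [Master Python in 20 Days] Day 18: Processes

菜鸟进阶站

Python programming backend development python frontend javascript

Processes. Program: for example, xxx.py is a program, which is static. Process: once a program is running, the code together with the resources it uses is called a process. It is the basic unit by which the operating system allocates resources. Multitasking can be achieved not only with threads but also with processes. Process states: in practice the number of tasks is often larger than the number of CPU cores, so some tasks are executing while others wait for the CPU, which gives rise to different states. Ready: all conditions to run are satisfied, and the process is waiting for the CPU. Running: the CPU is executing it. Waiting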

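- [Master Python in 20 Days] Day 17: Threads

菜鸟进阶站

Python programming backend development python frontend java

1. Thread-safety issues 1.1 Threads accessing a global variable import threading g_num = 0 def test(n): global g_num for x in range(n): g_num += x g_num -= x print(g_num) if __name__ == '__main__': t1 = threading.Thread(target=test, args=(10,)) t2 = threading.Thread(target=t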
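The usual remedy for the shared-variable problem this excerpt introduces is to guard each update with a lock. A minimal sketch follows. The function name `run_counter` and its parameters are assumptions, and the excerpt is cut off before showing how the original post handles it.

```python
import threading


def run_counter(n_threads=2, n=10_000):
    """Increment a shared counter from several threads, guarded by a lock."""
    total = 0
    lock = threading.Lock()

    def work():
        nonlocal total
        for _ in range(n):
            # "total += 1" is a read-modify-write sequence; holding the
            # lock keeps another thread from interleaving in the middle.
            with lock:
                total += 1

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()                      # wait for every worker to finish
    return total


print(run_counter(2, 10_000))
```

Without the lock, updates from different threads can be lost. With it, the result always equals `n_threads * n`.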

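- [Master Python in 20 Days] Day 08: Higher-Order Functions

菜鸟进阶站

Python programming backend development python programming language numpy

1. Recursive functions. What is a recursive function? If a function, inside its own body, calls itself rather than some other function, it is a recursive function. What recursive functions are for: as an example, let us compute the factorial n! = 1*2*3*...*n. Solution 1: use a loop. def cal(num): result, i = 1, 1 while i The data type of a function is function, which can be treated as a kind of complex data type. Since it is a data type like any other, we can treat it like a number or a string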
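The excerpt's loop-based code is damaged in extraction, so the following is a sketch of both approaches to n! rather than a reconstruction of the original. The function names are assumptions.

```python
def factorial_loop(num):
    """Compute num! with a loop: 1 * 2 * ... * num."""
    result = 1
    for i in range(1, num + 1):
        result *= i
    return result


def factorial_recursive(num):
    """Compute num! recursively: n! = n * (n - 1)!, with 0! = 1! = 1."""
    if num <= 1:                      # base case stops the recursion
        return 1
    return num * factorial_recursive(num - 1)


print(factorial_loop(5), factorial_recursive(5))
```

The recursive version mirrors the mathematical definition, while the loop avoids Python's recursion depth limit for large inputs.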

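- Spring 4.1 New Features: Overview

jinnianshilongnian

spring 4.1

Contents

Spring 4.1 New Features: Overview

Spring 4.1 New Features: Spring Core and Others

Spring 4.1 New Features: Spring Cache Framework Enhancements

Spring 4.1 New Features: Exception Handling for Asynchronous Calls and the Event Mechanism

Spring 4.1 New Features: Script Initialization for Database Integration Tests

Spring 4.1 New Features: Spring MVC Enhancements

Spring 4.1 New Features: Page Automation Testing Framework Spring MVC T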

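- Schema and Data Type Optimization

annan211

data structures mysql

Our shop's current database design is a complete mess: tables piled on tables, painful to look at, and a thoughtless architect who will not listen.

From the very start of database design you should think carefully about which queries are likely, and whether there will be more complex ones, rather than addressing only

the most superficial business requirements. Doing so can multiply your database performance, though of course a bad architect will never think about the problem this way.

Choosing optimized data types

1. Smaller is usually better

Smaller data types are usually faster because they take up less disk space, memory, and CPU cache,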

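- Lesson 1: An Overview of HTML

chenke

html Web css

Lesson 1: An Overview of HTML

1. What is HTML

HTML is short for HyperText Markup Language. It defines its own syntax rules for expressing things richer than plain "text", such as images, tables, and links. Browser software (IE, Firefox, and so on) understands HTML syntax and can be used to view HTML documents. The vast majority of web pages on the Internet today are written in HTML.

Open Notepad and enter the following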

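- Changing Some Default Behaviors in MyEclipse

Array_06

eclipse

To be continued----------------------

1. Change the shortcut triggers to suit yourself: Window --> Preferences --> Java --> Editor --> Content Assist -->

Editing the "." to the right of "Activation triggers for java" changes the commonly used triggers

Select Text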

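- A Summary of Nearly a Month of Interviews

cugfy

interviews

This article is a summary written while learning. Reposting is welcome, but please credit the source: http://blog.csdn.net/pistolove/article/details/46753275

Preface

I am planning to change jobs and have interviewed at quite a few companies over the past month. Below I share some of my interview experience and reflections. Campus recruiting is also about to begin, so I hope this gives current students something to refer to, and that everyone finds a job they are happy with.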

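- A Small HTML5 Maze Game

357029540

html5

I copied a small maze game from the book 《HTML5游戏开发》 (HTML5 Game Development). It is rather nice: you can draw and write with it. I am posting the copied code here to share, and anyone who likes it is welcome to play with it!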

<html>

<head>

<title>创建运行迷宫</title>

<script type="text/javascript"

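- Upload Your Data to GitHub in 10 Steps

张亚雄

git

The official tutorials and the ones in other blogs are written for people who already understand the subject. Complete beginners like us can only practice this way. Let us skip anything deep for now and just use these 10 clean, simple steps. Once they feel natural, we can move on to other methods.

Procedure: (Step 1: list the files in the current directory) ls

(Step 2: change into a subdirectory) cd + space + directory

(Step 3: continue) ls

(Step 4: tab-complete into the subdirectory) cd + first letter of the directory + Tab (keep typing first letters and pressing Tab until you reach the folder that holds your files, then stop pressing Tab)

(List the files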

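- A Complete List of Common MongoDB Commands

adminjun

mongodb commands

After MongoDB has started successfully, open another command-line window and enter mongo to start working with the database. Enter help to see the basic commands. MongoDB has no explicit command for creating a database, but something similar achieves it. For example, to create a database named "myTest", first run use myTest and then perform some operation (such as db.createCollection('user')). This creates a database named "myTest".

1.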

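- Calling a jar from a bat File and Passing Multiple Arguments

aijuans

The main program below was written in Eclipse:

1. Receive the arguments passed by the bat file in the main function (String[] args)

For example: String ip = args[0]; String user = args[1];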

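- Active and Passive References to Classes in Java

ayaoxinchao

java active reference class reference passive reference class initialization

In Java code, some classes look as though they have been initialized when in fact they have not. For example, when you define an array of some type with a given length, it looks as though every element has been initialized, but in fact none has. For class initialization, the virtual machine specification strictly requires that it be triggered only by an active reference to the class. All other kinds of reference are called passive references and do not trigger class initialization. The specification strictly defines exactly four situations that count as active references, in which the class must be initialized immediately. The four situations are: 1. Upon encountering ne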

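- Exporting a Database Reports "outfile disabled"

BigBird2012

mysql

In the Windows console, I logged in to mysql and tried to back up a database:

mysql>mysqldump -u root -p test test > D:\test.sql

The mysqldump command has the following format: mysqldump -u root -p *** DBNAME > E:\\test.sql.

Note: do not enter the mysql console before running this command, otherwise it will report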

- && and || in JavaScript

bijian1013

JavaScript && ||

Prepare two objects for the discussion below

var alice = {

name: "alice",

toString: function () {

return this.name;

}

}

var smith = {

name: "smith",

- [Zookeeper Study Notes 4] Session Re-establishment in the Zookeeper Client Library

bit1129

zookeeper

To illustrate the problem, let us first look at a simple code example:

package com.tom.zookeeper.book;

import com.tom.Host;

import org.apache.zookeeper.WatchedEvent;

import org.apache.zookeeper.ZooKeeper;

import org.apache.zookeeper.Wat

- [Scala 11] Scala Core 5: case Pattern Matching

bit1129

scala

package spark.examples.scala.grammars.caseclasses

object CaseClass_Test00 {

def simpleMatch(arg: Any) = arg match {

case v: Int => "This is an Int"

case v: (Int, String)

- Some Operations Interview Questions

yuxianhua

linux

1. Mounting a Windows shared folder on Linux

mount -t cifs //1.1.1.254/ok /var/tmp/share/ -o username=administrator,password=yourpass

or

mount -t cifs -o username=xxx,password=xxxx //1.1.1.1/a /win

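- The Java lang Package: Boolean

BrokenDreams

boolean

The Boolean class is the wrapper class for Java's primitive type boolean. The class is fairly simple, so let us go straight to the source code.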

public final class Boolean implements java.io.Serializable,

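- Reading 《研磨设计模式》: Code Notes on the Command Pattern

bylijinnan

java design patterns

Note: this article exists only for my own reference and understanding. For detailed analysis and the source code, please visit the original author's blog at http://chjavach.iteye.com/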

import java.util.ArrayList;

import java.util.Collection;

import java.util.List;

/**

* In the book "Design Patterns", the GoF state the intent of the Command pattern: "Encapsulate a request

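- Notes on GPU Programming in MATLAB

cherishLC

matlab

Without further ado, here is the code

gpuDevice % list the GPUs in the system; DeviceSupported gives the number of GPUs that MATLAB supports.

g=gpuDevice(1); % clears all data on GPU 1 and makes GPU 1 the current GPU

reset(g) % also clears the data on the GPU.

a=1;

a=gpuArray(a); % move a from the CPU to the GPU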

onGP

- SVN Installation Procedure

crabdave

SVN

SVN Installation Procedure

subversion-1.6.12

./configure --prefix=/usr/local/subversion --with-apxs=/usr/local/apache2/bin/apxs --with-apr=/usr/local/apr --with-apr-util=/usr/local/apr --with-openssl=/

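- SQL Row/Column Conversion

daizj

sql row/column conversion rows to columns columns to rows

Rows are converted to columns using case when

Columns are converted to rows using union all

A concrete example follows:

Suppose there is a student grades table (tb) as follows:

Name Subject Result

张三 Chinese 74

张三 Math 83

张三 Physics 93

李四 Chinese 74

李四 Math 84

李四 Physics 94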

*/

/*

We want to turn it into

Name

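- MySQL: Master-Slave Configuration

dcj3sjt126com

mysql

MySQL master-slave configuration on Linux. Note: the binary log (binlog) format may differ between MySQL versions, so the best pairing is a Master whose MySQL version is the same as or lower than the Slave's. The Master's version must never be higher than the Slave's. (Versions are backward compatible.)

mysql1 : 192.168.100.1 //master mysq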

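- Updating the Yii Model Class After Adding a New Database Field

dcj3sjt126com

Model

rules:

array('new_field','safe','on'=>'search')

1、array('new_field', 'safe')// add this if the user is going to enter the value,

2、array('new_field', 'numerical'),// if it is a number

3、array('new_field', 'length', 'max'=>100),// if it is text

At least one of rules 1, 2, and 3 must be added, as appropriate, before the new field will be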

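- Fixing Garbled Chinese Text in Sublime Text 3

dyy_gusi

Sublime Text

Fixing garbled Chinese text in Sublime Text 3

Cause: the plugin for converting to UTF-8 is missing

Goal: install the ConvertToUTF8 plugin package

Step 1: install the plugin that can install other plugins automatically. Search Baidu for "Codecs33" and follow the steps to obtain the following snippet: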

import urllib.request,os,hashlib; h = 'eb2297e1a458f27d836c04bb0cbaf282' + 'd0e7a30980927

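- Understanding the Concepts: CGI, FastCGI, PHP-CGI, and PHP-FPM

geeksun

PHP

CGI

CGI stands for Common Gateway Interface. It is a means by which an HTTP server "talks" to programs on your machine or on other machines, and those programs must run on the web server.

CGI programs can be written in any language that has standard input, standard output, and environment variables, such as php, perl, or tcl.

FastCGI

FastCGI is like a long-lived CGI. It can keep running indefinitely, and once activated, it does not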

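- Fixing the Git push Error "error: failed to push some refs to "

hongtoushizi

git

Git push reports "error: failed to push some refs to ".

Cause: the code version in the remote repository is out of sync with the local one, which creates a conflict.

After my first git pull --rebase, someone else pushed more code to the remote just as I was about to push, and that produced this error.

Solution: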

1: git pull

2:

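- Chapter 4: Lua Module Development

jinnianshilongnian

nginx lua

In real development you cannot put all the code into one all-encompassing Lua file, so development has to be split into modules. Modularization is also key to high-performance Lua applications. After a module is first imported with require, all Nginx processes globally share the module's data and code, and each Worker process gets a copy of the module when it needs one (Copy-On-Write). In other words, a module can be regarded as shared per Worker process rather than per Nginx Server. Also note that earlier we used init_by_lua to ini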

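- java.lang.reflect.Proxy

liyonghui160com

1. Introduction

Proxy provides static methods for creating dynamic proxy classes and instances

(1) Properties of dynamic proxy classes

Proxy classes are public, final, and not abstract

The unqualified name of a proxy class is unspecified. However, the space of class names that begin with the string "$Proxy" should be reserved for proxy classes

A proxy class extends java.lang.reflect.Proxy

A proxy class implements exactly the interfaces specified at its creation, in the same order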

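- Using getResourceAsStream in Java

pda158

java

1. Java has the following kinds of getResourceAsStream: 1. Class.getResourceAsStream(String path): when path does not start with '/', the resource is looked up in the package of this class by default; when it starts with '/', the resource is fetched from the ClassPath root. This method only builds an absolute path from path, and the resource is ultimately obtained by the ClassLoader. 2. Class.getClassLoader.get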

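- Official Download Location for the Spring jars (Without Maven)

sinnk

spring

Since the official Spring website was redesigned, it recommends downloading through Maven or Gradle. For projects that use neither Maven nor Gradle, downloading is a real hassle. Below is the official direct download path for the Spring Framework jars: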

http://repo.springsource.org/libs-release-local/org/springframework/spring/

s

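- Oracle Study Notes (7): Developing PL/SQL Subprograms and Packages

vipbooks

oracle sql programming

Haha, I went home for the Qingming Festival holiday and it was wonderful. Being home feels great! Now my business travel has started again and I have not been here for quite a while, so today I am continuing with Oracle!

These are my study notes for chapter 7. Having finished dynamic SQL in chapter 6, it is time to learn how to use subprograms and packages. I hope everyone will give me plenty of support!

The tool used for programming is PLSQL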