运行 MapReduce 样例

一 hadoop样例代码

1 样例程序路径

/opt/hadoop-2.7.4/share/hadoop/mapreduce

2 样例程序包

hadoop-mapreduce-examples-2.7.4.jar包含着数个可以直接运行的样例程序

3 如何查看样例程序

./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar

4 举例

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar

An example program must be given as the first argument.

Valid program names are:

aggregatewordcount: An Aggregate based map/reduce program that counts the words in the input files.

aggregatewordhist: An Aggregate based map/reduce program that computes the histogram of the words in the input files.

bbp: A map/reduce program that uses Bailey-Borwein-Plouffe to compute exact digits of Pi.

dbcount: An example job that count the pageview counts from a database.

distbbp: A map/reduce program that uses a BBP-type formula to compute exact bits of Pi.

grep: A map/reduce program that counts the matches of a regex in the input.

join: A job that effects a join over sorted, equally partitioned datasets

multifilewc: A job that counts words from several files.

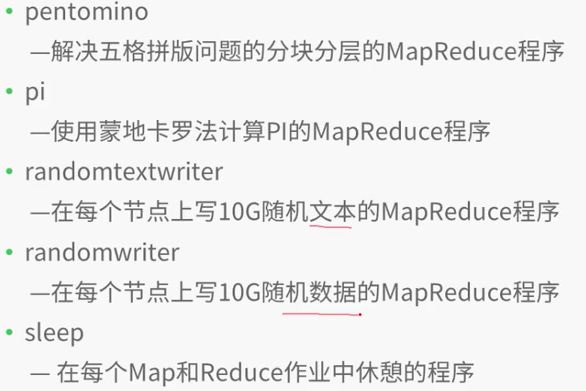

pentomino: A map/reduce tile laying program to find solutions to pentomino problems.

pi: A map/reduce program that estimates Pi using a quasi-Monte Carlo method.

randomtextwriter: A map/reduce program that writes 10GB of random textual data per node.

randomwriter: A map/reduce program that writes 10GB of random data per node.

secondarysort: An example defining a secondary sort to the reduce.

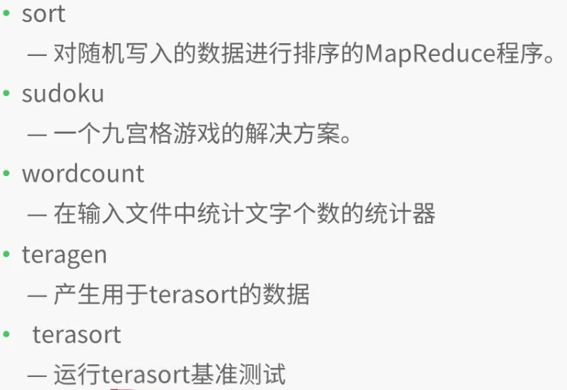

sort: A map/reduce program that sorts the data written by the random writer.

sudoku: A sudoku solver.

teragen: Generate data for the terasort

terasort: Run the terasort

teravalidate: Checking results of terasort

wordcount: A map/reduce program that counts the words in the input files.

wordmean: A map/reduce program that counts the average length of the words in the input files.

wordmedian: A map/reduce program that counts the median length of the words in the input files.

wordstandarddeviation: A map/reduce program that counts the standard deviation of the length of the words in the input files.

二 样例程序简介

三 查看样例帮助

./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar wordcount

./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar pi

举例

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar wordcount

Usage: wordcount [...]

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar pi

Usage: org.apache.hadoop.examples.QuasiMonteCarlo

Generic options supported are

-conf specify an application configuration file

-D use value for given property

-fs specify a namenode

-jt specify a ResourceManager

-files specify comma separated files to be copied to the map reduce cluster

-libjars specify comma separated jar files to include in the classpath.

-archives specify comma separated archives to be unarchived on the compute machines.

The general command line syntax is

bin/hadoop command [genericOptions] [commandOptions]

四 运行wordcount样例

[root@master hadoop-2.7.4]# jps

4912 NameNode

9265 NodeManager

9155 ResourceManager

9561 Jps

5195 SecondaryNameNode

5038 DataNode

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar wordcount /input /output2

17/12/17 16:28:33 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

17/12/17 16:28:35 INFO input.FileInputFormat: Total input paths to process : 1

17/12/17 16:28:35 INFO mapreduce.JobSubmitter: number of splits:1

17/12/17 16:28:35 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1513499297109_0001

17/12/17 16:28:36 INFO impl.YarnClientImpl: Submitted application application_1513499297109_0001

17/12/17 16:28:37 INFO mapreduce.Job: The url to track the job: http://centos:8088/proxy/application_1513499297109_0001/

17/12/17 16:28:37 INFO mapreduce.Job: Running job: job_1513499297109_0001

17/12/17 16:29:06 INFO mapreduce.Job: Job job_1513499297109_0001 running in uber mode : false

17/12/17 16:29:06 INFO mapreduce.Job: map 0% reduce 0%

17/12/17 16:29:25 INFO mapreduce.Job: map 100% reduce 0%

17/12/17 16:29:40 INFO mapreduce.Job: map 100% reduce 100%

17/12/17 16:29:41 INFO mapreduce.Job: Job job_1513499297109_0001 completed successfully

17/12/17 16:29:42 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=339

FILE: Number of bytes written=242217

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=267

HDFS: Number of bytes written=217

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=16910

Total time spent by all reduces in occupied slots (ms)=9673

Total time spent by all map tasks (ms)=16910

Total time spent by all reduce tasks (ms)=9673

Total vcore-milliseconds taken by all map tasks=16910

Total vcore-milliseconds taken by all reduce tasks=9673

Total megabyte-milliseconds taken by all map tasks=17315840

Total megabyte-milliseconds taken by all reduce tasks=9905152

Map-Reduce Framework

Map input records=4

Map output records=31

Map output bytes=295

Map output materialized bytes=339

Input split bytes=95

Combine input records=31

Combine output records=29

Reduce input groups=29

Reduce shuffle bytes=339

Reduce input records=29

Reduce output records=29

Spilled Records=58

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=166

CPU time spent (ms)=1380

Physical memory (bytes) snapshot=279044096

Virtual memory (bytes) snapshot=4160716800

Total committed heap usage (bytes)=138969088

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=172

File Output Format Counters

Bytes Written=217

[root@master hadoop-2.7.4]# ./bin/hdfs dfs -ls /output2/

Found 2 items

-rw-r--r-- 1 root supergroup 0 2017-12-17 16:29 /output2/_SUCCESS

-rw-r--r-- 1 root supergroup 217 2017-12-17 16:29 /output2/part-r-00000

[root@master hadoop-2.7.4]# ./bin/hdfs dfs -cat /output2/part-r-00000

78 1

ai 1

daokc 1

dfksdhlsd 1

dkhgf 1

docke 1

docker 1

erhejd 1

fdjk 1

fdskre 1

fjdk 1

fjdks 1

fjksl 1

fsd 1

go 1

haddop 1

hello 3

hi 1

hki 1

jfdk 1

scalw 1

sd 1

sdkf 1

sdkfj 1

sdl 1

sstem 1

woekd 1

yfdskt 1

yuihej 1

五 使用Web GUI监控实例

http://192.168.0.102:8088

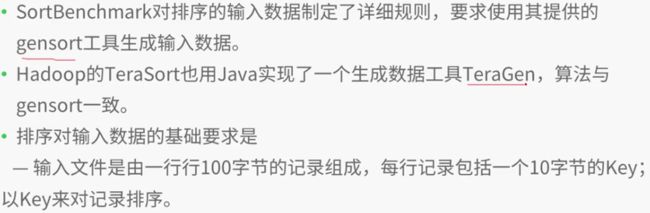

六 关于TearSort

七 TearSort的原理

八 生成数据TearGen

简介:

./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar teragen

注意:teragen后的数值单位是行数,因为每行100个字节,所以如果要产生1T的数据,则这个值是1T/100=10000000000(10个0)

举例:

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar teragen

teragen

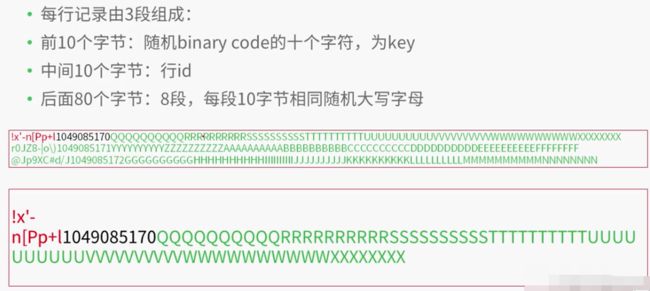

九 生成数据的格式

举例:

[root@master hadoop-2.7.4]# ./bin/hdfs dfs -ls /teragen

Found 3 items

-rw-r--r-- 1 root supergroup 0 2017-12-17 16:37 /teragen/_SUCCESS

-rw-r--r-- 1 root supergroup 500000 2017-12-17 16:37 /teragen/part-m-00000

-rw-r--r-- 1 root supergroup 500000 2017-12-17 16:37 /teragen/part-m-00001

十 运行TearSort

简介:

./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar terasort

启动m个mapper(取决于数据文件个数)和r个reduce(取决于设置项:mapred.reduce.tasks)

举例:

[root@centos hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar terasort /teragen /terasort

17/12/17 16:46:24 INFO terasort.TeraSort: starting

17/12/17 16:46:25 INFO input.FileInputFormat: Total input paths to process : 2

Spent 135ms computing base-splits.

Spent 3ms computing TeraScheduler splits.

Computing input splits took 139ms

Sampling 2 splits of 2

Making 1 from 10000 sampled records

Computing parititions took 384ms

Spent 530ms computing partitions.

17/12/17 16:46:26 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

17/12/17 16:46:27 INFO mapreduce.JobSubmitter: number of splits:2

17/12/17 16:46:27 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1513499297109_0003

17/12/17 16:46:28 INFO impl.YarnClientImpl: Submitted application application_1513499297109_0003

17/12/17 16:46:28 INFO mapreduce.Job: The url to track the job: http://centos:8088/proxy/application_1513499297109_0003/

17/12/17 16:46:28 INFO mapreduce.Job: Running job: job_1513499297109_0003

17/12/17 16:46:38 INFO mapreduce.Job: Job job_1513499297109_0003 running in uber mode : false

17/12/17 16:46:38 INFO mapreduce.Job: map 0% reduce 0%

17/12/17 16:47:19 INFO mapreduce.Job: map 100% reduce 0%

17/12/17 16:47:41 INFO mapreduce.Job: map 100% reduce 100%

17/12/17 16:47:44 INFO mapreduce.Job: Job job_1513499297109_0003 completed successfully

17/12/17 16:47:45 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=1040006

FILE: Number of bytes written=2445488

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1000208

HDFS: Number of bytes written=1000000

HDFS: Number of read operations=9

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=2

Launched reduce tasks=1

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=87622

Total time spent by all reduces in occupied slots (ms)=12795

Total time spent by all map tasks (ms)=87622

Total time spent by all reduce tasks (ms)=12795

Total vcore-milliseconds taken by all map tasks=87622

Total vcore-milliseconds taken by all reduce tasks=12795

Total megabyte-milliseconds taken by all map tasks=89724928

Total megabyte-milliseconds taken by all reduce tasks=13102080

Map-Reduce Framework

Map input records=10000

Map output records=10000

Map output bytes=1020000

Map output materialized bytes=1040012

Input split bytes=208

Combine input records=0

Combine output records=0

Reduce input groups=10000

Reduce shuffle bytes=1040012

Reduce input records=10000

Reduce output records=10000

Spilled Records=20000

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=3246

CPU time spent (ms)=3580

Physical memory (bytes) snapshot=400408576

Virtual memory (bytes) snapshot=6236995584

Total committed heap usage (bytes)=262987776

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1000000

File Output Format Counters

Bytes Written=1000000

17/12/17 16:47:45 INFO terasort.TeraSort: done

[root@centos hadoop-2.7.4]# ./bin/hdfs dfs -ls /terasort

Found 3 items

-rw-r--r-- 1 root supergroup 0 2017-12-17 16:47 /terasort/_SUCCESS

-rw-r--r-- 10 root supergroup 0 2017-12-17 16:46 /terasort/_partition.lst

-rw-r--r-- 1 root supergroup 1000000 2017-12-17 16:47 /terasort/part-r-00000

十一 结果校验

简介:

TearSort还自带一个校验程序,来检验排序结果是否有序的。

执行TearValidate的命令是

./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar tervalidate

举例:

[root@centos hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar teravalidate /terasort /report

17/12/17 17:03:46 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

17/12/17 17:03:48 INFO input.FileInputFormat: Total input paths to process : 1

Spent 56ms computing base-splits.

Spent 3ms computing TeraScheduler splits.

17/12/17 17:03:48 INFO mapreduce.JobSubmitter: number of splits:1

17/12/17 17:03:49 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1513499297109_0007

17/12/17 17:03:49 INFO impl.YarnClientImpl: Submitted application application_1513499297109_0007

17/12/17 17:03:49 INFO mapreduce.Job: The url to track the job: http://centos:8088/proxy/application_1513499297109_0007/

17/12/17 17:03:49 INFO mapreduce.Job: Running job: job_1513499297109_0007

17/12/17 17:04:00 INFO mapreduce.Job: Job job_1513499297109_0007 running in uber mode : false

17/12/17 17:04:00 INFO mapreduce.Job: map 0% reduce 0%

17/12/17 17:04:08 INFO mapreduce.Job: map 100% reduce 0%

17/12/17 17:04:19 INFO mapreduce.Job: map 100% reduce 100%

17/12/17 17:04:20 INFO mapreduce.Job: Job job_1513499297109_0007 completed successfully

17/12/17 17:04:20 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=92

FILE: Number of bytes written=241805

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1000105

HDFS: Number of bytes written=22

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=4952

Total time spent by all reduces in occupied slots (ms)=8032

Total time spent by all map tasks (ms)=4952

Total time spent by all reduce tasks (ms)=8032

Total vcore-milliseconds taken by all map tasks=4952

Total vcore-milliseconds taken by all reduce tasks=8032

Total megabyte-milliseconds taken by all map tasks=5070848

Total megabyte-milliseconds taken by all reduce tasks=8224768

Map-Reduce Framework

Map input records=10000

Map output records=3

Map output bytes=80

Map output materialized bytes=92

Input split bytes=105

Combine input records=0

Combine output records=0

Reduce input groups=3

Reduce shuffle bytes=92

Reduce input records=3

Reduce output records=1

Spilled Records=6

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=193

CPU time spent (ms)=1250

Physical memory (bytes) snapshot=281731072

Virtual memory (bytes) snapshot=4160716800

Total committed heap usage (bytes)=139284480

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1000000

File Output Format Counters

Bytes Written=22

[root@centos hadoop-2.7.4]# ./bin/hdfs dfs -ls /report

Found 2 items

-rw-r--r-- 1 root supergroup 0 2017-12-17 17:04 /report/_SUCCESS

-rw-r--r-- 1 root supergroup 22 2017-12-17 17:04 /report/part-r-00000

[root@centos hadoop-2.7.4]# ./bin/hdfs dfs -cat /report/part-r-00000

checksum 139abefd74b2

十二 应用场景

十三 参考

http://www.jikexueyuan.com/course/2116.html