ELK 7.2.0 Deployment: Building a Distributed Logging Platform and Sending Logs to Logstash with a Custom log4j Log Level

I. Preface

1. Introduction to ELK

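ELK is short for Elasticsearch + Logstash + Kibana.

- Elasticsearch is a distributed full-text search engine built on Lucene. It reads and writes data through a RESTful API.
- Logstash is a tool for collecting, processing, and forwarding events and log messages.
- Kibana is an open-source data visualization tool for Elasticsearch. It provides a friendly web interface for viewing data stored in Elasticsearch, plus analysis tools such as bar charts, line charts, scatter plots, pie charts, and maps.

In short, Elasticsearch stores the data, Logstash collects logs, formats them, and writes them to Elasticsearch, and Kibana provides visual access to the data in Elasticsearch.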

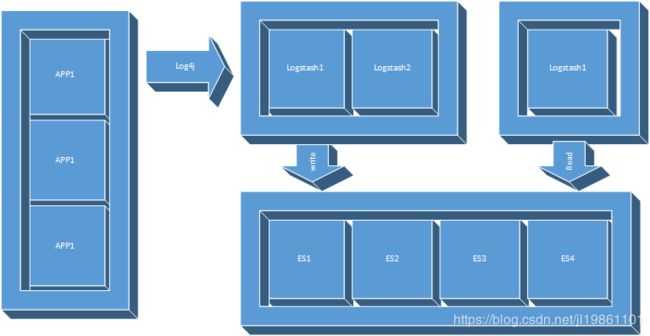

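2. ELK workflow

In a common setup, the application writes logs to Redis under an agreed key, Logstash reads them from Redis and writes them to the Elasticsearch cluster, and Kibana reads the logs from Elasticsearch and displays them as tables and charts on a web page. In the setup built in this article, the application sends JSON logs directly to a Logstash TCP input instead.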

II. Preparation

1. Servers and software

- Servers

Three Ubuntu 18.04 servers in total:

| Hostname | IP | Role |
|---|---|---|
| es1 | 192.168.1.69 | Elasticsearch master node |
| es2 | 192.168.1.70 | Elasticsearch data node (not master-eligible) |
| elk | 192.168.1.71 | Logstash + Kibana |

To save resources, only two Elasticsearch nodes are deployed, and Logstash, Kibana, and Redis share one machine.

For a production deployment, adjust this layout to your own needs.

- Software

| Component | Version |
|---|---|
| Linux Server | Ubuntu 18.04 |
| Elasticsearch | 7.2.0 |
| Logstash | 7.2.0 |
| Kibana | 7.2.0 |
| JDK | 11.0.2 |

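2. Preparing the ELK environment

Elasticsearch refuses to start as root, so the ELK components here run under a regular account.

Linux, however, limits how many files and threads a non-root account can use concurrently.

So every machine that runs an ELK component needs the following changes: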

- Raise the limits on open files and processes

```shell
# Edit the system limits file
sudo vim /etc/security/limits.conf

# Add these lines
* soft nofile 65536
* hard nofile 65536
* soft nproc 4096
* hard nproc 4096
```

- Raise the virtual memory map limit

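```shell
sudo vim /etc/sysctl.conf

# Add this setting
vm.max_map_count=655360
```

These changes take effect after a reboot (alternatively, `sudo sysctl -p` applies `sysctl.conf` immediately, and `limits.conf` applies to new login sessions). The required values differ between systems, hardware, and software versions, so use the errors Elasticsearch reports at startup to set them precisely; Elasticsearch requires `vm.max_map_count` to be at least 262144.

```shell
sudo reboot now
```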
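To confirm the new limits are active before starting Elasticsearch, the values can be read back from `/proc`. Below is a minimal Java sketch, assuming a Linux host; the class and method names are hypothetical, and the thresholds (65535 open files, 4096 threads, `vm.max_map_count` of 262144) are the minimums reported by Elasticsearch's bootstrap checks. `/proc/self/limits` shows the limits of the JVM running the check, so run it under the same account, in a fresh login session, as Elasticsearch.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;

/** Hypothetical helper: reads back the limits Elasticsearch checks at startup (Linux only). */
public class ElkLimitsCheck {

    // Minimums reported by Elasticsearch's bootstrap checks
    public static final long MIN_NOFILE = 65535L;
    public static final long MIN_NPROC = 4096L;
    public static final long MIN_MAX_MAP_COUNT = 262144L;

    /**
     * Returns the soft limit of the row starting with limitName (e.g. "Max open files")
     * in /proc/self/limits, or -1 if the row is missing or the limit is "unlimited".
     */
    public static long parseSoftLimit(List<String> limitsLines, String limitName) {
        for (String line : limitsLines) {
            if (line.startsWith(limitName)) {
                // Remaining columns: soft limit, hard limit, units
                String[] cols = line.substring(limitName.length()).trim().split("\\s+");
                return "unlimited".equals(cols[0]) ? -1L : Long.parseLong(cols[0]);
            }
        }
        return -1L;
    }

    /** True if the content of /proc/sys/vm/max_map_count meets the Elasticsearch minimum. */
    public static boolean maxMapCountOk(String procValue) {
        return Long.parseLong(procValue.trim()) >= MIN_MAX_MAP_COUNT;
    }

    public static void main(String[] args) throws IOException {
        Path maps = Paths.get("/proc/sys/vm/max_map_count");
        Path limits = Paths.get("/proc/self/limits");
        if (!Files.exists(maps) || !Files.exists(limits)) {
            System.out.println("No Linux /proc found, nothing to check");
            return;
        }
        String mapCount = new String(Files.readAllBytes(maps), StandardCharsets.US_ASCII);
        List<String> rows = Files.readAllLines(limits, StandardCharsets.US_ASCII);
        long nofile = parseSoftLimit(rows, "Max open files");
        long nproc = parseSoftLimit(rows, "Max processes");
        System.out.println("vm.max_map_count ok: " + maxMapCountOk(mapCount));
        // -1 means unlimited (or not reported), treated as satisfying the minimum
        System.out.println("soft nofile = " + nofile + ", ok: " + (nofile == -1 || nofile >= MIN_NOFILE));
        System.out.println("soft nproc = " + nproc + ", ok: " + (nproc == -1 || nproc >= MIN_NPROC));
    }
}
```

Run it as the Elasticsearch user after logging in again; every line should report `ok: true`.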

- Download and extract the ELK packages

https://www.elastic.co/cn/downloads

```shell
wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.2.0-linux-x86_64.tar.gz
wget https://artifacts.elastic.co/downloads/logstash/logstash-7.2.0.tar.gz
wget https://artifacts.elastic.co/downloads/kibana/kibana-7.2.0-linux-x86_64.tar.gz
```

III. Installing and Deploying Elasticsearch

Two Elasticsearch nodes are deployed in this article, so every step below that does not name a specific machine must be run on each Elasticsearch machine.

1. Preparation

- Extract

```shell
tar -zxvf elasticsearch-7.2.0-linux-x86_64.tar.gz
```

2. Configuring Elasticsearch

- Edit the configuration

```shell
vim config/elasticsearch.yml
```

- Master node configuration (192.168.1.69)

```yaml
cluster.name: es
node.name: es1
path.data: /home/rock/elasticsearch-7.2.0/data
path.logs: /home/rock/elasticsearch-7.2.0/logs
network.host: 192.168.1.69
http.port: 9200
transport.tcp.port: 9300
node.master: true
node.data: true
discovery.zen.ping.unicast.hosts: ["192.168.1.69:9300","192.168.1.70:9300"]
discovery.zen.minimum_master_nodes: 1
```

- Data node configuration (192.168.1.70)

```yaml
cluster.name: es
node.name: es2
path.data: /home/rock/elasticsearch-7.2.0/data
path.logs: /home/rock/elasticsearch-7.2.0/logs
network.host: 192.168.1.70
http.port: 9200
transport.tcp.port: 9300
node.master: false
node.data: true
discovery.zen.ping.unicast.hosts: ["192.168.1.69:9300","192.168.1.70:9300"]
discovery.zen.minimum_master_nodes: 1
```

- Configuration options

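| Setting | Description |
|---|---|
| cluster.name | Cluster name |
| node.name | Node name |
| path.data | Data directory |
| path.logs | Log directory |
| network.host | Host name or IP address the node binds to |
| http.port | HTTP port |
| transport.tcp.port | TCP transport port for node-to-node communication (renamed `transport.port` in 7.x) |
| node.master | Whether the node is eligible to be elected master |
| node.data | Whether the node stores data |
| discovery.zen.ping.unicast.hosts | Initial list of cluster nodes that a starting node (master or data) probes to discover the cluster (replaced by `discovery.seed_hosts` in 7.x) |
| discovery.zen.minimum_master_nodes | Minimum number of master-eligible nodes that must be visible to elect a master (ignored in 7.x) |

The Zen settings above are still accepted by Elasticsearch 7.x but are deprecated. When a brand-new 7.x cluster starts for the first time, it is bootstrapped from `cluster.initial_master_nodes`, which here would be `["es1"]` on the master-eligible node.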
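The value `1` for `discovery.zen.minimum_master_nodes` follows from the quorum rule the Elasticsearch 6.x documentation gives for that setting: (number of master-eligible nodes / 2) + 1. Only es1 is master-eligible here, so the quorum is 1. A minimal Java sketch of that arithmetic (the class name is hypothetical); Elasticsearch 7.x manages its voting quorum automatically instead.

```java
/**
 * Hypothetical helper: the quorum rule documented for discovery.zen.minimum_master_nodes
 * in Elasticsearch 6.x, i.e. (master-eligible nodes / 2) + 1 using integer division.
 */
public class ZenQuorum {

    public static int minimumMasterNodes(int masterEligibleNodes) {
        if (masterEligibleNodes < 1) {
            throw new IllegalArgumentException("need at least one master-eligible node");
        }
        // Integer division: 1 -> 1, 2 -> 2, 3 -> 2, 5 -> 3
        return masterEligibleNodes / 2 + 1;
    }

    public static void main(String[] args) {
        // In this article only es1 has node.master: true
        System.out.println(minimumMasterNodes(1)); // 1
        // With three master-eligible nodes, two must agree
        System.out.println(minimumMasterNodes(3)); // 2
    }
}
```

The majority requirement is what prevents two halves of a partitioned cluster from each electing their own master; with a single master-eligible node there is no such protection, which is acceptable for this small test setup.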

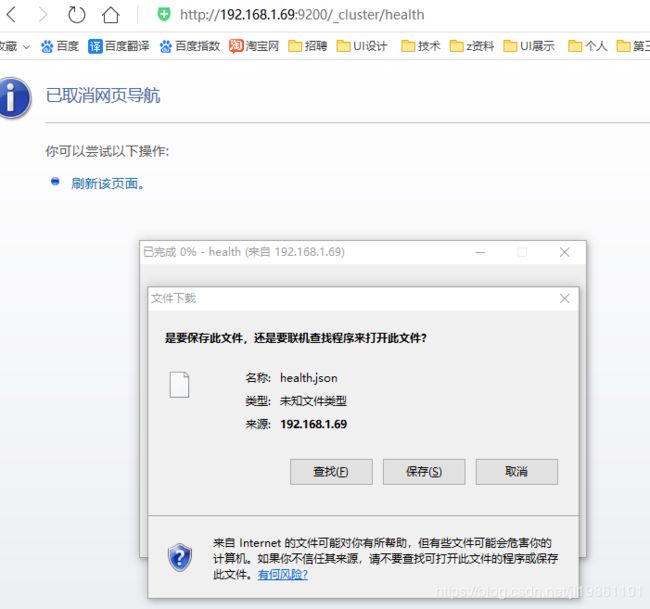

3. Starting Elasticsearch and checking its health

- Start

```shell
# Go to the Elasticsearch root directory
cd /home/rock/elasticsearch-7.2.0

# Start
./bin/elasticsearch
```

- Check the cluster health

```shell
curl "http://192.168.1.69:9200/_cluster/health?pretty"
```

```json
{
  "cluster_name": "es",
  "status": "green",
  "timed_out": false,
  "number_of_nodes": 2,
  "number_of_data_nodes": 2,
  "active_primary_shards": 0,
  "active_shards": 0,
  "relocating_shards": 0,
  "initializing_shards": 0,
  "unassigned_shards": 0,
  "delayed_unassigned_shards": 0,
  "number_of_pending_tasks": 0,
  "number_of_in_flight_fetch": 0,
  "task_max_waiting_in_queue_millis": 0,
  "active_shards_percent_as_number": 100
}
```

A `status` of `green` means the cluster is healthy (all primary and replica shards are allocated).
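The same health check can be scripted, for example at the end of a deployment job. Below is a minimal sketch using `java.net.http.HttpClient` from JDK 11 (the JDK version listed above). The class name is hypothetical, the base URL defaults to es1 from this article, and the regex only looks for the `status` string field, which is enough for a `_cluster/health` response but is not a general JSON parser.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: query _cluster/health and exit non-zero unless the status is green. */
public class EsHealthCheck {

    // Matches "status" : "green|yellow|red"; a numeric error status such as 503 does not match
    private static final Pattern STATUS =
            Pattern.compile("\"status\"\\s*:\\s*\"(green|yellow|red)\"");

    /** Extracts the cluster status from a _cluster/health body, or null if none is found. */
    public static String extractStatus(String json) {
        Matcher m = STATUS.matcher(json);
        return m.find() ? m.group(1) : null;
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        String base = args.length > 0 ? args[0] : "http://192.168.1.69:9200";
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(base + "/_cluster/health"))
                .GET()
                .build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        String status = extractStatus(response.body());
        System.out.println("HTTP " + response.statusCode() + ", cluster status: " + status);
        if (!"green".equals(status)) {
            System.exit(1); // yellow, red, or unknown
        }
    }
}
```

Because it exits with code 1 for anything other than `green`, a shell script can simply run it and stop on failure.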

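IV. Configuring Logstash

- Configure the home, data, and log directories, the JVM options, and the pipelines

```shell
# Go to the directory
cd /home/rock/logstash-7.2.0

# Edit the startup options
vim config/startup.options
```

Change them to:

```
LS_HOME=/home/rock/logstash-7.2.0
LS_SETTINGS_DIR=/home/rock/logstash-7.2.0/config
```

```shell
vim config/logstash.yml
```

Add the following:

```yaml
path.data: /home/rock/logstash-7.2.0/data
path.logs: /home/rock/logstash-7.2.0/logs
```

```shell
vim config/jvm.options
```

Change the following settings. `jvm.options` treats only whole lines starting with `#` as comments, so never put a comment after an option on the same line:

```
# Heap size: adjust to suit your machine
-Xms512m
-Xmx512m

# Switch the garbage collector from the default CMS to G1
#-XX:+UseConcMarkSweepGC
#-XX:CMSInitiatingOccupancyFraction=75
#-XX:+UseCMSInitiatingOccupancyOnly
-XX:+UseG1GC
```

```shell
vim config/pipelines.yml
```

Add the following (`path.config` and `queue.type` must be indented under the list item):

```yaml
- pipeline.id: my_pipeline_name
  path.config: "/home/rock/logstash-7.2.0/config/logstash.conf"
  queue.type: persisted
```

- Configure the Logstash input and output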

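```shell
# Edit the file
vim config/logstash.conf
```

Add the following:

```
input {
  tcp {
    port => 12345
    # The JSON layout writes one JSON object per line; json_lines splits the stream on "\n"
    codec => json_lines
  }
}

filter {
}

output {
  elasticsearch {
    hosts => ["192.168.1.69:9200","192.168.1.70:9200"]
    index => "logstash-%{+YYYY.MM.dd}"
  }
  stdout {
  }
}
```

- Start (without `-f` or `-e`, Logstash loads the pipelines defined in `config/pipelines.yml`)

```shell
bin/logstash
```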
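Before wiring up log4j, the TCP input can be tested on its own by writing one newline-terminated JSON object to port 12345. Below is a minimal sketch using plain `java.net.Socket`. The class name and sample fields are hypothetical, the JSON encoding is hand-rolled for flat string fields only, and the host is the elk machine from this article. If it works, the event shows up on the Logstash console through the `stdout` output and in the current daily `logstash-*` index.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: send one JSON event, terminated by a newline, to a Logstash tcp input. */
public class LogstashTcpSender {

    /** Minimal JSON string escaping: quotes, backslashes and control characters. */
    public static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
            }
        }
        return sb.toString();
    }

    /** Builds a flat JSON object from string fields and ends it with a newline. */
    public static String toJsonLine(Map<String, String> fields) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (!first) sb.append(',');
            first = false;
            sb.append('"').append(escape(e.getKey())).append("\":\"")
              .append(escape(e.getValue())).append('"');
        }
        return sb.append("}\n").toString();
    }

    /** Opens a TCP connection (5 s timeout), writes the line as UTF-8 and closes it. */
    public static void send(String host, int port, String line) throws IOException {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), 5000);
            OutputStream out = socket.getOutputStream();
            out.write(line.getBytes(StandardCharsets.UTF_8));
            out.flush();
        }
    }

    public static void main(String[] args) throws IOException {
        Map<String, String> event = new LinkedHashMap<>();
        event.put("projectName", "my-project");
        event.put("level", "INFO");
        event.put("message", "hello from a plain socket");
        send("192.168.1.71", 12345, toJsonLine(event));
    }
}
```

The trailing newline matters: it is what lets the input split consecutive events sent over the same connection.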

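V. Configuring Kibana

Edit the configuration

```shell
# Go to the directory
cd /home/rock/kibana-7.2.0-linux-x86_64

# Edit the configuration file
vim config/kibana.yml
```

Add the following:

```yaml
server.port: 5601
server.host: "192.168.1.71"
elasticsearch.hosts: ["http://192.168.1.69:9200","http://192.168.1.70:9200"]
```

- Start

```shell
bin/kibana
```

Then open http://192.168.1.71:5601 in a browser.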

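VI. Configuring log4j

The log4j configuration file defines a custom log level and uses a custom JSON layout. The classes below use the Log4j 2 API, whose default configuration file name is `log4j2.xml`. In the configuration, the layout's project name is `my-project` and its pattern is `%m` (the message only).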

Create the custom log4j JSON layout

```java
package com.test.rock.log;

import com.fasterxml.jackson.core.JsonProcessingException;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.apache.commons.lang3.time.DateFormatUtils;
import org.apache.logging.log4j.core.Layout;
import org.apache.logging.log4j.core.LogEvent;
import org.apache.logging.log4j.core.config.Configuration;
import org.apache.logging.log4j.core.config.Node;
import org.apache.logging.log4j.core.config.plugins.*;
import org.apache.logging.log4j.core.layout.AbstractStringLayout;
import org.apache.logging.log4j.core.layout.PatternLayout;
import org.apache.logging.log4j.core.layout.PatternSelector;
import org.apache.logging.log4j.core.pattern.RegexReplacement;

import java.io.File;
import java.lang.management.ManagementFactory;
import java.lang.management.RuntimeMXBean;
import java.nio.charset.Charset;

/** Formats the message with a pattern and wraps it, plus event metadata, in one JSON line. */
@Plugin(name = "ElkJsonPatternLayout", category = Node.CATEGORY, elementType = Layout.ELEMENT_TYPE, printObject = true)
public class ElkJsonPatternLayout extends AbstractStringLayout {

    /** Project path (working directory of the JVM) */
    private static final String PROJECT_PATH = new File("").getAbsolutePath();

    /** ObjectMapper is thread-safe once configured, so one instance is shared by all events */
    private static final ObjectMapper MAPPER = new ObjectMapper();

    /** The process ID never changes, so it is looked up once */
    private static final String PID = getProcessID();

    private final PatternLayout patternLayout;

    private final String projectName;

    private ElkJsonPatternLayout(Configuration config, RegexReplacement replace, String eventPattern,
                                 PatternSelector patternSelector, Charset charset, boolean alwaysWriteExceptions,
                                 boolean noConsoleNoAnsi, String headerPattern, String footerPattern, String projectName) {
        super(config, charset,
                PatternLayout.createSerializer(config, replace, headerPattern, null, patternSelector, alwaysWriteExceptions,
                        noConsoleNoAnsi),
                PatternLayout.createSerializer(config, replace, footerPattern, null, patternSelector, alwaysWriteExceptions,
                        noConsoleNoAnsi));
        this.projectName = projectName;
        this.patternLayout = PatternLayout.newBuilder()
                .withPattern(eventPattern)
                .withPatternSelector(patternSelector)
                .withConfiguration(config)
                .withRegexReplacement(replace)
                .withCharset(charset)
                .withAlwaysWriteExceptions(alwaysWriteExceptions)
                .withNoConsoleNoAnsi(noConsoleNoAnsi)
                .withHeader(headerPattern)
                .withFooter(footerPattern)
                .build();
    }

    @Override
    public String toSerializable(LogEvent event) {
        // Format the message with the configured pattern, then wrap it in JSON.
        // Thread ID and name come from the event, not Thread.currentThread(),
        // so they stay correct when an async appender writes on another thread.
        String message = patternLayout.toSerializable(event);
        String jsonStr = new JsonLoggerInfo(projectName, message, event.getLevel().name(), event.getLoggerName(),
                String.valueOf(event.getThreadId()), event.getThreadName(), event.getTimeMillis()).toString();
        // One JSON object per line
        return jsonStr + "\n";
    }

    @PluginFactory
    public static ElkJsonPatternLayout createLayout(
            @PluginAttribute(value = "pattern", defaultString = PatternLayout.DEFAULT_CONVERSION_PATTERN) final String pattern,
            @PluginElement("PatternSelector") final PatternSelector patternSelector,
            @PluginConfiguration final Configuration config,
            @PluginElement("Replace") final RegexReplacement replace,
            // LOG4J2-783 use platform default by default, so do not specify defaultString for charset
            @PluginAttribute(value = "charset") final Charset charset,
            @PluginAttribute(value = "alwaysWriteExceptions", defaultBoolean = true) final boolean alwaysWriteExceptions,
            @PluginAttribute(value = "noConsoleNoAnsi", defaultBoolean = false) final boolean noConsoleNoAnsi,
            @PluginAttribute("header") final String headerPattern,
            @PluginAttribute("footer") final String footerPattern,
            @PluginAttribute("projectName") final String projectName) {
        return new ElkJsonPatternLayout(config, replace, pattern, patternSelector, charset,
                alwaysWriteExceptions, noConsoleNoAnsi, headerPattern, footerPattern, projectName);
    }

    /** Reads the PID from the JVM name, which HotSpot formats as "pid@hostname". */
    public static String getProcessID() {
        RuntimeMXBean runtime = ManagementFactory.getRuntimeMXBean();
        String name = runtime.getName(); // format: "pid@hostname"
        try {
            return name.substring(0, name.indexOf('@'));
        } catch (Exception e) {
            return null;
        }
    }

    /** Fields written for each log event */
    public static class JsonLoggerInfo {
        /** Project name */
        private String projectName;
        /** Current process ID */
        private String pid;
        /** ID of the thread that logged the event */
        private String tid;
        /** Name of the thread that logged the event */
        private String tidname;
        /** Log message */
        private String message;
        /** Log level */
        private String level;
        /** Log category (logger name) */
        private String topic;
        /** Log time */
        private String time;

        public JsonLoggerInfo(String projectName, String message, String level, String topic,
                              String tid, String tidname, long timeMillis) {
            this.projectName = projectName;
            this.pid = PID;
            this.tid = tid;
            this.tidname = tidname;
            this.message = message;
            this.level = level;
            this.topic = topic;
            this.time = DateFormatUtils.format(timeMillis, "yyyy-MM-dd HH:mm:ss.SSS");
        }

        public String getProjectName() { return projectName; }

        public String getPid() { return pid; }

        public String getTid() { return tid; }

        public String getTidname() { return tidname; }

        public String getMessage() { return message; }

        public String getLevel() { return level; }

        public String getTopic() { return topic; }

        public String getTime() { return time; }

        @Override
        public String toString() {
            try {
                return MAPPER.writeValueAsString(this);
            } catch (JsonProcessingException e) {
                e.printStackTrace();
            }
            return null;
        }
    }
}
```

Test main method

public static void main(String[] args) throws Exception{

log.info("abc abc abc");

for (int i = 0; i < 1000000; i++) {

log.log(Level.toLevel("CUSTOMER"), "hahahahaha");

try{

Thread.sleep(1*1000);

} catch(Exception e){

e.printStackTrace();

}

}

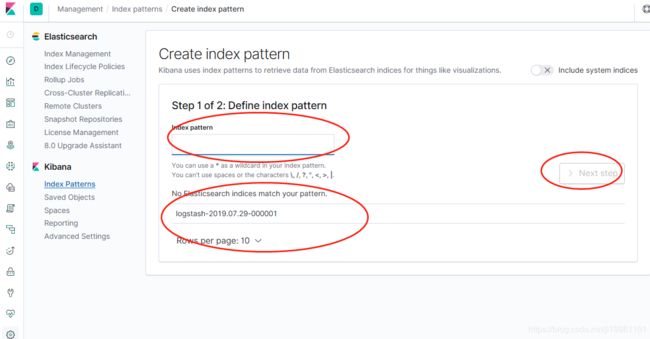

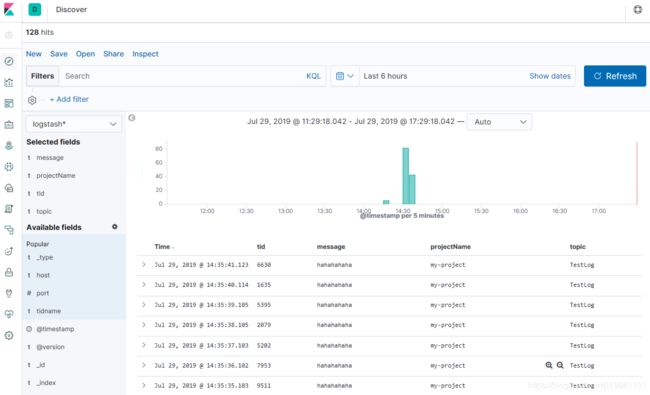

}七、Kibana可视化图形界面,简单查看

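Once the logs are being sent successfully, use Kibana's visual interface to view statistics about the log data.

Create an index pattern (for example `logstash-*`, which matches the daily `logstash-YYYY.MM.dd` indices written by the Logstash output)

View the indexed results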
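Which daily index an event lands in follows from `index => "logstash-%{+YYYY.MM.dd}"`: Logstash formats the event's `@timestamp`, which is in UTC, with a Joda-Time pattern. Below is a minimal Java sketch of the same mapping (the class name is hypothetical). In Joda-Time `YYYY` is the year, while in `java.time` it means week-based year, so the sketch uses `yyyy`. Because the date is taken in UTC, an event logged at 01:30 in UTC+8 goes into the previous day's index, which is worth remembering when picking a time range in Kibana.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

/** Hypothetical helper: the daily index name produced by "logstash-%{+YYYY.MM.dd}". */
public class DailyIndexName {

    // "yyyy" here corresponds to Joda's "YYYY"; java.time's "YYYY" would be the week-based year
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("yyyy.MM.dd").withZone(ZoneOffset.UTC);

    /** Index name for an event whose @timestamp is the given instant. */
    public static String indexFor(Instant timestamp) {
        return "logstash-" + DAY.format(timestamp);
    }

    public static void main(String[] args) {
        System.out.println(indexFor(Instant.now()));
    }
}
```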