Spring Boot: An Analysis of the Official Kafka Sample

The code comes from the official Spring for Apache Kafka repository: https://github.com/spring-projects/spring-kafka/tree/master/samples/sample-03

The ConcurrentKafkaListenerContainerFactory bean

    @Bean
    public ConcurrentKafkaListenerContainerFactory<?, ?> kafkaListenerContainerFactory(
            ConcurrentKafkaListenerContainerFactoryConfigurer configurer,
            ConsumerFactory<Object, Object> kafkaConsumerFactory) {
        ConcurrentKafkaListenerContainerFactory<Object, Object> factory =
                new ConcurrentKafkaListenerContainerFactory<>();
        configurer.configure(factory, kafkaConsumerFactory);
        factory.setBatchListener(true);
        factory.setMessageConverter(batchConverter());
        return factory;
    }

Source analysis:

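ConcurrentKafkaListenerContainerFactory: the factory class for concurrent Kafka listener containers

    public class ConcurrentKafkaListenerContainerFactory<K, V>
            extends AbstractKafkaListenerContainerFactory<ConcurrentMessageListenerContainer<K, V>, K, V>

This factory class creates and initializes the listener containers. Its parent class holds the ContainerProperties (container properties), the ConsumerFactory (consumer factory), the MessageConverter (message conversion), and the KafkaTemplate (Kafka template).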

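ConcurrentKafkaListenerContainerFactoryConfigurer: the configurer for the concurrent Kafka listener container factory:

    public class ConcurrentKafkaListenerContainerFactoryConfigurer

Its fields include KafkaProperties, MessageConverter, KafkaTemplate, and others, which it uses to configure the container factory.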

ConsumerFactory: the factory for Kafka consumers

    public interface ConsumerFactory<K, V>

Its implementation class:

    public class DefaultKafkaConsumerFactory<K, V> implements ConsumerFactory<K, V>

DefaultKafkaConsumerFactory contains:

    private final Map<String, Object> configs;
    private Supplier<Deserializer<K>> keyDeserializerSupplier;
    private Supplier<Deserializer<V>> valueDeserializerSupplier;

    public DefaultKafkaConsumerFactory(Map<String, Object> configs)

    // a series of createConsumer overloads
    public Consumer<K, V> createConsumer(...)

Main method: configure(...), called from the Application class

    configurer.configure(factory, kafkaConsumerFactory);

===>

    public void configure(ConcurrentKafkaListenerContainerFactory<Object, Object> listenerFactory,
            ConsumerFactory<Object, Object> consumerFactory) {
        listenerFactory.setConsumerFactory(consumerFactory);
        configureListenerFactory(listenerFactory);
        configureContainer(listenerFactory.getContainerProperties());
    }

This method takes a ConcurrentKafkaListenerContainerFactory and a ConsumerFactory as parameters. It configures the factory in three steps: it binds the ConsumerFactory to the factory, applies the listener settings through configureListenerFactory, and applies the container settings through configureContainer.

listenerFactory.setConsumerFactory(...)

    public void setConsumerFactory(ConsumerFactory consumerFactory) {
        this.consumerFactory = consumerFactory;
    }

This sets the consumer factory on the ConcurrentKafkaListenerContainerFactory.

configureListenerFactory(...)

    private void configureListenerFactory(ConcurrentKafkaListenerContainerFactory<Object, Object> factory) {
        PropertyMapper map = PropertyMapper.get().alwaysApplyingWhenNonNull();
        Listener properties = this.properties.getListener();
        map.from(properties::getConcurrency).to(factory::setConcurrency);
        map.from(this.messageConverter).to(factory::setMessageConverter);
        map.from(this.replyTemplate).to(factory::setReplyTemplate);
        ......
    }

In this method, ConcurrentKafkaListenerContainerFactoryConfigurer first reads its own KafkaProperties object.

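    @ConfigurationProperties(prefix = "spring.kafka")
    public class KafkaProperties {
        private final Map<String, String> properties = new HashMap<>();
        private final Consumer consumer = new Consumer();
        private final Producer producer = new Producer();
        private final Admin admin = new Admin();
        private final Listener listener = new Listener();
        ......
    }

The KafkaProperties object holds the configuration for talking to the Kafka broker. Its fields are bound to the properties under the spring.kafka prefix in the yml file. A property that is not set keeps its default value; a property that is set in the configuration file overrides the default. These settings are then used to create producers, consumers, Streams, and so on.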
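The "unset properties keep their defaults" behaviour, together with the map.from(...).to(...) calls in configureListenerFactory, can be sketched in plain Java. This is only an illustration of the pattern, not Spring Boot's PropertyMapper: the names NonNullMappingSketch, Source, ListenerSettings, and Factory, and the default values, are all hypothetical.

```java
import java.util.function.Consumer;
import java.util.function.Supplier;

// Hypothetical stand-in for the "apply only when non-null" mapping pattern.
// This is NOT Spring Boot's PropertyMapper, only a sketch of the idea.
class NonNullMappingSketch {

    /** A source value that is handed to a setter only when it is non-null. */
    static final class Source<T> {
        private final Supplier<T> supplier;

        Source(Supplier<T> supplier) {
            this.supplier = supplier;
        }

        void to(Consumer<T> setter) {
            T value = supplier.get();
            if (value != null) {      // unset property: the target keeps its default
                setter.accept(value);
            }
        }
    }

    static <T> Source<T> from(Supplier<T> supplier) {
        return new Source<>(supplier);
    }

    /** Hypothetical settings object, as it might be bound from application.yml. */
    static final class ListenerSettings {
        Integer concurrency;          // null means "not configured"
        String clientId;              // null means "not configured"

        Integer getConcurrency() { return concurrency; }
        String getClientId() { return clientId; }
    }

    /** Hypothetical factory with assumed built-in defaults. */
    static final class Factory {
        int concurrency = 1;
        String clientId = "default-client";

        void setConcurrency(Integer concurrency) { this.concurrency = concurrency; }
        void setClientId(String clientId) { this.clientId = clientId; }
    }

    /** Copies every configured (non-null) setting onto a fresh factory. */
    static Factory configure(ListenerSettings settings) {
        Factory factory = new Factory();
        from(settings::getConcurrency).to(factory::setConcurrency);
        from(settings::getClientId).to(factory::setClientId);
        return factory;
    }

    public static void main(String[] args) {
        ListenerSettings settings = new ListenerSettings();
        settings.concurrency = 3;     // only this property is configured
        Factory factory = configure(settings);
        System.out.println(factory.concurrency + " " + factory.clientId);
    }
}
```

Here only concurrency is configured, so it replaces the factory's default, while clientId is left at whatever the factory started with.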

this.properties.getListener()

    public static class Listener {
        public enum Type {
            SINGLE,
            BATCH
        }
        private Type type = Type.SINGLE;
        private AckMode ackMode;
        private String clientId;
        ......
    }

The Listener class holds the concrete settings for the listener containers, such as the listener type, the ack mode, and the client id.

map.from(properties::getConcurrency).to(factory::setConcurrency)

    private void configureListenerFactory(ConcurrentKafkaListenerContainerFactory factory) {
        map.from(properties::getConcurrency).to(factory::setConcurrency);
        map.from(this.messageConverter).to(factory::setMessageConverter);
        map.from(this.replyTemplate).to(factory::setReplyTemplate);
        ......
    }

This method copies the relevant settings held by ConcurrentKafkaListenerContainerFactoryConfigurer to the corresponding settings of the ConcurrentKafkaListenerContainerFactory.

configureContainer(listenerFactory.getContainerProperties())

    private void configureContainer(ContainerProperties container) {
        PropertyMapper map = PropertyMapper.get().alwaysApplyingWhenNonNull();
        Listener properties = this.properties.getListener();
        map.from(properties::getAckMode).to(container::setAckMode);
        ......
    }

In the same way, this method reads the configurer's KafkaProperties object, gets its Listener, and copies the corresponding properties to the container's settings.

factory.setBatchListener(true)

factory.setMessageConverter(batchConverter())

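    public void setBatchListener(Boolean batchListener) {
        this.batchListener = batchListener;
    }

    public void setMessageConverter(MessageConverter messageConverter) {
        this.messageConverter = messageConverter;
    }

These methods set whether the listener receives messages in batches and which converter is used for the messages. Kafka messages must be serialized before they are sent.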

The RecordMessageConverter class

    @Bean
    public RecordMessageConverter converter() {
        return new StringJsonMessageConverter();
    }

Tracing this class step by step:

===>

    public StringJsonMessageConverter() {
        super();
    }

===> Into super(), the constructor of StringJsonMessageConverter's parent class

    public JsonMessageConverter() {
        this(JacksonUtils.enhancedObjectMapper());
    }

===> As shown, JacksonUtils.enhancedObjectMapper() returns an ObjectMapper object

    public JsonMessageConverter(ObjectMapper objectMapper) {
        Assert.notNull(objectMapper, "'objectMapper' must not be null.");
        this.objectMapper = objectMapper;
    }

Go back up one level and step into JacksonUtils.enhancedObjectMapper()

===> Into enhancedObjectMapper()

    public static ObjectMapper enhancedObjectMapper() {
        return enhancedObjectMapper(ClassUtils.getDefaultClassLoader());
    }

===> ClassUtils.getDefaultClassLoader(): returns a class loader

    public static ClassLoader getDefaultClassLoader() {
        ClassLoader cl = null;
        try {
            cl = Thread.currentThread().getContextClassLoader();
        }
        ......
    }

Go back up two levels and step into enhancedObjectMapper(ClassUtils.getDefaultClassLoader())

===> Builds the ObjectMapper and registers the well-known modules using the classLoader

    public static ObjectMapper enhancedObjectMapper(ClassLoader classLoader) {
        ObjectMapper objectMapper = JsonMapper.builder()
                .configure(MapperFeature.DEFAULT_VIEW_INCLUSION, false)
                .configure(DeserializationFeature.FAIL_ON_UNKNOWN_PROPERTIES, false)
                .build();
        registerWellKnownModulesIfAvailable(objectMapper, classLoader);
        return objectMapper;
    }

===> Into JsonMapper.builder()

    public static JsonMapper.Builder builder() {
        return new Builder(new JsonMapper());
    }

===> Into new Builder(new JsonMapper())

    public Builder(JsonMapper m) {
        super(m);
    }

===> Into MapperBuilder(M mapper)

    protected MapperBuilder(M mapper)
    {
        _mapper = mapper;
    }

===> Go back up two levels, into new JsonMapper()

    public JsonMapper() {
        this(new JsonFactory());
    }

===> Into JsonFactory()

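    public JsonFactory() {
        this((ObjectCodec) null);
    }

===>

    public JsonFactory(ObjectCodec oc) {
        _objectCodec = oc;
        _quoteChar = DEFAULT_QUOTE_CHAR;
    }

===> Into the ObjectCodec type

Its Javadoc description reads:

Abstract class that defines the interface that {@link JsonParser} and {@link JsonGenerator} use to serialize and deserialize regular Java objects (POJOs aka Beans).

In other words, it is an abstraction whose implementations serialize and deserialize Java objects.

In summary: the converter is the class that turns objects into their serialized form and back.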
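To make the "object to serialized form and back" idea concrete, here is a minimal round trip written without any library. It is a hand-rolled sketch for a single string field, assuming only quotes and backslashes need escaping; the real converter delegates this work to Jackson's ObjectMapper, which handles the general case. The class name JsonRoundTripSketch and its nested Foo are hypothetical.

```java
import java.nio.charset.StandardCharsets;

// Hand-rolled sketch of a JSON round trip for one string field.
// Assumption: only '"' and '\' are escaped; Jackson's ObjectMapper does far more.
class JsonRoundTripSketch {

    /** Hypothetical message type with a single field, similar in shape to Foo1. */
    static final class Foo {
        private final String foo;

        Foo(String foo) { this.foo = foo; }

        String getFoo() { return foo; }
    }

    private static final String PREFIX = "{\"foo\":\"";
    private static final String SUFFIX = "\"}";

    /** Object -> JSON text -> UTF-8 bytes, the form a record value travels in. */
    static byte[] serialize(Foo value) {
        String escaped = value.getFoo()
                .replace("\\", "\\\\")    // escape backslashes first
                .replace("\"", "\\\"");   // then escape quotes
        return (PREFIX + escaped + SUFFIX).getBytes(StandardCharsets.UTF_8);
    }

    /** UTF-8 bytes -> JSON text -> object. */
    static Foo deserialize(byte[] payload) {
        String json = new String(payload, StandardCharsets.UTF_8);
        if (json.length() < PREFIX.length() + SUFFIX.length()
                || !json.startsWith(PREFIX) || !json.endsWith(SUFFIX)) {
            throw new IllegalArgumentException("Unexpected payload: " + json);
        }
        String escaped = json.substring(PREFIX.length(), json.length() - SUFFIX.length());
        StringBuilder text = new StringBuilder();
        for (int i = 0; i < escaped.length(); i++) {
            char c = escaped.charAt(i);
            if (c == '\\' && i + 1 < escaped.length()) {
                c = escaped.charAt(++i);  // drop the backslash, keep the escaped character
            }
            text.append(c);
        }
        return new Foo(text.toString());
    }

    public static void main(String[] args) {
        byte[] payload = serialize(new Foo("bar"));
        System.out.println(new String(payload, StandardCharsets.UTF_8));
        System.out.println(deserialize(payload).getFoo());
    }
}
```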

The BatchMessagingMessageConverter class

    @Bean
    public BatchMessagingMessageConverter batchConverter() {
        return new BatchMessagingMessageConverter(converter());
    }

As above; this is the Messaging converter implementation that is used together with batch message listeners.
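The constructor call above hands the record converter to the batch converter. A rough way to picture that relationship is a batch converter that applies a per-record conversion to every element of a batch. The sketch below shows only that delegation idea; BatchConversionSketch and BatchConverter are hypothetical names, and the real class works on Kafka consumer records and Spring messages rather than plain strings.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;

// Sketch of "batch converter delegates to a record converter".
// Hypothetical types; not the spring-kafka implementation.
class BatchConversionSketch {

    static final class BatchConverter<T> {
        private final Function<String, T> recordConverter;

        BatchConverter(Function<String, T> recordConverter) {
            this.recordConverter = recordConverter;
        }

        /** Converts each raw record value in the batch with the per-record converter. */
        List<T> convert(List<String> rawValues) {
            List<T> converted = new ArrayList<>(rawValues.size());
            for (String raw : rawValues) {
                converted.add(recordConverter.apply(raw));
            }
            return converted;
        }
    }

    public static void main(String[] args) {
        // The per-record conversion here is a simple parse, standing in for JSON conversion.
        BatchConverter<Integer> converter =
                new BatchConverter<Integer>(raw -> Integer.valueOf(raw.trim()));
        System.out.println(converter.convert(Arrays.asList("1", "2", "3")));
    }
}
```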

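The KafkaTemplate class

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

KafkaTemplate is, in the words of its Javadoc, "A template for executing high-level operations." It is a class that wraps the send operations and provides transactions, message conversion, topics, and the producer factory.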

@KafkaListener

    @KafkaListener(id = "fooGroup2", topics = "topic2")
    public void listen1(List<Foo2> foos) throws IOException {
        logger.info("Received: " + foos);
        foos.forEach(f -> kafkaTemplate.send("topic3", f.getFoo().toUpperCase()));
        logger.info("Messages sent, hit Enter to commit tx");
        System.in.read();
    }

The @KafkaListener annotation subscribes the method to a specific topic. The method is called when new records arrive on that topic, and the foos parameter holds the received messages.

Topic creation

    @Bean
    public NewTopic dlt() {
        return new NewTopic("topic1.DLT", 1, (short) 1);
    }

===>

    public NewTopic(String name, int numPartitions, short replicationFactor) {
        this.name = name;
        this.numPartitions = numPartitions;
        this.replicationFactor = replicationFactor;
        this.replicasAssignments = null;
    }

This class describes the topic that is to be created.
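When choosing the constructor arguments, keep in mind that every replica of a partition lives on a different broker, so the replication factor cannot be larger than the number of brokers. A small pre-flight check along those lines might look like the following; TopicSpecCheckSketch and its validate method are hypothetical helpers, not part of the Kafka client API, and the broker count is assumed to be known by the caller.

```java
// Hypothetical pre-flight check for the arguments passed to NewTopic.
// Not part of the Kafka API; the broker itself performs the authoritative validation.
class TopicSpecCheckSketch {

    static void validate(String name, int numPartitions, short replicationFactor, int brokerCount) {
        if (numPartitions < 1) {
            throw new IllegalArgumentException("Topic " + name + " needs at least one partition");
        }
        if (replicationFactor < 1) {
            throw new IllegalArgumentException("Topic " + name + " needs at least one replica");
        }
        if (replicationFactor > brokerCount) {
            throw new IllegalArgumentException("Topic " + name + " asks for " + replicationFactor
                    + " replicas but only " + brokerCount + " broker(s) are available");
        }
    }

    public static void main(String[] args) {
        // Same values as the sample: one partition, one replica, on a single local broker.
        validate("topic1.DLT", 1, (short) 1, 1);
        System.out.println("topic1.DLT is fine for a single broker");

        try {
            validate("topic1.DLT", 1, (short) 3, 1);
        } catch (IllegalArgumentException e) {
            System.out.println("Rejected: " + e.getMessage());
        }
    }
}
```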

The Controller class

    @Autowired
    private KafkaTemplate<Object, Object> template;

    @PostMapping(path = "/send/foos/{what}")
    public void sendFoo(@PathVariable String what) {
        this.template.executeInTransaction(kafkaTemplate -> {
            StringUtils.commaDelimitedListToSet(what).stream()
                    .map(s -> new Foo1(s))
                    .forEach(foo -> kafkaTemplate.send("topic2", foo));
            return null;
        });
    }

This uses KafkaTemplate to send the messages to a specific topic.
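The sends above run inside executeInTransaction, so they become visible together or not at all to consumers that read with isolation.level: read_committed. The following in-memory sketch illustrates only that all-or-nothing idea. Everything in it (LocalTransactionSketch, Topic, Sender, splitToSet) is hypothetical, and it does not model how Kafka actually implements transactions.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Consumer;

// In-memory illustration of all-or-nothing sends; not how Kafka implements transactions.
class LocalTransactionSketch {

    /** Hypothetical topic that only exposes records from committed transactions. */
    static final class Topic {
        private final List<String> committed = new ArrayList<>();

        List<String> readCommitted() {
            return new ArrayList<>(committed);
        }
    }

    /** Hypothetical handle the callback uses to send records. */
    interface Sender {
        void send(String value);
    }

    /** Buffers the callback's sends and publishes them only if the callback completes. */
    static void executeInTransaction(Topic topic, Consumer<Sender> callback) {
        List<String> pending = new ArrayList<>();
        callback.accept(pending::add);      // an exception here skips the commit below
        topic.committed.addAll(pending);    // "commit": all buffered records become visible
    }

    /** Splits "a,b,c" into a set of distinct, non-empty tokens, keeping their order. */
    static Set<String> splitToSet(String what) {
        Set<String> tokens = new LinkedHashSet<>();
        for (String token : what.split(",")) {
            if (!token.isEmpty()) {
                tokens.add(token);
            }
        }
        return tokens;
    }

    public static void main(String[] args) {
        Topic topic2 = new Topic();

        // Successful transaction: both distinct values are committed together.
        executeInTransaction(topic2, sender -> splitToSet("a,b,a").forEach(sender::send));
        System.out.println(topic2.readCommitted());

        // Failing transaction: "c" was sent but never becomes visible.
        try {
            executeInTransaction(topic2, sender -> {
                sender.send("c");
                throw new IllegalStateException("boom");
            });
        } catch (IllegalStateException e) {
            System.out.println("Transaction aborted: " + e.getMessage());
        }
        System.out.println(topic2.readCommitted());
    }
}
```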

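Resource files: the application.yml configuration file

    server:
      port: 9999
    spring:
      application:
        name: broker
      kafka:
        producer:
          value-serializer: org.springframework.kafka.support.serializer.JsonSerializer
          transaction-id-prefix: tx.
        consumer:
          properties:
            isolation.level: read_committed
        bootstrap-servers: localhost:8989

server: settings for Spring Boot's embedded server; the port is 9999.

spring: Spring settings; application.name is the application name.

kafka: Kafka settings, covering the producer, the consumer, and the address and port of the Kafka host.

The settings that start with spring.kafka correspond to the fields of the KafkaProperties class; anything not configured here uses its default.

Note: I added server and spring.application myself. The official sample does not have them, and they make no difference to the result.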

Message object

Foo1

    package com.common;

    public class Foo1 {

        private String foo;

        public Foo1() {
            super();
        }

        public Foo1(String foo) {
            this.foo = foo;
        }

        public String getFoo() {
            return this.foo;
        }

        public void setFoo(String foo) {
            this.foo = foo;
        }

        @Override
        public String toString() {
            return "Foo1 [foo=" + this.foo + "]";
        }
    }

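Ordinary messages are strings. Because Kafka needs records to be serialized for transport, a producer for string messages is configured with:

    properties.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
    properties.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

To send objects, the object itself has to be serialized, so here we use:

    value-serializer: org.springframework.kafka.support.serializer.JsonSerializer

Why is no serializer configured for the key? Spring Boot falls back to StringSerializer for the key when none is set, and the sample sends its records without a key, so the producer chooses a partition for each record by default. The value is the serialized Foo object.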

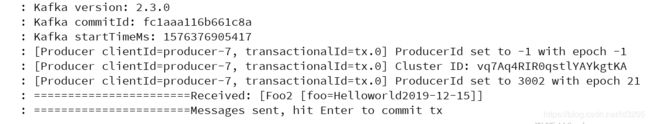

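Analysis of the sample's run results:

Before running the sample, start ZooKeeper and the Kafka server. One point to watch: a topic's replication factor must not be larger than the number of brokers in the Kafka cluster.

Use Postman Canary to send the POST request. The request sends the messages to topic2; the topic2 listener then receives them and sends them on to topic3.

Console log output:

If you spot any problems, please point them out. Thanks for reading, and let's keep learning together.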