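Scraping Judgment Documents from China Judgments Online (中国裁判文书网) with Python and R

Overview:

Target site: http://wenshu.court.gov.cn/

Key task: scrape the DocID of every document

Request URL: http://wenshu.court.gov.cn/List/ListContent

Request method: POST

Form data parameters: Param, Index, Page, Order, Direction, vl5x, number, guid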
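For reference, the POST body with these eight fields can be built with the standard library as below. This is a minimal sketch: `build_list_form` is a hypothetical helper, the example values are the ones the author's script uses, and `<vl5x>`, `<number>` and `<guid>` are placeholders for values obtained in the steps that follow.

```python
from urllib.parse import urlencode, parse_qs


def build_list_form(param, index, page, order, direction, vl5x, number, guid):
    """Hypothetical helper: URL-encode the form fields for POST /List/ListContent."""
    fields = {
        "Param": param,
        "Index": str(index),
        "Page": str(page),
        "Order": order,
        "Direction": direction,
        "vl5x": vl5x,
        "number": number,
        "guid": guid,
    }
    # urlencode percent-encodes the Chinese text as UTF-8; a POST body must be bytes
    return urlencode(fields).encode("utf-8")


body = build_list_form("案件类型:民事案件,法院地域:浙江省", 1, 20, "法院层级", "asc",
                       "<vl5x>", "<number>", "<guid>")
print(parse_qs(body.decode("ascii"))["Param"])  # ['案件类型:民事案件,法院地域:浙江省']
```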

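Analysis:

1. guid is also called a UUID (universally unique identifier)

2. number is obtained by sending a POST request to http://wenshu.court.gov.cn/ValiCode/GetCode, with guid as the form data

3. vl5x is generated by the getKey() function (the JavaScript that determines this is Lawyee.CPWSW.JsTree.js)

4. Case-cause hierarchy: Lawyee.CPWSW.DictData.js

5. getKey() is defined in the response to List?Sorttype=1&conditions=searchWord+1+AJLX++... (it is packed; look for the packed marker), and the function is JavaScript code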
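Steps 1 and 2 can be sketched with the standard library as follows. It assumes, as the author's script does, that a random UUID4 is an acceptable guid; note that the guid in the author's Referer URL does not follow the standard 8-4-4-4-12 grouping, so whether the server checks the format is not known. `make_guid` and `build_code_request` are hypothetical helper names, and the request is only built here, not sent.

```python
import uuid
import urllib.request
from urllib.parse import urlencode

CODE_URL = "http://wenshu.court.gov.cn/ValiCode/GetCode"


def make_guid():
    # The author's script uses a random UUID4 as the guid
    return str(uuid.uuid4())


def build_code_request(guid, user_agent="Mozilla/5.0"):
    """Build (but do not send) the POST that exchanges a guid for `number`."""
    data = urlencode({"guid": guid}).encode("utf-8")
    req = urllib.request.Request(CODE_URL, data=data)
    req.add_header("User-Agent", user_agent)
    return req


guid = make_guid()
req = build_code_request(guid)
print(req.get_method())  # POST (urllib switches to POST when data is supplied)
# number = urllib.request.urlopen(req).read().decode("utf-8", "ignore")
```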

Note: all of the above has to be tracked down patiently on your own; there is no shortcut.

6. Unpacking the packed getKey() function:

Copy the getKey() function, i.e. function getKey() {.....}, into http://tool.chinaz.com/js.aspx to unpack it, then paste the unpacked code into http://jsbeautifier.org/ to format it.
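As an offline alternative to the online unpacker (this is not the tool the author used), the sketch below decodes Dean Edwards' packer format, i.e. code of the form eval(function(p,a,c,k,e,d){...}('payload',radix,count,'sym|tab'.split('|'),0,{})). It assumes the packed getKey() uses that standard argument layout with a radix of at most 62; `unpack` is a hypothetical helper, and its output still needs beautifying.

```python
import re

# Digits produced by the packer's e() function for radix <= 62:
# 0-9, then a-z (10-35), then A-Z (36-61)
ALPHABET = "0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ"

# Captures payload, radix, count and the '|'-separated symbol table
PACKED_ARGS = re.compile(
    r"}\('(.*)',\s*(\d+),\s*(\d+),\s*'(.*?)'\.split\('\|'\)", re.S)


def _to_int(word, radix):
    """Decode one packer token; return None if it is not a number in `radix`."""
    value = 0
    for ch in word:
        digit = ALPHABET.find(ch)
        if digit < 0 or digit >= radix:
            return None
        value = value * radix + digit
    return value


def unpack(source):
    match = PACKED_ARGS.search(source)
    if match is None:
        raise ValueError("no p,a,c,k,e,d arguments found")
    payload, radix, count, symtab = match.groups()
    radix, count = int(radix), int(count)
    if radix > 62:
        raise ValueError("radix > 62 uses a different encoding; not handled here")
    # Undo the JS string-literal escaping of backslashes and single quotes
    payload = re.sub(r"\\([\\'])", r"\1", payload)
    words = symtab.split("|")

    def lookup(m):
        index = _to_int(m.group(0), radix)
        if index is not None and index < min(count, len(words)) and words[index]:
            return words[index]
        return m.group(0)  # empty table slot: the token stands for itself

    # re.ASCII makes \w and \b behave like JavaScript's
    return re.sub(r"\b\w+\b", lookup, payload, flags=re.ASCII)


packed = ("eval(function(p,a,c,k,e,d){return p}"
          "('0 1(){2 3}',10,4,'function|getKey|return|x'.split('|'),0,{}))")
print(unpack(packed))  # function getKey(){return x}
```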

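7. Debugging the unpacked getKey() JavaScript

Create a file named unpacked.html with the following content:

Open unpacked.html in Google Chrome, press F12 and open Console (use the filter), then click the 【点击这里】 ("click here") button. The console reports getCookie is not defined, because the file calls a getCookie function that it never defines. getCookie reads the vjkl5 value from the cookie, so the call can simply be replaced with that value. While debugging, you can replace return or eval with alert to inspect the output, working through the code line by line.

For the parts marked in red above, use the Console to find out what is missing, supply it (find the corresponding links in the original page), and put those files in the same directory as unpacked.html.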
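Instead of editing the JavaScript by hand, the missing getCookie can also be satisfied by prepending a stub that returns the vjkl5 value before the code is compiled. A minimal sketch: `stub_get_cookie` is a hypothetical helper, the stub ignores which cookie name it is asked for, and the one-line getKey() below is a toy stand-in for the real unpacked function. The stub only takes effect if getkey.js does not define its own getCookie, since a later JavaScript function declaration would override it.

```python
import json


def stub_get_cookie(js_source, vjkl5):
    """Prepend a hypothetical getCookie() that always returns the vjkl5 value."""
    # json.dumps produces a correctly quoted and escaped JS string literal
    stub = "function getCookie(name) { return %s; }\n" % json.dumps(vjkl5)
    return stub + js_source


js = stub_get_cookie("function getKey() { return getCookie('vjkl5'); }", "abc123")
print(js.splitlines()[0])  # function getCookie(name) { return "abc123"; }
# With PyExecJS and a JS runtime installed: execjs.compile(js).call("getKey")
```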

Configuration file downloads:

getKey after JS deobfuscation and beautification: https://download.csdn.net/download/qq_38984677/10541403

getkey JS file: https://download.csdn.net/download/qq_38984677/10541401

base64 JS file: https://download.csdn.net/download/qq_38984677/10541398

The code is as follows:

```python
import http.cookiejar
import random
import re
import urllib.parse
import urllib.request
import uuid

import execjs  # PyExecJS; needs a JavaScript runtime (e.g. Node.js) installed

guid = str(uuid.uuid4())
print("guid:" + guid)

# Change these paths to wherever you saved the JS files
js_all = ""
for path in ("F:/../base64.js", "F:/../md5.js", "F:/../getkey.js"):
    with open(path, "r") as fh:
        js_all += fh.read() + "\n"

# The fourth UA string is my own, shared here
uapools = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.79 Safari/537.36 Edge/14.14393",
    "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.22 Safari/537.36 SE 2.X MetaSr 1.0",
    "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Maxthon 2.0)",
    "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36",
]

# Search-result page, used both as the Referer and to obtain the vjkl5 cookie
list_page = (
    "http://wenshu.court.gov.cn/list/list/?sorttype=1&number=A3EXFTV7"
    "&guid=a4103106-1934-74f9c684-59d9b84f84e1"
    "&conditions=searchWord+1+AJLX++%E6%A1%88%E4%BB%B6%E7%B1%BB%E5%9E%8B:%E5%88%91%E4%BA%8B%E6%A1%88%E4%BB%B6"
    "&conditions=searchWord+%E6%B5%99%E6%B1%9F%E7%9C%81+++%E6%B3%95%E9%99%A2%E5%9C%B0%E5%9F%9F:%E6%B5%99%E6%B1%9F%E7%9C%81"
)

cjar = http.cookiejar.CookieJar()
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cjar))
opener.addheaders = [("Referer", list_page), ("User-Agent", random.choice(uapools))]
urllib.request.install_opener(opener)

# Visit the list page once so the server sets the vjkl5 cookie
urllib.request.urlopen(list_page).read()

# Read vjkl5 from the cookie jar (falls back to 0 if it was not set)
vjkl5 = next((c.value for c in cjar if c.name == "vjkl5"), 0)
print("vjkl5:" + str(vjkl5))

# getkey.js contains a hardcoded vjkl5 value; substitute this session's value
js_all = js_all.replace("ce7c8849dffea151c0179187f85efc9751115a7b", str(vjkl5))
vl5x = execjs.compile(js_all).call("getKey")
print("vl5x:" + str(vl5x))

url = "http://wenshu.court.gov.cn/List/ListContent"
codeurl = "http://wenshu.court.gov.cn/ValiCode/GetCode"

# Regex for each field, and the file its matches are appended to
patterns = {
    "Doc_Id.txt": '文书ID.*?".*?"(.*?)."',
    "Date_Referee.txt": '裁判日期.*?".*?"(.*?)."',
    "Case_Name.txt": '案件名称.*?".*?"(.*?)."',
    "Trial_Procedure.txt": '审判程序.*?".*?"(.*?)."',
    "Case_Number.txt": '案号.*?".*?"(.*?)."',
    "Court_Name.txt": '法院名称.*?".*?"(.*?)."',
}

# 100 pages
for i in range(100):
    try:
        # Request a fresh `number` for this guid before each page
        codedata = urllib.parse.urlencode({"guid": guid}).encode("utf-8")
        codereq = urllib.request.Request(codeurl, codedata)
        codereq.add_header("User-Agent", random.choice(uapools))
        number = urllib.request.urlopen(codereq).read().decode("utf-8", "ignore")

        # Adjust the query. Usually only 100 pages can be retrieved, so pass
        # narrower conditions (e.g. law firm or lawyer) to cover more documents
        postdata = urllib.parse.urlencode({
            "Param": "案件类型:民事案件,法院地域:浙江省",
            "Index": str(i + 1),
            "Page": "20",
            "Order": "法院层级",
            "Direction": "asc",
            "number": number,
            "guid": guid,
            "vl5x": vl5x,
        }).encode("utf-8")
        req = urllib.request.Request(url, postdata)
        req.add_header("User-Agent", random.choice(uapools))
        data = urllib.request.urlopen(req).read().decode("utf-8", "ignore")

        # Append every match of each field to its own file, one per line
        for filename, pat in patterns.items():
            values = re.findall(pat, data)
            print(values)
            with open(filename, "a") as out:
                for value in values:
                    out.write(value + "\n")
    except Exception as err:
        print(err)
```

The remaining work is done in R:

setwd("F:/../...")

library("rvest")

library("dplyr")

Case_Name<-read.table("Case_Name.txt",stringsAsFactors = F,sep = '\n')

Case_Number<-read.table("Case_Number.txt",stringsAsFactors = F,sep = '\n')

Court_Name<-read.table("Court_Name.txt",stringsAsFactors = F,sep = '\n')

Date_Referee<-read.table("Date_Referee.txt",stringsAsFactors = F,sep = '\n')

Doc_Id<-read.table("Doc_Id.txt",stringsAsFactors = F,sep = '\n')

Trial_Procedure<-read.table("Trial_Procedure.txt",stringsAsFactors = F,sep = '\n')

document_basic_info<-cbind(Case_Name,Case_Number,Court_Name,Date_Referee,Doc_Id,Trial_Procedure,stringsAsFactors = F)

colnames(document_basic_info)<-c('Case_Name','Case_Number','Court_Name','Date_Referee','Doc_Id','Trial_Procedure')

rm(Case_Name)

rm(Case_Number)

rm(Court_Name)

rm(Date_Referee)

rm(Doc_Id)

rm(Trial_Procedure)

gc()

data_frame_huizong<-data.frame()

url_yuan<-'http://wenshu.court.gov.cn/CreateContentJS/CreateContentJS.aspx?DocID='

for (j in 1:nrow(document_basic_info)) {

url<-paste0(url_yuan,document_basic_info$Doc_Id[j])

i=1

while (i<=10) {

trycatch_value<-tryCatch({

link<-as.character()

link<-read_html(url) %>% html_nodes(xpath="/html/body/div") %>% html_text();

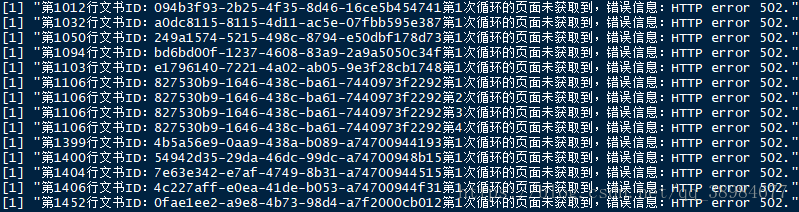

1+1},error=function(e) return(paste0("第",j,"行文书ID:",document_basic_info$Doc_Id[j],"第",i,"次循环的页面未获取到,错误信息:",e$message))

)

if (trycatch_value==2 & (length(link)>0))

break else {

print(trycatch_value)

i=i+1

Sys.sleep(runif(1,3,4))

}

}

#如果某个文书ID始终没有获取到数据,跳出循环

if (i>1 & (length(link)==0)) break

data_frame_tmp<-as.data.frame(cbind(as.character(document_basic_info$Case_Number)[j],link),stringsAsFactors=F)

rm(link)

colnames(data_frame_tmp)<-c("Case_Number","Case_Text")

data_frame_huizong<-rbind(data_frame_huizong,data_frame_tmp)

rm(data_frame_tmp)

gc()

Sys.sleep(runif(1,1,2))

}

rm(j)

rm(url)

data_frame_huizong$Case_Text<-gsub('[[:space:]]',"",data_frame_huizong$Case_Text)以上的Python部分参考了韦玮老师的部分代码,整个爬取过程,1600份文书共1h左右

附上一些例外处理的截图,只是为了debug和log使用,不影响结果的完整及准确性
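One weakness of writing each field to its own file is that a single missed regex match shifts every later row in that file, and the column-wise combination in R then silently pairs the wrong values. The sketch below parses each page once and writes whole rows instead. It assumes the ListContent body is a JSON string that itself encodes a JSON array (which is what the escaped quotes skipped by the author's regexes suggest), that document records use the same Chinese keys, and that any metadata entry lacks 文书ID. `parse_list_content`, `append_rows` and the file name `document_basic_info.csv` are hypothetical.

```python
import csv
import json
import os

# Response key -> column name used by the author's output files
FIELDS = {
    "文书ID": "Doc_Id",
    "裁判日期": "Date_Referee",
    "案件名称": "Case_Name",
    "审判程序": "Trial_Procedure",
    "案号": "Case_Number",
    "法院名称": "Court_Name",
}


def parse_list_content(body):
    """Return one dict per document, assuming a (possibly double) JSON-encoded array."""
    items = json.loads(body)
    if isinstance(items, str):  # double-encoded: decode the inner string too
        items = json.loads(items)
    # Keep only document records; a missing field becomes an empty string
    return [{col: item.get(key, "") for key, col in FIELDS.items()}
            for item in items if isinstance(item, dict) and "文书ID" in item]


def append_rows(rows, path="document_basic_info.csv"):
    """Append rows to one CSV so the six fields of a document share a line."""
    write_header = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=list(FIELDS.values()))
        if write_header:
            writer.writeheader()
        writer.writerows(rows)

# Inside the page loop: append_rows(parse_list_content(data))
```

On the R side, the six separate file reads could then become a single read.csv("document_basic_info.csv", fileEncoding = "UTF-8", stringsAsFactors = FALSE).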