机器学习-决策树模型-西瓜书代码(C4.5)-预剪枝修正

以下代码是本人在学习西瓜书时花费两个礼拜根据原理进行原创,若需转载请咨询本人,谢谢!

自我研究模拟代码

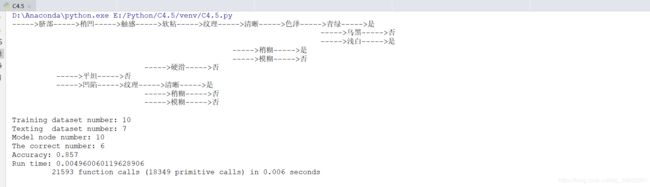

附上离散类别运行截图

c45_config.py

"""

Filename: kdd_config

Author: kdd_zyx

Description: 机器学习 - C4.5(连续值) - 剪枝

Start: 18.10.1

End:

"""

import time as t

import numpy as np

import pandas as pd

import random as r

import cProfile as cPf

text_tnum = 791 # 抽取数据量

kddt_tnum = 1 # KDD测试量

text_pret = 0.225 # 测试集百分比(计算需要排除属性集)

let = 0 # 小于

got = 1 # 大于

start = 1 # 数据上标

end = -1 # 结尾下标

def divi(x, y):

# 除法异常捕捉

if y == 0:

return 0

else:

return x / y

def log2(x):

# log函数异常捕捉

if x == 0:

return 0

else:

return np.log2(x)

def tw_copy(old_arrs):

# 避免deepcopy

new_arrs = []

for arr in old_arrs:

new_arrs.append(arr.copy())

return new_arrs

def flat(datas):

re_datas = []

for data in datas:

if data == datas[end]:

re_datas.append(data)

return re_datas

else:

re_datas.append(float(data))

def radf(text_addr, text_splt):

Data = pd.read_csv('D:\\BaiduNetdiskDownload\\dc\\train.csv')

Data = Data.values

dataset = np.delete(Data, 0, axis=1)

dataset[0] = np.arange(6001)

# with open(text_addr, 'r', enco

# with open(text_addr, 'r', encoding='UTF-8') as f:

# dataset = [eachLine.replace('\n', '').split(text_splt) for eachLine in f]

# f.close()

return dataset.tolist()

def writ(NUM, result):

try:

with open('kdd{}.txt'.format(NUM), 'a+', encoding='UTF-8') as f:

f.write(result)

f.close()

except Exception as e:

print('Error:', e)

writ(result)

# 引入训练集、验证集、测试集

def Lead_dataset():

try:

dataset = radf('c45_csv.txt', ',')

fin_dataset = []

pro_dataset = dataset[0]

txt_dataset = dataset[start : text_tnum]

r.shuffle(txt_dataset)

fin_dataset.append(pro_dataset)

for data in txt_dataset:

fin_dataset.append(data)

kdd_dataset = dataset[text_tnum:]

for data in kdd_dataset:

fin_dataset.append(data)

return fin_dataset

except Exception as e:

print('Error:', e)

c45.py

"""

Filename: kdd_check_one

Author: kdd_zyx

Description: 机器学习 - C4.5(连续值) - 预剪枝

Datas:kdd - 随机划分

Start: 18.10.1

End: 18.10.7

"""

from c45_config import *

class Decision_tree(object):

# 初始化决策树对象

def __init__(self):

self.tra_dataset = []

self.pro_dataset = []

self.end_dataset = []

self.tex_dataset = []

# 引入类别集

def Lead_attrset(self):

end_data = []

for data in self.tra_dataset:

end_data.append(data[end])

return list(set(end_data))

# 构建参数集

def Main_data(self):

dataset = Lead_dataset()

self.tra_num = len(dataset)

if text_pret != 0:

self.tex_num = int(len(dataset[start: ]) * text_pret + 1)

self.tra_dataset = dataset[start : -self.tex_num]

self.tex_dataset = dataset[-self.tex_num: ]

self.pro_dataset = dataset[0]

self.end_dataset = self.Lead_attrset()

else:

pass

# 构建决策树模型

def TreeCreate(self):

self.C45_Tree = []

self.Main_data()

self.TreeGenerate([], self.tra_dataset, self.pro_dataset)

self.Tree_gui()

self.Tree_verify(True)

# 构建决策树枝叶

def TreeGenerate(self, limb, tra_dataset, pro_dataset):

print(len(tra_dataset))

# situation one

condition_one = True

for data in tra_dataset:

if data[end] != tra_dataset[0][end]:

condition_one = False

break

if condition_one:

limb.append(data[end])

self.C45_Tree.append(limb)

return

# situation two

condition_two = True

for data in tra_dataset:

if data[:end] != tra_dataset[0][:end]:

condition_two = False

break

if condition_two or not pro_dataset:

Pk = self.Calc_Max(tra_dataset)

limb.append(self.end_dataset[Pk.index(max(Pk))])

self.C45_Tree.append(limb)

return

# 预剪枝前

Pk = self.Calc_Max(tra_dataset)

limb.append(self.end_dataset[Pk.index(max(Pk))])

self.C45_Tree.append(limb.copy())

accury_font = self.Tree_verify()

Font_Tree = tw_copy(self.C45_Tree)

del limb[end]

del self.C45_Tree[end]

# situation three

tra_data, tra_pro, tra_Ta = self.Calc_InfoGR(tra_dataset, pro_dataset)

limb.append(tra_pro)

limb.append(str(round(tra_Ta, 2)))

comp_copy = {let: '>', got: '<'}

# 预剪枝后

for data in tra_data:

limb.append(comp_copy[tra_data.index(data)])

Pk = self.Calc_Max(data)

limb.append(self.end_dataset[Pk.index(max(Pk))])

self.C45_Tree.append(limb.copy())

del limb[-2: ]

accury_back = self.Tree_verify()

del self.C45_Tree[-2: ]

# 判断剪枝结果

if accury_font >= accury_back:

self.C45_Tree = Font_Tree

return

# 进入非剪枝递归

for data in tra_data:

limb.append(comp_copy[tra_data.index(data)])

self.TreeGenerate(limb.copy(), data, pro_dataset)

del limb[end]

# 模拟树图形结构

def Tree_gui(self):

Go_Tr = '~~~~~'

for num in self.C45_Tree[0]:

print(Go_Tr + str(num), end='')

print()

for tree in self.C45_Tree[start: ]:

num_TF = False

for num in range(len(tree)):

try:

if tree[num] != self.C45_Tree[self.C45_Tree.index(tree) - 1][num] or num_TF:

num_TF = True

print(Go_Tr + str(tree[num]), end='')

else:

print(' ' * len(Go_Tr) + ' ' * len(tree[num]), end='')

except:

print(Go_Tr + str(tree[num]), end='')

print()

print()

# 验证模型

def Tree_verify(self, Tree = False):

verify_num = 0

for data in self.tex_dataset:

for tree in self.C45_Tree:

data_verify = True

for tree_num in range(2, len(tree[ :end]), 3):

pro_num = self.pro_dataset.index(tree[tree_num - 2])

if tree[tree_num] == '>' and float(tree[tree_num - 1]) < data[pro_num]:

data_verify = False

break

elif tree[tree_num] == '<' and float(tree[tree_num - 1]) > data[pro_num]:

data_verify = False

break

if data_verify and tree[end] == data[end]:

verify_num += 1

elif Tree and data_verify and tree[end] != data[end] and data[end] == 'No':

writ(1, tree[end] + '\n')

accury = round(divi(verify_num, self.tex_num - kddt_tnum), 3)

if Tree == True:

print('Training dataset number:', self.tra_num - self.tex_num - 1)

print('Texting dataset number:', self.tex_num - kddt_tnum)

print('The correct number:', verify_num)

print('Model node number:', len(self.C45_Tree))

print('Accuracy:', accury)

return accury

# 信息增益率

def Calc_InfoGR(self, tra_dataset, pro_dataset):

All_ta = []

All_dt = []

All_gain = []

All_ratio = []

for pro in pro_dataset[:end]:

pro_gain, pro_dt, pro_ta = self.Calc_InfoG(tra_dataset, pro)

# 增益率计算

Iv = 0.0

for dt in pro_dt:

iv_num = len(dt)

value = divi(iv_num, len(tra_dataset))

Iv -= (value * log2(value))

pro_ratio = divi(pro_gain, Iv)

All_ta.append(pro_ta)

All_dt.append(pro_dt)

All_gain.append(pro_gain)

All_ratio.append(pro_ratio)

Max_num = 0

Max_ratio = 0.0

All_gain = np.array(All_gain)

aver_gain = All_gain.mean()

for num in range(len(All_gain)):

if All_ratio[num] > Max_ratio and All_gain[num] > aver_gain:

Max_num = num

high_gain = All_gain[Max_num]

Max_ratio = All_ratio[Max_num]

elif All_ratio[num] == Max_ratio and All_gain[num] > high_gain and All_gain[num] > aver_gain:

Max_num = num

high_gain = All_gain[Max_num]

Max_ratio = All_ratio[Max_num]

return All_dt[Max_num], pro_dataset[Max_num], All_ta[Max_num]

# 信息增益

def Calc_InfoG(self, tra_dataset, pro):

Et_Pk = self.Calc_Max(tra_dataset)

Ent_D = self.Calc_Info(Pk=Et_Pk)

pro_indx = self.pro_dataset.index(pro)

tra_dataset = sorted(tra_dataset, key=lambda data:data[pro_indx])

Pk = [[0] * len(self.end_dataset), Et_Pk]

max_num = 0

max_gain_d = 0.0

for data in tra_dataset:

Pk[let][self.end_dataset.index(data[end])] += 1

Pk[got][self.end_dataset.index(data[end])] -= 1

gain_d = Ent_D

num = sum(Pk[let])

for pk in Pk:

ent_d = self.Calc_Info(Pk=pk)

gain_d -= divi(ent_d * sum(pk), len(tra_dataset))

if gain_d > max_gain_d:

max_num = num

max_gain_d = gain_d

max_dt = [tra_dataset[ :max_num], tra_dataset[max_num: ]]

max_ta = divi(max_dt[let][-1][pro_indx] + max_dt[got][0][pro_indx], 2)

return max_gain_d, max_dt, max_ta

# 信息熵

def Calc_Info(self, tra_dataset = None, Pk = None):

if not Pk:

data_num = len(tra_dataset)

Pk = self.Calc_Max(tra_dataset)

else:

data_num = sum(Pk)

Ent_D = 0.0

for pk_value in Pk:

value = divi(pk_value, data_num)

Ent_D -= (value * log2(value))

return Ent_D

# 信息标记分类

def Calc_Max(self, tra_dataset):

Pk = [0] * len(self.end_dataset)

datas = []

for data in tra_dataset:

datas.append(data[end])

for num in range(len(Pk)):

pk_num = datas.count(self.end_dataset[num])

Pk[num] = pk_num

return Pk

if __name__ == '__main__':

start_time = t.time()

tree = Decision_tree()

tree.TreeCreate()

end_time = t.time()

print('Run time:', end_time - start_time)