Installing standalone Hadoop + HBase + Spark on macOS, and writing a Spark "helloworld" program in Scala with IDEA

I. Enable the SSH service

1. First open System Preferences, as shown below:

2. Then select Sharing, as shown below:

3. Check Remote Login and allow access for All users, as shown below:

4. You can now log in via SSH.

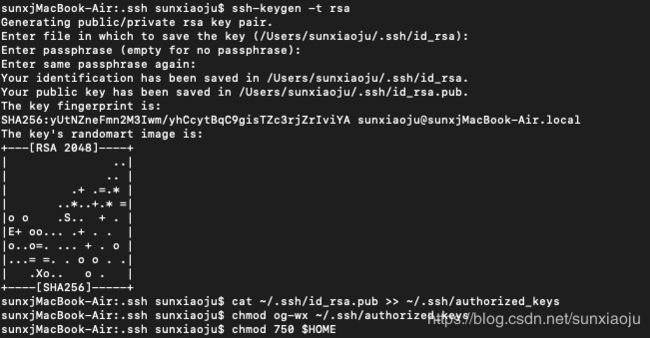

II. Set up passwordless SSH login to the local machine

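1. ssh-keygen can generate either RSA or DSA keys, and RSA is the default; we use RSA here. First create the SSH key on the host with the following command (`-P` must be uppercase; following it with `""` means no passphrase):

`ssh-keygen -t rsa -P ""`

as shown below: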


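2. Two files, id_rsa and id_rsa.pub, are now generated in ~/.ssh/. They always come as a pair (private key and public key). Go to that directory to check, as shown below: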

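3. Append id_rsa.pub to the authorized_keys file. authorized_keys does not exist at first; just run:

`cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys`

4. If a known_hosts file exists, delete it. Then remove write and execute permission for group and others on authorized_keys:

`chmod og-wx ~/.ssh/authorized_keys`

5. Then set the permissions of $HOME to 750:

`chmod 750 $HOME`

as shown below:

6. Then run `ssh localhost` to test; it should log in without a password, as shown below: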

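Steps 4 and 5 matter because sshd, with its default `StrictModes yes`, checks the modes and ownership of the user's files and home directory before accepting a login, and may refuse key-based login when they are writable by group or others. The Scala sketch below is a hypothetical helper (not part of the original setup) that checks only the group/other write bits that `chmod og-wx` and `chmod 750` remove; it does not check ownership.

```scala
import java.nio.file.{Files, Path, Paths}
import java.nio.file.attribute.{PosixFilePermission, PosixFilePermissions}
import java.nio.file.attribute.PosixFilePermission._

object SshPermCheck {
  // True when neither the group nor others may write, which is what
  // `chmod og-wx` (authorized_keys) and `chmod 750` ($HOME) guarantee.
  def noGroupOrOtherWrite(perms: java.util.Set[PosixFilePermission]): Boolean =
    !perms.contains(GROUP_WRITE) && !perms.contains(OTHERS_WRITE)

  // Reads the POSIX permissions of a path (works on macOS and Linux).
  def check(path: Path): Boolean =
    noGroupOrOtherWrite(Files.getPosixFilePermissions(path))

  def main(args: Array[String]): Unit = {
    val home = Paths.get(System.getProperty("user.home"))
    val paths = Seq(home, home.resolve(".ssh"), home.resolve(".ssh").resolve("authorized_keys"))
    for (p <- paths if Files.exists(p)) {
      val mode = PosixFilePermissions.toString(Files.getPosixFilePermissions(p))
      val verdict = if (check(p)) "ok" else "writable by group or others"
      println(s"$p ($mode): $verdict")
    }
  }
}
```

Running `SshPermCheck.main(Array())` prints one line each for $HOME, ~/.ssh and ~/.ssh/authorized_keys.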

III. Install Hadoop

1. First download it from https://hadoop.apache.org/releases.html; the available versions are shown below:


2. Here we choose the binary release of Hadoop 2.7.7.

3. Put the downloaded archive in a directory of your choice; mine is /sunxj/InstallFile/, as shown below:

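5. Extract it with:

`tar -xzvf hadoop-2.7.7.tar.gz`

6. Go to hadoop-2.7.7/etc/hadoop/, edit hadoop-env.sh and set `export JAVA_HOME` to your Java installation directory. Mine is /Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/ (on macOS, `/usr/libexec/java_home -v 1.8` prints this path):

`export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/`

as shown below: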

7. Inside the hadoop-2.7.7 directory, create an hdfs directory with three subdirectories:

- hadoop-2.7.7/hdfs

- hadoop-2.7.7/hdfs/tmp

- hadoop-2.7.7/hdfs/name

- hadoop-2.7.7/hdfs/data

as shown below:

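8. Configure core-site.xml. Open it with:

`vim etc/hadoop/core-site.xml`

and add the following properties inside the `<configuration>` element (each property is a `<property>` element with `<name>`, `<value>` and an optional `<description>` child):

| name | value | description |
|---|---|---|
| hadoop.tmp.dir | file:/sunxj/InstallFile/hadoop-2.7.7/hdfs/tmp | A base for other temporary directories. |
| io.file.buffer.size | 131072 | |
| fs.defaultFS | hdfs://localhost:9000 | |

as shown below:

Note: the value of the first property must match the /sunxj/InstallFile/hadoop-2.7.7/hdfs/tmp directory created earlier.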
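In Hadoop's `*-site.xml` files each property is a `<property>` element with `<name>`, `<value>` and optional `<description>` children, all inside the root `<configuration>` element. The Scala sketch below is a hypothetical helper (not a Hadoop API) that renders a list of properties in that shape, using the core-site.xml values from step 8, so you can see what the finished file should contain.

```scala
object HadoopConfXml {
  // One configuration property; description is optional, as in the original file.
  final case class Prop(name: String, value: String, description: Option[String] = None)

  // Escape the characters that would break XML text content.
  private def esc(s: String): String =
    s.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;")

  // Render the properties as the <configuration> element of a *-site.xml file.
  def render(props: Seq[Prop]): String = {
    val entries = props.map { p =>
      val desc = p.description.map(d => s"\n    <description>${esc(d)}</description>").getOrElse("")
      s"  <property>\n    <name>${esc(p.name)}</name>\n    <value>${esc(p.value)}</value>$desc\n  </property>"
    }
    (("<configuration>" +: entries) :+ "</configuration>").mkString("\n")
  }

  // The core-site.xml values from step 8.
  val coreSite: Seq[Prop] = Seq(
    Prop("hadoop.tmp.dir", "file:/sunxj/InstallFile/hadoop-2.7.7/hdfs/tmp",
      Some("A base for other temporary directories.")),
    Prop("io.file.buffer.size", "131072"),
    Prop("fs.defaultFS", "hdfs://localhost:9000"))

  def main(args: Array[String]): Unit = println(render(coreSite))
}
```

The shipped files already contain an empty `<configuration>` element (plus an XML header), so in practice you paste only the `<property>` elements into it.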

9. Add JAVA_HOME to mapred-env.sh, as shown below:

12. Add JAVA_HOME to yarn-env.sh, as shown below:


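13. Configure hdfs-site.xml. Open the file with:

`vim etc/hadoop/hdfs-site.xml`

and add the following properties inside `<configuration>` (the `true` in the final column is the `<final>` flag of the two directory properties):

| name | value | final |
|---|---|---|
| dfs.replication | 1 | |
| dfs.namenode.name.dir | file:/sunxj/InstallFile/hadoop-2.7.7/hdfs/name | true |
| dfs.datanode.data.dir | file:/sunxj/InstallFile/hadoop-2.7.7/hdfs/data | true |
| dfs.namenode.secondary.http-address | localhost:9001 | |
| dfs.webhdfs.enabled | true | |
| dfs.permissions | false | |

Note: dfs.replication is 1 because there is only one DataNode, and the values of dfs.namenode.name.dir and dfs.datanode.data.dir must match the hdfs/name and hdfs/data directories created earlier,

as shown below: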
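Steps 8 and 13 require the configured directories to match the ones created in step 7, and formatting HDFS later needs them to be writable. A minimal Scala sketch, assuming the paths used in this article (edit `configured` for your own layout), that resolves the `file:` values and reports missing or non-writable directories:

```scala
import java.net.URI
import java.nio.file.{Files, Path, Paths}

object StorageDirCheck {
  // "file:/a/b" (as written in the XML files) or a bare "/a/b" -> local Path.
  def toLocalPath(value: String): Path =
    if (value.startsWith("file:")) Paths.get(new URI(value)) else Paths.get(value)

  // None if the directory exists and is writable by the current user, else a message.
  def problem(value: String): Option[String] = {
    val p = toLocalPath(value)
    val user = System.getProperty("user.name")
    if (!Files.isDirectory(p)) Some(s"$p is missing or not a directory")
    else if (!Files.isWritable(p)) Some(s"$p is not writable by $user")
    else None
  }

  // hadoop.tmp.dir, dfs.namenode.name.dir and dfs.datanode.data.dir from this article.
  val configured: Seq[String] = Seq(
    "file:/sunxj/InstallFile/hadoop-2.7.7/hdfs/tmp",
    "file:/sunxj/InstallFile/hadoop-2.7.7/hdfs/name",
    "file:/sunxj/InstallFile/hadoop-2.7.7/hdfs/data")

  def main(args: Array[String]): Unit =
    configured.foreach(v => println(problem(v).getOrElse(s"$v: ok")))
}
```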

14. Copy mapred-site.xml.template to mapred-site.xml:

`cp etc/hadoop/mapred-site.xml.template etc/hadoop/mapred-site.xml`

then edit mapred-site.xml and add the following properties inside `<configuration>`:

| name | value |
|---|---|
| mapreduce.framework.name | yarn |
| mapreduce.jobhistory.address | localhost:10020 |
| mapreduce.jobhistory.webapp.address | localhost:19888 |
| mapred.job.tracker | localhost:8021 |

as shown below:

15. Configure yarn-site.xml. Open it with:

`vim etc/hadoop/yarn-site.xml`

and add the following properties inside `<configuration>`:

| name | value |
|---|---|
| yarn.resourcemanager.address | localhost:18040 |
| yarn.resourcemanager.scheduler.address | localhost:18030 |
| yarn.resourcemanager.webapp.address | localhost:18088 |
| yarn.resourcemanager.resource-tracker.address | localhost:18025 |
| yarn.resourcemanager.admin.address | localhost:18141 |
| yarn.nodemanager.aux-services | mapreduce_shuffle |
| yarn.nodemanager.aux-services.mapreduce_shuffle.class | org.apache.hadoop.mapred.ShuffleHandler |

as shown below:

16. Configure the Hadoop environment variables. Open the file with `sudo vim /etc/profile` and add:

`export HADOOP_HOME=/sunxj/InstallFile/hadoop-2.7.7`

`export PATH="$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH"`

`export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop`

as shown below:

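18. Run the following command so the configuration takes effect immediately:

`source /etc/profile`

19. Then, logged in as the hadoop user, format HDFS with:

`hdfs namenode -format`

as shown below:

Note: the /sunxj/InstallFile/hadoop-2.7.7/hdfs/name/current directory must be writable.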
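If `hdfs` is not found in step 19, the variables from step 16 probably have not taken effect in the current shell. The following hypothetical Scala check (run it from a shell where /etc/profile has been sourced) inspects `HADOOP_HOME`, `HADOOP_CONF_DIR` and `PATH` the way step 16 sets them; it assumes `HADOOP_HOME` has no trailing slash.

```scala
import java.io.File

object EnvCheck {
  // Problems found in an environment map; an empty result means it looks as step 16 intends.
  def problems(env: Map[String, String]): Seq[String] =
    env.get("HADOOP_HOME") match {
      case None => Seq("HADOOP_HOME is not set")
      case Some(home) =>
        val path = env.getOrElse("PATH", "").split(File.pathSeparator).toSet
        val missing = Seq(s"$home/bin", s"$home/sbin")
          .filterNot(path.contains)
          .map(d => s"$d is not on PATH")
        val conf =
          if (env.get("HADOOP_CONF_DIR").contains(s"$home/etc/hadoop")) Nil
          else Seq("HADOOP_CONF_DIR is not $HADOOP_HOME/etc/hadoop")
        missing ++ conf
    }

  def main(args: Array[String]): Unit = {
    val found = problems(sys.env)
    if (found.isEmpty) println("Hadoop environment looks fine") else found.foreach(println)
  }
}
```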

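20. Then start the services with start-all.sh (in Hadoop 2.x this script is marked deprecated; it runs start-dfs.sh and start-yarn.sh), as shown below: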

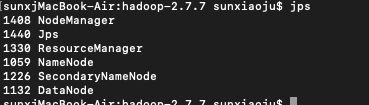

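21. Then run jps to list the Java processes; you should see NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager, as shown below: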

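22. Check the cluster through the web UI. The port of the YARN web UI is set by yarn.resourcemanager.webapp.address in yarn-site.xml; we configured 18088, so open http://localhost:18088 in a browser, as shown below:

Each entry listed on the page is a job that has been run.

23. If you did not change its port, the HDFS web UI uses the default port 50070: http://localhost:50070, as shown below: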
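Besides the browser, you can check that the web UIs are listening with a plain TCP connection. The Scala sketch below assumes the ports used in this article: 18088 (YARN, as configured above), 50070 (HDFS default in Hadoop 2.x), 16010 (HBase Master UI) and 8080 (Spark master UI, used later). A successful connection only shows that something is listening on the port, not that the service is healthy.

```scala
import java.io.IOException
import java.net.{InetSocketAddress, Socket}

object PortCheck {
  // True if a TCP connection to host:port succeeds within timeoutMs.
  def isListening(host: String, port: Int, timeoutMs: Int = 500): Boolean = {
    val socket = new Socket()
    try {
      socket.connect(new InetSocketAddress(host, port), timeoutMs)
      true
    } catch {
      case _: IOException => false // refused or timed out
    } finally {
      socket.close()
    }
  }

  // Web UI ports used in this article.
  val uiPorts: Seq[(String, Int)] = Seq(
    "YARN ResourceManager" -> 18088,
    "HDFS NameNode" -> 50070,
    "HBase Master" -> 16010,
    "Spark Master" -> 8080)

  def main(args: Array[String]): Unit =
    for ((name, port) <- uiPorts) {
      val state = if (isListening("localhost", port)) "listening" else "not reachable"
      println(s"$name (localhost:$port): $state")
    }
}
```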

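24. Use the bundled example to check that the cluster can run jobs:

`hadoop jar /sunxj/InstallFile/hadoop-2.7.7/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.7.jar pi 10 10`

The two arguments are the number of maps (10) and the number of samples per map (10). The output is: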

Number of Maps = 10

Samples per Map = 10

19/01/10 01:28:28 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Wrote input for Map #0

Wrote input for Map #1

Wrote input for Map #2

Wrote input for Map #3

Wrote input for Map #4

Wrote input for Map #5

Wrote input for Map #6

Wrote input for Map #7

Wrote input for Map #8

Wrote input for Map #9

Starting Job

19/01/10 01:28:30 INFO client.RMProxy: Connecting to ResourceManager at localhost/127.0.0.1:18040

19/01/10 01:28:31 INFO input.FileInputFormat: Total input paths to process : 10

19/01/10 01:28:31 INFO mapreduce.JobSubmitter: number of splits:10

19/01/10 01:28:32 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1547054421110_0001

19/01/10 01:28:32 INFO impl.YarnClientImpl: Submitted application application_1547054421110_0001

19/01/10 01:28:32 INFO mapreduce.Job: The url to track the job: http://192.168.0.104:18088/proxy/application_1547054421110_0001/

19/01/10 01:28:32 INFO mapreduce.Job: Running job: job_1547054421110_0001

19/01/10 01:28:41 INFO mapreduce.Job: Job job_1547054421110_0001 running in uber mode : false

19/01/10 01:28:41 INFO mapreduce.Job: map 0% reduce 0%

19/01/10 01:28:58 INFO mapreduce.Job: map 30% reduce 0%

19/01/10 01:28:59 INFO mapreduce.Job: map 60% reduce 0%

19/01/10 01:29:09 INFO mapreduce.Job: map 70% reduce 0%

19/01/10 01:29:10 INFO mapreduce.Job: map 100% reduce 0%

19/01/10 01:29:11 INFO mapreduce.Job: map 100% reduce 100%

19/01/10 01:29:12 INFO mapreduce.Job: Job job_1547054421110_0001 completed successfully

19/01/10 01:29:12 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=226

FILE: Number of bytes written=1355618

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=2690

HDFS: Number of bytes written=215

HDFS: Number of read operations=43

HDFS: Number of large read operations=0

HDFS: Number of write operations=3

Job Counters

Launched map tasks=10

Launched reduce tasks=1

Data-local map tasks=10

Total time spent by all maps in occupied slots (ms)=125151

Total time spent by all reduces in occupied slots (ms)=11133

Total time spent by all map tasks (ms)=125151

Total time spent by all reduce tasks (ms)=11133

Total vcore-milliseconds taken by all map tasks=125151

Total vcore-milliseconds taken by all reduce tasks=11133

Total megabyte-milliseconds taken by all map tasks=128154624

Total megabyte-milliseconds taken by all reduce tasks=11400192

Map-Reduce Framework

Map input records=10

Map output records=20

Map output bytes=180

Map output materialized bytes=280

Input split bytes=1510

Combine input records=0

Combine output records=0

Reduce input groups=2

Reduce shuffle bytes=280

Reduce input records=20

Reduce output records=0

Spilled Records=40

Shuffled Maps =10

Failed Shuffles=0

Merged Map outputs=10

GC time elapsed (ms)=989

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=1911554048

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1180

File Output Format Counters

Bytes Written=97

Job Finished in 41.692 seconds

Estimated value of Pi is 3.20000000000000000000

25. Hadoop is now installed successfully.
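Hadoop's `pi` example is described as a quasi-Monte Carlo estimator based on a Halton sequence: points are spread over the unit square, and the fraction landing inside the inscribed circle of radius 0.5 approaches π/4. With 10 maps × 10 samples there are only 100 points, so 3.2 means 80 of them landed inside (4 × 80 / 100), which is why the estimate is so coarse. The sketch below reproduces the idea with a 2-D Halton sequence in bases 2 and 3; it does not use Hadoop's exact sample offsets, so its 100-point value may differ from 3.2.

```scala
object QuasiMonteCarloPi {
  // Radical inverse of `index` in `base`: the index-th element of a 1-D Halton sequence in [0, 1).
  def radicalInverse(index: Long, base: Int): Double = {
    var i = index
    var f = 1.0 / base
    var r = 0.0
    while (i > 0) {
      r += f * (i % base)
      i /= base
      f /= base
    }
    r
  }

  // Estimate pi from n 2-D Halton points (bases 2 and 3): the share of points inside
  // the circle of radius 0.5 centred in the unit square approaches pi / 4.
  def estimate(n: Int): Double = {
    val inside = (1 to n).count { k =>
      val x = radicalInverse(k, 2) - 0.5
      val y = radicalInverse(k, 3) - 0.5
      x * x + y * y <= 0.25
    }
    4.0 * inside / n
  }

  def main(args: Array[String]): Unit =
    for (n <- Seq(100, 10000, 1000000)) println(s"n=$n  pi ~ ${estimate(n)}")
}
```

Increasing the sample count (for example `pi 10 1000` on the Hadoop side) tightens the estimate in the same way.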

IV. Install HBase

1. Download HBase from https://hbase.apache.org/downloads.html; we choose the bin package of version 2.0.4, as shown below:

2. Put the downloaded hbase-2.0.4-bin.tar.gz in /sunxj/InstallFile/, as shown below:

3. Then extract it with:

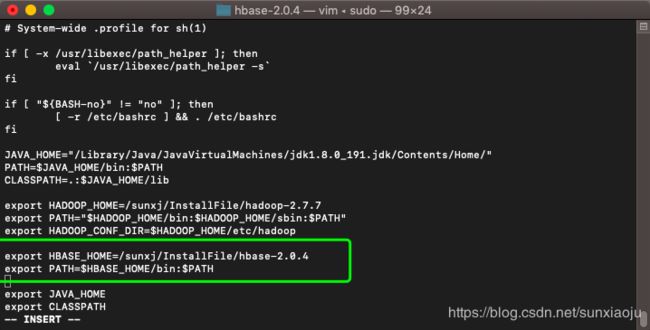

`tar -xzvf hbase-2.0.4-bin.tar.gz`

4. Configure the HBase environment variables by editing /etc/profile:

`sudo vim /etc/profile`

and add the following to the file:

`export HBASE_HOME=/sunxj/InstallFile/hbase-2.0.4`

`export PATH=$HBASE_HOME/bin:$PATH`

as shown below:

5. Save and quit, then run `source /etc/profile` to apply the change.

6. You can now check the version with:

`hbase version`

as shown below:


7. Edit the configuration files: switch to /sunxj/InstallFile/hbase-2.0.4/conf, as shown below:


8. Create a pids directory inside hbase-2.0.4 to hold the PID files of the running HBase processes:

`mkdir /sunxj/InstallFile/hbase-2.0.4/pids`

as shown below:

9. Edit hbase-env.sh:

`vim hbase-env.sh`

and append the following at the end of the file:

`export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home`

`export HADOOP_HOME=/sunxj/InstallFile/hadoop-2.7.7`

`export HBASE_HOME=/sunxj/InstallFile/hbase-2.0.4`

`export HBASE_CLASSPATH=/sunxj/InstallFile/hadoop-2.7.7/etc/hadoop`

`export HBASE_PID_DIR=/sunxj/InstallFile/hbase-2.0.4/pids`

`export HBASE_MANAGES_ZK=false`

as shown below:

HBASE_PID_DIR is the pids directory just created. HBASE_MANAGES_ZK controls whether HBase manages its own ZooKeeper instance: `false` means it does not, and `true` lets HBase start and stop ZooKeeper itself.

10. Create a tmp directory with:

`mkdir /sunxj/InstallFile/hbase-2.0.4/tmp`

as shown below:

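11. Then edit hbase-site.xml and add the following properties inside `<configuration>`:

| name | value | description |
|---|---|---|
| hbase.rootdir | hdfs://localhost:9000/hbase | The directory shared by region servers. |
| hbase.tmp.dir | /sunxj/InstallFile/hbase-2.0.4/tmp | |
| hbase.cluster.distributed | false | |
| hbase.master | localhost:60000 | |

The host and port in hbase.rootdir must match fs.defaultFS in core-site.xml (hdfs://localhost:9000). The result is shown below: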

12. Start Hadoop first, then HBase:

`start-all.sh`

`start-hbase.sh`

as shown below:

13. Then check the processes with jps, as shown below:

14. Then check the web UI at http://localhost:16010, as shown below:

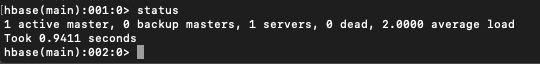

15. HBase is now set up, and you can enter the HBase shell with:

`hbase shell`

as shown below:

16. Type `status` to check the state of HBase, as shown below:

This means there is 1 active master, 0 backup masters, and 1 server in total.

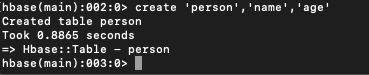

17. Create a table with:

`create 'person','name','age'`

as shown below:

Here person is the table name, and name and age are column families.

18. Insert data into the table with:

`put 'person','1','name','sun'`

`put 'person','1','age',24`

This writes sun into the name column of row 1 in person, and 24 into the age column of row 1, as shown below:


19. Use `scan 'table name'` to view all records in a table, as shown below:


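20. For details, see the operations in the table below.

Some basic HBase shell commands; the commonly used ones are listed here:

| Operation | Command |
|---|---|
| List existing tables | list |
| Create a table | create 'table', 'family1', 'family2', 'familyN' |
| Add a record | put 'table', 'row', 'column:', 'value' |
| Get a record | get 'table', 'row' |
| Count the rows in a table | count 'table' |
| Delete a record | delete 'table', 'row', 'column' |
| Drop a table | The table must be disabled first: step 1 disable 'table', step 2 drop 'table' |
| Show all records | scan 'table' |
| Show all data in one column of a table | scan 'table', {COLUMNS => ['column:']} |
| Update a record | Write it again with put to overwrite the old value |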
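To make the put/overwrite behaviour in the table concrete: an HBase row is addressed by its row key and each cell by `family:qualifier`; in `put 'person','1','name','sun'` the column is the `name` family with an empty qualifier (which `scan` shows as `name:`). The Scala below is only a toy in-memory model of that shape, not the HBase client API. Real HBase keeps timestamped versions of each cell and a plain `get` returns the newest, which is why writing a cell again acts as an update.

```scala
object HBaseModelSketch {
  // Toy model only: row key -> ("family:qualifier" -> latest value).
  type Row = Map[String, String]
  type Table = Map[String, Row]

  // Like put 'table','row','column','value': writing an existing cell again replaces its value.
  def put(t: Table, row: String, column: String, value: String): Table =
    t.updated(row, t.getOrElse(row, Map.empty[String, String]).updated(column, value))

  // Like get 'table','row': every cell of one row.
  def get(t: Table, row: String): Row =
    t.getOrElse(row, Map.empty[String, String])

  // Like delete 'table','row','column'.
  def delete(t: Table, row: String, column: String): Table =
    t.get(row) match {
      case Some(r) => t.updated(row, r - column)
      case None    => t
    }
}
```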

V. Install Spark

1. Install Scala; on macOS just run:

`brew install scala`

as shown below:

2. Run `scala -version` to check the Scala version and confirm the installation works, as shown below:

3. Download Spark from http://spark.apache.org/downloads.html, choosing the Spark release and the Hadoop version it is built for, as shown below:

4. Put the archive in /sunxj/InstallFile/, as shown below:

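5. The permission string now ends with an extra @, which macOS uses to mark extended attributes; you can remove them with `xattr -c -r *`, as shown below: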

6. Then extract Spark with:

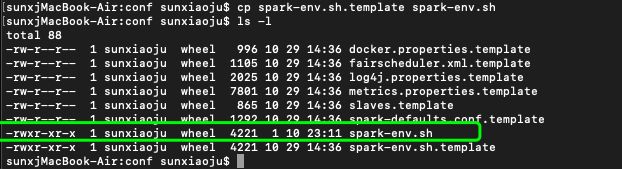

`tar -xzvf spark-2.4.0-bin-hadoop2.7.tgz`

7. Go to /sunxj/InstallFile/spark-2.4.0-bin-hadoop2.7/conf/ to edit the configuration files, as shown below:

8. Copy spark-env.sh.template to spark-env.sh (`cp spark-env.sh.template spark-env.sh`), as shown below:

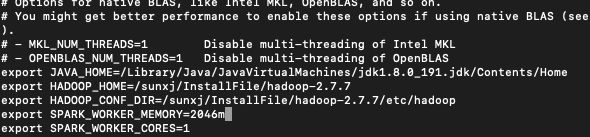

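9. Edit spark-env.sh and append the following configuration at the end of the file:

`export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home`

`export HADOOP_HOME=/sunxj/InstallFile/hadoop-2.7.7`

`export HADOOP_CONF_DIR=/sunxj/InstallFile/hadoop-2.7.7/etc/hadoop`

`export SPARK_WORKER_MEMORY=500m`

`export SPARK_WORKER_CORES=1`

SPARK_WORKER_MEMORY and SPARK_WORKER_CORES limit the total memory and cores the worker offers to Spark applications on this machine. The result is shown below: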

Note: if an `export SPARK_MASTER_IP` line is present, comment it out. Then save and quit.

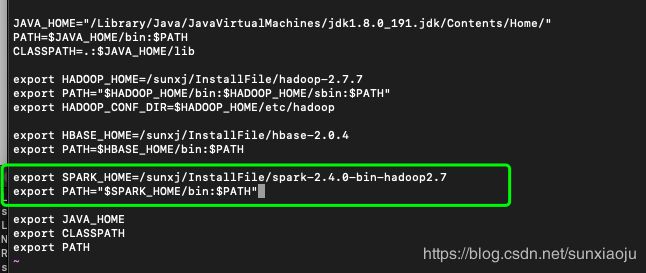

10. Configure the environment variables: edit /etc/profile and append:

`export SPARK_HOME=/sunxj/InstallFile/spark-2.4.0-bin-hadoop2.7`

`export PATH="$SPARK_HOME/bin:$PATH"`

as shown below:

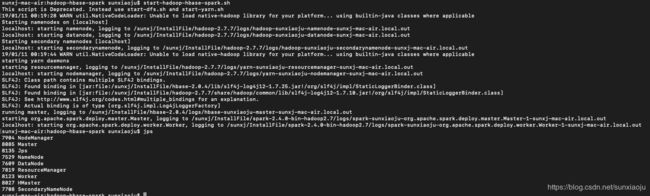

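11. Then run `source /etc/profile` to apply the configuration, and start Hadoop, HBase and Spark in that order with the following commands:

(1) `start-all.sh` (note: sometimes on the first start the NameNode is in standby state; just stop it and start it again)

(2) `start-hbase.sh`

(3) `/sunxj/InstallFile/spark-2.4.0-bin-hadoop2.7/sbin/start-all.sh` (the full path is needed because Hadoop's sbin directory, which is on PATH, also contains a start-all.sh)

as shown below: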


12. Then check the processes, as shown below:

13. The Master and Worker processes have now started successfully.

14. Open the web UI at http://localhost:8080/, as shown below:

If the host name is hard to remember, you can change it; I changed mine to sunxj-mac-air, then restarted Hadoop, HBase and Spark and opened the web UI again, as shown below:

15. Test with the spark-shell command: run it on the master node to enter the Scala environment, as shown below:

16. Then create a worldcount.txt file:

`vim worldcount.txt`

with the following content (one word per line):

hello

hello

world

world

hello

linux

spark

window

linux

spark

spark

linux

hello

sunxj

window

as shown below:

17. Then create a user_data directory in HDFS with:

`hadoop fs -mkdir /user_data`

as shown below:


18. Then list the HDFS root directory with:

`hadoop fs -ls /`

as shown below:

19. A warning appears: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable. To address it, edit hadoop-env.sh and add:

`export HADOOP_OPTS="-Djava.library.path=/sunxj/InstallFile/hadoop-2.7.7/lib/native"`

as shown below:

20. Restart Hadoop and check again: the warning is still there, as shown below:

21. Edit core-site.xml and add the following property inside `<configuration>`:

| name | value |
|---|---|
| hadoop.native.lib | false |

as shown below:


22. Restart Hadoop and check again: the warning is still there, as shown below:

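23. The warning does not affect how programs run, so we skip it for now and come back to it later (the native libraries shipped in the Hadoop binary distribution are built for Linux, so on macOS this warning is expected). Upload the file to HDFS with:

`hadoop fs -put worldcount.txt /user_data`

as shown below: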

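24. Then enter the following code line by line in the Spark (Scala) shell:

`val file=sc.textFile("hdfs://localhost:9000/user_data/worldcount.txt")`

`val rdd = file.flatMap(line => line.split(" ")).map(word => (word,1)).reduceByKey(_+_)`

`rdd.collect()`

`rdd.foreach(println)`

as shown below. `rdd.collect()` returns the (word, count) pairs to the shell, while `rdd.foreach(println)` prints on the executors, so its output appears in the shell only when Spark runs in local mode.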
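To see what each step of the pipeline does without a cluster, the same transformations can be mirrored on an ordinary Scala collection: `flatMap` splits lines into words, `map` pairs each word with 1, and `groupBy` followed by a sum stands in for `reduceByKey(_ + _)`. This is a local sketch, not Spark code; `worldcount` holds the file contents from step 16.

```scala
object LocalWordCount {
  // Same steps as the RDD pipeline, applied to an in-memory Seq of lines.
  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(line => line.split(" "))                    // lines -> words
      .map(word => (word, 1))                              // word -> (word, 1)
      .groupBy(_._1)                                       // group pairs by word
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // sum the 1s per word

  // Contents of worldcount.txt from step 16, one word per line.
  val worldcount: Seq[String] = Seq(
    "hello", "hello", "world", "world", "hello", "linux", "spark", "window",
    "linux", "spark", "spark", "linux", "hello", "sunxj", "window")

  def main(args: Array[String]): Unit =
    wordCount(worldcount).toSeq.sortBy(-_._2).foreach(println)
}
```

For this file the counts are hello 4, linux 3, spark 3, world 2, window 2 and sunxj 1, which is what `rdd.collect()` should contain (in some order). As in the Spark version, a blank line would produce an empty-string key.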

25. Spark is now set up. When writing HDFS paths, you can find them step by step with `hadoop fs -ls hdfs://localhost:9000/`, as shown below:

26. Finally, you can write two scripts, start-hadoop-hbase-spark.sh and stop-hadoop-hbase-spark.sh, to start and stop all these services together. Create a hadoop-hbase-spark directory under /sunxj/InstallFile and create the two script files in it:

`vim start-hadoop-hbase-spark.sh`

with the following content:

`start-all.sh`

`start-hbase.sh`

`/sunxj/InstallFile/spark-2.4.0-bin-hadoop2.7/sbin/start-all.sh`

as shown below:

27. Create stop-hadoop-hbase-spark.sh with:

`vim stop-hadoop-hbase-spark.sh`

with the following content:

`/sunxj/InstallFile/spark-2.4.0-bin-hadoop2.7/sbin/stop-all.sh`

`stop-hbase.sh`

`stop-all.sh`

as shown below:
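The two scripts above keep going even if one step fails (in the shell, adding `set -e` at the top would make them stop at the first failing command). As a hypothetical alternative, this Scala sketch runs the same commands in the same order with `scala.sys.process` and stops at the first non-zero exit code. The command lists mirror the scripts and assume the Hadoop and HBase scripts are on PATH.

```scala
import scala.sys.process._

object ClusterScripts {
  // Run the commands one after another and stop at the first non-zero exit code.
  // Returns the index of the failing command, or None if every command succeeded.
  // Throws java.io.IOException if a command cannot be found.
  def runInOrder(commands: Seq[Seq[String]]): Option[Int] =
    commands.indices.find(i => Process(commands(i)).! != 0)

  private val sparkSbin = "/sunxj/InstallFile/spark-2.4.0-bin-hadoop2.7/sbin"

  // Same order as start-hadoop-hbase-spark.sh: Hadoop, then HBase, then Spark.
  val start: Seq[Seq[String]] =
    Seq(Seq("start-all.sh"), Seq("start-hbase.sh"), Seq(s"$sparkSbin/start-all.sh"))

  // Same order as stop-hadoop-hbase-spark.sh: the reverse of start.
  val stop: Seq[Seq[String]] =
    Seq(Seq(s"$sparkSbin/stop-all.sh"), Seq("stop-hbase.sh"), Seq("stop-all.sh"))

  def main(args: Array[String]): Unit = {
    val commands = if (args.headOption.contains("stop")) stop else start
    runInOrder(commands).foreach { i =>
      val failed = commands(i).mkString(" ")
      sys.error(s"failed: $failed")
    }
  }
}
```

Stopping early matters mainly on start: HBase stores its data under hdfs://localhost:9000/hbase, so starting it without a running HDFS is pointless.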

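29. Change the scripts' permissions to 777 so they can be executed (755, e.g. `chmod 755 *.sh`, is already enough), as shown below: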

30. Then add this directory to /etc/profile with:

`export HADOOP_HBASE_SPARK_START_STOP_SH_HOME=/sunxj/InstallFile/hadoop-hbase-spark`

`export PATH=$HADOOP_HBASE_SPARK_START_STOP_SH_HOME:$PATH`

as shown below:

31. Run `source /etc/profile` to apply it; you can then start Hadoop, HBase and Spark by typing start-hadoop-hbase-spark.sh in the terminal, as shown below:

32. Type stop-hadoop-hbase-spark.sh in the terminal to stop Spark, HBase and Hadoop, as shown below:

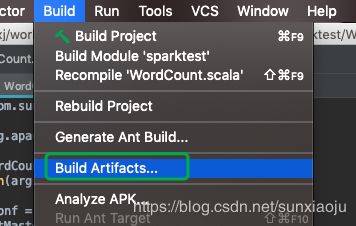

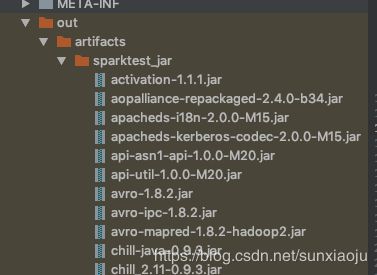

VI. Writing a helloworld program that computes with Spark, using IDEA + Scala

1. First open IDEA, as shown below:

2. Then find Configure -> Plugins in the lower-right corner, as shown below:

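3. Search for scala in the Marketplace to see whether the Scala plugin is installed: if it is, the Installed button is grayed out; otherwise you can install it, as shown below: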

4. Once it is installed, create a new project, as shown below:

5. Choose Maven, select the SDK, then click Next, as shown below:


6. Enter the GroupId and ArtifactId, then click Next, as shown below:


7. Enter the Project name, then click Finish, as shown below:

8. Then open Project Structure, as shown below:

9. Select Libraries, then click + -> Scala SDK, as shown below:

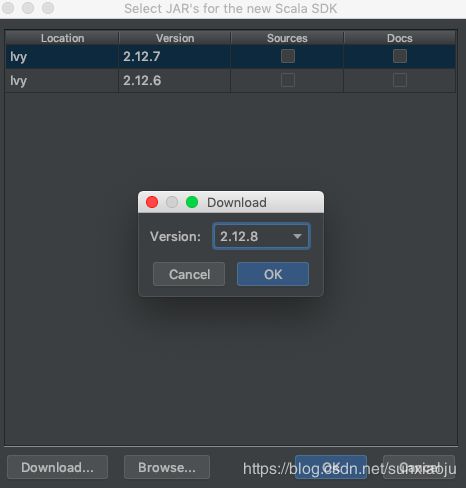

10. You are asked to choose a version; if the version you want is not listed, click the Download... button, as shown below:


11. A dialog appears listing the versions available for download, where you can pick the one to download, as shown below:

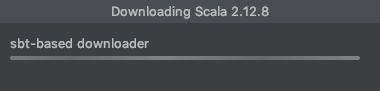

12. Once you have chosen, the download starts; it takes quite a while:

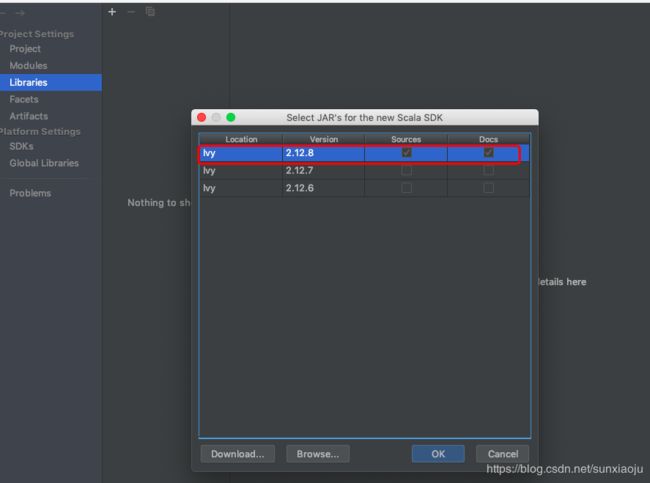

13. Open the dialog again and you will see the Scala version already selected (or you can select it directly), as shown below:

14. After clicking OK you are asked to choose the module, as shown below:

15. After clicking OK, the Scala library is added, as shown below:

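16. Finally click OK to apply. Before configuring this, find out which Scala version your Spark uses, because the Scala SDK chosen here must match it: log in to the Spark cluster and run spark-shell, which enters the Scala environment and shows the version, as shown below:

Otherwise the following error occurs: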

/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/bin/java -agentlib:jdwp=transport=dt_socket,address=127.0.0.1:50675,suspend=y,server=n -javaagent:/Users/sunxiaoju/Library/Caches/IntelliJIdea2018.3/captureAgent/debugger-agent.jar -Dfile.encoding=UTF-8 -classpath "/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/charsets.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/deploy.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/cldrdata.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/dnsns.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/jaccess.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/jfxrt.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/localedata.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/nashorn.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/sunec.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/sunjce_provider.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/sunpkcs11.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/zipfs.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/javaws.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/jce.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/jfr.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/jfxswt.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/jsse.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/management-agent.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/plugin.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home
/jre/lib/resources.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/rt.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/ant-javafx.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/dt.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/javafx-mx.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/jconsole.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/packager.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/sa-jdi.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/tools.jar:/sunxj/work/git/sparktest/target/classes:/Users/sunxiaoju/.ivy2/cache/org.scala-lang/scala-reflect/jars/scala-reflect-2.12.8.jar:/Users/sunxiaoju/.ivy2/cache/org.scala-lang/scala-library/jars/scala-library-2.12.8.jar:/Users/sunxiaoju/.ivy2/cache/org.scala-lang/scala-reflect/srcs/scala-reflect-2.12.8-sources.jar:/Users/sunxiaoju/.ivy2/cache/org.scala-lang/scala-library/srcs/scala-library-2.12.8-sources.jar:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-core_2.12/2.4.0/spark-core_2.12-2.4.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/avro/avro/1.8.2/avro-1.8.2.jar:/Users/sunxiaoju/.m2/repository/org/codehaus/jackson/jackson-core-asl/1.9.13/jackson-core-asl-1.9.13.jar:/Users/sunxiaoju/.m2/repository/org/codehaus/jackson/jackson-mapper-asl/1.9.13/jackson-mapper-asl-1.9.13.jar:/Users/sunxiaoju/.m2/repository/com/thoughtworks/paranamer/paranamer/2.7/paranamer-2.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/commons/commons-compress/1.8.1/commons-compress-1.8.1.jar:/Users/sunxiaoju/.m2/repository/org/tukaani/xz/1.5/xz-1.5.jar:/Users/sunxiaoju/.m2/repository/org/apache/avro/avro-mapred/1.8.2/avro-mapred-1.8.2-hadoop2.jar:/Users/sunxiaoju/.m2/repository/org/apache/avro/avro-ipc/1.8.2/avro-ipc-1.8.2.jar:/Users/sunxiaoju/.m2/repository/commons-codec/commons-codec/1.9/commons-codec-1.9.jar:/Users/sunxiaoju/.m
2/repository/com/twitter/chill_2.12/0.9.3/chill_2.12-0.9.3.jar:/Users/sunxiaoju/.m2/repository/com/esotericsoftware/kryo-shaded/4.0.2/kryo-shaded-4.0.2.jar:/Users/sunxiaoju/.m2/repository/com/esotericsoftware/minlog/1.3.0/minlog-1.3.0.jar:/Users/sunxiaoju/.m2/repository/org/objenesis/objenesis/2.5.1/objenesis-2.5.1.jar:/Users/sunxiaoju/.m2/repository/com/twitter/chill-java/0.9.3/chill-java-0.9.3.jar:/Users/sunxiaoju/.m2/repository/org/apache/xbean/xbean-asm6-shaded/4.8/xbean-asm6-shaded-4.8.jar:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-launcher_2.12/2.4.0/spark-launcher_2.12-2.4.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-kvstore_2.12/2.4.0/spark-kvstore_2.12-2.4.0.jar:/Users/sunxiaoju/.m2/repository/org/fusesource/leveldbjni/leveldbjni-all/1.8/leveldbjni-all-1.8.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/jackson/core/jackson-core/2.6.7/jackson-core-2.6.7.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/jackson/core/jackson-annotations/2.6.7/jackson-annotations-2.6.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-network-common_2.12/2.4.0/spark-network-common_2.12-2.4.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-network-shuffle_2.12/2.4.0/spark-network-shuffle_2.12-2.4.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-unsafe_2.12/2.4.0/spark-unsafe_2.12-2.4.0.jar:/Users/sunxiaoju/.m2/repository/javax/activation/activation/1.1.1/activation-1.1.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/curator/curator-recipes/2.6.0/curator-recipes-2.6.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/curator/curator-framework/2.6.0/curator-framework-2.6.0.jar:/Users/sunxiaoju/.m2/repository/com/google/guava/guava/16.0.1/guava-16.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/zookeeper/zookeeper/3.4.6/zookeeper-3.4.6.jar:/Users/sunxiaoju/.m2/repository/javax/servlet/javax.servlet-api/3.1.0/javax.servlet-api-3.1.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/commons/commons-lang3/3.5/commons-lang3-3
.5.jar:/Users/sunxiaoju/.m2/repository/org/apache/commons/commons-math3/3.4.1/commons-math3-3.4.1.jar:/Users/sunxiaoju/.m2/repository/com/google/code/findbugs/jsr305/1.3.9/jsr305-1.3.9.jar:/Users/sunxiaoju/.m2/repository/org/slf4j/slf4j-api/1.7.16/slf4j-api-1.7.16.jar:/Users/sunxiaoju/.m2/repository/org/slf4j/jul-to-slf4j/1.7.16/jul-to-slf4j-1.7.16.jar:/Users/sunxiaoju/.m2/repository/org/slf4j/jcl-over-slf4j/1.7.16/jcl-over-slf4j-1.7.16.jar:/Users/sunxiaoju/.m2/repository/log4j/log4j/1.2.17/log4j-1.2.17.jar:/Users/sunxiaoju/.m2/repository/org/slf4j/slf4j-log4j12/1.7.16/slf4j-log4j12-1.7.16.jar:/Users/sunxiaoju/.m2/repository/com/ning/compress-lzf/1.0.3/compress-lzf-1.0.3.jar:/Users/sunxiaoju/.m2/repository/org/xerial/snappy/snappy-java/1.1.7.1/snappy-java-1.1.7.1.jar:/Users/sunxiaoju/.m2/repository/org/lz4/lz4-java/1.4.0/lz4-java-1.4.0.jar:/Users/sunxiaoju/.m2/repository/com/github/luben/zstd-jni/1.3.2-2/zstd-jni-1.3.2-2.jar:/Users/sunxiaoju/.m2/repository/org/roaringbitmap/RoaringBitmap/0.5.11/RoaringBitmap-0.5.11.jar:/Users/sunxiaoju/.m2/repository/commons-net/commons-net/3.1/commons-net-3.1.jar:/Users/sunxiaoju/.m2/repository/org/scala-lang/scala-library/2.12.7/scala-library-2.12.7.jar:/Users/sunxiaoju/.m2/repository/org/json4s/json4s-jackson_2.12/3.5.3/json4s-jackson_2.12-3.5.3.jar:/Users/sunxiaoju/.m2/repository/org/json4s/json4s-core_2.12/3.5.3/json4s-core_2.12-3.5.3.jar:/Users/sunxiaoju/.m2/repository/org/json4s/json4s-ast_2.12/3.5.3/json4s-ast_2.12-3.5.3.jar:/Users/sunxiaoju/.m2/repository/org/json4s/json4s-scalap_2.12/3.5.3/json4s-scalap_2.12-3.5.3.jar:/Users/sunxiaoju/.m2/repository/org/scala-lang/modules/scala-xml_2.12/1.0.6/scala-xml_2.12-1.0.6.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/core/jersey-client/2.22.2/jersey-client-2.22.2.jar:/Users/sunxiaoju/.m2/repository/javax/ws/rs/javax.ws.rs-api/2.0.1/javax.ws.rs-api-2.0.1.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/hk2/hk2-api/2.4.0-b34/hk2-api-2.4.0-b34.jar:/Users/sunxiaoju/.m2/repo
sitory/org/glassfish/hk2/hk2-utils/2.4.0-b34/hk2-utils-2.4.0-b34.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/hk2/external/aopalliance-repackaged/2.4.0-b34/aopalliance-repackaged-2.4.0-b34.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/hk2/external/javax.inject/2.4.0-b34/javax.inject-2.4.0-b34.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/hk2/hk2-locator/2.4.0-b34/hk2-locator-2.4.0-b34.jar:/Users/sunxiaoju/.m2/repository/org/javassist/javassist/3.18.1-GA/javassist-3.18.1-GA.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/core/jersey-common/2.22.2/jersey-common-2.22.2.jar:/Users/sunxiaoju/.m2/repository/javax/annotation/javax.annotation-api/1.2/javax.annotation-api-1.2.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/bundles/repackaged/jersey-guava/2.22.2/jersey-guava-2.22.2.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/hk2/osgi-resource-locator/1.0.1/osgi-resource-locator-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/core/jersey-server/2.22.2/jersey-server-2.22.2.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/media/jersey-media-jaxb/2.22.2/jersey-media-jaxb-2.22.2.jar:/Users/sunxiaoju/.m2/repository/javax/validation/validation-api/1.1.0.Final/validation-api-1.1.0.Final.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/containers/jersey-container-servlet/2.22.2/jersey-container-servlet-2.22.2.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/containers/jersey-container-servlet-core/2.22.2/jersey-container-servlet-core-2.22.2.jar:/Users/sunxiaoju/.m2/repository/io/netty/netty-all/4.1.17.Final/netty-all-4.1.17.Final.jar:/Users/sunxiaoju/.m2/repository/io/netty/netty/3.9.9.Final/netty-3.9.9.Final.jar:/Users/sunxiaoju/.m2/repository/com/clearspring/analytics/stream/2.7.0/stream-2.7.0.jar:/Users/sunxiaoju/.m2/repository/io/dropwizard/metrics/metrics-core/3.1.5/metrics-core-3.1.5.jar:/Users/sunxiaoju/.m2/repository/io/dropwizard/metrics/metrics-jvm/3.1.5/metrics-jvm-3.1.5.jar:/Users/sunxiaoju/.m2/rep
ository/io/dropwizard/metrics/metrics-json/3.1.5/metrics-json-3.1.5.jar:/Users/sunxiaoju/.m2/repository/io/dropwizard/metrics/metrics-graphite/3.1.5/metrics-graphite-3.1.5.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/jackson/core/jackson-databind/2.6.7.1/jackson-databind-2.6.7.1.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/jackson/module/jackson-module-scala_2.12/2.6.7.1/jackson-module-scala_2.12-2.6.7.1.jar:/Users/sunxiaoju/.m2/repository/org/scala-lang/scala-reflect/2.12.1/scala-reflect-2.12.1.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/jackson/module/jackson-module-paranamer/2.7.9/jackson-module-paranamer-2.7.9.jar:/Users/sunxiaoju/.m2/repository/org/apache/ivy/ivy/2.4.0/ivy-2.4.0.jar:/Users/sunxiaoju/.m2/repository/oro/oro/2.0.8/oro-2.0.8.jar:/Users/sunxiaoju/.m2/repository/net/razorvine/pyrolite/4.13/pyrolite-4.13.jar:/Users/sunxiaoju/.m2/repository/net/sf/py4j/py4j/0.10.7/py4j-0.10.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-tags_2.12/2.4.0/spark-tags_2.12-2.4.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/commons/commons-crypto/1.0.0/commons-crypto-1.0.0.jar:/Users/sunxiaoju/.m2/repository/org/spark-project/spark/unused/1.0.0/unused-1.0.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-client/3.1.1/hadoop-client-3.1.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-common/3.1.1/hadoop-common-3.1.1.jar:/Users/sunxiaoju/.m2/repository/commons-cli/commons-cli/1.2/commons-cli-1.2.jar:/Users/sunxiaoju/.m2/repository/org/apache/httpcomponents/httpclient/4.5.2/httpclient-4.5.2.jar:/Users/sunxiaoju/.m2/repository/org/apache/httpcomponents/httpcore/4.4.4/httpcore-4.4.4.jar:/Users/sunxiaoju/.m2/repository/commons-io/commons-io/2.5/commons-io-2.5.jar:/Users/sunxiaoju/.m2/repository/commons-collections/commons-collections/3.2.2/commons-collections-3.2.2.jar:/Users/sunxiaoju/.m2/repository/org/eclipse/jetty/jetty-servlet/9.3.19.v20170502/jetty-servlet-9.3.19.v20170502.jar:/Users/sunxiaoju/.m2/repository/org/ecli
pse/jetty/jetty-security/9.3.19.v20170502/jetty-security-9.3.19.v20170502.jar:/Users/sunxiaoju/.m2/repository/org/eclipse/jetty/jetty-webapp/9.3.19.v20170502/jetty-webapp-9.3.19.v20170502.jar:/Users/sunxiaoju/.m2/repository/org/eclipse/jetty/jetty-xml/9.3.19.v20170502/jetty-xml-9.3.19.v20170502.jar:/Users/sunxiaoju/.m2/repository/javax/servlet/jsp/jsp-api/2.1/jsp-api-2.1.jar:/Users/sunxiaoju/.m2/repository/com/sun/jersey/jersey-servlet/1.19/jersey-servlet-1.19.jar:/Users/sunxiaoju/.m2/repository/commons-logging/commons-logging/1.1.3/commons-logging-1.1.3.jar:/Users/sunxiaoju/.m2/repository/commons-lang/commons-lang/2.6/commons-lang-2.6.jar:/Users/sunxiaoju/.m2/repository/commons-beanutils/commons-beanutils/1.9.3/commons-beanutils-1.9.3.jar:/Users/sunxiaoju/.m2/repository/org/apache/commons/commons-configuration2/2.1.1/commons-configuration2-2.1.1.jar:/Users/sunxiaoju/.m2/repository/com/google/re2j/re2j/1.1/re2j-1.1.jar:/Users/sunxiaoju/.m2/repository/com/google/protobuf/protobuf-java/2.5.0/protobuf-java-2.5.0.jar:/Users/sunxiaoju/.m2/repository/com/google/code/gson/gson/2.2.4/gson-2.2.4.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-auth/3.1.1/hadoop-auth-3.1.1.jar:/Users/sunxiaoju/.m2/repository/com/nimbusds/nimbus-jose-jwt/4.41.1/nimbus-jose-jwt-4.41.1.jar:/Users/sunxiaoju/.m2/repository/com/github/stephenc/jcip/jcip-annotations/1.0-1/jcip-annotations-1.0-1.jar:/Users/sunxiaoju/.m2/repository/net/minidev/json-smart/2.3/json-smart-2.3.jar:/Users/sunxiaoju/.m2/repository/net/minidev/accessors-smart/1.2/accessors-smart-1.2.jar:/Users/sunxiaoju/.m2/repository/org/ow2/asm/asm/5.0.4/asm-5.0.4.jar:/Users/sunxiaoju/.m2/repository/org/apache/curator/curator-client/2.12.0/curator-client-2.12.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/htrace/htrace-core4/4.1.0-incubating/htrace-core4-4.1.0-incubating.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerb-simplekdc/1.0.1/kerb-simplekdc-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerb-clie
nt/1.0.1/kerb-client-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerby-config/1.0.1/kerby-config-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerb-core/1.0.1/kerb-core-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerby-pkix/1.0.1/kerby-pkix-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerby-asn1/1.0.1/kerby-asn1-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerby-util/1.0.1/kerby-util-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerb-common/1.0.1/kerb-common-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerb-crypto/1.0.1/kerb-crypto-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerb-util/1.0.1/kerb-util-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/token-provider/1.0.1/token-provider-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerb-admin/1.0.1/kerb-admin-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerb-server/1.0.1/kerb-server-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerb-identity/1.0.1/kerb-identity-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/kerby/kerby-xdr/1.0.1/kerby-xdr-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/codehaus/woodstox/stax2-api/3.1.4/stax2-api-3.1.4.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/woodstox/woodstox-core/5.0.3/woodstox-core-5.0.3.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-hdfs-client/3.1.1/hadoop-hdfs-client-3.1.1.jar:/Users/sunxiaoju/.m2/repository/com/squareup/okhttp/okhttp/2.7.5/okhttp-2.7.5.jar:/Users/sunxiaoju/.m2/repository/com/squareup/okio/okio/1.6.0/okio-1.6.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-yarn-api/3.1.1/hadoop-yarn-api-3.1.1.jar:/Users/sunxiaoju/.m2/repository/javax/xml/bind/jaxb-api/2.2.11/jaxb-api-2.2.11.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-yarn-client/3.1.1/hadoop-yarn-client-3.1.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-mapreduce-client-core/3.1.1
/hadoop-mapreduce-client-core-3.1.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-yarn-common/3.1.1/hadoop-yarn-common-3.1.1.jar:/Users/sunxiaoju/.m2/repository/org/eclipse/jetty/jetty-util/9.3.19.v20170502/jetty-util-9.3.19.v20170502.jar:/Users/sunxiaoju/.m2/repository/com/sun/jersey/jersey-core/1.19/jersey-core-1.19.jar:/Users/sunxiaoju/.m2/repository/javax/ws/rs/jsr311-api/1.1.1/jsr311-api-1.1.1.jar:/Users/sunxiaoju/.m2/repository/com/sun/jersey/jersey-client/1.19/jersey-client-1.19.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/jackson/module/jackson-module-jaxb-annotations/2.7.8/jackson-module-jaxb-annotations-2.7.8.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/jackson/jaxrs/jackson-jaxrs-json-provider/2.7.8/jackson-jaxrs-json-provider-2.7.8.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/jackson/jaxrs/jackson-jaxrs-base/2.7.8/jackson-jaxrs-base-2.7.8.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-mapreduce-client-jobclient/3.1.1/hadoop-mapreduce-client-jobclient-3.1.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-mapreduce-client-common/3.1.1/hadoop-mapreduce-client-common-3.1.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-annotations/3.1.1/hadoop-annotations-3.1.1.jar:/Applications/IntelliJ IDEA.app/Contents/lib/idea_rt.jar" com.sunxj.sparktest.WordCount

Connected to the target VM, address: '127.0.0.1:50675', transport: 'socket'

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

19/01/06 22:53:38 INFO SparkContext: Running Spark version 2.4.0

19/01/06 22:53:39 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

19/01/06 22:53:40 INFO SparkContext: Submitted application: Spark 学习

19/01/06 22:53:40 INFO SecurityManager: Changing view acls to: sunxiaoju

19/01/06 22:53:40 INFO SecurityManager: Changing modify acls to: sunxiaoju

19/01/06 22:53:40 INFO SecurityManager: Changing view acls groups to:

19/01/06 22:53:40 INFO SecurityManager: Changing modify acls groups to:

19/01/06 22:53:40 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(sunxiaoju); groups with view permissions: Set(); users with modify permissions: Set(sunxiaoju); groups with modify permissions: Set()

19/01/06 22:53:41 INFO Utils: Successfully started service 'sparkDriver' on port 50679.

19/01/06 22:53:41 INFO SparkEnv: Registering MapOutputTracker

19/01/06 22:53:41 INFO SparkEnv: Registering BlockManagerMaster

19/01/06 22:53:41 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

19/01/06 22:53:41 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

19/01/06 22:53:41 INFO DiskBlockManager: Created local directory at /private/var/folders/7m/ls3n9dj958g25ktsw8d9cym80000gn/T/blockmgr-d772314d-fc3a-4ee0-8a69-241a5d73e78b

19/01/06 22:53:41 INFO MemoryStore: MemoryStore started with capacity 912.3 MB

19/01/06 22:53:41 INFO SparkEnv: Registering OutputCommitCoordinator

19/01/06 22:53:42 INFO Utils: Successfully started service 'SparkUI' on port 4040.

19/01/06 22:53:42 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://192.168.0.104:4040

19/01/06 22:53:42 INFO StandaloneAppClient$ClientEndpoint: Connecting to master spark://192.168.0.108:7077...

19/01/06 22:53:43 INFO TransportClientFactory: Successfully created connection to /192.168.0.108:7077 after 133 ms (0 ms spent in bootstraps)

19/01/06 22:54:02 INFO StandaloneAppClient$ClientEndpoint: Connecting to master spark://192.168.0.108:7077...

19/01/06 22:54:22 INFO StandaloneAppClient$ClientEndpoint: Connecting to master spark://192.168.0.108:7077...

19/01/06 22:54:42 ERROR StandaloneSchedulerBackend: Application has been killed. Reason: All masters are unresponsive! Giving up.

19/01/06 22:54:42 WARN StandaloneSchedulerBackend: Application ID is not initialized yet.

19/01/06 22:54:42 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 50690.

19/01/06 22:54:42 INFO NettyBlockTransferService: Server created on 192.168.0.104:50690

19/01/06 22:54:42 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

19/01/06 22:54:42 INFO SparkUI: Stopped Spark web UI at http://192.168.0.104:4040

19/01/06 22:54:42 INFO StandaloneSchedulerBackend: Shutting down all executors

19/01/06 22:54:42 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asking each executor to shut down

19/01/06 22:54:42 WARN StandaloneAppClient$ClientEndpoint: Drop UnregisterApplication(null) because has not yet connected to master

19/01/06 22:54:42 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

19/01/06 22:54:42 INFO MemoryStore: MemoryStore cleared

19/01/06 22:54:42 INFO BlockManager: BlockManager stopped

19/01/06 22:54:42 INFO BlockManagerMaster: BlockManagerMaster stopped

19/01/06 22:54:42 WARN MetricsSystem: Stopping a MetricsSystem that is not running

19/01/06 22:54:42 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

19/01/06 22:54:42 INFO SparkContext: Successfully stopped SparkContext

19/01/06 22:54:42 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 192.168.0.104, 50690, None)

    19/01/06 22:54:42 ERROR SparkContext: Error initializing SparkContext.
    java.lang.NullPointerException
        at org.apache.spark.storage.BlockManagerMaster.registerBlockManager(BlockManagerMaster.scala:64)
        at org.apache.spark.storage.BlockManager.initialize(BlockManager.scala:252)
        at org.apache.spark.SparkContext.<init>(SparkContext.scala:510)
        at com.sunxj.sparktest.WordCount$.main(WordCount.scala:13)
        at com.sunxj.sparktest.WordCount.main(WordCount.scala)
    19/01/06 22:54:42 INFO SparkContext: SparkContext already stopped.
    Exception in thread "main" java.lang.NullPointerException
        at org.apache.spark.storage.BlockManagerMaster.registerBlockManager(BlockManagerMaster.scala:64)
        at org.apache.spark.storage.BlockManager.initialize(BlockManager.scala:252)
        at org.apache.spark.SparkContext.<init>(SparkContext.scala:510)
        at com.sunxj.sparktest.WordCount$.main(WordCount.scala:13)
        at com.sunxj.sparktest.WordCount.main(WordCount.scala)

19/01/06 22:54:43 INFO ShutdownHookManager: Shutdown hook called

19/01/06 22:54:43 INFO ShutdownHookManager: Deleting directory /private/var/folders/7m/ls3n9dj958g25ktsw8d9cym80000gn/T/spark-10c156e0-e3bf-44aa-92a7-77a4080a4c71

Disconnected from the target VM, address: '127.0.0.1:50675', transport: 'socket'

Process finished with exit code 1

As shown in the figure below.

17. Then add the required dependencies to pom.xml, configured as follows:

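    <project>
        <modelVersion>4.0.0</modelVersion>

        <groupId>spark</groupId>
        <artifactId>spark-test</artifactId>
        <version>1.0-SNAPSHOT</version>

        <dependencies>
            <dependency>
                <groupId>org.apache.spark</groupId>
                <artifactId>spark-core_2.11</artifactId>
                <version>2.3.2</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-client</artifactId>
                <version>2.7.7</version>
            </dependency>
        </dependencies>
    </project>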

18. Next, create a package com.sunxj.sparktest and, inside it, a Scala object named WordCount, as shown in the code below:

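    package com.sunxj.sparktest

    import org.apache.spark.{SparkConf, SparkContext}

    object WordCount {
      def main(args: Array[String]): Unit = {
        // "Spark 学习" means "Spark study"; it is the application name that appears in the logs
        val conf = new SparkConf().setAppName("Spark 学习")
          .setMaster("spark://sunxj-mac-air.local:7077") // the ALIVE Spark master
          .set("spark.executor.memory", "500m")
          .set("spark.cores.max", "1")
        val sc = new SparkContext(conf)
        //val line = sc.textFile(args(0))
        val file = sc.textFile("hdfs://localhost:9000/user_data/worldcount.txt")
        // split each line into words, pair every word with 1, then add up the 1s per word
        val rdd = file.flatMap(line => line.split(" ")).map(word => (word, 1)).reduceByKey(_ + _)
        // collect() returns the counts to the driver, so println writes to the IDEA console;
        // rdd.foreach(println) would print on the executors instead
        rdd.collect().foreach(println)
        sc.stop()
      }
    }

The code in IDEA is shown in the figure below.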
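To make the RDD pipeline in WordCount easier to picture (flatMap, then map, then reduceByKey), here is a minimal sketch of the same computation on a local list of lines. It assumes plain Scala collections in place of an RDD and needs no Spark; `LocalWordCount` and the sample input are hypothetical, and `groupBy` plus `sum` stands in for `reduceByKey(_ + _)`, which Spark performs across partitions with a shuffle.

```scala
// Hypothetical, Spark-free sketch of what WordCount computes.
// Scala collections stand in for the RDD; groupBy + sum emulates reduceByKey(_ + _).
object LocalWordCount {
  def countWords(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(line => line.split(" "))                          // same split as the RDD version
      .map(word => (word, 1))                                    // pair each word with 1
      .groupBy(_._1)                                             // gather the pairs of each word
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // add up the 1s

  def main(args: Array[String]): Unit = {
    val sample = Seq("hello spark", "hello hadoop") // hypothetical input lines
    countWords(sample).toSeq.sortBy(_._1).foreach(println)
  }
}
```

Running `LocalWordCount.main` prints one `(word,count)` tuple per line, in the same shape as the pairs the Spark job prints after `collect()`.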

19. Here, spark://sunxj-mac-air.local:7077 is the URL of the Spark master whose status is ALIVE, as shown in the figure below.
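Both the master URL and the HDFS input path are hard-coded in WordCount; the commented-out `sc.textFile(args(0))` line hints at reading them from the command line instead. A minimal sketch, assuming the arguments are passed in the order `<master> <input>`; `WordCountArgs` and `resolve` are hypothetical names, not part of Spark:

```scala
// Hypothetical helper (not part of the original project): take the master URL
// and the input path from the program arguments, falling back to the values
// hard-coded in WordCount when an argument is missing.
object WordCountArgs {
  val DefaultMaster = "spark://sunxj-mac-air.local:7077"
  val DefaultInput = "hdfs://localhost:9000/user_data/worldcount.txt"

  /** args(0) = master URL, args(1) = input path; returns (master, input). */
  def resolve(args: Array[String]): (String, String) = {
    val master = if (args.length > 0) args(0) else DefaultMaster
    val input = if (args.length > 1) args(1) else DefaultInput
    (master, input)
  }
}
```

Inside `WordCount.main`, `val (master, input) = WordCountArgs.resolve(args)` would then feed `.setMaster(master)` and `sc.textFile(input)`. With no Program arguments in the IDEA run configuration, the behaviour stays the same as the hard-coded version.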

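20. hdfs://localhost:9000 is the address of the active NameNode of the Hadoop cluster (the fs.defaultFS value set in core-site.xml), as shown in the figure below.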

The file worldcount.txt already exists in HDFS, at hdfs://localhost:9000/user_data/worldcount.txt, as shown in the figure below.
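The input URI given to `sc.textFile` combines two things configured earlier: the file-system address (fs.defaultFS, hdfs://localhost:9000, from core-site.xml) and the file's path inside HDFS. The sketch below splits the URI that way using only `java.net.URI`; `HdfsPathParts` is a hypothetical helper, and it assumes a fully qualified hdfs:// URI (a bare path such as /user_data/worldcount.txt has no scheme or authority).

```scala
import java.net.URI

// Hypothetical helper: split an HDFS URI into the file-system part, which should
// match fs.defaultFS in core-site.xml, and the path of the file inside HDFS.
object HdfsPathParts {
  /** "hdfs://localhost:9000/user_data/x.txt" -> "hdfs://localhost:9000" */
  def fileSystemUri(uri: String): String = {
    val u = new URI(uri)
    s"${u.getScheme}://${u.getAuthority}"
  }

  /** "hdfs://localhost:9000/user_data/x.txt" -> "/user_data/x.txt" */
  def pathInFs(uri: String): String = new URI(uri).getPath

  def main(args: Array[String]): Unit = {
    val input = "hdfs://localhost:9000/user_data/worldcount.txt"
    println(fileSystemUri(input)) // hdfs://localhost:9000
    println(pathInFs(input))      // /user_data/worldcount.txt
  }
}
```

If the first value differs from fs.defaultFS, the job would be reading from a different NameNode than the one configured in core-site.xml.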


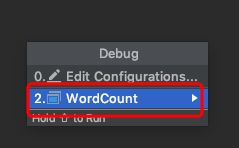

21. Then choose Run -> Debug, as shown in the figure below.

23. Then select WordCount, as shown in the figure below.

24. At this point the following error appears:

Connected to the target VM, address: '127.0.0.1:50188', transport: 'socket'

    Exception in thread "main" java.lang.NoSuchMethodError: scala.Predef$.refArrayOps([Ljava/lang/Object;)Lscala/collection/mutable/ArrayOps;
        at org.apache.spark.internal.config.ConfigHelpers$.stringToSeq(ConfigBuilder.scala:48)
        at org.apache.spark.internal.config.TypedConfigBuilder$$anonfun$toSequence$1.apply(ConfigBuilder.scala:124)
        at org.apache.spark.internal.config.TypedConfigBuilder$$anonfun$toSequence$1.apply(ConfigBuilder.scala:124)
        at org.apache.spark.internal.config.TypedConfigBuilder.createWithDefault(ConfigBuilder.scala:142)
        at org.apache.spark.internal.config.package$.<init>(package.scala:152)
        at org.apache.spark.internal.config.package$.<clinit>(package.scala)
        at org.apache.spark.SparkConf$.<init>(SparkConf.scala:668)
        at org.apache.spark.SparkConf$.<clinit>(SparkConf.scala)
        at org.apache.spark.SparkConf.set(SparkConf.scala:94)
        at org.apache.spark.SparkConf.set(SparkConf.scala:83)
        at org.apache.spark.SparkConf.setAppName(SparkConf.scala:120)
        at com.sunxj.sparktest.WordCount$.main(WordCount.scala:8)
        at com.sunxj.sparktest.WordCount.main(WordCount.scala)

Disconnected from the target VM, address: '127.0.0.1:50188', transport: 'socket'

Process finished with exit code 1

As shown in the figure below.

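25. This error is caused by a mismatch between the Scala version the project depends on and the Scala SDK selected in IDEA. The spark-core_2.11 artifact in pom.xml is built for Scala 2.11 (Maven pulls in scala-library 2.11.x with it), so the Scala SDK selected in IDEA must also be a 2.11.x release; here 2.11.12 is selected, as shown in the figure below.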
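The NoSuchMethodError above means Spark classes compiled for Scala 2.11 ended up running on a different Scala library (typically 2.12, where `Predef.refArrayOps` has a different return type). As a hedged sketch, not part of the original project, the check below compares the runtime version reported by `scala.util.Properties.versionNumberString` with the `_2.11` suffix of the Spark artifact and fails with a readable message. `ScalaVersionCheck` and its methods are hypothetical, and the artifact id is hard-coded as an assumption.

```scala
// Hypothetical fail-fast check: does the Scala runtime IDEA launched with match
// the Scala binary version of the Spark artifact in pom.xml ("_2.11")?
object ScalaVersionCheck {
  /** "2.11.12" -> "2.11": keep only major.minor, the binary-compatible part. */
  def binaryVersion(full: String): String =
    full.split('.').take(2).mkString(".")

  /** "spark-core_2.11" -> Some("2.11"); None when there is no "_" suffix. */
  def artifactSuffix(artifactId: String): Option[String] = {
    val i = artifactId.lastIndexOf('_')
    if (i < 0) None else Some(artifactId.substring(i + 1))
  }

  def main(args: Array[String]): Unit = {
    val runtime = scala.util.Properties.versionNumberString // e.g. "2.11.12"
    val expected = artifactSuffix("spark-core_2.11").getOrElse("?")
    if (binaryVersion(runtime) != expected)
      sys.error(s"Scala runtime $runtime does not match Spark's Scala $expected")
    else
      println(s"OK: Scala $runtime matches _$expected")
  }
}
```

Running the same check at the top of `WordCount.main`, before the SparkConf is built, would replace the long stack trace with a one-line explanation.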

26. Run Debug again; this time the NoSuchMethodError no longer occurs, as the output below shows:

/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/bin/java -agentlib:jdwp=transport=dt_socket,address=127.0.0.1:50386,suspend=y,server=n -javaagent:/Users/sunxiaoju/Library/Caches/IntelliJIdea2018.3/captureAgent/debugger-agent.jar -Dfile.encoding=UTF-8 -classpath "/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/charsets.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/deploy.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/cldrdata.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/dnsns.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/jaccess.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/jfxrt.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/localedata.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/nashorn.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/sunec.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/sunjce_provider.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/sunpkcs11.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/ext/zipfs.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/javaws.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/jce.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/jfr.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/jfxswt.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/jsse.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/management-agent.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/plugin.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home
/jre/lib/resources.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/jre/lib/rt.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/ant-javafx.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/dt.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/javafx-mx.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/jconsole.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/packager.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/sa-jdi.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_191.jdk/Contents/Home/lib/tools.jar:/sunxj/work/sparktest/target/classes:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-core_2.11/2.3.2/spark-core_2.11-2.3.2.jar:/Users/sunxiaoju/.m2/repository/org/apache/avro/avro/1.7.7/avro-1.7.7.jar:/Users/sunxiaoju/.m2/repository/org/codehaus/jackson/jackson-core-asl/1.9.13/jackson-core-asl-1.9.13.jar:/Users/sunxiaoju/.m2/repository/org/codehaus/jackson/jackson-mapper-asl/1.9.13/jackson-mapper-asl-1.9.13.jar:/Users/sunxiaoju/.m2/repository/com/thoughtworks/paranamer/paranamer/2.3/paranamer-2.3.jar:/Users/sunxiaoju/.m2/repository/org/apache/commons/commons-compress/1.4.1/commons-compress-1.4.1.jar:/Users/sunxiaoju/.m2/repository/org/tukaani/xz/1.0/xz-1.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/avro/avro-mapred/1.7.7/avro-mapred-1.7.7-hadoop2.jar:/Users/sunxiaoju/.m2/repository/org/apache/avro/avro-ipc/1.7.7/avro-ipc-1.7.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/avro/avro-ipc/1.7.7/avro-ipc-1.7.7-tests.jar:/Users/sunxiaoju/.m2/repository/com/twitter/chill_2.11/0.8.4/chill_2.11-0.8.4.jar:/Users/sunxiaoju/.m2/repository/com/esotericsoftware/kryo-shaded/3.0.3/kryo-shaded-3.0.3.jar:/Users/sunxiaoju/.m2/repository/com/esotericsoftware/minlog/1.3.0/minlog-1.3.0.jar:/Users/sunxiaoju/.m2/repository/org/objenesis/objenesis/2.1/objenesis-2.1.jar:/Users/sunxiaoju/.m2/repository/com/twitter/chill-jav
a/0.8.4/chill-java-0.8.4.jar:/Users/sunxiaoju/.m2/repository/org/apache/xbean/xbean-asm5-shaded/4.4/xbean-asm5-shaded-4.4.jar:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-launcher_2.11/2.3.2/spark-launcher_2.11-2.3.2.jar:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-kvstore_2.11/2.3.2/spark-kvstore_2.11-2.3.2.jar:/Users/sunxiaoju/.m2/repository/org/fusesource/leveldbjni/leveldbjni-all/1.8/leveldbjni-all-1.8.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/jackson/core/jackson-core/2.6.7/jackson-core-2.6.7.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/jackson/core/jackson-annotations/2.6.7/jackson-annotations-2.6.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-network-common_2.11/2.3.2/spark-network-common_2.11-2.3.2.jar:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-network-shuffle_2.11/2.3.2/spark-network-shuffle_2.11-2.3.2.jar:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-unsafe_2.11/2.3.2/spark-unsafe_2.11-2.3.2.jar:/Users/sunxiaoju/.m2/repository/net/java/dev/jets3t/jets3t/0.9.4/jets3t-0.9.4.jar:/Users/sunxiaoju/.m2/repository/org/apache/httpcomponents/httpcore/4.4.1/httpcore-4.4.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/httpcomponents/httpclient/4.5/httpclient-4.5.jar:/Users/sunxiaoju/.m2/repository/commons-codec/commons-codec/1.11/commons-codec-1.11.jar:/Users/sunxiaoju/.m2/repository/javax/activation/activation/1.1.1/activation-1.1.1.jar:/Users/sunxiaoju/.m2/repository/org/bouncycastle/bcprov-jdk15on/1.52/bcprov-jdk15on-1.52.jar:/Users/sunxiaoju/.m2/repository/com/jamesmurty/utils/java-xmlbuilder/1.1/java-xmlbuilder-1.1.jar:/Users/sunxiaoju/.m2/repository/net/iharder/base64/2.3.8/base64-2.3.8.jar:/Users/sunxiaoju/.m2/repository/org/apache/curator/curator-recipes/2.6.0/curator-recipes-2.6.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/curator/curator-framework/2.6.0/curator-framework-2.6.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/zookeeper/zookeeper/3.4.6/zookeeper-3.4.6.jar:/Users/sunxiaoju/
.m2/repository/com/google/guava/guava/16.0.1/guava-16.0.1.jar:/Users/sunxiaoju/.m2/repository/javax/servlet/javax.servlet-api/3.1.0/javax.servlet-api-3.1.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/commons/commons-lang3/3.5/commons-lang3-3.5.jar:/Users/sunxiaoju/.m2/repository/org/apache/commons/commons-math3/3.4.1/commons-math3-3.4.1.jar:/Users/sunxiaoju/.m2/repository/com/google/code/findbugs/jsr305/1.3.9/jsr305-1.3.9.jar:/Users/sunxiaoju/.m2/repository/org/slf4j/slf4j-api/1.7.16/slf4j-api-1.7.16.jar:/Users/sunxiaoju/.m2/repository/org/slf4j/jul-to-slf4j/1.7.16/jul-to-slf4j-1.7.16.jar:/Users/sunxiaoju/.m2/repository/org/slf4j/jcl-over-slf4j/1.7.16/jcl-over-slf4j-1.7.16.jar:/Users/sunxiaoju/.m2/repository/log4j/log4j/1.2.17/log4j-1.2.17.jar:/Users/sunxiaoju/.m2/repository/org/slf4j/slf4j-log4j12/1.7.16/slf4j-log4j12-1.7.16.jar:/Users/sunxiaoju/.m2/repository/com/ning/compress-lzf/1.0.3/compress-lzf-1.0.3.jar:/Users/sunxiaoju/.m2/repository/org/xerial/snappy/snappy-java/1.1.2.6/snappy-java-1.1.2.6.jar:/Users/sunxiaoju/.m2/repository/org/lz4/lz4-java/1.4.0/lz4-java-1.4.0.jar:/Users/sunxiaoju/.m2/repository/com/github/luben/zstd-jni/1.3.2-2/zstd-jni-1.3.2-2.jar:/Users/sunxiaoju/.m2/repository/org/roaringbitmap/RoaringBitmap/0.5.11/RoaringBitmap-0.5.11.jar:/Users/sunxiaoju/.m2/repository/commons-net/commons-net/2.2/commons-net-2.2.jar:/Users/sunxiaoju/.m2/repository/org/scala-lang/scala-library/2.11.8/scala-library-2.11.8.jar:/Users/sunxiaoju/.m2/repository/org/json4s/json4s-jackson_2.11/3.2.11/json4s-jackson_2.11-3.2.11.jar:/Users/sunxiaoju/.m2/repository/org/json4s/json4s-core_2.11/3.2.11/json4s-core_2.11-3.2.11.jar:/Users/sunxiaoju/.m2/repository/org/json4s/json4s-ast_2.11/3.2.11/json4s-ast_2.11-3.2.11.jar:/Users/sunxiaoju/.m2/repository/org/scala-lang/scalap/2.11.0/scalap-2.11.0.jar:/Users/sunxiaoju/.m2/repository/org/scala-lang/scala-compiler/2.11.0/scala-compiler-2.11.0.jar:/Users/sunxiaoju/.m2/repository/org/scala-lang/modules/scala-xml_2.11/1.0.1/scala-xml
_2.11-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/scala-lang/modules/scala-parser-combinators_2.11/1.0.1/scala-parser-combinators_2.11-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/core/jersey-client/2.22.2/jersey-client-2.22.2.jar:/Users/sunxiaoju/.m2/repository/javax/ws/rs/javax.ws.rs-api/2.0.1/javax.ws.rs-api-2.0.1.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/hk2/hk2-api/2.4.0-b34/hk2-api-2.4.0-b34.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/hk2/hk2-utils/2.4.0-b34/hk2-utils-2.4.0-b34.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/hk2/external/aopalliance-repackaged/2.4.0-b34/aopalliance-repackaged-2.4.0-b34.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/hk2/external/javax.inject/2.4.0-b34/javax.inject-2.4.0-b34.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/hk2/hk2-locator/2.4.0-b34/hk2-locator-2.4.0-b34.jar:/Users/sunxiaoju/.m2/repository/org/javassist/javassist/3.18.1-GA/javassist-3.18.1-GA.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/core/jersey-common/2.22.2/jersey-common-2.22.2.jar:/Users/sunxiaoju/.m2/repository/javax/annotation/javax.annotation-api/1.2/javax.annotation-api-1.2.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/bundles/repackaged/jersey-guava/2.22.2/jersey-guava-2.22.2.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/hk2/osgi-resource-locator/1.0.1/osgi-resource-locator-1.0.1.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/core/jersey-server/2.22.2/jersey-server-2.22.2.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/media/jersey-media-jaxb/2.22.2/jersey-media-jaxb-2.22.2.jar:/Users/sunxiaoju/.m2/repository/javax/validation/validation-api/1.1.0.Final/validation-api-1.1.0.Final.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/containers/jersey-container-servlet/2.22.2/jersey-container-servlet-2.22.2.jar:/Users/sunxiaoju/.m2/repository/org/glassfish/jersey/containers/jersey-container-servlet-core/2.22.2/jersey-container-servlet-core-2.22.2.jar:/Users/sunxiaoju
/.m2/repository/io/netty/netty-all/4.1.17.Final/netty-all-4.1.17.Final.jar:/Users/sunxiaoju/.m2/repository/io/netty/netty/3.9.9.Final/netty-3.9.9.Final.jar:/Users/sunxiaoju/.m2/repository/com/clearspring/analytics/stream/2.7.0/stream-2.7.0.jar:/Users/sunxiaoju/.m2/repository/io/dropwizard/metrics/metrics-core/3.1.5/metrics-core-3.1.5.jar:/Users/sunxiaoju/.m2/repository/io/dropwizard/metrics/metrics-jvm/3.1.5/metrics-jvm-3.1.5.jar:/Users/sunxiaoju/.m2/repository/io/dropwizard/metrics/metrics-json/3.1.5/metrics-json-3.1.5.jar:/Users/sunxiaoju/.m2/repository/io/dropwizard/metrics/metrics-graphite/3.1.5/metrics-graphite-3.1.5.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/jackson/core/jackson-databind/2.6.7.1/jackson-databind-2.6.7.1.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/jackson/module/jackson-module-scala_2.11/2.6.7.1/jackson-module-scala_2.11-2.6.7.1.jar:/Users/sunxiaoju/.m2/repository/org/scala-lang/scala-reflect/2.11.8/scala-reflect-2.11.8.jar:/Users/sunxiaoju/.m2/repository/com/fasterxml/jackson/module/jackson-module-paranamer/2.7.9/jackson-module-paranamer-2.7.9.jar:/Users/sunxiaoju/.m2/repository/org/apache/ivy/ivy/2.4.0/ivy-2.4.0.jar:/Users/sunxiaoju/.m2/repository/oro/oro/2.0.8/oro-2.0.8.jar:/Users/sunxiaoju/.m2/repository/net/razorvine/pyrolite/4.13/pyrolite-4.13.jar:/Users/sunxiaoju/.m2/repository/net/sf/py4j/py4j/0.10.7/py4j-0.10.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/spark/spark-tags_2.11/2.3.2/spark-tags_2.11-2.3.2.jar:/Users/sunxiaoju/.m2/repository/org/apache/commons/commons-crypto/1.0.0/commons-crypto-1.0.0.jar:/Users/sunxiaoju/.m2/repository/org/spark-project/spark/unused/1.0.0/unused-1.0.0.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-client/2.7.7/hadoop-client-2.7.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-common/2.7.7/hadoop-common-2.7.7.jar:/Users/sunxiaoju/.m2/repository/commons-cli/commons-cli/1.2/commons-cli-1.2.jar:/Users/sunxiaoju/.m2/repository/xmlenc/xmlenc/0.52/xmlenc-0.52.jar:/Use
rs/sunxiaoju/.m2/repository/commons-httpclient/commons-httpclient/3.1/commons-httpclient-3.1.jar:/Users/sunxiaoju/.m2/repository/commons-io/commons-io/2.4/commons-io-2.4.jar:/Users/sunxiaoju/.m2/repository/commons-collections/commons-collections/3.2.2/commons-collections-3.2.2.jar:/Users/sunxiaoju/.m2/repository/org/mortbay/jetty/jetty-sslengine/6.1.26/jetty-sslengine-6.1.26.jar:/Users/sunxiaoju/.m2/repository/javax/servlet/jsp/jsp-api/2.1/jsp-api-2.1.jar:/Users/sunxiaoju/.m2/repository/commons-logging/commons-logging/1.1.3/commons-logging-1.1.3.jar:/Users/sunxiaoju/.m2/repository/commons-lang/commons-lang/2.6/commons-lang-2.6.jar:/Users/sunxiaoju/.m2/repository/commons-configuration/commons-configuration/1.6/commons-configuration-1.6.jar:/Users/sunxiaoju/.m2/repository/commons-digester/commons-digester/1.8/commons-digester-1.8.jar:/Users/sunxiaoju/.m2/repository/commons-beanutils/commons-beanutils/1.7.0/commons-beanutils-1.7.0.jar:/Users/sunxiaoju/.m2/repository/commons-beanutils/commons-beanutils-core/1.8.0/commons-beanutils-core-1.8.0.jar:/Users/sunxiaoju/.m2/repository/com/google/protobuf/protobuf-java/2.5.0/protobuf-java-2.5.0.jar:/Users/sunxiaoju/.m2/repository/com/google/code/gson/gson/2.2.4/gson-2.2.4.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-auth/2.7.7/hadoop-auth-2.7.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/directory/server/apacheds-kerberos-codec/2.0.0-M15/apacheds-kerberos-codec-2.0.0-M15.jar:/Users/sunxiaoju/.m2/repository/org/apache/directory/server/apacheds-i18n/2.0.0-M15/apacheds-i18n-2.0.0-M15.jar:/Users/sunxiaoju/.m2/repository/org/apache/directory/api/api-asn1-api/1.0.0-M20/api-asn1-api-1.0.0-M20.jar:/Users/sunxiaoju/.m2/repository/org/apache/directory/api/api-util/1.0.0-M20/api-util-1.0.0-M20.jar:/Users/sunxiaoju/.m2/repository/org/apache/curator/curator-client/2.7.1/curator-client-2.7.1.jar:/Users/sunxiaoju/.m2/repository/org/apache/htrace/htrace-core/3.1.0-incubating/htrace-core-3.1.0-incubating.jar:/Users/sunxiaoju/.
m2/repository/org/apache/hadoop/hadoop-hdfs/2.7.7/hadoop-hdfs-2.7.7.jar:/Users/sunxiaoju/.m2/repository/org/mortbay/jetty/jetty-util/6.1.26/jetty-util-6.1.26.jar:/Users/sunxiaoju/.m2/repository/xerces/xercesImpl/2.9.1/xercesImpl-2.9.1.jar:/Users/sunxiaoju/.m2/repository/xml-apis/xml-apis/1.3.04/xml-apis-1.3.04.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-mapreduce-client-app/2.7.7/hadoop-mapreduce-client-app-2.7.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-mapreduce-client-common/2.7.7/hadoop-mapreduce-client-common-2.7.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-yarn-client/2.7.7/hadoop-yarn-client-2.7.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-yarn-server-common/2.7.7/hadoop-yarn-server-common-2.7.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-mapreduce-client-shuffle/2.7.7/hadoop-mapreduce-client-shuffle-2.7.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-yarn-api/2.7.7/hadoop-yarn-api-2.7.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-mapreduce-client-core/2.7.7/hadoop-mapreduce-client-core-2.7.7.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-yarn-common/2.7.7/hadoop-yarn-common-2.7.7.jar:/Users/sunxiaoju/.m2/repository/javax/xml/bind/jaxb-api/2.2.2/jaxb-api-2.2.2.jar:/Users/sunxiaoju/.m2/repository/javax/xml/stream/stax-api/1.0-2/stax-api-1.0-2.jar:/Users/sunxiaoju/.m2/repository/javax/servlet/servlet-api/2.5/servlet-api-2.5.jar:/Users/sunxiaoju/.m2/repository/com/sun/jersey/jersey-core/1.9/jersey-core-1.9.jar:/Users/sunxiaoju/.m2/repository/com/sun/jersey/jersey-client/1.9/jersey-client-1.9.jar:/Users/sunxiaoju/.m2/repository/org/codehaus/jackson/jackson-jaxrs/1.9.13/jackson-jaxrs-1.9.13.jar:/Users/sunxiaoju/.m2/repository/org/codehaus/jackson/jackson-xc/1.9.13/jackson-xc-1.9.13.jar:/Users/sunxiaoju/.m2/repository/org/apache/hadoop/hadoop-mapreduce-client-jobclient/2.7.7/hadoop-mapreduce-client-jobclient-2.7.7.jar:/Users/sunxiao
ju/.m2/repository/org/apache/hadoop/hadoop-annotations/2.7.7/hadoop-annotations-2.7.7.jar:/Users/sunxiaoju/.ivy2/cache/org.scala-lang/scala-reflect/jars/scala-reflect-2.11.12.jar:/Users/sunxiaoju/.ivy2/cache/org.scala-lang/scala-library/jars/scala-library-2.11.12.jar:/Users/sunxiaoju/.ivy2/cache/org.scala-lang/scala-reflect/srcs/scala-reflect-2.11.12-sources.jar:/Users/sunxiaoju/.ivy2/cache/org.scala-lang/scala-library/srcs/scala-library-2.11.12-sources.jar:/Applications/IntelliJ IDEA.app/Contents/lib/idea_rt.jar" com.sunxj.sparktest.WordCount

Connected to the target VM, address: '127.0.0.1:50386', transport: 'socket'

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

19/01/12 21:04:01 INFO SparkContext: Running Spark version 2.3.2

19/01/12 21:04:02 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

19/01/12 21:04:03 INFO SparkContext: Submitted application: Spark 学习

19/01/12 21:04:03 INFO SecurityManager: Changing view acls to: sunxiaoju

19/01/12 21:04:03 INFO SecurityManager: Changing modify acls to: sunxiaoju

19/01/12 21:04:03 INFO SecurityManager: Changing view acls groups to:

19/01/12 21:04:03 INFO SecurityManager: Changing modify acls groups to:

19/01/12 21:04:03 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(sunxiaoju); groups with view permissions: Set(); users with modify permissions: Set(sunxiaoju); groups with modify permissions: Set()

19/01/12 21:04:04 INFO Utils: Successfully started service 'sparkDriver' on port 50391.

19/01/12 21:04:05 INFO SparkEnv: Registering MapOutputTracker

19/01/12 21:04:05 INFO SparkEnv: Registering BlockManagerMaster

19/01/12 21:04:05 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

19/01/12 21:04:05 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

19/01/12 21:04:05 INFO DiskBlockManager: Created local directory at /private/var/folders/7m/ls3n9dj958g25ktsw8d9cym80000gn/T/blockmgr-48e50794-9702-4c62-96ac-2cbf1df3a320

19/01/12 21:04:05 INFO MemoryStore: MemoryStore started with capacity 912.3 MB

19/01/12 21:04:05 INFO SparkEnv: Registering OutputCommitCoordinator

19/01/12 21:04:06 WARN Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

19/01/12 21:04:06 INFO Utils: Successfully started service 'SparkUI' on port 4041.

19/01/12 21:04:06 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://192.168.0.104:4041

19/01/12 21:04:07 INFO StandaloneAppClient$ClientEndpoint: Connecting to master spark://sunxj-mac-air.local:7077...

19/01/12 21:04:07 INFO TransportClientFactory: Successfully created connection to sunxj-mac-air.local/192.168.0.104:7077 after 104 ms (0 ms spent in bootstraps)

19/01/12 21:04:07 INFO StandaloneSchedulerBackend: Connected to Spark cluster with app ID app-20190112210407-0000

19/01/12 21:04:07 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 50394.

19/01/12 21:04:07 INFO NettyBlockTransferService: Server created on 192.168.0.104:50394

19/01/12 21:04:07 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

19/01/12 21:04:07 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/0 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:07 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/0 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:08 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 192.168.0.104, 50394, None)

19/01/12 21:04:08 INFO BlockManagerMasterEndpoint: Registering block manager 192.168.0.104:50394 with 912.3 MB RAM, BlockManagerId(driver, 192.168.0.104, 50394, None)

19/01/12 21:04:08 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 192.168.0.104, 50394, None)

19/01/12 21:04:08 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, 192.168.0.104, 50394, None)

19/01/12 21:04:08 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/0 is now RUNNING

19/01/12 21:04:09 INFO StandaloneSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

19/01/12 21:04:11 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 250.2 KB, free 912.1 MB)

19/01/12 21:04:11 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 23.7 KB, free 912.0 MB)

19/01/12 21:04:11 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.0.104:50394 (size: 23.7 KB, free: 912.3 MB)

19/01/12 21:04:11 INFO SparkContext: Created broadcast 0 from textFile at WordCount.scala:16

19/01/12 21:04:12 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/0 is now EXITED (Command exited with code 1)

19/01/12 21:04:12 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/0 removed: Command exited with code 1

19/01/12 21:04:12 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/1 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:12 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/1 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:12 INFO BlockManagerMaster: Removal of executor 0 requested

19/01/12 21:04:12 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/1 is now RUNNING

19/01/12 21:04:12 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 0

19/01/12 21:04:13 INFO BlockManagerMasterEndpoint: Trying to remove executor 0 from BlockManagerMaster.

19/01/12 21:04:15 INFO FileInputFormat: Total input paths to process : 1

19/01/12 21:04:15 INFO SparkContext: Starting job: collect at WordCount.scala:18

19/01/12 21:04:16 INFO DAGScheduler: Registering RDD 3 (map at WordCount.scala:17)

19/01/12 21:04:16 INFO DAGScheduler: Got job 0 (collect at WordCount.scala:18) with 2 output partitions

19/01/12 21:04:16 INFO DAGScheduler: Final stage: ResultStage 1 (collect at WordCount.scala:18)

19/01/12 21:04:16 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 0)

19/01/12 21:04:16 INFO DAGScheduler: Missing parents: List(ShuffleMapStage 0)

19/01/12 21:04:16 INFO DAGScheduler: Submitting ShuffleMapStage 0 (MapPartitionsRDD[3] at map at WordCount.scala:17), which has no missing parents

19/01/12 21:04:16 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 4.9 KB, free 912.0 MB)

19/01/12 21:04:16 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 2.9 KB, free 912.0 MB)

19/01/12 21:04:16 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on 192.168.0.104:50394 (size: 2.9 KB, free: 912.3 MB)

19/01/12 21:04:16 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1039

19/01/12 21:04:16 INFO DAGScheduler: Submitting 2 missing tasks from ShuffleMapStage 0 (MapPartitionsRDD[3] at map at WordCount.scala:17) (first 15 tasks are for partitions Vector(0, 1))

19/01/12 21:04:16 INFO TaskSchedulerImpl: Adding task set 0.0 with 2 tasks

19/01/12 21:04:16 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/1 is now EXITED (Command exited with code 1)

19/01/12 21:04:16 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/1 removed: Command exited with code 1

19/01/12 21:04:16 INFO BlockManagerMaster: Removal of executor 1 requested

19/01/12 21:04:16 INFO BlockManagerMasterEndpoint: Trying to remove executor 1 from BlockManagerMaster.

19/01/12 21:04:16 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 1

19/01/12 21:04:16 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/2 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:16 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/2 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:16 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/2 is now RUNNING

19/01/12 21:04:19 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/2 is now EXITED (Command exited with code 1)

19/01/12 21:04:19 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/2 removed: Command exited with code 1

19/01/12 21:04:19 INFO BlockManagerMaster: Removal of executor 2 requested

19/01/12 21:04:19 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 2

19/01/12 21:04:19 INFO BlockManagerMasterEndpoint: Trying to remove executor 2 from BlockManagerMaster.

19/01/12 21:04:19 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/3 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:19 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/3 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:19 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/3 is now RUNNING

19/01/12 21:04:22 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/3 is now EXITED (Command exited with code 1)

19/01/12 21:04:22 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/3 removed: Command exited with code 1

19/01/12 21:04:22 INFO BlockManagerMaster: Removal of executor 3 requested

19/01/12 21:04:22 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 3

19/01/12 21:04:22 INFO BlockManagerMasterEndpoint: Trying to remove executor 3 from BlockManagerMaster.

19/01/12 21:04:22 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/4 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:22 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/4 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:22 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/4 is now RUNNING

19/01/12 21:04:26 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/4 is now EXITED (Command exited with code 1)

19/01/12 21:04:26 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/4 removed: Command exited with code 1

19/01/12 21:04:26 INFO BlockManagerMaster: Removal of executor 4 requested

19/01/12 21:04:26 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 4

19/01/12 21:04:26 INFO BlockManagerMasterEndpoint: Trying to remove executor 4 from BlockManagerMaster.

19/01/12 21:04:26 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/5 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:26 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/5 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:26 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/5 is now RUNNING

19/01/12 21:04:30 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/5 is now EXITED (Command exited with code 1)

19/01/12 21:04:30 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/5 removed: Command exited with code 1

19/01/12 21:04:30 INFO BlockManagerMaster: Removal of executor 5 requested

19/01/12 21:04:30 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 5

19/01/12 21:04:30 INFO BlockManagerMasterEndpoint: Trying to remove executor 5 from BlockManagerMaster.

19/01/12 21:04:30 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/6 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:30 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/6 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:30 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/6 is now RUNNING

19/01/12 21:04:31 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

19/01/12 21:04:33 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/6 is now EXITED (Command exited with code 1)

19/01/12 21:04:33 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/6 removed: Command exited with code 1

19/01/12 21:04:33 INFO BlockManagerMaster: Removal of executor 6 requested

19/01/12 21:04:33 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 6

19/01/12 21:04:33 INFO BlockManagerMasterEndpoint: Trying to remove executor 6 from BlockManagerMaster.

19/01/12 21:04:33 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/7 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:33 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/7 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:33 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/7 is now RUNNING

19/01/12 21:04:36 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/7 is now EXITED (Command exited with code 1)

19/01/12 21:04:36 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/7 removed: Command exited with code 1

19/01/12 21:04:36 INFO BlockManagerMaster: Removal of executor 7 requested

19/01/12 21:04:36 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 7

19/01/12 21:04:36 INFO BlockManagerMasterEndpoint: Trying to remove executor 7 from BlockManagerMaster.

19/01/12 21:04:36 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/8 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:36 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/8 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:36 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/8 is now RUNNING

19/01/12 21:04:39 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/8 is now EXITED (Command exited with code 1)

19/01/12 21:04:39 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/8 removed: Command exited with code 1

19/01/12 21:04:39 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/9 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:39 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/9 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:39 INFO BlockManagerMasterEndpoint: Trying to remove executor 8 from BlockManagerMaster.

19/01/12 21:04:39 INFO BlockManagerMaster: Removal of executor 8 requested

19/01/12 21:04:39 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 8

19/01/12 21:04:39 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/9 is now RUNNING

19/01/12 21:04:42 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/9 is now EXITED (Command exited with code 1)

19/01/12 21:04:42 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/9 removed: Command exited with code 1

19/01/12 21:04:42 INFO BlockManagerMaster: Removal of executor 9 requested

19/01/12 21:04:42 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 9

19/01/12 21:04:42 INFO BlockManagerMasterEndpoint: Trying to remove executor 9 from BlockManagerMaster.

19/01/12 21:04:42 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/10 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:42 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/10 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:42 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/10 is now RUNNING

19/01/12 21:04:45 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/10 is now EXITED (Command exited with code 1)

19/01/12 21:04:45 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/10 removed: Command exited with code 1

19/01/12 21:04:45 INFO BlockManagerMaster: Removal of executor 10 requested

19/01/12 21:04:45 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 10

19/01/12 21:04:45 INFO BlockManagerMasterEndpoint: Trying to remove executor 10 from BlockManagerMaster.

19/01/12 21:04:45 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/11 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:45 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/11 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:45 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/11 is now RUNNING

19/01/12 21:04:46 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

19/01/12 21:04:48 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/11 is now EXITED (Command exited with code 1)

19/01/12 21:04:48 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/11 removed: Command exited with code 1

19/01/12 21:04:48 INFO BlockManagerMasterEndpoint: Trying to remove executor 11 from BlockManagerMaster.

19/01/12 21:04:48 INFO BlockManagerMaster: Removal of executor 11 requested

19/01/12 21:04:48 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 11

19/01/12 21:04:48 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/12 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:48 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/12 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:48 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/12 is now RUNNING

19/01/12 21:04:51 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/12 is now EXITED (Command exited with code 1)

19/01/12 21:04:51 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/12 removed: Command exited with code 1

19/01/12 21:04:51 INFO BlockManagerMaster: Removal of executor 12 requested

19/01/12 21:04:51 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 12

19/01/12 21:04:51 INFO BlockManagerMasterEndpoint: Trying to remove executor 12 from BlockManagerMaster.

19/01/12 21:04:51 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/13 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:51 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/13 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:51 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/13 is now RUNNING

19/01/12 21:04:53 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/13 is now EXITED (Command exited with code 1)

19/01/12 21:04:53 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/13 removed: Command exited with code 1

19/01/12 21:04:53 INFO BlockManagerMaster: Removal of executor 13 requested

19/01/12 21:04:53 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 13

19/01/12 21:04:53 INFO BlockManagerMasterEndpoint: Trying to remove executor 13 from BlockManagerMaster.

19/01/12 21:04:53 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/14 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)

19/01/12 21:04:53 INFO StandaloneSchedulerBackend: Granted executor ID app-20190112210407-0000/14 on hostPort 192.168.0.104:49749 with 1 core(s), 500.0 MB RAM

19/01/12 21:04:53 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/14 is now RUNNING

19/01/12 21:04:57 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190112210407-0000/14 is now EXITED (Command exited with code 1)

19/01/12 21:04:57 INFO StandaloneSchedulerBackend: Executor app-20190112210407-0000/14 removed: Command exited with code 1

19/01/12 21:04:57 INFO BlockManagerMaster: Removal of executor 14 requested

19/01/12 21:04:57 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 14

19/01/12 21:04:57 INFO BlockManagerMasterEndpoint: Trying to remove executor 14 from BlockManagerMaster.

19/01/12 21:04:57 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190112210407-0000/15 on worker-20190112203002-192.168.0.104-49749 (192.168.0.104:49749) with 1 core(s)