Obstacle Extraction Based on SGBM Disparity Maps from a Stereo Camera

Extracting obstacles from images captured by a stereo camera involves four main steps:

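1. Stereo camera calibration

2. Disparity map computation

3. Removal of irrelevant, interfering regions

4. Extraction of obstacle attributes such as contour, centroid, and size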

I. Camera Calibration

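There are many camera calibration methods, such as optical-flow-based self-calibration and checkerboard calibration (including Zhang Zhengyou's method); details are easy to find online. The result is a set of camera parameters: each camera's intrinsic matrix and distortion coefficients, plus the rotation and translation between the two cameras.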
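The calibration code below stores the rotation between the two cameras as a rotation vector `rec` and declares a rotation matrix `R`; in OpenCV that conversion is done by `cv::Rodrigues(rec, R)`. As a dependency-free sketch of what the conversion computes (Rodrigues' formula), here is a version using plain `std::array` types instead of `cv::Mat`; the type aliases and the function name `rodrigues` are hypothetical, not part of the original program.

```cpp
#include <array>
#include <cmath>

// Hypothetical plain-C++ types standing in for cv::Mat
using Vec3r = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Rodrigues' formula: rotation vector r (unit axis k times angle theta, in radians)
// to rotation matrix R = cos(theta) I + (1 - cos(theta)) k k^T + sin(theta) [k]x
Mat3 rodrigues(const Vec3r& r)
{
    const double theta = std::sqrt(r[0] * r[0] + r[1] * r[1] + r[2] * r[2]);
    Mat3 R{};
    if (theta < 1e-12) {  // (near-)zero rotation: identity matrix
        for (int i = 0; i < 3; ++i) R[i][i] = 1.0;
        return R;
    }
    const double k[3] = { r[0] / theta, r[1] / theta, r[2] / theta };  // unit rotation axis
    const double c = std::cos(theta), s = std::sin(theta);
    // skew-symmetric matrix [k]x, so that [k]x * v equals k cross v
    const double K[3][3] = { { 0.0, -k[2], k[1] },
                             { k[2], 0.0, -k[0] },
                             { -k[1], k[0], 0.0 } };
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            R[i][j] = (i == j ? c : 0.0) + (1.0 - c) * k[i] * k[j] + s * K[i][j];
    return R;
}
```

For the `rec` values below, the rotation angle is only about 0.0125 rad (roughly 0.72°), so `R` is close to the identity, as expected for two nearly parallel cameras.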

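```cpp
#include <opencv2/opencv.hpp>
#include <algorithm>
#include <iostream>
#include <vector>
using namespace cv;
using namespace std;

/*
  Pre-calibrated camera parameters.
  Intrinsic matrix layout:
      fx  0  cx
       0 fy  cy
       0  0   1
*/
// Before MATLAB optimization
Mat cameraMatrixL = (Mat_<double>(3, 3) << 1440.07133, 0, 954.95752,
                                            0, 1440.87527, 537.00282,
                                            0, 0, 1);
// Distortion coefficients (k1, k2, p1, p2, k3)
Mat distCoeffL = (Mat_<double>(5, 1) << 0.00691, -0.00898, 0.00032, 0.00235, 0);

Mat cameraMatrixR = (Mat_<double>(3, 3) << 1435.24677, 0, 1011.06019,
                                            0, 1435.19970, 545.11155,
                                            0, 0, 1);
Mat distCoeffR = (Mat_<double>(5, 1) << 0.01228, -0.02151, 0.00005, 0.00199, 0);

Mat T = (Mat_<double>(3, 1) << -59.33860, -0.18628, -0.62481);  // T: translation vector (mm)
Mat rec = (Mat_<double>(3, 1) << 0.00484, -0.01154, 0.00018);   // rec: rotation vector
Mat R;  // R: rotation matrix, obtained from rec with Rodrigues(rec, R)
```

II. Disparity Map Computation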

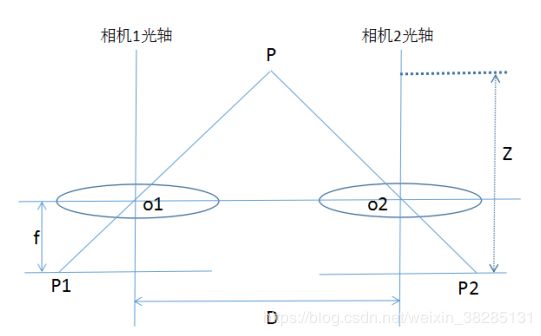

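In the figure above, two cameras with parallel optical axes observe a point in the scene. O1 and O2 are the optical centers of the two cameras, and the distance between them (the baseline) is D. Z is the depth of the scene point, i.e. its distance from the cameras measured along the optical axis, and f is the focal length of both cameras. P1 and P2 are the corresponding pixels of the point in the two images, with sensor coordinates (x1, y1) and (x2, y2) measured from each image's center, where y1 = y2. The segment P1P2 lies at distance Z − f from the scene point and has length D − (x1 − x2), so by similar triangles:

$$\frac{D - (x_1 - x_2)}{Z - f} = \frac{D}{Z} \quad\Longrightarrow\quad Z = \frac{f\,D}{x_1 - x_2}$$

where x1 − x2 is the disparity.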
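As a quick numeric check of Z = fD/(x1 − x2), here is a minimal sketch; the function name `depthFromDisparity` is hypothetical. Taking the left camera's fx ≈ 1440.07 px from the calibration above as f (the rectified focal length will differ slightly) and |Tx| ≈ 59.34 mm as the baseline D, a disparity of 100 px gives Z ≈ 854.5 mm.

```cpp
#include <stdexcept>

// Depth of a point from its disparity for a rectified, parallel stereo pair: Z = f * D / d.
// f: focal length in pixels, D: baseline (any length unit), d: disparity in pixels.
// The returned depth has the same unit as D.
double depthFromDisparity(double f, double D, double d)
{
    if (d <= 0.0)
        throw std::invalid_argument("disparity must be positive");
    return f * D / d;
}
```

Because depth is inversely proportional to disparity, depth resolution degrades quickly with distance. The search range also bounds the nearest measurable depth: with NumDisparities = 416 as in the SGBM code below, the largest disparity searched is 415 px, i.e. roughly 1440 × 59.34 / 415 ≈ 206 mm.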

This article uses OpenCV's SGBM (semi-global block matching) algorithm to compute the disparity map. Part of the code is shown below:

void stereo_match(Ptr sgbm,Mat rectifyImageL,Mat rectifyImageR,Mat &disp8,Mat &xyz)

{

sgbm->setPreFilterCap(63);

int sgbmWinSize = 5;//根据实际情况自己设定

int NumDisparities = 416;//根据实际情况自己设定

int UniquenessRatio = 6;//根据实际情况自己设定

sgbm->setBlockSize(sgbmWinSize);

int cn = rectifyImageL.channels();

sgbm->setP1(8 * cn*sgbmWinSize*sgbmWinSize);

sgbm->setP2(32 * cn*sgbmWinSize*sgbmWinSize);

sgbm->setMinDisparity(0);

sgbm->setNumDisparities(NumDisparities);

sgbm->setUniquenessRatio(UniquenessRatio);

sgbm->setSpeckleWindowSize(100);

sgbm->setSpeckleRange(10);

sgbm->setDisp12MaxDiff(1);

sgbm->setMode(StereoSGBM::MODE_SGBM);

Mat disp,dispf;

sgbm->compute(rectifyImageL, rectifyImageR, disp);

//去黑边

Mat img1p, img2p;

copyMakeBorder(rectifyImageL, img1p, 0, 0, NumDisparities, 0, IPL_BORDER_REPLICATE);

copyMakeBorder(rectifyImageR, img2p, 0, 0, NumDisparities, 0, IPL_BORDER_REPLICATE);

dispf = disp.colRange(NumDisparities, img2p.cols - NumDisparities);

dispf.convertTo(disp8, CV_8U, 255 / (NumDisparities *16.));

imshow("disparity", disp8);

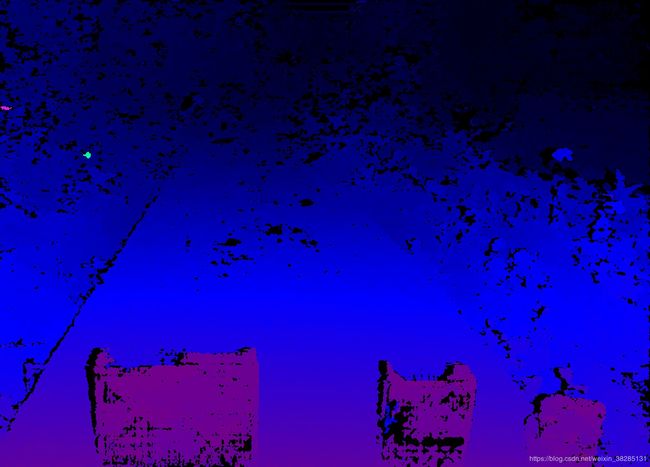

} 并且在程序中对视差图进行了上色,程序如下:

```cpp
/* Color-code the disparity map */
// src: 8-bit single-channel disparity map; disp: output 3-channel (BGR) color disparity map
void GenerateFalseMap(cv::Mat &src, cv::Mat &disp)
{
    CV_Assert(src.type() == CV_8UC1);
    disp.create(src.size(), CV_8UC3);  // allocate the output if needed

    // color map: each row is {r, g, b, relative length of the segment starting at this color}
    float max_val = 255.0f;
    float map[8][4] = { { 0,0,0,114 },{ 0,0,1,185 },{ 1,0,0,114 },{ 1,0,1,174 },
                        { 0,1,0,114 },{ 0,1,1,185 },{ 1,1,0,114 },{ 1,1,1,0 } };
    float sum = 0;
    for (int i = 0; i < 8; i++)
        sum += map[i][3];
    float weights[8]; // relative weights
    float cumsum[8];  // cumulative weights: start of each segment in [0, 1]
    cumsum[0] = 0;
    for (int i = 0; i < 7; i++) {
        weights[i] = sum / map[i][3];
        cumsum[i + 1] = cumsum[i] + map[i][3] / sum;
    }
    // for all pixels do
    for (int v = 0; v < src.rows; v++) {
        for (int u = 0; u < src.cols; u++) {
            // normalized value in [0, 1]
            float val = std::min(std::max(src.at<uchar>(v, u) / max_val, 0.0f), 1.0f);
            // find the segment; stop at 6 so that map[i + 1] stays in bounds even for val == 1
            int i;
            for (i = 0; i < 6; i++)
                if (val < cumsum[i + 1])
                    break;
            // linearly interpolate between the colors map[i] and map[i + 1]
            float w = 1.0f - (val - cumsum[i]) * weights[i];
            uchar r = (uchar)((w*map[i][0] + (1.0f - w)*map[i + 1][0]) * 255.0f);
            uchar g = (uchar)((w*map[i][1] + (1.0f - w)*map[i + 1][1]) * 255.0f);
            uchar b = (uchar)((w*map[i][2] + (1.0f - w)*map[i + 1][2]) * 255.0f);
            // OpenCV stores color pixels as B, G, R
            disp.at<cv::Vec3b>(v, u) = cv::Vec3b(b, g, r);
        }
    }
}
```

The resulting disparity map is shown below:

III. Removing Interfering Regions

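After the disparity map is computed, OpenCV's reprojectImageTo3D function converts it into 3D coordinates in a world coordinate system centered on the stereo camera. The resulting x, y, z values are in millimeters, because the output uses the same unit as the calibrated translation vector T.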
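The sketch below spells out what reprojectImageTo3D computes for a single pixel, using plain arrays instead of `cv::Mat` (the names `reprojectPixel`, `Mat4`, and `Vec3d` are hypothetical). It assumes the 4×4 matrix Q returned by stereoRectify, which for a horizontally rectified pair has the form Q = [[1, 0, 0, −cx], [0, 1, 0, −cy], [0, 0, 0, f], [0, 0, −1/Tx, (cx − cx')/Tx]]. One detail worth checking in practice: StereoSGBM returns CV_16S disparities with 4 fractional bits (the true disparity times 16), so they must be divided by 16 before reprojection; the sketch does this explicitly.

```cpp
#include <array>

using Mat4 = std::array<std::array<double, 4>, 4>;  // stand-in for the 4x4 Q matrix
using Vec3d = std::array<double, 3>;

// Per-pixel reprojection: [X Y Z W]^T = Q * [x y d 1]^T, then divide by W.
// rawSgbmDisparity is the CV_16S value from StereoSGBM (disparity * 16).
Vec3d reprojectPixel(const Mat4& Q, double x, double y, double rawSgbmDisparity)
{
    const double d = rawSgbmDisparity / 16.0;  // fixed point -> pixels
    const double in[4] = { x, y, d, 1.0 };
    double out[4] = { 0.0, 0.0, 0.0, 0.0 };
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            out[r] += Q[r][c] * in[c];
    return { out[0] / out[3], out[1] / out[3], out[2] / out[3] };
}
```

For example, with cx = cx' = 960, cy = 540, f = 1440 px, and Tx = −60 mm, the pixel (1060, 540) with raw value 2304 (disparity 144 px) maps to Z = 1440 · 60 / 144 = 600 mm and X = 100 · 60 / 144 ≈ 41.7 mm, consistent with Z = fD/d.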

1. Removing the scene outside the travel path

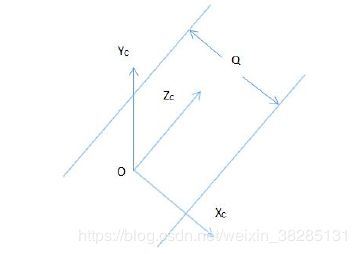

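Build the camera coordinate system at the left camera's optical center and use it as the world coordinate system: the x axis is horizontal and runs across the travel path, the y axis is perpendicular to the road surface, and the z axis points in the direction of travel (see the figure above). Assume the camera is mounted at the middle of the road, the road width is Q, and the stereo baseline is T. Because the origin is the left optical center, the center of the camera rig lies at x = T/2, so with x pointing right the travel path covers the X range [-(Q/2 - T/2), Q/2 + T/2].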
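A minimal sketch of this lateral test for one reprojected point follows; `onTravelPath` is a hypothetical name. It assumes the frame described in the text (origin at the left optical center, X pointing right as in OpenCV, rig centered on a road of width Q with baseline T, all in mm). The rig center then sits at X = T/2, so a point is on the path when |X − T/2| ≤ Q/2.

```cpp
#include <cmath>

// Returns true if a 3D point with lateral coordinate X (mm) lies over the road.
// Assumed frame: origin at the left optical center, X pointing right,
// stereo rig centered on a road of width Q, baseline T (all in mm).
bool onTravelPath(double X, double Q, double T)
{
    return std::fabs(X - T / 2.0) <= Q / 2.0;
}
```

Writing the test relative to the rig center keeps it symmetric about the road's middle, so the same function works for any baseline.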

2. Ground removal

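Let H be the height of the optical center above the ground, and let (x, y, z) be the 3D coordinates of a point p in space. With the y axis perpendicular to the road and pointing downward (as in OpenCV's camera coordinate system), the height of p above the ground is:

$$h = H - y$$

Setting a threshold on h removes the ground itself together with any object lower than that threshold.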
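Here is a sketch of the ground filter applied to a whole reprojected image, producing a 0/255 mask like the binary images used in the next section. It works on a row-major `std::vector` of (X, Y, Z) points instead of a `cv::Mat`, and `groundMask`, `Vec3`, and the `maxValidZ` cutoff are hypothetical. It assumes Y points downward, the road is flat, and missing disparities were mapped to a very large Z (reprojectImageTo3D uses Z = 10000 when `handleMissingValues` is true).

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

using Vec3 = std::array<float, 3>;  // (X, Y, Z) in mm, as produced by reprojectImageTo3D

// Builds an 8-bit mask (255 = keep) from a row-major array of 3D points.
// A point is kept when it is valid (0 < Z < maxValidZ) and its height above
// the ground, h = H - Y, exceeds minHeight.
std::vector<std::uint8_t> groundMask(const std::vector<Vec3>& xyz, float H, float minHeight,
                                     float maxValidZ = 9999.0f)
{
    std::vector<std::uint8_t> mask(xyz.size(), 0);
    for (std::size_t i = 0; i < xyz.size(); ++i) {
        const float Y = xyz[i][1], Z = xyz[i][2];
        const float h = H - Y;  // height above the road surface
        if (Z > 0.0f && Z < maxValidZ && h > minHeight)
            mask[i] = 255;
    }
    return mask;
}
```

With the camera 1 m above the road (H = 1000) and minHeight = 100, a point at Y = 1000 is on the road and is dropped, while a point at Y = 500 (0.5 m above the road) is kept.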

Note: because my xyz coordinate conversion had some problems, the results were not satisfactory, so no figure is shown here.

IV. Extracting Contours, Height, and Other Attributes

These are common OpenCV operations that are easy to look up, so I will just post the code.

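```cpp
// Thresholding
// result1: input image produced by the earlier steps (its definition is not shown)
int threshValue = Otsu(result1);  // Otsu(): the author's helper, not shown; its value is not used below
Mat local;
threshold(result1, local, 20, 255, THRESH_BINARY);  // fixed threshold of 20
imshow("binary", local);
imwrite("thresholded.jpg", local);
```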
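The code above calls a helper `Otsu(result1)` whose body is not shown (and a fixed threshold of 20 is applied instead). As a sketch of what such a helper typically computes, here is Otsu's method on a 256-bin histogram, using a flat `std::vector<std::uint8_t>` instead of `cv::Mat`; `otsuThreshold` is a hypothetical name. OpenCV also has this built in for 8-bit single-channel input: `threshold(src, dst, 0, 255, THRESH_BINARY | THRESH_OTSU)` ignores the threshold argument, picks one with Otsu's method, and returns it.

```cpp
#include <array>
#include <cstdint>
#include <vector>

// Otsu's method: returns the threshold t that maximizes the between-class variance,
// with class 0 = {v <= t} and class 1 = {v > t}. This matches cv::threshold with
// THRESH_BINARY, which sets pixels with value > t to maxval.
int otsuThreshold(const std::vector<std::uint8_t>& pixels)
{
    std::array<double, 256> hist{};  // histogram of gray levels
    for (std::uint8_t v : pixels) hist[v] += 1.0;

    const double total = static_cast<double>(pixels.size());
    double sumAll = 0.0;  // sum of all gray levels
    for (int v = 0; v < 256; ++v) sumAll += v * hist[v];

    double w0 = 0.0, sum0 = 0.0, bestVar = -1.0;
    int bestT = 0;
    for (int t = 0; t < 256; ++t) {
        w0 += hist[t];  // pixel count in class 0
        sum0 += t * hist[t];
        const double w1 = total - w0;          // pixel count in class 1
        if (w0 == 0.0 || w1 == 0.0) continue;  // one class is empty: skip
        const double mu0 = sum0 / w0;
        const double mu1 = (sumAll - sum0) / w1;
        // between-class variance, up to the constant factor 1 / total^2
        const double between = w0 * w1 * (mu0 - mu1) * (mu0 - mu1);
        if (between > bestVar) { bestVar = between; bestT = t; }
    }
    return bestT;
}
```

For an image with half its pixels at 10 and half at 200, every t from 10 to 199 separates the two groups equally well; the strict comparison keeps the first one, t = 10.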

```cpp
// Compute contours and convex hulls
cout << "Computing convex hulls and contours....." << endl;
vector<vector<Point>> contours;
vector<Vec4i> hierarchy;
findContours(local, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point(0, 0));

// Compute the convex hull of each contour
vector<vector<Point>> hull(contours.size());
vector<vector<Point>> result;  // hulls of the contours that pass the area filter
for (size_t i = 0; i < contours.size(); i++)
{
    convexHull(contours[i], hull[i], false);
}

cout << "Drawing contours and hulls......" << endl;
// Draw each contour and its convex hull
Mat drawing = Mat::zeros(local.size(), CV_8UC3);
for (size_t i = 0; i < contours.size(); i++)
{
    if (contourArea(contours[i]) < 500)  // contours smaller than 500 px^2 are ignored
        continue;
    result.push_back(hull[i]);
    Scalar color = Scalar(0, 0, 255);
    drawContours(drawing, contours, (int)i, color, 1, 8, vector<Vec4i>(), 0, Point());
    drawContours(drawing, hull, (int)i, color, 1, 8, vector<Vec4i>(), 0, Point());
}
imshow("contours", drawing);
imwrite("contours.jpg", drawing);
```
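`convexHull` is used above as a black box. For intuition, here is a sketch of one standard convex hull algorithm, Andrew's monotone chain, which runs in O(n log n); it is not necessarily the algorithm OpenCV uses internally, and `Pt`, `cross`, and `convexHullMonotone` are hypothetical names.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

struct Pt { long long x, y; };  // hypothetical minimal point type (stand-in for cv::Point)

// z-component of (a - o) x (b - o); > 0 means o -> a -> b turns counter-clockwise (y up)
static long long cross(const Pt& o, const Pt& a, const Pt& b)
{
    return (a.x - o.x) * (b.y - o.y) - (a.y - o.y) * (b.x - o.x);
}

// Andrew's monotone chain: convex hull in counter-clockwise order (in a y-up frame),
// without repeating the first point; collinear points on the boundary are dropped.
std::vector<Pt> convexHullMonotone(std::vector<Pt> pts)
{
    std::sort(pts.begin(), pts.end(), [](const Pt& a, const Pt& b) {
        return a.x < b.x || (a.x == b.x && a.y < b.y);
    });
    pts.erase(std::unique(pts.begin(), pts.end(), [](const Pt& a, const Pt& b) {
        return a.x == b.x && a.y == b.y;
    }), pts.end());
    if (pts.size() < 3) return pts;

    std::vector<Pt> hull(2 * pts.size());
    std::size_t k = 0;
    for (std::size_t i = 0; i < pts.size(); ++i) {  // lower hull, left to right
        while (k >= 2 && cross(hull[k - 2], hull[k - 1], pts[i]) <= 0) --k;
        hull[k++] = pts[i];
    }
    for (std::size_t i = pts.size() - 1, t = k + 1; i-- > 0;) {  // upper hull, right to left
        while (k >= t && cross(hull[k - 2], hull[k - 1], pts[i]) <= 0) --k;
        hull[k++] = pts[i];
    }
    hull.resize(k - 1);  // the last point equals the first
    return hull;
}
```

In image coordinates, where y grows downward, the same vertex order appears clockwise on screen.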

```cpp
// Compute the position of each hull (i.e. the object's position)
cout << "Computing object positions....." << endl;
vector<Vec3f> Center;  // 3D centroid of each object
// With RETR_EXTERNAL every contour is top-level, so a plain loop visits all of them
// (and, unlike following hierarchy[i][0], does not fail when no contour was found).
for (size_t i = 0; i < contours.size(); i++)
{
    if (contourArea(contours[i]) < 500)  // same filter as above, so Center lines up with result
        continue;
    Moments moment = moments(contours[i], false);  // moments of the contour
    Point pt(0, 0);
    if (moment.m00 != 0)  // avoid division by zero
    {
        pt.x = cvRound(moment.m10 / moment.m00);  // centroid x
        pt.y = cvRound(moment.m01 / moment.m00);  // centroid y
    }
    // 3D coordinates at the centroid pixel
    Vec3f Point3v = xyz.at<Vec3f>(pt.y, pt.x);
    Center.push_back(Point3v);
}
```
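The centroid above is (m10/m00, m01/m00). For a polygonal contour these moments reduce to the shoelace formula, sketched below with a hypothetical point type `P2` and function name `polygonCentroid`. Here m00 is the area enclosed by the contour vertices (what `contourArea` also measures), which is slightly smaller than the blob's pixel count. Note also that the centroid of a non-convex shape can fall outside the shape, in which case the `xyz` lookup at that pixel may read background depth.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct P2 { double x, y; };  // hypothetical minimal point type

// Area and centroid of a simple closed polygon via the shoelace formula, i.e. the
// polygon moments m00 and (m10 / m00, m01 / m00). The result does not depend on
// vertex orientation. Returns false for a degenerate polygon (m00 == 0).
bool polygonCentroid(const std::vector<P2>& poly, double& area, P2& c)
{
    double a2 = 0.0, cx = 0.0, cy = 0.0;  // a2 = twice the signed area
    const std::size_t n = poly.size();
    for (std::size_t i = 0; i < n; ++i) {
        const P2& p = poly[i];
        const P2& q = poly[(i + 1) % n];  // wrap around to close the polygon
        const double cr = p.x * q.y - q.x * p.y;
        a2 += cr;
        cx += (p.x + q.x) * cr;
        cy += (p.y + q.y) * cr;
    }
    if (a2 == 0.0) return false;
    area = std::fabs(a2) / 2.0;
    c = { cx / (3.0 * a2), cy / (3.0 * a2) };
    return true;
}
```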

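```cpp
// Measure each object's height and width
// sortRuleY / sortRuleX are not shown in the original; assumed to sort by y and by x ascending
auto sortRuleY = [](const Point& a, const Point& b) { return a.y < b.y; };
auto sortRuleX = [](const Point& a, const Point& b) { return a.x < b.x; };
Point p1, p2;          // image positions of the two extreme points
float height, width;   // object height and width (mm)
Vec3f point1, point2;  // 3D coordinates of the two extreme points
vector<float> all_height;
vector<float> all_width;
for (size_t i = 0; i < result.size(); i++)
{
    vector<Point>& obj = result[i];  // hull of the i-th kept object
    sort(obj.begin(), obj.end(), sortRuleY);
    p1 = obj.front();  // top-most point
    p2 = obj.back();   // bottom-most point
    point1 = xyz.at<Vec3f>(p1.y, p1.x);
    point2 = xyz.at<Vec3f>(p2.y, p2.x);
    height = std::fabs(point1[1] - point2[1]);
    sort(obj.begin(), obj.end(), sortRuleX);
    p1 = obj.front();  // left-most point
    p2 = obj.back();   // right-most point
    point1 = xyz.at<Vec3f>(p1.y, p1.x);
    point2 = xyz.at<Vec3f>(p2.y, p2.x);
    width = std::fabs(point1[0] - point2[0]);
    all_height.push_back(height);
    all_width.push_back(width);
}
```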
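The loop above sorts each hull twice just to find its extreme points. A single pass with `std::minmax_element` per axis finds equivalent points in O(n) without reordering the hull; here is a sketch using hypothetical `Px` and `Extremes` types (stand-ins for `cv::Point`). When several points tie on the extreme coordinate, it may pick a different, equally extreme point than the sort does.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Px { int x, y; };                        // hypothetical stand-in for cv::Point
struct Extremes { Px top, bottom, left, right; };

// Top-most, bottom-most, left-most and right-most points of a non-empty hull.
// Image y grows downward, so "top" is the point with the smallest y.
Extremes hullExtremes(const std::vector<Px>& hull)
{
    assert(!hull.empty());
    auto byY = [](const Px& a, const Px& b) { return a.y < b.y; };
    auto byX = [](const Px& a, const Px& b) { return a.x < b.x; };
    const auto ys = std::minmax_element(hull.begin(), hull.end(), byY);
    const auto xs = std::minmax_element(hull.begin(), hull.end(), byX);
    return { *ys.first, *ys.second, *xs.first, *xs.second };
}
```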

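```cpp
// Print each object's position and size
if (all_height.size() == Center.size() && !all_height.empty())
{
    for (size_t i = 0; i < Center.size(); i++)
    {
        cout << "Obstacle position: " << Center[i] << "  obstacle height: " << all_height[i]
             << "  obstacle width: " << all_width[i] << endl;
    }
}
```

When reposting, please credit the source: reposted from https://blog.csdn.net/weixin_38285131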