Quickly Mastering LaTeX for Scientific Paper Writing

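I sat through a week-long LaTeX training course that taught very little; all I learned was that the software exists. It was only today, while handing in the final assignment, that I worked through it from start to finish. One hour is enough to cover all the basic operations (title, abstract, figures, tables, formulas). If the journal you are submitting to has specific requirements and you are a perfectionist, though, that is another matter.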

First, go to https://mirrors.tuna.tsinghua.edu.cn/ctex/legacy/2.9/ and download the CTeX suite; the 1.3 GB package is enough.


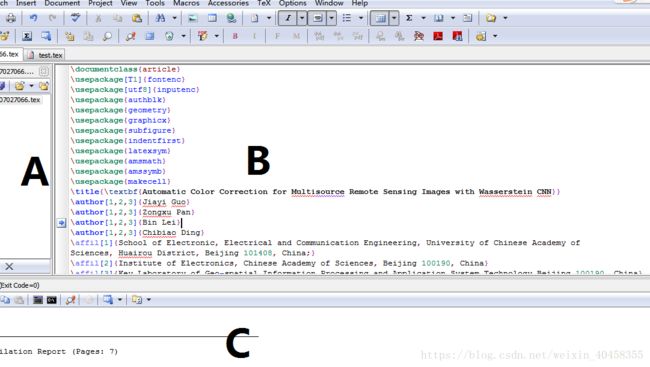

Next, open the paper you want to edit: go to Open - All Programs - CTeX - WinEdt, as shown in the screenshot.

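The interface resembles MATLAB. From here, download the template your paper requires and edit it in area B. If you just want to learn and write something yourself, you can use the code I give below. It saves a lot of time hunting for resources: without downloading any extra packages, paste it into area B, run it, and you will see the basic operations LaTeX offers for writing a paper. The only thing you need to change is the image name, which is 1.png here.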

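A few things I want to say:

1. The menu bar can be used directly, which saves typing a lot of code.

2. When you hit a bug, it is quite likely that you have not added the required \usepackage{##}.

3. Most errors already have solutions online. Do not ask classmates in the group chat unless you know them well or they are really good at this.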
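To illustrate tip 2, here is a minimal sketch of a typical missing-package failure. It is my own illustration, not part of the sample paper. Without the `graphicx` line, `\includegraphics` is not defined and compilation stops with `! Undefined control sequence.`; restoring the line fixes it. The sketch assumes you compile with pdfLaTeX and that an image named `1.png` sits next to the `.tex` file.

```latex
\documentclass{article}
% Comment out the next line to reproduce the error
%   ! Undefined control sequence.
% reported at the \includegraphics line.
\usepackage{graphicx}   % provides \includegraphics
\begin{document}
\includegraphics[scale=0.35]{1}  % file extension may be omitted; looks for 1.png here
\end{document}
```

When an error names a command you did not define yourself, searching for that command name usually reveals which package provides it.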

Also attached: a very complete reference of math symbol codes, http://www.mohu.org/info/symbols/symbols.htm
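As a quick reference, the snippet below sketches a few of the symbol commands that come up most often in a paper like the sample. All commands are standard LaTeX or `amsmath`/`amssymb`; the formulas themselves are arbitrary illustrations and carry no meaning.

```latex
\documentclass{article}
\usepackage{amsmath}
\usepackage{amssymb}
\begin{document}
% Subscripts and primes
Inline math: $u_{x,y}$, $I'$, $N_c$.

% Relations and arrows
$a \neq b$, $a \leq b$, $a \Rightarrow b$, $a \Leftrightarrow b$.

% Greek letters, sums and fractions in a numbered display equation
\begin{equation}
  \bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i, \qquad \alpha + \beta = \gamma
\end{equation}
\end{document}
```

Putting a whole relation inside one pair of `$...$` gives correct spacing around `=` and `>`, which typing the operators in text mode does not.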

\documentclass{article}

\usepackage[T1]{fontenc}

\usepackage[utf8]{inputenc}

\usepackage{authblk}

\usepackage{geometry}

\usepackage{graphicx}

\usepackage{subfigure}

\usepackage{indentfirst}

\usepackage{latexsym}

\usepackage{amsmath}

\usepackage{amssymb}

\usepackage{makecell}

\title{\textbf{Automatic Color Correction for Multisource Remote Sensing Images with Wasserstein CNN}}

\author[1,2,3]{Jiayi Guo}

\author[1,2,3]{Zongxu Pan}

\author[1,2,3]{Bin Lei}

\author[1,2,3]{Chibiao Ding}

\affil[1]{School of Electronic, Electrical and Communication Engineering, University of Chinese Academy of Sciences, Huairou District, Beijing 101408, China}

\affil[2]{Institute of Electronics, Chinese Academy of Sciences, Beijing 100190, China}

\affil[3]{Key Laboratory of Geo-spatial Information Processing and Application System Technology, Beijing 100190, China}

\renewcommand\Authands{ and }

\begin{document}

\maketitle

\begin{abstract}

In this paper, a non-parametric model based on Wasserstein CNN is proposed for color
correction. It is suitable for large-scale remote sensing image preprocessing from multiple sources
under various viewing conditions, including illumination variances, atmosphere disturbances,
and sensor and aspect angles. Color correction aims to alter the color palette of an input image
to a standard reference which does not suffer from the mentioned disturbances. Most current
methods depend heavily on the similarity between the inputs and the references, with respect to
both the contents and the conditions, such as illumination and atmosphere condition. Segmentation
is usually necessary to alleviate the color leakage effect on the edges. Different from the previous
studies, the proposed method matches the color distribution of the input dataset with the references
in a probabilistic optimal transportation framework. Multi-scale features are extracted from the
intermediate layers of the lightweight CNN model and are utilized to infer the undisturbed
distribution. The Wasserstein distance is utilized to calculate the cost function to measure the
discrepancy between two color distributions. The advantage of the method is that no registration
or segmentation processes are needed, benefiting from the local texture processing potential of the
CNN models. Experimental results demonstrate that the proposed method is effective when the
input and reference images are of different sources, resolutions, and under different illumination and
atmosphere conditions.

\noindent\textbf{Keywords}: remote sensing image correction; color matching; optimal transport; CNN

\end{abstract}

\section{Introduction}

Large-scale remote sensing content providers aggregate remote sensing imagery from different
platforms, providing a vast geographical coverage with a range of spatial and temporal resolutions.
One of the challenges is that the color correction task becomes more complicated due to the
wide difference in viewing angles, platform characteristics, and light and atmosphere conditions
(see Figure 1). For further processing purposes, it is often desired to perform color correction to the
images. Histogram matching [1,2] is a cheap way to address this when a reference image with no color
errors is available that shares the same coverage of land and reflectance distribution.

To gain a deeper insight, first we would like to place histogram matching in a broader context
as the simplest form of color matching [3]. These methods try to match the color distribution of the
input images to a reference, also known as color transferring. They can either work by matching
low order statistics [3--5] or by transferring the exact distribution [6--8]. Matching the low order
statistics is sensitive to the color space selected [9]. The performances of both methods are highly
related to the similarity between the contents of the input and the reference. Picking an appropriate
reference requires manual intervention and may become the bottleneck for processing. A drawback
of such methods is that the colors on the edges of the targets would be mixed up [10--12]. Methods
exploiting the spatial information were proposed to mitigate the problem, but segmentation, spatial
matching, and alignment are required [13,14]. Matching the exact distribution is not sensitive to the
color space selection, but has to work in an iterative fashion [8]. Both the segmentation and the iteration
increase the computation burden and are not suitable for online viewing and querying. For video
and stereo cases, extra information from the correlation between frames can be exploited to achieve
better color harmony [15,16]. The homography method is introduced into color transfer to eliminate
the artifacts [17]. Manifold learning is an interesting framework to find the similarity between the
pixels, so that the output color can be more natural and it can suppress the color leakage as well [18].
Another perspective to comprehend the problem is image-to-image translation. Convolutional neural
networks have proven to be successful for such applications [19], for example, the auto colorization
of grayscale images [20,21]. Recently, deep learning has shown its potential and power in hyper-spectral
image understanding applications [22].

\begin{figure}[h]

\centering

\includegraphics[scale=0.35]{1}

\caption{Color discrepancy in remote sensing images. (a,b) Digital Globe images on different dates
from Google Earth; (c,d) Digital Globe (bottom, right) and NASA (National Aeronautics and Space
Administration) images on the same date from Google Earth; (e) GF1 (Gaofen-1) images from different sensors, same area and date.}

\end{figure}

Unfortunately, for large-scale applications, it is too strict a requirement that the whole
reflectance distribution should be the same between the reference image and the ones to be processed.
As a result, such reference histograms are usually not available, which has greatly restricted the
applications of these sample-based color matching methods. In [23] the authors choose a color
correction plan that minimizes the color discrepancy between it and both the input image and the
reference image. This is a good solution in stitching applications. However, the purpose of this paper
is to eliminate the errors raised by atmosphere, light, etc., so that the result can be further employed
in ground reflectance retrieval or atmosphere parameters retrieval. We hope that the output is as
close as possible to the reference images, rather than modifying the ground truth values as in [23].
Since it is usually
infeasible to find such a reference, a natural question is, can we develop a universal function which
can automatically determine the references directly according to the input images? Once this function
is obtained, we can combine it with simple histogram matching or other color transfer methods into
a very powerful algorithm. In this paper, a Wasserstein CNN model is built to infer the reference
histograms for remote sensing image color correction applications. The model is completely data
driven, and no registration or segmentation is needed in either the training phase or the inferring
phase. Besides, as will be explained in Section 2, the input and the reference can be of different
scales and sources. In Section 2, the details of the proposed method are elaborated in an optimal
transporting framework [24,25]. In Section 3, the experiments are conducted to validate the feasibility
of the proposed method, in which images from the GF1 and GF2 satellites are used as the input and
the reference datasets respectively. Section 4 comprises the discussions and comparisons with other
color matching (correcting) methods. Finally, Section 5 gives the conclusion and points out our
future work.

\section{Materials and Methods}

\subsection{Analysis}

Given an input image $I$ and a reference image $I'$ with $N_c$ channels, an automatic color matching
algorithm aims to alter the color palette of $I$ to that of $I'$, the reference. Some algorithms
require that the reference image be known; these are called sample-based methods. Of course,
an ideal algorithm should work without knowing $I'$. The matching can be operated either in the
$N_c$-dimensional color space at once, or in each dimension separately [8,26]. The influence of the light
and the atmosphere conditions and other factors can be included into a function $h(I', x, y)$ that acts on
the grayscale value of the pixel located at $(x, y)$. Under such circumstances, the problem is converted
to learning an inverse transfer function $f(I, x, y)$ that maps the grayscale values of the input image $I$
back to those of the reference image $I'$, where $(x, y)$ denotes the location of the target pixel inside $I$.
When the input image is divided into patches that each possess a relatively small geographical
coverage, the spatial variance of the color discrepancy inside each patch is usually small enough to be
neglected. Thus $h(I', x, y)$ should be the same as $h(I', x', y')$ as long as $(x, y)$ and $(x', y')$ share the
same grayscale values. Let $u_{x,y}$ and $v_{x,y}$ be the grayscale values of the pixels located at $(x, y)$ in $I$ and $I'$
respectively; then $h(I', x, y)$ can be rewritten as $h(I', v_{x,y})$, because the color discrepancy function is not
related to the location of the pixel but only to its value. Three assumptions about the transformation
from the input images to the reference images are made as follows, and some properties which $f$
should satisfy can be derived from them.

\noindent\textbf{Assumption 1}: $v_{x,y} = v_{x',y'} \Rightarrow u_{x,y} = u_{x',y'}$

Assumption 1 suggests that when two pixels in $I'$ have the same grayscale value, so do the
corresponding pixels in $I$. This assumption is straightforward, since in general the cameras
are well calibrated and the inhomogeneity of light and atmosphere is usually small within a small
geographical coverage. When severe sensor errors occur this assumption may not hold,
but that is not the focus of this paper.

\noindent\textbf{Assumption 2}: $u_{x,y} = u_{x',y'} \Rightarrow v_{x,y} = v_{x',y'}$

Assumption 2 indicates that when two pixels in $I$ have the same grayscale value, so do their
corresponding pixels in $I'$. The assumption is based on the fact that the pixel value the sensor
recorded is not related to its context or location, but only to its raw physical intensity.

\noindent\textbf{Assumption 3}: $u_{x,y} > u_{x',y'} \Rightarrow v_{x,y} > v_{x',y'}$

Assumption 3 implies that the transformation is order preserving: a brighter pixel in $I$ should
also be brighter in $I'$, and vice versa.

According to the above assumptions, we expect the transfer function $f$ to possess the
following properties.

\noindent\textbf{Property 1}: $u_{x,y} = u_{x',y'} \Rightarrow f(I, u_{x,y}) = f(I, u_{x',y'})$

\noindent\textbf{Property 2}: $u_{x,y} > u_{x',y'} \Rightarrow f(I, u_{x,y}) > f(I, u_{x',y'})$

\noindent\textbf{Property 3}: $I_{1} \neq I_{2} \Rightarrow f(I_{1}, \cdot) \neq f(I_{2}, \cdot)$

Consider that even when two pixels inside $I_{1}$ and $I_{2}$ share the same grayscale values, the corrected
values can still be different according to their ground truth values in the references. Property 3 says
that $f$ should be content related. In other words, for different input images, the transfer function values
should be different to maintain the content consistency. To better explain the point, consider two
input images with different contents, say grassland and a lake, that happen to have similar
color distributions. The pixel in the lake should be darker and the pixel in the grassland should
be brighter in the corresponding reference images. If $f$ is only related to the grayscale values while
discarding the input images (the contexts of the pixels), this cannot be done because similar pixels in
different input images have to be mapped to similar output levels.

Another issue to take into account is whether the raw images or their histograms should be used
as the input and the reference for the matching. Table 1 lists all possible cases, each of which will
be discussed.

\begin{center}

\textbf{Table 1.} Different color matching schemes according to the input form and the reference form.

\end{center}

\begin{center}

\begin{tabular}{c|c|c} \Xhline{0.8pt}

Input & Reference & Scheme \\ \Xhline{0.6pt}

Histogram &Histogram &A \\

Image & Image & B \\

Image & Histogram & C \\

Histogram & Image & D \\ \Xhline{0.8pt}

\end{tabular}

\end{center}

Scheme A is the case when both the input and reference are histograms, and this is essentially
histogram matching. Many previous studies employ this scheme for simplicity, for example, histogram
matching and low order statistics matching in various color spaces. Since histograms do not contain
the content information, the corresponding histogram matching is not content related. Concretely
speaking, two pixels that belong to two regions with different contents but with the same grayscale fall
into the same bin of the histogram, and have to be assigned to the same grayscale value in the output
image, which does not meet Property 3. In order for one distribution with different contexts to be
correctly matched to different corresponding distributions, we cannot enclose different transformations
in one unified mapping (see Figure 2). This is not appropriate for large-scale datasets that
demand a high degree of automation.

Scheme B corresponds to the case where both the input and output are images, which is usually
referred to as image-to-image translation. The image certainly contains much more information than
its histogram, thus providing a possibility that the mapping is content related. Although Property 3
can be satisfied, this scheme emphasizes the content of the image, and the consequence is that
pixels with the same grayscale may be mapped to different grayscales as their contexts could be different,
and in this case Property 1 is violated (see Figure 3).

\subsection{Optimal Transporting Perspective of View}

\subsection{The Model Structure}

\subsection{Data Augmentation}

\subsection{Algorithm Flow Chart}

\section{Results}

\section{Discussion}

\section{Conclusion}

This paper presents a nonparametric color correcting scheme in a probabilistic optimal transport
framework, based on the Wasserstein CNN model. The multi-scale features are first extracted from
the intermediate layers, and then are used to infer the corrected color distribution which minimizes
the errors with respect to the ground truth. The experimental results demonstrate that the method is
able to handle images of different sources, resolutions, and illumination and atmosphere conditions.
With high efficiency in computing speed and memory consumption, the proposed method shows its
prospects for utilization in real-time processing of large-scale remote sensing datasets.

We are currently extending the global color matching algorithm to take the local inhomogeneity
of illumination into consideration, in order to enhance the precision. Local histogram matching of
each band could serve for reflectance retrieval and atmospheric parameter retrieval purposes, and the
preliminary results are encouraging.

\noindent\textbf{Acknowledgments}: This work was supported by the National Natural Science Foundation of China (Grant
No. 61331017).

\noindent\textbf{Author Contributions}: Jiayi Guo and Bin Lei conceived and designed the experiments; Jiayi Guo performed
the experiments; Jiayi Guo analyzed the data; Bin Lei and Chibiao Ding contributed materials and computing
resources; Jiayi Guo and Zongxu Pan wrote the paper.

\noindent\textbf{Conflicts of Interest}: The authors declare no conflict of interest.

\begin{thebibliography}{99}

\bibitem{ref1} Haichao, L.; Shengyong, H.; Qi, Z. Fast seamless mosaic algorithm for multiple remote sensing images. Infrared Laser Eng. 2011, 40, 1381--1386.

\bibitem{ref2} Rau, J.; Chen, N.-Y.; Chen, L.-C. True orthophoto generation of built-up areas using multi-view images. Photogramm. Eng. Remote Sens. 2002, 68, 581--588.

\bibitem{ref3} Reinhard, E.; Ashikhmin, M.; Gooch, B.; Shirley, P. Color transfer between images. IEEE Comput. Graph. Appl. 2001, 21, 34--41.

\bibitem{ref4} Abadpour, A.; Kasaei, S. A fast and efficient fuzzy color transfer method. In Proceedings of the IEEE Fourth International Symposium on Signal Processing and Information Technology, Rome, Italy, 18--21 December 2004; pp. 491--494.

\bibitem{ref5} Kotera, H. A scene-referred color transfer for pleasant imaging on display. In Proceedings of the IEEE International Conference on Image Processing, Genova, Italy, 14 November 2005.

\bibitem{ref6} Morovic, J.; Sun, P.-L. Accurate 3d image colour histogram transformation. Pattern Recognit. Lett. 2003, 24, 1725--1735.

\bibitem{ref7} Neumann, L.; Neumann, A. Color style transfer techniques using hue, lightness and saturation histogram matching. In Proceedings of the Computational Aesthetics in Graphics, Visualization and Imaging, Girona, Spain, 18--20 May 2005; pp. 111--122.

\bibitem{ref8} Pitié, F.; Kokaram, A.C.; Dahyot, R. N-dimensional probability density function transfer and its application to color transfer. In Proceedings of the Tenth IEEE International Conference on Computer Vision, Beijing, China, 17--21 October 2005; pp. 1434--1439.

\bibitem{ref9} Reinhard, E.; Pouli, T. Colour spaces for colour transfer. In Proceedings of the International Workshop on Computational Color Imaging, Milan, Italy, 20--21 April 2011; Springer: Berlin/Heidelberg, Germany; pp. 1--15.

\bibitem{ref10} An, X.; Pellacini, F. User-controllable color transfer. In Computer Graphics Forum; Wiley Online Library: Hoboken, NJ, USA, 2010; pp. 263--271.

\bibitem{ref11} Pouli, T.; Reinhard, E. Progressive color transfer for images of arbitrary dynamic range. Comput. Graph. 2011, 35, 67--80.

\bibitem{ref12} Tai, Y.-W.; Jia, J.; Tang, C.-K. Local color transfer via probabilistic segmentation by expectation-maximization. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20--26 June 2005; pp. 747--754.

\bibitem{ref13} HaCohen, Y.; Shechtman, E.; Goldman, D.B.; Lischinski, D. Non-rigid dense correspondence with applications for image enhancement. ACM Trans. Graph. 2011, 30, 70.

\bibitem{ref14} Kagarlitsky, S.; Moses, Y.; Hel-Or, Y. Piecewise-consistent color mappings of images acquired under various conditions. In Proceedings of the IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September--2 October 2009; pp. 2311--2318.

\bibitem{ref15} Bonneel, N.; Sunkavalli, K.; Paris, S.; Pfister, H. Example-based video color grading. ACM Trans. Graph. 2013, 32, 39:1--39:12.

\bibitem{ref16} Wang, Q.; Yan, P.; Yuan, Y.; Li, X. Robust color correction in stereo vision. In Proceedings of the 2011 18th IEEE International Conference on Image Processing, Brussels, Belgium, 11--14 September 2011; pp. 965--968.

\bibitem{ref17} Gong, H.; Finlayson, G.D.; Fisher, R.B. Recoding color transfer as a color homography. arXiv 2016, arXiv:1608.01505.

\bibitem{ref18} Liao, D.; Qian, Y.; Li, Z.-N. Semisupervised manifold learning for color transfer between multiview images. In Proceedings of the 2016 23rd International Conference on Pattern Recognition, Cancun, Mexico, 4--8 December 2016; pp. 259--264.

\bibitem{ref19} Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. arXiv 2016, arXiv:1611.07004.

\bibitem{ref20} Larsson, G.; Maire, M.; Shakhnarovich, G. Learning representations for automatic colorization. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8--16 October 2016; pp. 577--593.

\bibitem{ref21} Zhang, R.; Isola, P.; Efros, A.A. Colorful image colorization. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8--16 October 2016; pp. 649--666.

\bibitem{ref22} Wang, Q.; Lin, J.; Yuan, Y. Salient band selection for hyperspectral image classification via manifold ranking. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 1279--1289.

\bibitem{ref23} Vallet, B.; Lelégard, L. Partial iterates for symmetrizing non-parametric color correction. ISPRS J. Photogramm. Remote Sens. 2013, 82, 93--101.

\bibitem{ref24} Danila, B.; Yu, Y.; Marsh, J.A.; Bassler, K.E. Optimal transport on complex networks. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 2006, 74, 046106.

\bibitem{ref25} Villani, C. Optimal Transport: Old and New; Springer: Berlin, Germany, 2008.

\bibitem{ref26} Pitié, F.; Kokaram, A. The linear monge-kantorovitch linear colour mapping for example-based colour transfer. In Proceedings of the European Conference on Visual Media Production, London, UK, 27--28 November 2007.

\bibitem{ref27} Frogner, C.; Zhang, C.; Mobahi, H.; Araya, M.; Poggio, T.A. Learning with a wasserstein loss. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 2015; pp. 2053--2061.

\bibitem{ref28} Cuturi, M.; Avis, D. Ground metric learning. J. Mach. Learn. Res. 2014, 15, 533--564.

\bibitem{ref29} Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. Squeezenet: Alexnet-level accuracy with 50x fewer parameters and <0.5 mb model size. arXiv 2016, arXiv:1602.07360.

\bibitem{ref30} Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 2015; pp. 3431--3440.

\end{thebibliography}

\end{document}
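The sample above types its cross-references by hand (for example `Figure 1` and `[1,2]`) and contains no displayed equation. Here is a rough sketch of how these could be handled with `\label`, `\ref`, `\cite`, and the `equation` environment from `amsmath`. The label names `fig:discrepancy` and `eq:wasserstein`, the citation key `villani2008`, and the choice of the standard $p$-Wasserstein distance as the example formula are my own assumptions for illustration; none of them is taken from the sample paper.

```latex
\documentclass{article}
\usepackage{graphicx}
\usepackage{amsmath}
\begin{document}

Figure~\ref{fig:discrepancy} shows the color discrepancy, and
Equation~\eqref{eq:wasserstein} defines a distance used in optimal
transport~\cite{villani2008}.

\begin{figure}[h]
  \centering
  \includegraphics[scale=0.35]{1}  % same placeholder image, 1.png
  \caption{Color discrepancy in remote sensing images.}
  \label{fig:discrepancy}          % \label must come after \caption
\end{figure}

% Standard p-Wasserstein distance between distributions \mu and \nu;
% \Gamma(\mu,\nu) is the set of couplings with these two marginals.
\begin{equation}
  W_p(\mu, \nu) = \left( \inf_{\gamma \in \Gamma(\mu, \nu)}
    \int d(x, y)^p \, \mathrm{d}\gamma(x, y) \right)^{1/p}
  \label{eq:wasserstein}
\end{equation}

\begin{thebibliography}{9}
\bibitem{villani2008} Villani, C. Optimal Transport: Old and New; Springer: Berlin, Germany, 2008.
\end{thebibliography}

\end{document}
```

Compile twice so the numbers resolve. The benefit over hand-typed numbers is that figures, equations, and references can be reordered without editing the text.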