Oracle RAC High-Availability Cluster

Introduction to Oracle RAC clusters

Oracle RAC is mainly available for Oracle 9i, 10g, 11g and 12c.

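In an Oracle RAC environment, Oracle supplies integrated clusterware and storage management software, which lowers costs for users. When the application needs to grow, users can scale the system out on demand to maintain performance. Its main advantages: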

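1) Load balancing across multiple nodes;

2) High availability: fault tolerance and seamless failover, minimizing the impact of hardware and software failures;

3) Better transaction response times through parallel execution, typically used in data-analysis systems;

4) More transactions and connections per second through horizontal scaling, typically used in online transaction processing systems;

5) Lower hardware costs: several inexpensive PC servers can replace an expensive minicomputer or mainframe, with correspondingly lower maintenance costs;

6) Good scalability: nodes can easily be added or removed to expand hardware resources.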

Disadvantages:

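1) Compared with a single instance, administration is more complex and more demanding;

2) With poor system planning and design, performance can be even worse than a single node;

3) Software costs may increase (with high-spec PC servers, since Oracle usually licenses by the number of CPUs).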

Lab environment

Each node must have two network interface cards.

| OS | Public IP | Private IP | Hostname |
|---|---|---|---|
| CentOS 7.4 | 192.168.169.10 | 192.168.69.10 | rac1 |
| CentOS 7.4 | 192.168.169.20 | 192.168.69.20 | rac2 |
| CentOS 7.4 | 192.168.169.30 | (none) | iscsi-server |

I. Basic environment planning

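IP address plan. Edit the hosts file; it must be identical on all nodes.

| IP type | IP address | Hostname | Notes |
|---|---|---|---|
| public ip | 192.168.169.10 | rac1 | The IP of the node's physical NIC, used for normal network connections |
| public ip | 192.168.169.20 | rac2 | |
| priv ip | 192.168.69.10 | rac1-priv | Private-network IP. The private IPs of all nodes must be in the same subnet; used for heartbeat checks between the nodes |
| priv ip | 192.168.69.20 | rac2-priv | |
| vip | 192.168.169.111 | rac1-vip | Virtual IP. It must be in the same subnet as the physical (public) NIC and only needs to be written to the hosts file: the clusterware brings it up when its services start, and if the node goes down the VIP fails over to a surviving node |
| vip | 192.168.169.222 | rac2-vip | |
| scan ip | 192.168.169.63 | rac-scan | Introduced in Oracle 11g Release 2. The SCAN name is meant to be resolved by DNS or GNS, where it can map to up to three IPs for the whole cluster; the hosts file can only provide one. Oracle does not recommend this because it loses the benefits of SCAN, but in practice we often put it in the hosts file anyway |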

1. The hosts files on node 1 and node 2 must be identical

[root@rac1 ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#public ip

192.168.169.10 rac1

192.168.169.20 rac2

#priv ip

192.168.69.10 rac1-priv

192.168.69.20 rac2-priv

#vip

192.168.169.111 rac1-vip

192.168.169.222 rac2-vip

#scan

192.168.169.63 rac-scan
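Before moving on, the hosts file can be sanity-checked on each node. The sketch below is not part of the original procedure. It assumes the hostnames from the table above and that the public, VIP and SCAN addresses share one /24 network, as they do here.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check a RAC hosts file against this guide's naming plan.
# Assumption: public, VIP and SCAN addresses all live in one /24 network.

# Print the IPv4 address mapped to NAME in a hosts-format FILE (empty if absent).
host_ip() {
    awk -v n="$2" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }' "$1"
}

# Print the first three octets of an IPv4 address, i.e. its /24 prefix.
prefix24() {
    echo "${1%.*}"
}

# Return 0 if all required names resolve and VIP/SCAN share rac1's public /24.
check_rac_hosts() {
    local file=${1:-/etc/hosts} name ip pub_net rc=0
    for name in rac1 rac2 rac1-priv rac2-priv rac1-vip rac2-vip rac-scan; do
        if [ -z "$(host_ip "$file" "$name")" ]; then
            echo "missing: $name" >&2
            rc=1
        fi
    done
    pub_net=$(prefix24 "$(host_ip "$file" rac1)")
    for name in rac1-vip rac2-vip rac-scan; do
        ip=$(host_ip "$file" "$name")
        if [ -n "$ip" ] && [ "$(prefix24 "$ip")" != "$pub_net" ]; then
            echo "$name ($ip) is not in the public network $pub_net.0/24" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

Run `check_rac_hosts /etc/hosts` on rac1 and rac2. No output and exit status 0 mean the file matches the plan.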

2. Copy the edited file directly to node 2

[root@rac1 ~]# scp /etc/hosts root@192.168.169.20:/etc/hosts

The authenticity of host '192.168.169.20 (192.168.169.20)' can't be established.

ECDSA key fingerprint is SHA256:qshWKJ+cGX5OYoSmJA7YrwfvYaj9CuI5oG1UlRCmXsw.

ECDSA key fingerprint is MD5:27:a3:50:62:45:7e:6f:15:46:cf:26:96:ce:6f:cc:56.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.169.20' (ECDSA) to the list of known hosts.

root@192.168.169.20's password:

hosts 100% 411 16.8KB/s 00:00

3. Configure the yum repository and install the EPEL repository (same on both nodes)

[root@rac1 yum.repos.d]# curl -o CentOS7-Base-163.repo http://mirrors.163.com/.help/CentOS7-Base-163.repo

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 1572 100 1572 0 0 11116 0 --:--:-- --:--:-- --:--:-- 11148

[root@rac1 yum.repos.d]# sed -i 's/\$releasever/7/g' /etc/yum.repos.d/CentOS7-Base-163.repo

[root@rac1 yum.repos.d]# sed -i 's/^enabled=.*/enabled=1/g' /etc/yum.repos.d/CentOS7-Base-163.repo

[root@rac1 yum.repos.d]# yum install -y epel-release

4. Stop the firewall and disable SELinux (same on both nodes)

[root@rac1 ~]# systemctl stop firewalld

[root@rac1 ~]# systemctl disable firewalld

[root@rac1 ~]# setenforce 0

[root@rac1 ~]# sed -ri 's/^(SELINUX=).*/\1disabled/g' /etc/selinux/config
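`setenforce 0` only switches the running system to permissive mode. The sed edit is what keeps SELinux disabled after a reboot. Here is a small check of the config file, sketched on the assumption that it uses the standard `SELINUX=<mode>` line:

```shell
#!/usr/bin/env bash
# Sketch: read the boot-time SELinux mode from a config file.

# Print the value of the SELINUX= line (the last one, if there are several).
# SELINUXTYPE= lines do not match because "=" must follow "SELINUX".
selinux_config_mode() {
    sed -n 's/^SELINUX=\([a-z]*\).*/\1/p' "${1:-/etc/selinux/config}" | tail -n 1
}

# Return 0 when the config file sets SELINUX=disabled.
selinux_disabled_in_config() {
    [ "$(selinux_config_mode "$1")" = "disabled" ]
}
```

On a node, `selinux_disabled_in_config && getenforce` should succeed. Until the next reboot, `getenforce` still prints Permissive.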

5. Create the users and groups, and set the users' passwords (same on both nodes)

[root@rac1 ~]# groupadd oinstall

[root@rac1 ~]# groupadd dba

[root@rac1 ~]# groupadd oper

[root@rac1 ~]# groupadd asmdba

[root@rac1 ~]# groupadd asmoper

[root@rac1 ~]# groupadd asmadmin

[root@rac1 ~]# useradd -g oinstall -G dba,oper,asmdba oracle

[root@rac1 ~]# useradd -g oinstall -G asmdba,dba,asmadmin,asmoper grid

[root@rac1 ~]# passwd oracle

[root@rac1 ~]# passwd grid

6. Create the directories (same on both nodes)

[root@rac1 ~]# mkdir /u01

[root@rac1 ~]# mkdir /u01/{grid,oracle,gridbase}

[root@rac1 ~]# ls /u01/

grid gridbase oracle

7. Set directory ownership and permissions (same on both nodes)

[root@rac1 ~]# chown -R grid:oinstall /u01/

[root@rac1 ~]# chown -R oracle:oinstall /u01/oracle/

[root@rac1 ~]# chmod -R g+w /u01/

8. Add the environment variables (same on both nodes)

[root@rac1 ~]# vi /home/grid/.bash_profile

ORACLE_BASE=/u01/gridbase

ORACLE_HOME=/u01/grid

PATH=$ORACLE_HOME/bin:$PATH

LD_LIBRARY_PATH=$ORACLE_HOME/lib:$LD_LIBRARY_PATH

export ORACLE_BASE ORACLE_HOME PATH LD_LIBRARY_PATH DISPLAY

[root@rac1 ~]# vi /home/oracle/.bash_profile

ORACLE_BASE=/u01/oracle

ORACLE_HOME=/u01/oracle/db

ORACLE_SID=cludb1   # must differ per node, e.g. cludb1 on rac1 and cludb2 on rac2

PATH=$ORACLE_HOME/bin:$PATH

LD_LIBRARY_PATH=$ORACLE_HOME/lib:$LD_LIBRARY_PATH

export ORACLE_BASE ORACLE_HOME ORACLE_SID PATH LD_LIBRARY_PATH DISPLAY
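The two oracle profiles differ only in ORACLE_SID, so they can be generated from the node number. The following is a hypothetical helper (`oracle_profile` is not part of any Oracle tooling) that prints the lines above for node N:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the oracle user's profile lines for RAC node N.
# Paths and the "cludb" SID prefix follow this guide; only the SID suffix changes.
oracle_profile() {
    local node=$1
    # Unquoted EOF: ${node} expands now, while \$ keeps $ORACLE_HOME etc. literal.
    cat <<EOF
ORACLE_BASE=/u01/oracle
ORACLE_HOME=/u01/oracle/db
ORACLE_SID=cludb${node}
PATH=\$ORACLE_HOME/bin:\$PATH
LD_LIBRARY_PATH=\$ORACLE_HOME/lib:\$LD_LIBRARY_PATH
export ORACLE_BASE ORACLE_HOME ORACLE_SID PATH LD_LIBRARY_PATH DISPLAY
EOF
}
```

For example, on rac2: `oracle_profile 2 >> /home/oracle/.bash_profile`.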

9. Reload the profiles and check that they took effect (same on both nodes)

[root@rac1 ~]# source /home/grid/.bash_profile

[root@rac1 ~]# source /home/oracle/.bash_profile

[root@rac1 ~]# echo $ORACLE_HOME

/u01/oracle/db

10. Tune the Linux kernel parameters (same on both nodes)

[root@rac1 ~]# cat /etc/sysctl.conf

fs.file-max = 6815744

fs.aio-max-nr = 1048576

kernel.shmall = 2097152

kernel.shmmax = 2147483648

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

[root@rac1 ~]# sysctl -p   # apply the kernel parameter changes
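kernel.shmmax and kernel.shmall depend on the host's memory. The sketch below implements one commonly used rule, which is believed to match the minimums in Oracle's 11gR2 Linux installation guide: shmmax is half of physical RAM in bytes, and shmall is at least 2097152 pages. Treat the rule as an assumption and check the guide for your release.

```shell
#!/usr/bin/env bash
# Sketch: derive kernel.shmmax / kernel.shmall from physical memory.
# Assumed rule: shmmax = half of RAM in bytes; shmall = shmmax in pages,
# but never below 2097152 pages (the value used in sysctl.conf above).
# Arguments (memory in bytes, page size) are optional; by default they
# come from /proc/meminfo (reported in kB) and getconf.
shm_params() {
    local mem_bytes page_size shmmax shmall
    mem_bytes=${1:-$(( $(awk '/^MemTotal:/ { print $2 }' /proc/meminfo) * 1024 ))}
    page_size=${2:-$(getconf PAGE_SIZE)}
    shmmax=$(( mem_bytes / 2 ))
    shmall=$(( shmmax / page_size ))
    if [ "$shmall" -lt 2097152 ]; then
        shmall=2097152
    fi
    echo "kernel.shmmax = $shmmax"
    echo "kernel.shmall = $shmall"
}
```

For a 4 GiB host with 4 KiB pages (`shm_params 4294967296 4096`), this rule reproduces the two values in the file above. On larger hosts shmall grows with shmmax.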

11. Edit /etc/security/limits.conf (same on both nodes)

[root@rac1 ~]# vi /etc/security/limits.conf   # append the following at the end of the file

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft stack 10240

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240
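The same five limits repeat for each software owner. The sketch below generates them and appends only the lines that are missing, so re-running it does not duplicate entries. `limits_entries` and `append_limits` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: generate the limits.conf entries above for each Oracle software owner.

# The five "type item value" triples used in this guide.
LIMIT_ITEMS='soft nproc 2047
hard nproc 16384
soft nofile 1024
hard nofile 65536
soft stack 10240'

# Print "<user> <type> <item> <value>" for every user given as an argument.
limits_entries() {
    local user item
    for user in "$@"; do
        printf '%s\n' "$LIMIT_ITEMS" | while IFS= read -r item; do
            echo "$user $item"
        done
    done
}

# Append to FILE only the entries it does not already contain (exact-line match),
# so running it twice is harmless.
append_limits() {
    local file=$1 line
    shift
    limits_entries "$@" | while IFS= read -r line; do
        grep -qxF -- "$line" "$file" 2>/dev/null || echo "$line" >> "$file"
    done
}
```

Usage: `append_limits /etc/security/limits.conf oracle grid`.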

II. Create the shared disks. iSCSI is used here for the shared storage; the steps are as follows

| Role | IP | Hostname |
|---|---|---|
| iSCSI server | 192.168.169.30 | iscsi-server |
| iSCSI client | 192.168.169.10 | rac1 |
| iSCSI client | 192.168.169.20 | rac2 |

Server side

1. Add three disks to the server to serve as the shared disks

[root@iscsi-server ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 5G 0 part /boot

├─sda2 8:2 0 10G 0 part /

└─sda3 8:3 0 5G 0 part [SWAP]

sdb 8:16 0 10G 0 disk

sdc 8:32 0 1G 0 disk

sdd 8:48 0 1G 0 disk

sr0 11:0 1 4.2G 0 rom

2. Install targetd and targetcli on the server

[root@iscsi-server ~]# yum install targetd targetcli -y

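3. Start the service and enable it at boot. The configuration saved by targetcli (saveconfig) is restored at boot by target.service, so that is the unit to enable

[root@iscsi-server ~]# systemctl start target

[root@iscsi-server ~]# systemctl enable target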

4. Manage it with targetcli

[root@iscsi-server ~]# targetcli

/> cd backstores/block

/backstores/block> create disk0 /dev/sdb   # add the three disks

/backstores/block> create disk1 /dev/sdc

/backstores/block> create disk2 /dev/sdd

/backstores/block> cd /iscsi

/iscsi> create   # create the target (name generated automatically) to export the shared resources

/iscsi> cd iqn.2003-01.org.linux-iscsi.....

/iscsi/iqn.20....a1281bc5b561> cd tpg1/luns

/iscsi/iqn.20...561/tpg1/luns> create /backstores/block/disk0

/iscsi/iqn.20...561/tpg1/luns> create /backstores/block/disk1

/iscsi/iqn.20...561/tpg1/luns> create /backstores/block/disk2

/iscsi/iqn.20...561/tpg1/luns> cd ../acls

/iscsi/iqn.20...561/tpg1/acls> create iqn.2003-01.org.linux-iscsi.iscsi-server.x8664:sn.a1281bc5b561

/iscsi/iqn.20...561/tpg1/acls> cd ../portals

/iscsi/iqn.20.../tpg1/portals> delete 0.0.0.0 3260   # remove the default listen address

/iscsi/iqn.20.../tpg1/portals> create 192.168.169.30   # listen on the server's IP address

/iscsi/iqn.20.../tpg1/portals> cd /   # back to the root

/> saveconfig   # save the configuration

/> exit   # quit
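targetcli also accepts a path and a command on its command line, so the interactive session above can be scripted. The sketch below only prints the commands so they can be reviewed. It assumes you pass the target and initiator IQNs explicitly, whereas the session above let targetcli generate the target IQN, and it assumes backstores are named disk0, disk1, ... in device order.

```shell
#!/usr/bin/env bash
# Sketch: print the targetcli session above as one-shot commands for review.
# Assumptions: the target IQN is chosen by you instead of being generated,
# and backstores are named disk0, disk1, ... in device order, as above.
# Usage: targetcli_plan TARGET_IQN INITIATOR_IQN PORTAL_IP DEVICE...
targetcli_plan() {
    local target_iqn=$1 initiator_iqn=$2 portal_ip=$3
    shift 3
    local tpg="/iscsi/$target_iqn/tpg1" i dev

    # Block backstores, one per shared disk.
    i=0
    for dev in "$@"; do
        echo "targetcli /backstores/block create disk$i $dev"
        i=$((i + 1))
    done

    # Target, one LUN per backstore, and the initiator ACL.
    echo "targetcli /iscsi create $target_iqn"
    i=0
    for dev in "$@"; do
        echo "targetcli $tpg/luns create /backstores/block/disk$i"
        i=$((i + 1))
    done
    echo "targetcli $tpg/acls create $initiator_iqn"

    # Replace the default 0.0.0.0:3260 portal with the server's address.
    echo "targetcli $tpg/portals delete 0.0.0.0 3260"
    echo "targetcli $tpg/portals create $portal_ip"
    echo "targetcli saveconfig"
}
```

Usage: `targetcli_plan TARGET_IQN INITIATOR_IQN 192.168.169.30 /dev/sdb /dev/sdc /dev/sdd > plan.sh`. Replace the placeholders, read plan.sh, and only then run `sh plan.sh` on the iSCSI server.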

Client side

Configure this on both rac1 and rac2. The steps are identical, so only one node is shown.

1. Install the initiator on the client

[root@rac2 ~]# yum install iscsi-initiator-utils -y

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* epel: fedora.cs.nctu.edu.tw

Resolving Dependencies

--> Running transaction check

---> Package iscsi-initiator-utils.x86_64 0:6.2.0.874-10.el7 will be installed

--> Processing Dependency: iscsi-initiator-utils-iscsiuio >= 6.2.0.874-10.el7 for package: iscsi-initiator-utils-6.2.0.874-10.el7.x86_64

2. Start the service and enable it at boot

[root@rac2 ~]# systemctl start iscsid

[root@rac2 ~]# systemctl enable iscsid

3. Edit the configuration file: set InitiatorName to the IQN allowed by the ACL created on the server

[root@rac2 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2003-01.org.linux-iscsi.iscsi-server.x8664:sn.a1281bc5b561

4. Restart the client service

[root@rac2 ~]# systemctl restart iscsid

5. Discover the target storage

[root@rac2 ~]# iscsiadm -m discovery -t sendtargets -p 192.168.169.30

192.168.169.30:3260,1 iqn.2003-01.org.linux-iscsi.iscsi-server.x8664:sn.a1281bc5b561

6. Log in to the server

[root@rac2 ~]# iscsiadm --mode node --targetname iqn.2003-01.org.linux-iscsi.iscsi-server.x8664:sn.a1281bc5b561 --portal 192.168.169.30 -l

[root@rac2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 6G 0 part /boot

└─sda2 8:2 0 14G 0 part

├─centos-root 253:0 0 8G 0 lvm /

└─centos-swap 253:1 0 6G 0 lvm [SWAP]

sdb 8:16 0 10G 0 disk   # these are the three disks shared by the server

sdc 8:32 0 1G 0 disk

sdd 8:48 0 1G 0 disk
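To confirm on each node that the three LUNs arrived with the expected sizes, you can run a small filter over lsblk output. The device names and sizes are the ones in this guide and may differ on other systems.

```shell
#!/usr/bin/env bash
# Sketch: confirm the shared iSCSI disks are visible with the expected sizes.
# Reads `lsblk -dn -o NAME,SIZE` output on stdin; the expected "name size"
# pairs below are this guide's and are assumptions for any other setup.
check_shared_disks() {
    local listing want rc=0
    # Normalise each row to "NAME SIZE" separated by a single space.
    listing=$(awk '{ print $1, $2 }')
    for want in "sdb 10G" "sdc 1G" "sdd 1G"; do
        if ! printf '%s\n' "$listing" | grep -qxF "$want"; then
            echo "missing or wrong size: $want" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: `lsblk -dn -o NAME,SIZE | check_shared_disks && echo "shared disks OK"`.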

7. Partition the shared disks (on one node only, here rac1, since the disks are shared)

[root@rac1 ~]# fdisk /dev/sdb

[root@rac1 ~]# fdisk /dev/sdc

[root@rac1 ~]# fdisk /dev/sdd

[root@rac1 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 6G 0 part /boot

└─sda2 8:2 0 14G 0 part

├─centos-root 253:0 0 9G 0 lvm /

└─centos-swap 253:1 0 5G 0 lvm [SWAP]

sdb 8:16 0 10G 0 disk

└─sdb1 8:17 0 10G 0 part

sdc 8:32 0 1G 0 disk

└─sdc1 8:33 0 1020M 0 part

sdd 8:48 0 1G 0 disk

└─sdd1 8:49 0 1020M 0 part
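The fdisk dialogs are not shown above. A non-interactive alternative uses parted and assumes one primary partition per disk, as in the lsblk output. The sketch prints the commands unless RUN=1 is set, because partitioning the wrong device destroys data.

```shell
#!/usr/bin/env bash
# Sketch: one msdos-labelled primary partition spanning each given disk.
# Prints the parted commands by default; executes them only when RUN=1.
partition_plan() {
    local dev
    for dev in "$@"; do
        if [ "${RUN:-0}" = 1 ]; then
            parted -s "$dev" mklabel msdos mkpart primary 1MiB 100%
        else
            echo "parted -s $dev mklabel msdos mkpart primary 1MiB 100%"
        fi
    done
}
```

Usage on rac1: `partition_plan /dev/sdb /dev/sdc /dev/sdd`, then `RUN=1 partition_plan ...` once the output looks right. The other node must then re-read the partition tables, for example with `partprobe`. The `oracleasm scandisks` step later also reloads disk partitions.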

III. Create the ASM disks with oracleasm

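oracleasm needs two packages, oracleasm-support and oracleasmlib. Download them from the Oracle website (or from a yum repository site) to your local machine, then upload them to both nodes, rac1 and rac2, and install them.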

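1. Find the matching packages on the Oracle website

On the Oracle website, search for ASMLib in the search bar to find the packages for your kernel version.

Note: different kernel versions require different versions of the oracleasm packages.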

[root@rac1 ~]# ls

anaconda-ks.cfg oracleasmlib-2.0.12-1.el7.x86_64.rpm oracleasm-support-2.1.11-2.el7.x86_64.rpm

2. Install the oracleasm-support package (on both nodes)

[root@rac1 ~]# rpm -ivh oracleasm-support-2.1.11-2.el7.x86_64.rpm

warning: oracleasm-support.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:oracleasm-support-2.1.11-2.el7 ################################# [100%]

Note: Forwarding request to 'systemctl enable oracleasm.service'.

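3. Then install oracleasmlib with yum (on both nodes). Installing it with rpm may fail with dependency errors, because it needs the kmod-oracleasm kernel module package, which yum pulls in automatically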

[root@rac1 ~]# yum install oracleasmlib-2.0.12-1.el7.x86_64.rpm -y

Loaded plugins: fastestmirror

Examining oracleasmlib-2.0.12.rpm: oracleasmlib-2.0.12-1.el7.x86_64

Marking oracleasmlib-2.0.12.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package oracleasmlib.x86_64 0:2.0.12-1.el7 will be installed

--> Processing Dependency: oracleasm >= 1.0.4 for package: oracleasmlib-2.0.12-1.el7.x86_64

base | 3.6 kB 00:00:00

centosplus | 3.4 kB 00:00:00

epel/x86_64/metalink | 7.3 kB 00:00:00

epel | 4.7 kB 00:00:00

extras | 3.4 kB 00:00:00

updates | 3.4 kB 00:00:00

(1/2): epel/x86_64/updateinfo | 1.0 MB 00:00:02

(2/2): epel/x86_64/primary_db | 6.6 MB 00:00:11

Loading mirror speeds from cached hostfile

* epel: mirrors.nipa.cloud

--> Running transaction check

---> Package kmod-oracleasm.x86_64 0:2.0.8-22.el7 will be installed

--> Finished Dependency Resolution

..... (output omitted)

4. Run the following on node 1 and on node 2

[root@rac1 ~]# oracleasm configure -i

Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library

driver. The following questions will determine whether the driver is

loaded on boot and what permissions it will have. The current values

will be shown in brackets ('[]'). Hitting <ENTER> without typing an

answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: grid

Default group to own the driver interface []: dba

Start Oracle ASM library driver on boot (y/n) [n]: y

Scan for Oracle ASM disks on boot (y/n) [y]: y

Writing Oracle ASM library driver configuration: done

[root@rac1 ~]# oracleasm init

Creating /dev/oracleasm mount point: /dev/oracleasm

Loading module "oracleasm": oracleasm   # if this shows failed, check that your installation method and order match this guide and that the packages match your kernel version

Configuring "oracleasm" to use device physical block size

Mounting ASMlib driver filesystem: /dev/oracleasm

5. If everything above worked, create DISK01, DISK02 and DISK03 (on node 1 only)

[root@rac1 ~]# oracleasm createdisk DISK01 /dev/sdb1

Writing disk header: done

Instantiating disk: done

[root@rac1 ~]# oracleasm createdisk DISK02 /dev/sdc1

Writing disk header: done

Instantiating disk: done

[root@rac1 ~]# oracleasm createdisk DISK03 /dev/sdd1

Writing disk header: done

Instantiating disk: done
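The three createdisk calls follow one pattern, so a list of LABEL=PARTITION pairs can drive them. Like the commands above, this sketch is for node 1 only. It prints the commands unless RUN=1 is set.

```shell
#!/usr/bin/env bash
# Sketch: run (or print) `oracleasm createdisk LABEL PARTITION` per pair.
# Each argument is LABEL=PARTITION, e.g. DISK01=/dev/sdb1.
asm_createdisk_plan() {
    local pair label part
    for pair in "$@"; do
        label=${pair%%=*}   # text before the first "="
        part=${pair#*=}     # text after the first "="
        if [ "${RUN:-0}" = 1 ]; then
            oracleasm createdisk "$label" "$part"
        else
            echo "oracleasm createdisk $label $part"
        fi
    done
}
```

Usage: `asm_createdisk_plan DISK01=/dev/sdb1 DISK02=/dev/sdc1 DISK03=/dev/sdd1`.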

6. List the created disks

[root@rac1 ~]# oracleasm listdisks

DISK01

DISK02

DISK03

7. Scan once on node 2 and the three disks appear

[root@rac2 ~]# oracleasm scandisks

Reloading disk partitions: done

Cleaning any stale ASM disks...

Scanning system for ASM disks...

Instantiating disk "DISK01"

Instantiating disk "DISK02"

Instantiating disk "DISK03"

[root@rac2 ~]# oracleasm listdisks   # if the three disks do not appear, reboot and try again

DISK01

DISK02

DISK03
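On node 2 the check can be made explicit, so a missing disk is reported rather than left for someone to spot by eye. The sketch below compares `oracleasm listdisks` output with the expected labels.

```shell
#!/usr/bin/env bash
# Sketch: compare `oracleasm listdisks` output (stdin) with expected labels.
# Prints each missing label to stderr; returns 1 if any is missing.
verify_asm_disks() {
    local listing label rc=0
    listing=$(cat)
    for label in "$@"; do
        if ! printf '%s\n' "$listing" | grep -qxF "$label"; then
            echo "ASM disk not visible: $label" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: `oracleasm listdisks | verify_asm_disks DISK01 DISK02 DISK03`.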

IV. Set up trust (SSH user equivalence) between the nodes

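1. The users on every node must trust each other. You can set this up manually with OpenSSH, but there are many user/node pairs to cover. Oracle RAC therefore provides a script that does it quickly. On node 1, upload the Oracle RAC installation package and unzip it into /u01/: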

[root@rac1 ~]# unzip pRAC_112040_Linux-x86-64_3of7.zip -d /u01/

[root@rac1 grid]# ls   # sshsetup holds the script RAC provides for setting up trust

install readme.html response rpm runcluvfy.sh runInstaller sshsetup stage welcome.html

[oracle@rac1 sshsetup]#./sshUserSetup.sh -user grid -hosts "rac1 rac2" -advanced -noPromptPassphrase

[oracle@rac1 sshsetup]#./sshUserSetup.sh -user oracle -hosts "rac1 rac2" -advanced -noPromptPassphrase

[root@rac1 sshsetup]# ./sshUserSetup.sh -user root -hosts "rac1 rac2" -advanced -noPromptPassphrase   # script usage, shown here for root

The output of this script is also logged into /tmp/sshUserSetup_2019-04-01-10-52-04.log

Hosts are 192.168.169.20

user is root

Platform:- Linux

Checking if the remote hosts are reachable

PING 192.168.169.20 (192.168.169.20) 56(84) bytes of data.

64 bytes from 192.168.169.20: icmp_seq=1 ttl=64 time=0.410 ms

64 bytes from 192.168.169.20: icmp_seq=2 ttl=64 time=0.406 ms

64 bytes from 192.168.169.20: icmp_seq=3 ttl=64 time=0.388 ms

64 bytes from 192.168.169.20: icmp_seq=4 ttl=64 time=0.394 ms

..... (answer yes and enter the passwords when prompted; output omitted)

3. Verification

On either node, run a command through ssh. If it asks for a password, the check failed; if it does not, the check passed.

[root@rac1 grid]# ssh rac2 date   # the first run may still prompt; run it twice

Mon Apr 1 19:01:59 CST 2019
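`ssh rac2 date` only tests one direction for one user. The installer needs every user (grid and oracle) to reach every node, including its own, without a prompt. The sketch below checks this non-interactively. `BatchMode=yes` makes ssh fail instead of asking for a password or a host-key confirmation, so run it after the first manual logins have accepted the host keys. The `SSH` override is an assumption of this sketch, there only to make it testable.

```shell
#!/usr/bin/env bash
# Sketch: check passwordless SSH from the current user to each given host.
# BatchMode=yes disables password and host-key prompts, so any prompt
# becomes a failure. SSH may override the ssh command (for testing only).
check_ssh_equivalence() {
    local host rc=0
    for host in "$@"; do
        if ${SSH:-ssh} -o BatchMode=yes -o ConnectTimeout=5 "$host" true >/dev/null 2>&1; then
            echo "ok:     $(id -un) -> $host"
        else
            echo "FAILED: $(id -un) -> $host" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

Run it as grid and as oracle on both nodes, for example `check_ssh_equivalence rac1 rac2`.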

4. Verify with the script provided by Oracle RAC

[root@rac1 ~]# su - grid

[grid@rac1 grid]$ ls

install readme.html response rpm runcluvfy.sh runInstaller sshsetup stage welcome.html

[grid@rac1 grid]$ ./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -verbose

Performing pre-checks for cluster services setup

Checking node reachability...

Check: Node reachability from node "rac1"

Destination Node Reachable?

------------------------------------ ------------------------

rac2 yes

rac1 yes

Result: Node reachability check passed from node "rac1"

Checking user equivalence...

Check: User equivalence for user "grid"

Node Name Status

------------------------------------ ------------------------

rac2 passed   # this status must be passed; if it is failed, the installation will fail, so carefully verify the trust relationship for each user

rac1 passed

Result: User equivalence check passed for user "grid"

Checking node connectivity...

Checking hosts config file...

Node Name Status

------------------------------------ ------------------------

rac2 passed

rac1 passed

..... (output omitted)
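The -verbose output is long, and saving it then filtering for failures makes problems easier to spot. The sketch below assumes the formats seen in the excerpt above: `Result:` lines, and per-node rows whose last word is passed or failed.

```shell
#!/usr/bin/env bash
# Sketch: list the lines of a saved cluvfy -verbose log that report a failure.
# Assumed formats (from the excerpt above): "Result: ... check failed ..." lines
# and per-node rows whose last word is the status. Reads FILE, or stdin if absent.
# Exit status follows grep: 0 if failures were found, 1 if none.
cluvfy_failures() {
    grep -inE '(^Result:.*failed|[[:space:]]failed[[:space:]]*$)' "${1:--}"
}
```

Usage: `./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -verbose > /tmp/cluvfy.log; cluvfy_failures /tmp/cluvfy.log`.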

V. Install Oracle 11g RAC

2. Set the environment variables on node 1

[root@rac1 ~]# yum install -y xorg-x11-server-utils

[root@rac1 ~]# export DISPLAY=192.168.111.231:0.0

[root@rac1 ~]# su - grid

[grid@rac1 ~]$ export DISPLAY=192.168.111.231:0.0

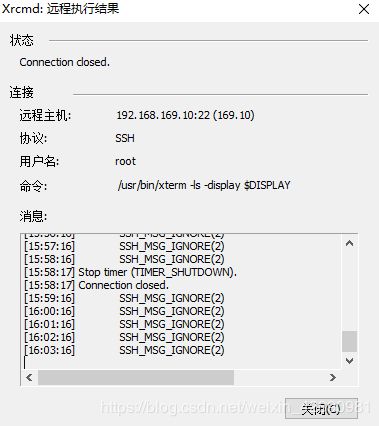

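3. Set up Xmanager

Click File -> New -> Xstart, configure it as shown in the figure, and double-click to run it.

A window like the one in the figure appears on the desktop.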

4. Switch to the grid user and run the installer to start the graphical installation

[grid@rac1 grid]$ ls

install readme.html response rpm runcluvfy.sh runInstaller sshsetup stage welcome.html

[grid@rac1 grid]$ ./runInstaller

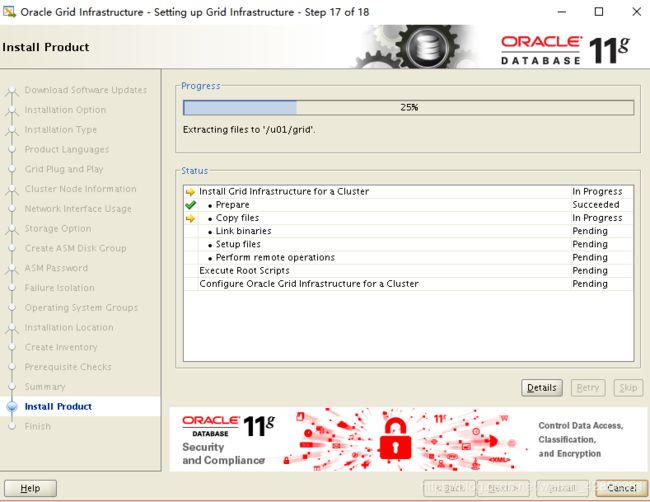

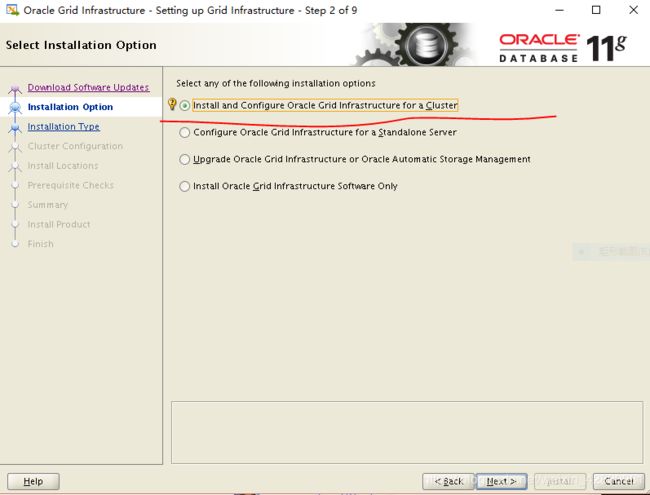

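The graphical installation steps are as follows:

Select Skip software updates, then Next.

Select Install and Configure Oracle Grid Infrastructure for a Cluster, then Next.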

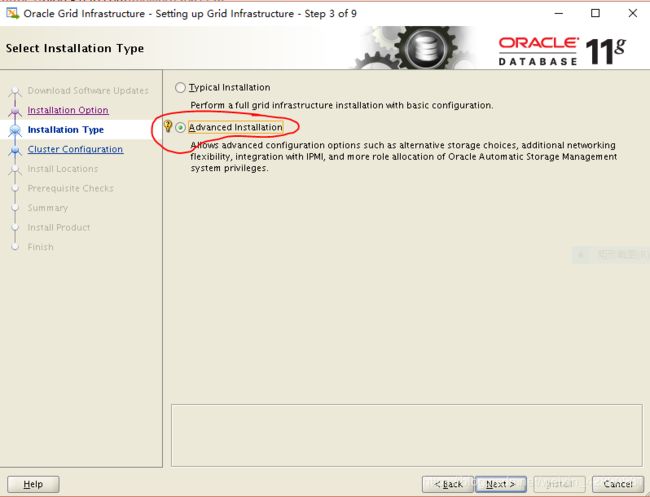

Select Advanced Installation, then Next.

Select the language. English is used here; choose whichever you prefer.

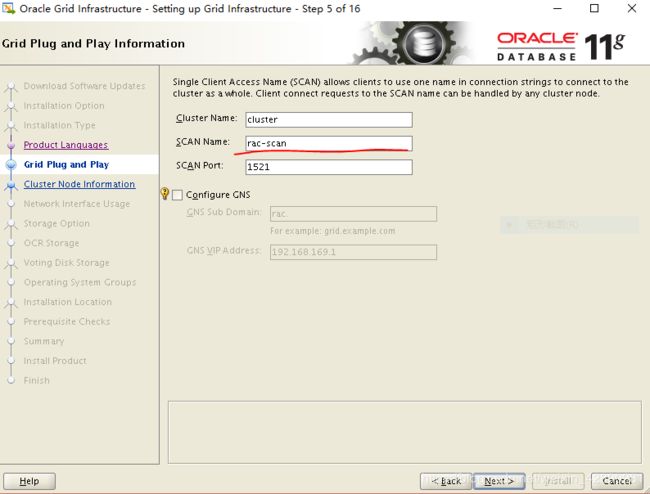

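Choose your own cluster name. The SCAN name must match the SCAN alias in /etc/hosts. Keep the default port, do not configure GNS, then Next.

Click Add to add node 2, filling in the names set in the hosts file, then Next. If SSH trust between node 1 and node 2 is not set up correctly, the next step reports an error.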

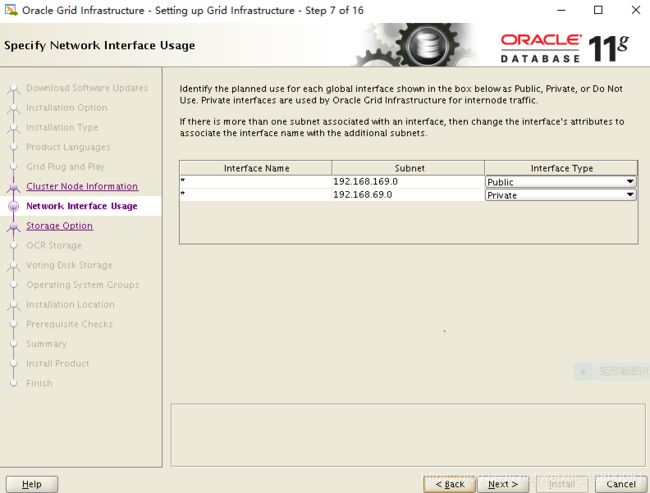

The system detects this step automatically; nothing needs to change, just Next.

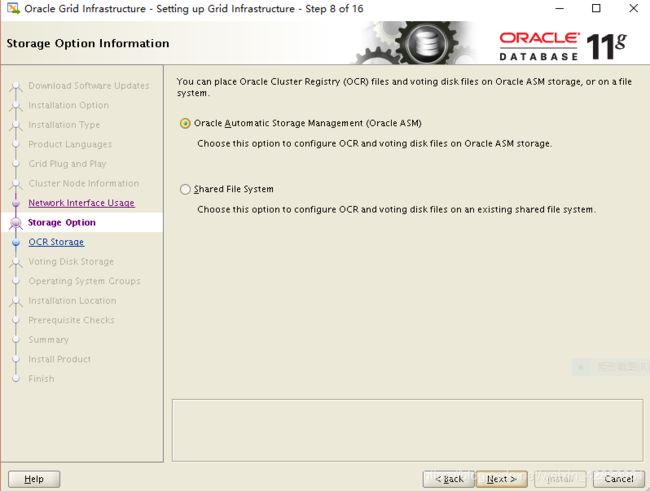

For storage, select ASM, then Next.

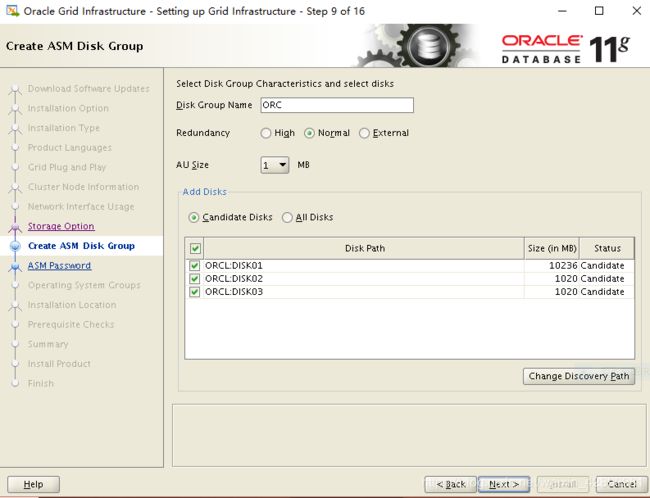

Create an ASM disk group named ORC, select the three disks below, then Next.

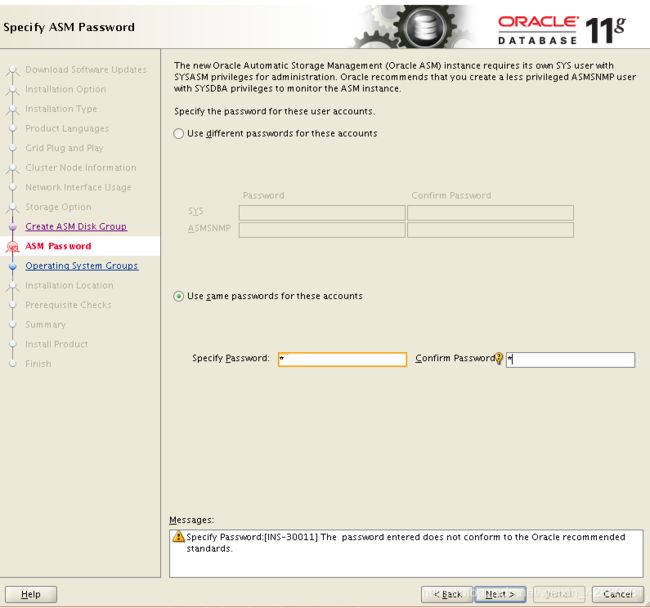

Set the passwords for the ASM administrative accounts (SYS and ASMSNMP).

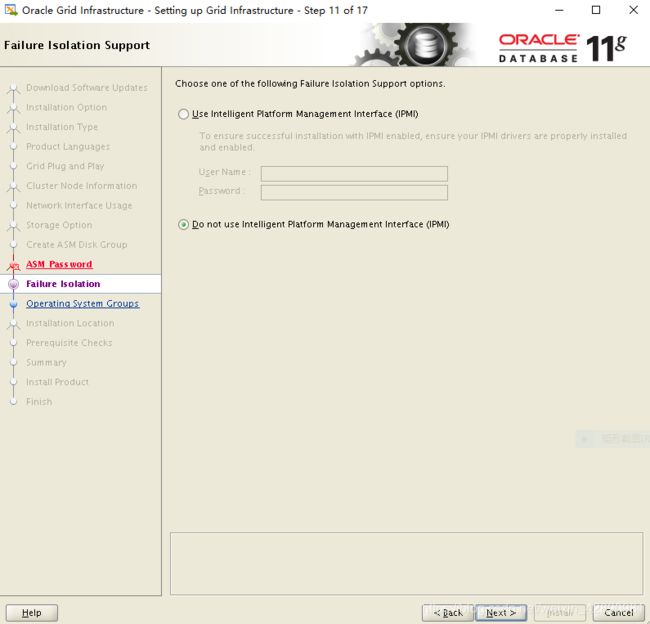

Select Do not use IPMI.

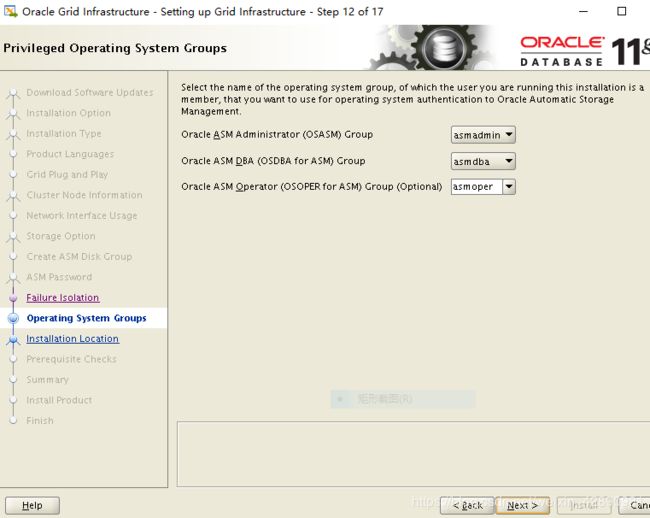

Specify the operating system groups for ASM; keep the defaults here.

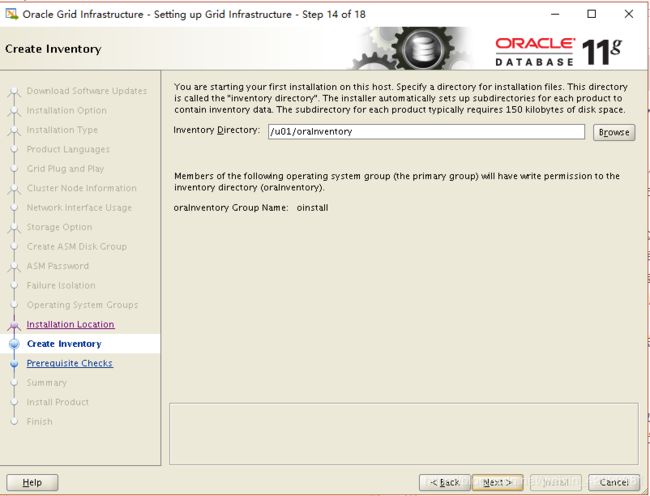

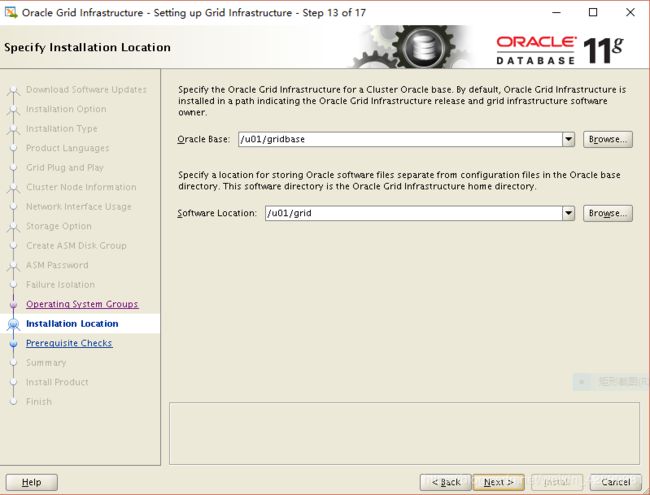

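The wizard fills in the installation paths for the cluster software; continue with Next. Note that the Grid Infrastructure ORACLE_HOME must not be a subdirectory of ORACLE_BASE.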

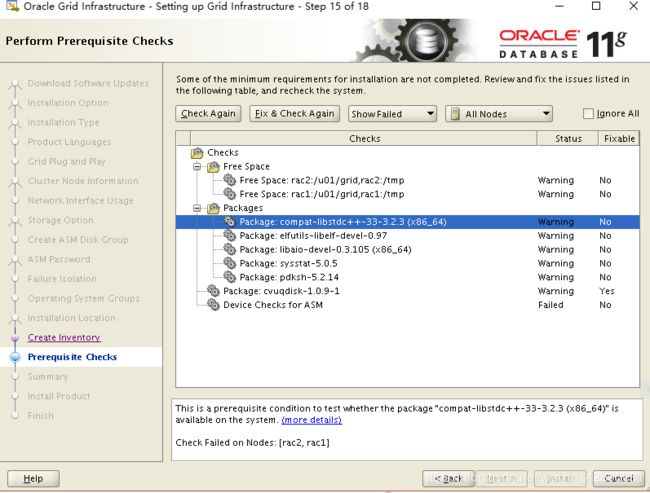

[root@rac1 ~]# yum install -y compat-libstdc++* elfutils-libelf-devel* libaio-devel* sysstat-*

The Free Space check above reports that /tmp on both nodes is too small. Enlarge it first, then continue with the installation.

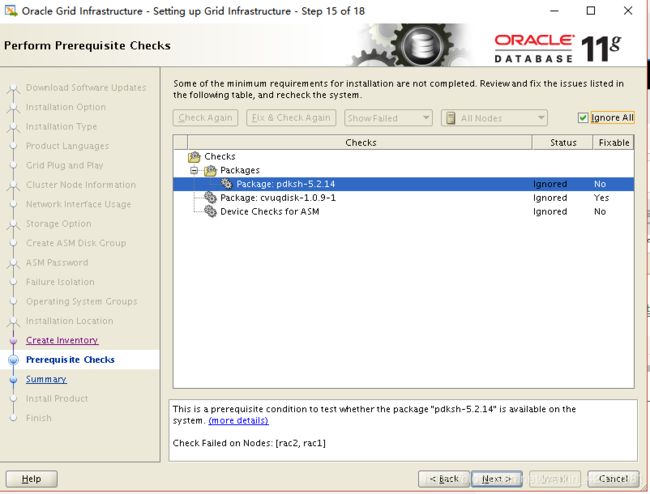

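pdksh is the older package; ksh is used here instead. Install the ksh package on both nodes, tick Ignore All in the upper-right corner to ignore the two remaining errors, then Next.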

yum install ksh* -y
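Several failures in this walkthrough come from missing packages. ksh is needed here, compat-libcap1 fixes root.sh's libcap.so.1 error, and smartmontools is needed for the cvuqdisk install on node 1. The sketch below lists which of these, plus the packages installed above, are still missing, so they can be installed on both nodes before running root.sh. The package list comes from this guide and may not be complete for other setups.

```shell
#!/usr/bin/env bash
# Sketch: print which of the packages this guide ended up needing are not installed.
# The list comes from this walkthrough and is not an official prerequisite list.
RAC_PKGS="ksh compat-libcap1 smartmontools compat-libstdc++-33 elfutils-libelf-devel libaio-devel sysstat"

# Usage: missing_pkgs [PKG...]  (defaults to RAC_PKGS)
# RPM_Q overrides the query command; it exists only to make the sketch testable.
missing_pkgs() {
    local pkg
    for pkg in ${*:-$RAC_PKGS}; do
        ${RPM_Q:-rpm -q} "$pkg" >/dev/null 2>&1 || echo "$pkg"
    done
}
```

Usage on each node: `pkgs=$(missing_pkgs); [ -n "$pkgs" ] && yum install -y $pkgs`.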

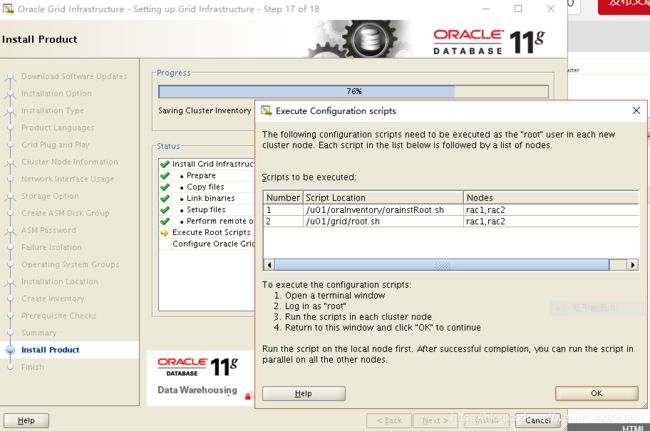

Run the scripts on both nodes (the steps are the same, so only node 2 is shown):

[root@rac2 /]# ./u01/oraInventory/orainstRoot.sh

Changing permissions of /u01/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/oraInventory to oinstall.

The execution of the script is complete.

[root@rac2 /]# ./u01/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

Failed to create keys in the OLR, rc = 127, Message:

/u01/grid/bin/clscfg.bin: error while loading shared libraries: libcap.so.1: cannot open shared object file: No such file or directory   # the error

Failed to create keys in the OLR at /u01/grid/crs/install/crsconfig_lib.pm line 7660.

/u01/grid/perl/bin/perl -I/u01/grid/perl/lib -I/u01/grid/crs/install /u01/grid/crs/install/rootcrs.pl execution failed

The error above is caused by the missing compat-libcap1 package, which provides libcap.so.1. Install it to fix the problem:

[root@rac2 /]# rpm -ivh /mnt/Packages/compat-libcap1-1.10-7.el7.x86_64.rpm

Preparing... ################################# [100%]

Updating / installing...

1:compat-libcap1-1.10-7.el7 ################################# [100%]

Run the script again:

[root@rac2 /]# ./u01/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/grid/crs/install/crsconfig_params

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding Clusterware entries to inittab

ohasd failed to start

Failed to start the Clusterware. Last 20 lines of the alert log follow:   # startup failed here

2019-04-02 19:20:02.264:

[client(24679)]CRS-2101:The OLR was formatted using version 3.

2019-04-02 19:33:46.596:

[client(25560)]CRS-2101:The OLR was formatted using version 3.

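This failure happens because CentOS 7/Red Hat 7 start services with systemd, while the script registers the clusterware in inittab ("Adding Clusterware entries to inittab" above), which is the startup mechanism of CentOS 6/Red Hat 6. The fix is as follows: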

Create the service file as root

[root@rac2 /]# touch /usr/lib/systemd/system/ohas.service

[root@rac2 /]# chmod 644 /usr/lib/systemd/system/ohas.service

Add the following content to the file

[root@rac2 /]# cat /usr/lib/systemd/system/ohas.service

[Unit]

Description=Oracle High Availability Services

After=syslog.target

[Service]

ExecStart=/etc/init.d/init.ohasd run >/dev/null 2>&1 Type=simple

Restart=always

[Install]

WantedBy=multi-user.target

Start the service and enable it at boot

[root@rac2 /]# systemctl daemon-reload

[root@rac2 /]# systemctl start ohas.service

[root@rac2 /]# systemctl enable ohas.service

[root@rac2 /]# systemctl status ohas.service   # check the service status

● ohas.service - Oracle High Availability Services

Loaded: loaded (/usr/lib/systemd/system/ohas.service; enabled; vendor preset: disabled)

Active: active (running) since Tue 2019-04-02 19:57:54 CST; 27s ago

Main PID: 26958 (init.ohasd)

CGroup: /system.slice/ohas.service

└─26958 /bin/sh /etc/init.d/init.ohasd run >/dev/null 2>&1 Type=simple

Apr 02 19:57:54 rac2 systemd[1]: Started Oracle High Availability Services.

Apr 02 19:57:54 rac2 systemd[1]: Starting Oracle High Availability Services...
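systemd does not run ExecStart through a shell. In the one-line form above, `>/dev/null`, `2>&1` and `Type=simple` are therefore passed to init.ohasd as literal arguments, which the status output shows in the process command line. init.ohasd appears to ignore them. Type then falls back to systemd's default, which is simple anyway. The sketch below writes an equivalent unit with Type on its own line and output discarded through systemd options. UNIT_DIR can be overridden only so the file can be written to a scratch directory.

```shell
#!/usr/bin/env bash
# Sketch: write ohas.service with Type= on its own line and output discarded
# via systemd's StandardOutput/StandardError instead of shell redirection.
write_ohas_unit() {
    local dir=${UNIT_DIR:-/usr/lib/systemd/system}
    cat > "$dir/ohas.service" <<'EOF'
[Unit]
Description=Oracle High Availability Services
After=syslog.target

[Service]
Type=simple
ExecStart=/etc/init.d/init.ohasd run
StandardOutput=null
StandardError=null
Restart=always

[Install]
WantedBy=multi-user.target
EOF
    # Unit files should not be executable or world-writable.
    chmod 644 "$dir/ohas.service"
}
```

After writing it, run `systemctl daemon-reload` and `systemctl restart ohas.service`.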

Run the script again

[root@rac2 /]# ./u01/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/grid/crs/install/crsconfig_params

User ignored Prerequisites during installation

Installing Trace File Analyzer

CRS-2672: Attempting to start 'ora.mdnsd' on 'rac2'

CRS-2676: Start of 'ora.mdnsd' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'rac2'

CRS-2676: Start of 'ora.gpnpd' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac2'

CRS-2672: Attempting to start 'ora.gipcd' on 'rac2'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac2' succeeded

CRS-2676: Start of 'ora.gipcd' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac2'

CRS-2672: Attempting to start 'ora.diskmon' on 'rac2'

CRS-2676: Start of 'ora.diskmon' on 'rac2' succeeded

CRS-2676: Start of 'ora.cssd' on 'rac2' succeeded

ASM created and started successfully.

Disk Group ORC created successfully.

clscfg: -install mode specified

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

CRS-4256: Updating the profile

Successful addition of voting disk 03fe2d22d5f14fd8bfd584f5ae6ee175.

Successful addition of voting disk 606e51635a074fadbfa28982844240a8.

Successful addition of voting disk 914f0522839a4fb4bf0fe571652799c8.

Successfully replaced voting disk group with +ORC.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 03fe2d22d5f14fd8bfd584f5ae6ee175 (/dev/oracleasm/disks/DISK01) [ORC]

2. ONLINE 606e51635a074fadbfa28982844240a8 (/dev/oracleasm/disks/DISK02) [ORC]

3. ONLINE 914f0522839a4fb4bf0fe571652799c8 (/dev/oracleasm/disks/DISK03) [ORC]

Located 3 voting disk(s).

CRS-2672: Attempting to start 'ora.asm' on 'rac2'

CRS-2676: Start of 'ora.asm' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.ORC.dg' on 'rac2'

CRS-2676: Start of 'ora.ORC.dg' on 'rac2' succeeded

Node 1 needs the same steps. The problems encountered on node 1 and their fixes follow:

[root@rac1 /]# /u01/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/grid/crs/install/crsconfig_params

User ignored Prerequisites during installation

Installing Trace File Analyzer

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node rac1, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

Preparing packages...

ls: cannot access /usr/sbin/smartctl: No such file or directory

/usr/sbin/smartctl not found.

error: %pre(cvuqdisk-1.0.9-1.x86_64) scriptlet failed, exit status 1

error: cvuqdisk-1.0.9-1.x86_64: install failed

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Fix

[root@rac2 /]# yum install -y smartmontools   # install this package first (it provides /usr/sbin/smartctl)

Loaded plugins: fastestmirror

base | 3.6 kB 00:00:00

centosplus | 3.4 kB 00:00:00

epel/x86_64/metalink | 5.2 kB 00:00:00

epel | 4.7 kB 00:00:00

extras | 3.4 kB 00:00:00

updates | 3.4 kB 00:00:00

Loading mirror speeds from cached hostfile

* epel: ftp.jaist.ac.jp

Resolving Dependencies

--> Running transaction check

---> Package smartmontools.x86_64 1:6.5-1.el7 will be installed

--> Processing Dependency: mailx for package: 1:smartmontools-6.5-1.el7.x86_64

--> Running transaction check

---> Package mailx.x86_64 0:12.5-19.el7 will be installed

--> Finished Dependency Resolution

.... (output omitted)

[root@rac1 ~]# find /u01/grid/ -iname 'cvuqdisk*'   # locate the rpm package

/u01/grid/stage/cvu/cv/remenv/cvuqdisk-1.0.9-1.rpm

/u01/grid/rpm/cvuqdisk-1.0.9-1.rpm

/u01/grid/cv/remenv/cvuqdisk-1.0.9-1.rpm

/u01/grid/cv/rpm/cvuqdisk-1.0.9-1.rpm

[root@rac2 remenv]# rpm -ivh cvuqdisk-1.0.9-1.rpm   # install the rpm package

Preparing... ################################# [100%]

Updating / installing...

1:cvuqdisk-1.0.9-1 ################################# [100%]

Run the script again

[root@rac1 ~]# /u01/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/grid/crs/install/crsconfig_params

User ignored Prerequisites during installation

Installing Trace File Analyzer

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

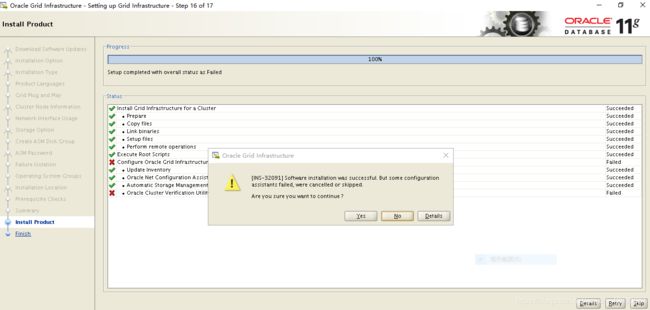

回到图形化安装界面

这个报错不用去管,不影响使用,点击ok ,在next

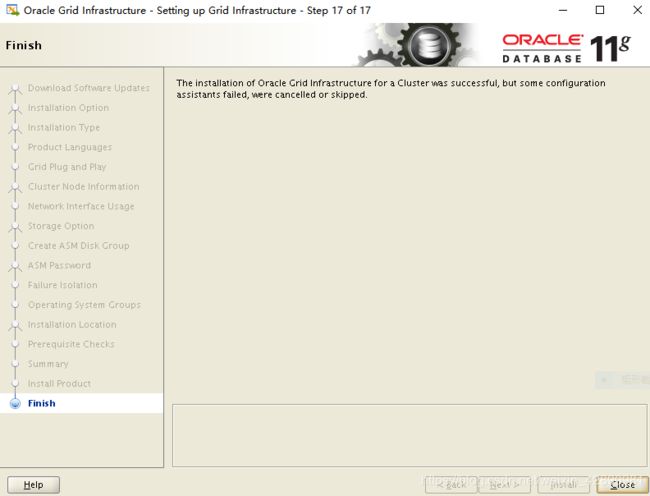

到这里oracle RAC 就部署完成!!!

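Verification: because of how Oracle RAC works, checking the IP addresses shows the node's addresses from the hosts file. Once the clusterware has started, the VIP and SCAN IP appear on the NIC automatically.

Node 1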

[root@rac1 ~]# ifconfig

ens32: flags=4163 mtu 1500

inet 192.168.169.10 netmask 255.255.255.0 broadcast 192.168.169.255

inet6 fe80::20c:29ff:fe39:cb59 prefixlen 64 scopeid 0x20

ether 00:0c:29:39:cb:59 txqueuelen 1000 (Ethernet)

RX packets 531641 bytes 169187531 (161.3 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 4407806 bytes 11499468793 (10.7 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens32:1: flags=4163 mtu 1500

inet 192.168.169.111 netmask 255.255.255.0 broadcast 192.168.169.255

ether 00:0c:29:39:cb:59 txqueuelen 1000 (Ethernet)

ens32:2: flags=4163 mtu 1500

inet 192.168.169.63 netmask 255.255.255.0 broadcast 192.168.169.255

ether 00:0c:29:39:cb:59 txqueuelen 1000 (Ethernet)

ens34: flags=4163 mtu 1500

inet 192.168.69.10 netmask 255.255.255.0 broadcast 192.168.69.255

inet6 fe80::20c:29ff:fe39:cb63 prefixlen 64 scopeid 0x20

ether 00:0c:29:39:cb:63 txqueuelen 1000 (Ethernet)

RX packets 25069 bytes 16204814 (15.4 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 20507 bytes 16315657 (15.5 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens34:1: flags=4163 mtu 1500

inet 169.254.151.109 netmask 255.255.0.0 broadcast 169.254.255.255

ether 00:0c:29:39:cb:63 txqueuelen 1000 (Ethernet)

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10

loop txqueuelen 1000 (Local Loopback)

RX packets 19096 bytes 12767402 (12.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 19096 bytes 12767402 (12.1 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Node 2

[root@rac2 ~]# ifconfig

ens32: flags=4163 mtu 1500

inet 192.168.169.20 netmask 255.255.255.0 broadcast 192.168.169.255

inet6 fe80::20c:29ff:fec2:5275 prefixlen 64 scopeid 0x20

ether 00:0c:29:c2:52:75 txqueuelen 1000 (Ethernet)

RX packets 3880882 bytes 5766070909 (5.3 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 159379 bytes 15401359 (14.6 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens32:1: flags=4163 mtu 1500

inet 192.168.169.222 netmask 255.255.255.0 broadcast 192.168.169.255

ether 00:0c:29:c2:52:75 txqueuelen 1000 (Ethernet)

ens34: flags=4163 mtu 1500

inet 192.168.69.20 netmask 255.255.255.0 broadcast 192.168.69.255

inet6 fe80::20c:29ff:fec2:527f prefixlen 64 scopeid 0x20

ether 00:0c:29:c2:52:7f txqueuelen 1000 (Ethernet)

RX packets 23181 bytes 14226969 (13.5 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 23942 bytes 17856083 (17.0 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens34:1: flags=4163 mtu 1500

inet 169.254.49.129 netmask 255.255.0.0 broadcast 169.254.255.255

ether 00:0c:29:c2:52:7f txqueuelen 1000 (Ethernet)

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10

loop txqueuelen 1000 (Local Loopback)

RX packets 8264 bytes 4330241 (4.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 8264 bytes 4330241 (4.1 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

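Verify Oracle RAC high availability

No database is installed yet, so shut down the node 2 server to simulate a node failure, and check whether node 2's VIP fails over.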

[root@rac2 ~]# shutdown -h now

PolicyKit daemon disconnected from the bus.

We are no longer a registered authentication agent.

Connection closing...Socket close.

Check the addresses on node 1: node 2's VIP has failed over to it

[root@rac1 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:39:cb:59 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.10/24 brd 192.168.169.255 scope global ens32

valid_lft forever preferred_lft forever

inet 192.168.169.111/24 brd 192.168.169.255 scope global secondary ens32:1

valid_lft forever preferred_lft forever

inet 192.168.169.63/24 brd 192.168.169.255 scope global secondary ens32:2

valid_lft forever preferred_lft forever

inet 192.168.169.222/24 brd 192.168.169.255 scope global secondary ens32:3   # failed over: high availability works

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe39:cb59/64 scope link

valid_lft forever preferred_lft forever

3: ens34: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:39:cb:63 brd ff:ff:ff:ff:ff:ff

inet 192.168.69.10/24 brd 192.168.69.255 scope global ens34

valid_lft forever preferred_lft forever

inet 169.254.151.109/16 brd 169.254.255.255 scope global ens34:1

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe39:cb63/64 scope link

valid_lft forever preferred_lft forever
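You can script the failover check instead of reading `ip a` by eye. The sketch below tests whether an address appears in `ip addr` output fed on stdin, so a saved capture can also be checked. From the clusterware side, `crsctl stat res -t` (run from the grid home's bin directory) should show the same relocation of rac2's VIP.

```shell
#!/usr/bin/env bash
# Sketch: return 0 if IPv4 address IP appears in `ip addr` output on stdin.
# Works with both `ip -4 -o addr show` and the multi-line `ip a` format,
# since each address follows an "inet" token as "address/prefix".
has_ip() {
    awk -v ip="$1" '
        { for (i = 1; i < NF; i++) if ($i == "inet") { split($(i + 1), a, "/"); if (a[1] == ip) found = 1 } }
        END { exit !found }'
}
```

Usage on rac1 after rac2 is shut down: `ip -4 -o addr show | has_ip 192.168.169.222 && echo "rac2-vip is now on $(hostname)"`.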