ConcurrentHashMap的JDK1.8实现

今天我们介绍一下ConcurrentHashMap在JDK1.8中的实现。

基本结构

相关节点

get()操作

size()操作

两个方法都同时调用了,sumCount()方法。对于每个table[i]都有一个CounterCell与之对应,上面方法做了求和之后就返回了。从而可以看出,size()和mappingCount()返回的都是一个 估计值。 (这一点与JDK1.7里面的实现不同,1.7里面使用了加锁的方式实现。这里面也可以看出JDK1.8牺牲了精度,来换取更高的效率。)

链接: http://moguhu.com/article/detail?articleId=36

基本结构

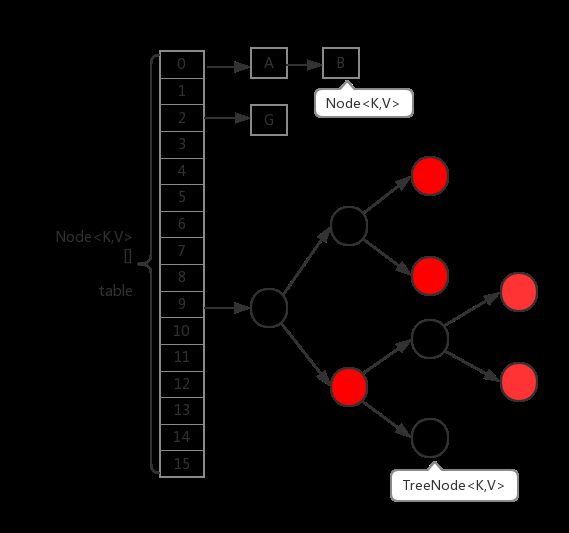

ConcurrentHashMap在1.8中的实现,相比于1.7的版本基本上全部都变掉了。首先,取消了Segment分段锁的数据结构,取而代之的是数组+链表(红黑树)的结构。而对于锁的粒度,调整为对每个数组元素加锁(Node)。然后是定位节点的hash算法被简化了,这样带来的弊端是Hash冲突会加剧。因此在链表节点数量大于8时,会将链表转化为红黑树进行存储。这样一来,查询的时间复杂度就会由原先的O(n)变为O(logN)。下面是其基本结构:

相关属性

private transient volatile int sizeCtl;- -1:table[]正在初始化。

- -N:表示有N-1个线程正在进行扩容操作。

- 如果table[]未初始化,则表示table需要初始化的大小。

- 如果初始化完成,则表示table[]扩容的阀值,默认是table[]容量的0.75 倍。

private static finalint DEFAULT_CONCURRENCY_LEVEL = 16;- DEFAULT_CONCURRENCY_LEVEL:表示默认的并发级别,也就是table[]的默认大小。

private static final float LOAD_FACTOR = 0.75f;- LOAD_FACTOR:默认的负载因子。

static final int TREEIFY_THRESHOLD = 8;- TREEIFY_THRESHOLD:链表转红黑树的阀值,当table[i]下面的链表长度大于8时就转化为红黑树结构。

static final int UNTREEIFY_THRESHOLD = 6;- UNTREEIFY_THRESHOLD:红黑树转链表的阀值,当链表长度<=6时转为链表(扩容时)。

public ConcurrentHashMap(int initialCapacity,

float loadFactor, int concurrencyLevel) {

if (!(loadFactor > 0.0f) || initialCapacity < 0 || concurrencyLevel <= 0)

throw new IllegalArgumentException();

if (initialCapacity < concurrencyLevel) // 初始化容量至少要为concurrencyLevel

initialCapacity = concurrencyLevel;

long size = (long)(1.0 + (long)initialCapacity / loadFactor);

int cap = (size >= (long)MAXIMUM_CAPACITY) ?

MAXIMUM_CAPACITY : tableSizeFor((int)size);

this.sizeCtl = cap;

}相关节点

- Node:该类用于构造table[],只读节点(不提供修改方法)。

- TreeBin:红黑树结构。

- TreeNode:红黑树节点。

- ForwardingNode:临时节点(扩容时使用)。

public V put(K key, V value) {

return putVal(key, value, false);

}

final V putVal(K key, V value, boolean onlyIfAbsent) {

if (key == null || value == null) throw new NullPointerException();

int hash = spread(key.hashCode());

int binCount = 0;

for (Node[] tab = table;;) {

Node f; int n, i, fh;

if (tab == null || (n = tab.length) == 0)// 若table[]未创建,则初始化

tab = initTable();

else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {// table[i]后面无节点时,直接创建Node(无锁操作)

if (casTabAt(tab, i, null,

new Node(hash, key, value, null)))

break; // no lock when adding to empty bin

}

else if ((fh = f.hash) == MOVED)// 如果当前正在扩容,则帮助扩容并返回最新table[]

tab = helpTransfer(tab, f);

else {// 在链表或者红黑树中追加节点

V oldVal = null;

synchronized (f) {// 这里并没有使用ReentrantLock,说明synchronized已经足够优化了

if (tabAt(tab, i) == f) {

if (fh >= 0) {// 如果为链表结构

binCount = 1;

for (Node e = f;; ++binCount) {

K ek;

if (e.hash == hash &&

((ek = e.key) == key ||

(ek != null && key.equals(ek)))) {// 找到key,替换value

oldVal = e.val;

if (!onlyIfAbsent)

e.val = value;

break;

}

Node pred = e;

if ((e = e.next) == null) {// 在尾部插入Node

pred.next = new Node(hash, key,

value, null);

break;

}

}

}

else if (f instanceof TreeBin) {// 如果为红黑树

Node p;

binCount = 2;

if ((p = ((TreeBin)f).putTreeVal(hash, key,

value)) != null) {

oldVal = p.val;

if (!onlyIfAbsent)

p.val = value;

}

}

}

}

if (binCount != 0) {

if (binCount >= TREEIFY_THRESHOLD)// 到达阀值,变为红黑树结构

treeifyBin(tab, i);

if (oldVal != null)

return oldVal;

break;

}

}

}

addCount(1L, binCount);

return null;

} - 参数校验。

- 若table[]未创建,则初始化。

- 当table[i]后面无节点时,直接创建Node(无锁操作)。

- 如果当前正在扩容,则帮助扩容并返回最新table[]。

- 然后在链表或者红黑树中追加节点。

- 最后还回去判断是否到达阀值,如到达变为红黑树结构。

get()操作

public V get(Object key) {

Node[] tab; Node e, p; int n, eh; K ek;

int h = spread(key.hashCode());// 定位到table[]中的i

if ((tab = table) != null && (n = tab.length) > 0 &&

(e = tabAt(tab, (n - 1) & h)) != null) {// 若table[i]存在

if ((eh = e.hash) == h) {// 比较链表头部

if ((ek = e.key) == key || (ek != null && key.equals(ek)))

return e.val;

}

else if (eh < 0)// 若为红黑树,查找树

return (p = e.find(h, key)) != null ? p.val : null;

while ((e = e.next) != null) {// 循环链表查找

if (e.hash == h &&

((ek = e.key) == key || (ek != null && key.equals(ek))))

return e.val;

}

}

return null;// 未找到

} - 首先定位到table[]中的i。

- 若table[i]存在,则继续查找。

- 首先比较链表头部,如果是则返回。

- 然后如果为红黑树,查找树。

- 最后再循环链表查找。

size()操作

// 1.2时加入

public int size() {

long n = sumCount();

return ((n < 0L) ? 0 :

(n > (long)Integer.MAX_VALUE) ? Integer.MAX_VALUE :

(int)n);

}

// 1.8加入的API

public long mappingCount() {

long n = sumCount();

return (n < 0L) ? 0L : n; // ignore transient negative values

}

final long sumCount() {

CounterCell[] as = counterCells; CounterCell a;

long sum = baseCount;

if (as != null) {

for (int i = 0; i < as.length; ++i) {

if ((a = as[i]) != null)

sum += a.value;

}

}

return sum;

}两个方法都同时调用了,sumCount()方法。对于每个table[i]都有一个CounterCell与之对应,上面方法做了求和之后就返回了。从而可以看出,size()和mappingCount()返回的都是一个 估计值。 (这一点与JDK1.7里面的实现不同,1.7里面使用了加锁的方式实现。这里面也可以看出JDK1.8牺牲了精度,来换取更高的效率。)

链接: http://moguhu.com/article/detail?articleId=36