Barcode and QR Code Scanning with a USB Camera on Android (1)

Contents:

1. Introduction

2. Low-level configuration

3. JNI implementation

4. Summary

5. Bugs and optimization notes

Barcode and QR Code Scanning with a USB Camera on Android (2)

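1. Introduction

Our company's product is a control system built on an Android development board, and it needed a QR code scanning feature driven by a UVC (USB) camera. These are my notes on that work, and a chance to retrace my earlier reasoning. The Android version is 4.4.4W on a custom ROM. The implementation is based on the open-source simplewebcam project, which is worth a look if you are interested, although some of its error handling is flawed.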

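2. Low-level configuration

Link: Android USB Camera(1) : 调试记录 (debugging notes)

I did not do the low-level work myself, so I will not go into it here; the article above covers it. Note that the camera may be enumerated under a different device node. On my ROM it shows up as video4, so open whichever node the camera actually gets on your system.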
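Since the node number depends on the ROM, it can help to probe for it rather than hard-code video4. The following is only a sketch: `is_char_device` and `find_video_nodes` are hypothetical helper names, and a node passing this check is merely a character device, so the VIDIOC_QUERYCAP check in section 3 is still needed to confirm it is a capture device.

```c
#include <stdio.h>
#include <sys/stat.h>

/* Returns 1 if path exists and is a character device, 0 otherwise. */
int is_char_device(const char *path)
{
    struct stat st;

    if (stat(path, &st) != 0)
        return 0;
    return S_ISCHR(st.st_mode) ? 1 : 0;
}

/*
 * Probes <prefix>0 .. <prefix>(max_nodes - 1), e.g. prefix "/dev/video",
 * and stores the indices that are character devices in out[].
 * Returns how many indices were stored (at most out_cap).
 */
int find_video_nodes(const char *prefix, int max_nodes, int *out, int out_cap)
{
    char path[64];
    int count = 0;

    for (int i = 0; i < max_nodes && count < out_cap; ++i) {
        snprintf(path, sizeof path, "%s%d", prefix, i);
        if (is_char_device(path))
            out[count++] = i;
    }
    return count;
}
```

Calling `find_video_nodes("/dev/video", 8, found, 8)` on the board would list the candidate indices to pass on as the video id.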

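3. JNI implementation

V4L2 is short for Video4Linux2, the Linux kernel's driver framework for video devices. On Linux a video device is exposed as a device file that can be read and written much like an ordinary file; here the camera is /dev/video4.

If UVC and V4L2 are unfamiliar, a quick web search will fill in the background.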

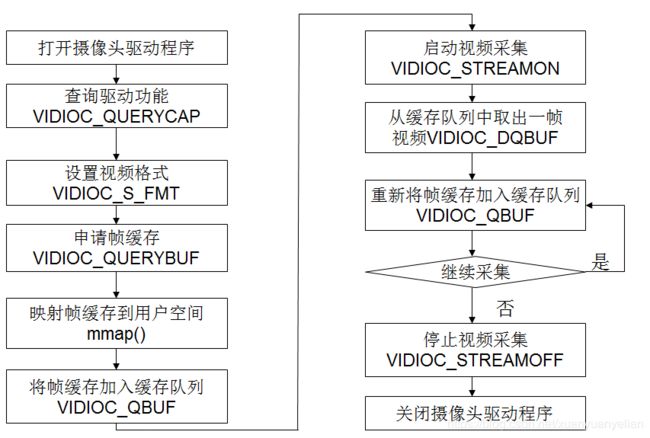

3.1 操作流程

盗个图:大概的流程如下图:
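For readers who cannot see the diagram, the order of operations can also be read off the functions in section 3.2. The sketch below only records the call order; every function body here is a stand-in, not the real implementation, and the ioctl names in the comments are my reading of the listings rather than a transcription of the diagram. `run_capture_session`, `trace` and `mark` are hypothetical names.

```c
#include <string.h>

/* Call trace filled in by the stand-in steps below, one letter per call. */
static char trace[64];

static void mark(char c)
{
    size_t n = strlen(trace);

    if (n + 1 < sizeof trace) {
        trace[n] = c;
        trace[n + 1] = '\0';
    }
}

/* Stand-ins named after the functions in section 3.2. */
static int opendevice(int i)    { (void)i; mark('O'); return 0; } /* stat + open */
static int initdevice(void)     { mark('I'); return 0; } /* QUERYCAP, S_FMT, REQBUFS, mmap */
static int startcapturing(void) { mark('S'); return 0; } /* QBUF each buffer, STREAMON */
static int readframeonce(void)  { mark('R'); return 0; } /* select, DQBUF, convert, QBUF */
static int stopcapturing(void)  { mark('T'); return 0; } /* STREAMOFF */
static int uninitdevice(void)   { mark('U'); return 0; } /* release the buffers */
static int closedevice(void)    { mark('C'); return 0; } /* close the fd */

/* Opens the camera, grabs `frames` frames, then tears everything down. */
int run_capture_session(int video_index, int frames)
{
    int rc = opendevice(video_index);

    if (rc != 0)
        return rc;

    rc = initdevice();
    if (rc == 0) {
        rc = startcapturing();
        for (int n = 0; rc == 0 && n < frames; ++n)
            rc = readframeonce();
        stopcapturing();
        uninitdevice();
    }
    closedevice();
    return rc;
}
```

In the real library these steps are spread across prepareCamera (open, init, start), processCamera (one frame per call) and stopCamera (stop, uninit, close).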

3.2 Code walkthrough

The JNI layer exposes 4 interface methods:

jint Java_com_camera_usbcam_CameraPreview_prepareCamera( JNIEnv* env,jobject thiz, jint videoid, jint videobase, jint mirrorType, jint WIDTH, jint HEIGHT);

jint Java_com_camera_usbcam_CameraPreview_processCamera( JNIEnv* env,jobject thiz);

void Java_com_camera_usbcam_CameraPreview_stopCamera(JNIEnv* env,jobject thiz);

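void Java_com_camera_usbcam_CameraPreview_pixeltobmp(JNIEnv* env,jobject thiz, jobject bitmap);

prepareCamera: initializes the camera before the preview starts. mirrorType controls image flipping: the camera is fixed to the instrument, so the captured data has to be flipped for the picture to display upright.

stopCamera: releases the camera device when the screen exits.

processCamera: fetches the image data captured by the camera.

pixeltobmp: converts the image data into a bitmap for display in the Java layer.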
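The listings that follow call a few helpers that this post never shows: xioctl, errnoexit, CLEAR, LOGE, ERROR_LOCAL and SUCCESS_LOCAL. A minimal sketch of what they might look like is given here. The constant values are assumptions (0 for success matches the Java side checking `processCamera() == 0`, and -1 for failure is a guess), LOGE is replaced with a stderr stand-in so the sketch builds off-device, and the errnoexit message format is modelled on the log lines in section 5.

```c
#include <errno.h>
#include <stdio.h>
#include <string.h>
#include <sys/ioctl.h>

#define ERROR_LOCAL   (-1)  /* assumed value */
#define SUCCESS_LOCAL 0     /* assumed value */

/* Stand-in for the Android log macro: prints to stderr instead of logcat. */
#define LOGE(...) (fprintf(stderr, __VA_ARGS__), fputc('\n', stderr))

/* Zeroes a struct before it is handed to an ioctl. */
#define CLEAR(x) memset(&(x), 0, sizeof(x))

/* ioctl wrapper that retries when the call is interrupted by a signal. */
int xioctl(int fd, unsigned long request, void *arg)
{
    int r;

    do {
        r = ioctl(fd, request, arg);
    } while (r == -1 && errno == EINTR);
    return r;
}

/* Logs the failed operation together with errno and returns the error code. */
int errnoexit(const char *s)
{
    LOGE("%s error %d, %s", s, errno, strerror(errno));
    return ERROR_LOCAL;
}
```

Retrying on EINTR keeps a stray signal from being reported as a camera failure, which matters because the capture loop runs on a worker thread.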

(1) Open the device node

// Open the video device
int opendevice(int i)
{
    struct stat st;

    sprintf(dev_name,"/dev/video%d",i);

    // stat() fetches the file attributes; it returns 0 on success and -1 on error
    if (-1 == stat (dev_name, &st)) {
        LOGE("Cannot identify '%s': %d, %s", dev_name, errno, strerror (errno));
        return ERROR_LOCAL;
    }

    // Make sure it is a character device file
    if (!S_ISCHR (st.st_mode)) {
        LOGE("%s is no device", dev_name);
        return ERROR_LOCAL;
    }

    // Open the device
    fd = open (dev_name, O_RDWR);
    if (-1 == fd) {
        LOGE("Cannot open '%s': %d, %s", dev_name, errno, strerror (errno));
        return ERROR_LOCAL;
    }

    return SUCCESS_LOCAL;
}

(2) Query and initialize the camera driver, and set the video format

// Initialize the device
int initdevice(void)
{
    struct v4l2_capability cap;
    struct v4l2_cropcap cropcap;
    struct v4l2_crop crop;
    struct v4l2_format fmt;
    unsigned int min;

    // VIDIOC_QUERYCAP reports the capabilities of the current device
    if (-1 == xioctl (fd, VIDIOC_QUERYCAP, &cap)) {
        if (EINVAL == errno) {
            LOGE("%s is no V4L2 device", dev_name);
            return ERROR_LOCAL;
        } else {
            return errnoexit ("VIDIOC_QUERYCAP");
        }
    }

    // V4L2_CAP_VIDEO_CAPTURE 0x00000001
    // The device supports the video capture interface
    if (!(cap.capabilities & V4L2_CAP_VIDEO_CAPTURE)) {
        LOGE("%s is no video capture device", dev_name);
        return ERROR_LOCAL;
    }

    // V4L2_CAP_STREAMING 0x04000000
    // The device supports streaming I/O
    if (!(cap.capabilities & V4L2_CAP_STREAMING)) {
        LOGE("%s does not support streaming i/o", dev_name);
        return ERROR_LOCAL;
    }

    // Image cropping and scaling support (only the query struct is prepared here)
    CLEAR (cropcap);
    cropcap.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

    // Set the image format
    CLEAR (fmt);
    fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    fmt.fmt.pix.width = IMG_WIDTH;
    fmt.fmt.pix.height = IMG_HEIGHT;
    fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;
    fmt.fmt.pix.field = V4L2_FIELD_INTERLACED;

    // Apply the format to the driver
    if (-1 == xioctl (fd, VIDIOC_S_FMT, &fmt))
        return errnoexit ("VIDIOC_S_FMT");

    // Bytes per line: YUYV uses 2 bytes per pixel
    min = fmt.fmt.pix.width * 2;
    if (fmt.fmt.pix.bytesperline < min)
        fmt.fmt.pix.bytesperline = min;

    min = fmt.fmt.pix.bytesperline * fmt.fmt.pix.height;
    if (fmt.fmt.pix.sizeimage < min)
        fmt.fmt.pix.sizeimage = min;

    return initmmap ();
}

(3) Set the I/O mode and request buffers

// Select the I/O mode (memory mapping)
int initmmap(void)
{
    struct v4l2_requestbuffers req;

    CLEAR (req);
    req.count = 4;
    req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    req.memory = V4L2_MEMORY_MMAP;

    if (-1 == xioctl (fd, VIDIOC_REQBUFS, &req)) {
        if (EINVAL == errno) {
            LOGE("%s does not support memory mapping", dev_name);
            return ERROR_LOCAL;
        } else {
            return errnoexit ("VIDIOC_REQBUFS");
        }
    }

    if (req.count < 2) {
        LOGE("Insufficient buffer memory on %s", dev_name);
        return ERROR_LOCAL;
    }

    buffers = calloc (req.count, sizeof (*buffers));
    if (!buffers) {
        LOGE("Out of memory");
        return ERROR_LOCAL;
    }

    for (n_buffers = 0; n_buffers < req.count; ++n_buffers) {
        struct v4l2_buffer buf;

        CLEAR (buf);
        buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
        buf.memory = V4L2_MEMORY_MMAP;
        buf.index = n_buffers;

        if (-1 == xioctl (fd, VIDIOC_QUERYBUF, &buf))
            return errnoexit ("VIDIOC_QUERYBUF");

        buffers[n_buffers].length = buf.length;
        buffers[n_buffers].start =
            mmap (NULL ,
                  buf.length,
                  PROT_READ | PROT_WRITE,
                  MAP_SHARED,
                  fd, buf.m.offset);

        if (MAP_FAILED == buffers[n_buffers].start)
            return errnoexit ("mmap");
    }

    return SUCCESS_LOCAL;
}

(4) Start capturing

int startcapturing(void)
{
    unsigned int i;
    enum v4l2_buf_type type;

    for (i = 0; i < n_buffers; ++i) {
        struct v4l2_buffer buf;

        CLEAR (buf);
        buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
        buf.memory = V4L2_MEMORY_MMAP;
        buf.index = i;

        if (-1 == xioctl (fd, VIDIOC_QBUF, &buf))
            return errnoexit ("VIDIOC_QBUF");
    }

    type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    if (-1 == xioctl (fd, VIDIOC_STREAMON, &type))
        return errnoexit ("VIDIOC_STREAMON");

    return SUCCESS_LOCAL;
}

(5) Dequeue one frame from the buffer queue

int readframeonce(void)
{
    for (;;) {
        fd_set fds;
        struct timeval tv;
        int r;

        FD_ZERO (&fds);
        FD_SET (fd, &fds);

        tv.tv_sec = 2;
        tv.tv_usec = 0;

        r = select (fd + 1, &fds, NULL, NULL, &tv);
        if (-1 == r) {
            if (EINTR == errno)
                continue;
            return errnoexit ("select");
        }

        if (0 == r) {
            LOGE("select timeout");
            return ERROR_LOCAL;
        }

        if (readframe ()==1)
            break;
        else
            // LOGE(">>> No device was found !");
            return ERROR_LOCAL;
    }

    // LOGE(">>> SUCCESS_LOCAL!");
    return SUCCESS_LOCAL;
}

int readframe(void){
    struct v4l2_buffer buf;
    unsigned int i;

    CLEAR (buf);
    buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    buf.memory = V4L2_MEMORY_MMAP;
    //buf.memory = V4L2_MEMORY_USERPTR;
    //LOGE("fd=%d,request=%d,buf=%d",fd,VIDIOC_DQBUF,&buf);

    if (-1 == xioctl (fd, VIDIOC_DQBUF, &buf)) {
        switch (errno) {
        case EAGAIN:
            return 0;
        case EIO:
        default:
            stopcapturing ();
            uninitdevice ();
            closedevice ();
            if(rgb) {
                free(rgb);
                rgb = NULL;
            }
            return errnoexit ("VIDIOC_DQBUF");
        }
    }

    assert (buf.index < n_buffers);
    processimage (buffers[buf.index].start);

    if (-1 == xioctl (fd, VIDIOC_QBUF, &buf))
        return errnoexit ("VIDIOC_QBUF");

    return 1;
}

(6) Convert YUYV to RGB

void processimage (const void *p){
    yuyv422toABGRY((unsigned char *)p);
}

void yuyv422toABGRY(unsigned char *src)
{
    int width=0;
    int height=0;

    width = IMG_WIDTH;
    height = IMG_HEIGHT;

    // Why this is multiplied by 2 is explained further down
    int frameSize =width*height*2;
    int i;

    if(!rgb){
        return;
    }

    int *lrgb = NULL;
    // modify by yozad.von 2019/05/21: removed the unused lybuf
    // lrgb points at the start of rgb, so every write through lrgb fills rgb.
    lrgb = &rgb[0];

    if(yuv_tbl_ready==0){
        for(i=0 ; i<256 ; i++){
            // With the clamp below, y1192_tbl[i] is 0 for every i below 16
            y1192_tbl[i] = 1192*(i-16);
            if(y1192_tbl[i]<0){
                y1192_tbl[i]=0;
            }
            v1634_tbl[i] = 1634*(i-128);
            v833_tbl[i] = 833*(i-128);
            u400_tbl[i] = 400*(i-128);
            u2066_tbl[i] = 2066*(i-128);
        }
        yuv_tbl_ready=1;
    }

    // Each pixel takes two bytes, and every iteration of this loop advances 4 bytes
    // and produces two pixels. Producing IMG_WIDTH*IMG_HEIGHT pixels therefore means
    // walking IMG_WIDTH*IMG_HEIGHT*2 bytes, hence the multiplication by 2.
    for(i=0 ; i<frameSize ; i+=4){
        unsigned char y1, y2, u, v;

        // YUYV byte order: Y1 U Y2 V
        y1 = src[i];
        u = src[i+1];
        y2 = src[i+2];
        v = src[i+3];

        int y1192_1=y1192_tbl[y1];
        int r1 = (y1192_1 + v1634_tbl[v])>>10;
        int g1 = (y1192_1 - v833_tbl[v] - u400_tbl[u])>>10;
        int b1 = (y1192_1 + u2066_tbl[u])>>10;

        int y1192_2=y1192_tbl[y2];
        int r2 = (y1192_2 + v1634_tbl[v])>>10;
        int g2 = (y1192_2 - v833_tbl[v] - u400_tbl[u])>>10;
        int b2 = (y1192_2 + u2066_tbl[u])>>10;

        // Clamp r, g and b to 255 when above 255 and to 0 when below 0.
        r1 = r1>255 ? 255 : r1<0 ? 0 : r1;
        g1 = g1>255 ? 255 : g1<0 ? 0 : g1;
        b1 = b1>255 ? 255 : b1<0 ? 0 : b1;
        r2 = r2>255 ? 255 : r2<0 ? 0 : r2;
        g2 = g2>255 ? 255 : g2<0 ? 0 : g2;
        b2 = b2>255 ? 255 : b2<0 ? 0 : b2;

        // r, g and b each take 8 bits. Store the values of both pixels in lrgb.
        *lrgb++ = 0xff000000 | b1<<16 | g1<<8 | r1;
        *lrgb++ = 0xff000000 | b2<<16 | g2<<8 | r2;
    }
}

(7) Display in the Java layer

...
try {
    if (dstRect == null) {
        // dstRect is null: read the view's width and height and initialize it
        winWidth = this.getWidth();
        winHeight = this.getHeight();
        LoggerUtils.e(TAG, ">>> winWidth "+winWidth+" "+winHeight);
        dstRect = new Rect(0, 0, winWidth, winHeight);
    }
    if (!isNoDevice) {
        if (processCamera() == 0) {
            pixeltobmp(bmp);
            Canvas canvas = holder.lockCanvas();
            if (canvas != null) {
                synchronized(bmp) {
                    // draw camera bmp on canvas
                    canvas.drawBitmap(bmp, null, dstRect, null);
                    getHolder().unlockCanvasAndPost(canvas);
                }
            }
        }else {
            isNoDevice = true;
        }
    }else {
        isQRCode = false;
        ((Main) context).exit(1);
        scanStart = false;
        cameraExists = false;
    }
} catch (Exception e1) {
    e1.printStackTrace();
}
...

4. Summary

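As mentioned earlier, some of the error handling in the open-source SimpleWebCam is flawed, so I made a few changes to it.

There is another open-source project, UVCCamera, but project and industry requirements ruled it out.

A follow-up post will cover how to decode QR codes from this camera's preview.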

5. Bugs and optimization notes

5.1 Bugs

1. A bug that crashes the app. Part of the error log is shown below; the failure is a memory error (SIGSEGV):

06-11 13:25:29.260: E/ImageProc(1760): VIDIOC_STREAMOFF error 19, No such device

06-11 13:25:29.260: I/EventHub(1572): Removing device '/dev/input/event2' due to inotify event

06-11 13:25:29.260: I/EventHub(1572): Removed device: path=/dev/input/event2 name=USB 2.0 PC Cam2 id=1 fd=90 classes=0x80000001

06-11 13:25:29.275: I/ActivityManager(1572): Start proc com.hp.android.printservice for broadcast com.hp.android.printservice/.usb.ReceiverUSBDeviceDetached: pid=3015 uid=10046 gids={50046, 3003, 1028, 1015, 1023}

06-11 13:25:29.280: I/InputReader(1572): Device removed: id=1, name='USB 2.0 PC Cam2', sources=0x00000101

06-11 13:25:29.280: E/ImageProc(1760): VIDIOC_DQBUF error 19, No such device

06-11 13:25:29.280: E/ImageProc(1760): VIDIOC_STREAMOFF error 9, Bad file number

06-11 13:25:29.280: A/libc(1760): Fatal signal 11 (SIGSEGV) at 0x00000000 (code=1), thread 2107 (pool-1-thread-1)

...

06-11 13:25:29.385: I/DEBUG(1229): *** *** *** *** *** *** *** *** *** *** *** *** *** *** *** ***

06-11 13:25:29.385: I/DEBUG(1229): Build fingerprint: 'Android/smdk4x12/smdk4x12:4.4.4/KTU84P/eng.root.20180107.235318:eng/test-keys'

06-11 13:25:29.385: I/DEBUG(1229): Revision: '0'

06-11 13:25:29.385: I/DEBUG(1229): pid: 1760, tid: 2107, name: pool-1-thread-1 >>> com.reallife.main <<<

06-11 13:25:29.385: I/DEBUG(1229): signal 11 (SIGSEGV), code 1 (SEGV_MAPERR), fault addr 00000000

...

06-11 13:25:29.460: I/DEBUG(1229): #00 pc 00001974 /data/app-lib/com.reallife.main-1/libImageProc.so (uninitdevice+35)

06-11 13:25:29.460: I/DEBUG(1229): #01 pc 0000225d /data/app-lib/com.reallife.main-1/libImageProc.so (Java_com_camera_usbcam_CameraPreview_stopCamera+8)

06-11 13:25:29.460: I/DEBUG(1229): #02 pc 0001dbcc /system/lib/libdvm.so (dvmPlatformInvoke+112)

06-11 13:25:29.460: I/DEBUG(1229): #03 pc 0004e123 /system/lib/libdvm.so (dvmCallJNIMethod(unsigned int const*, JValue*, Method const*, Thread*)+398)

06-11 13:25:29.460: I/DEBUG(1229): #04 pc 00026fe0 /system/lib/libdvm.so

06-11 13:25:29.460: I/DEBUG(1229): #05 pc 0002dfa0 /system/lib/libdvm.so (dvmMterpStd(Thread*)+76)

06-11 13:25:29.460: I/DEBUG(1229): #06 pc 0002b638 /system/lib/libdvm.so (dvmInterpret(Thread*, Method const*, JValue*)+184)

06-11 13:25:29.460: I/DEBUG(1229): #07 pc 0006057d /system/lib/libdvm.so (dvmCallMethodV(Thread*, Method const*, Object*, bool, JValue*, std::__va_list)+336)

06-11 13:25:29.460: I/DEBUG(1229): #08 pc 000605a1 /system/lib/libdvm.so (dvmCallMethod(Thread*, Method const*, Object*, JValue*, ...)+20)

06-11 13:25:29.460: I/DEBUG(1229): #09 pc 00055287 /system/lib/libdvm.so

06-11 13:25:29.460: I/DEBUG(1229): #10 pc 0000d228 /system/lib/libc.so (__thread_entry+72)

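06-11 13:25:29.460: I/DEBUG(1229): #11 pc 0000d3c0 /system/lib/libc.so (pthread_create+240)

Fix: when an operation fails, the JNI code itself calls

stopcapturing();
uninitdevice ();
closedevice ();

and the same three calls run again when the SurfaceView is torn down. By that point buffers is already NULL, so the second pass crashes. uninitdevice therefore needs a guard: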

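int uninitdevice(void)
{
    unsigned int i;

    // Added guard: repeated calls with buffers == NULL would otherwise cause a memory fault
    if (buffers == NULL)
        return SUCCESS_LOCAL;

    for (i = 0; i < n_buffers; ++i)
    ...
}

2. In the original code,

free (buffers);

is not followed by

buffers = NULL;

which leaves a dangling pointer. When the camera is opened and closed frequently, this can lead to memory errors such as

Fatal signal XX (SIGSEGV) at XXX (code=X), thread XX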
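Both fixes amount to making the cleanup idempotent: return early when there is nothing to release, and reset the pointer and the count after freeing. A minimal sketch of that pattern follows. To keep it runnable without a camera, the buffers are plain malloc'd blocks, whereas the real code holds mmap'd V4L2 buffers and would release them with munmap. `fake_init_buffers` and `release_buffers` are hypothetical names, and the `struct buffer` layout is inferred from the `start` and `length` fields used in initmmap.

```c
#include <stdlib.h>

struct buffer {
    void  *start;
    size_t length;
};

struct buffer *buffers = NULL;
unsigned int n_buffers = 0;

/* Stand-in for initmmap(): allocates `count` blocks of `length` bytes. */
int fake_init_buffers(unsigned int count, size_t length)
{
    buffers = calloc(count, sizeof(*buffers));
    if (!buffers)
        return -1;

    for (n_buffers = 0; n_buffers < count; ++n_buffers) {
        buffers[n_buffers].start = malloc(length);
        if (!buffers[n_buffers].start)
            return -1;  /* n_buffers counts only the blocks that succeeded */
        buffers[n_buffers].length = length;
    }
    return 0;
}

/* Safe to call any number of times, including after a failed init. */
int release_buffers(void)
{
    if (buffers == NULL)
        return 0;                   /* nothing to do on a repeated call */

    for (unsigned int i = 0; i < n_buffers; ++i)
        free(buffers[i].start);     /* the real code would munmap here */

    free(buffers);
    buffers = NULL;                 /* no dangling pointer left behind */
    n_buffers = 0;
    return 0;
}
```

With this shape it no longer matters whether cleanup is triggered by the error path in readframe, by stopCamera, or by both.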

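5.2 Optimization

1. Once the library has the YUV data, the original code converts YUV to RGB in processCamera, then copies the RGB data into the bitmap in pixeltobmp, and the Java layer draws the bitmap. That extra intermediate step slows the conversion down, so at higher resolutions the preview lags noticeably. I made a small change:

Remove pixeltobmp and add the following to processCamera: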

...
AndroidBitmapInfo info;
void* pixels;
int ret;

if ((ret = AndroidBitmap_getInfo(env, bitmap, &info)) < 0) {
    LOGE("AndroidBitmap_getInfo() failed ! error=%d", ret);
    return -1;
}

if (info.format != ANDROID_BITMAP_FORMAT_RGBA_8888) {
    LOGE("Bitmap format is not RGBA_8888 !");
    return -1;
}

if ((ret = AndroidBitmap_lockPixels(env, bitmap, &pixels)) < 0) {
    LOGE("AndroidBitmap_lockPixels() failed ! error=%d", ret);
    return -1;
}

// pixels points at the bitmap's pixel memory. It is passed down as colors,
// so writing through colors writes straight into the bitmap.
int res = readframeonce((int*)pixels);

AndroidBitmap_unlockPixels(env, bitmap);
...

The mirroring that used to be needed is now done inside yuyv422toABGRY, directly while converting YUV to RGB. Below is the mirroring code as it originally was in pixeltobmp; the basic idea is the same, for anyone who needs it. Put simply, a left-right mirror swaps the leftmost and rightmost pixels of each row, then the second from each end, the third, and so on.

if(mirror == 3){
    // Mirror both vertically and horizontally 2019/04/19
    for (j = height; j > 0; j--) {
        lrgb = &rgb[j*width - 1];
        for(i = 0; i < width; i++){
            *colors++ = *lrgb--;
        }
    }
}else if (mirror == 2) {
    // Vertical mirror (top-bottom) 2019/04/19
    for (j = height; j > 0; j--) {
        lrgb = &rgb[(j-1)*width];
        for(i=0 ; i < width ; i++){
            *colors++ = *lrgb++;
        }
    }
}else if (mirror == 1) {
    // Horizontal mirror (left-right) 2019/01/21
    for (j = 0; j < height; ++j) {
        lrgb = &rgb[(j+1)*width - 1];
        for(i=0 ; i < width ; i++){
            *colors++ = *lrgb--;
        }
    }
}else if (mirror == 0) {
    // Original data, copied unchanged 2019/01/21
    lrgb = &rgb[0];
    for(i=0 ; i < width*height ; i++){
        *colors++ = *lrgb++;
    }
}
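The post describes mirroring during the YUV-to-RGB conversion but only lists the older pixeltobmp version, so here is a rough sketch of how the one-pass variant could look. It is not the author's actual code: `yuyv_to_abgr_mirrored`, `yuv_to_abgr` and `shift_clamp` are hypothetical names, the coefficients are computed inline instead of through the lookup tables, the mirror values 0 to 3 are treated as two bit flags (1 = left-right, 2 = top-bottom), and the width is assumed to be even.

```c
#include <stddef.h>
#include <stdint.h>

/* Scales a fixed-point value down by 2^10 and clamps it to 0..255. */
static int shift_clamp(int v)
{
    if (v < 0)
        return 0;
    v >>= 10;
    return v > 255 ? 255 : v;
}

/* One YUV sample to a 0xAABBGGRR pixel, same coefficients as the tables. */
static uint32_t yuv_to_abgr(int y, int u, int v)
{
    int c = 1192 * (y - 16);

    if (c < 0)
        c = 0;
    int r = shift_clamp(c + 1634 * (v - 128));
    int g = shift_clamp(c - 833 * (v - 128) - 400 * (u - 128));
    int b = shift_clamp(c + 2066 * (u - 128));
    return 0xff000000u | ((uint32_t)b << 16) | ((uint32_t)g << 8) | (uint32_t)r;
}

/*
 * Converts a YUYV frame (2 bytes per pixel) into dst, writing each pixel
 * directly at its mirrored position so no second copy pass is needed.
 */
void yuyv_to_abgr_mirrored(const unsigned char *src, uint32_t *dst,
                           int width, int height, int mirror)
{
    for (int y = 0; y < height; ++y) {
        int dy = (mirror & 2) ? height - 1 - y : y;
        const unsigned char *row = src + (size_t)y * width * 2;
        uint32_t *out = dst + (size_t)dy * width;

        for (int x = 0; x < width; x += 2) {
            /* Four bytes Y1 U Y2 V describe the pixels at x and x + 1. */
            int y1 = row[x * 2], u = row[x * 2 + 1];
            int y2 = row[x * 2 + 2], v = row[x * 2 + 3];
            int dx1 = (mirror & 1) ? width - 1 - x : x;
            int dx2 = (mirror & 1) ? width - 2 - x : x + 1;

            out[dx1] = yuv_to_abgr(y1, u, v);
            out[dx2] = yuv_to_abgr(y2, u, v);
        }
    }
}
```

Passing the locked bitmap pixels as `dst` would remove both the intermediate rgb buffer and the copy loops above, at the cost of non-sequential writes when a mirror flag is set.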