[OpenContrail] Integrating OpenContrail with k8s

1.预置条件

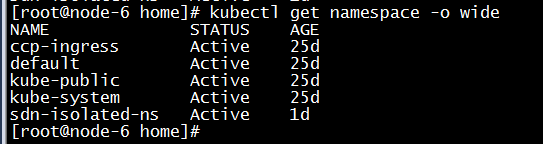

部署K8S集群,K8S集群工作正常;

2.部署sdn控制器

控制器集群和K8S集群,使用新的数据网卡通信,使用fab安装。

3.部署sdn前k8s集群配置

3.1 修改k8s-master节点配置

1) Stop Calico

kubectl delete daemonset calico-node -n kube-system

Delete the related Calico configuration files on the master node:

/etc/cni/net.d/10-calico.conflist

/opt/cni/bin/calico

/opt/cni/bin/calico-ipam
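The cleanup above has to be repeated on every node, so it is handy to script it. A minimal sketch in Python; `remove_calico_cni` and its `root` argument are hypothetical names introduced here (passing a scratch directory as `root` lets you try it safely), and it deletes only the three files listed above:

```python
import os

# The Calico CNI files listed above, relative to the filesystem root.
CALICO_FILES = [
    "etc/cni/net.d/10-calico.conflist",
    "opt/cni/bin/calico",
    "opt/cni/bin/calico-ipam",
]


def remove_calico_cni(root="/"):
    """Delete the Calico CNI files under `root` and return the paths removed.

    Files that are already gone are skipped, so running it twice is harmless.
    """
    removed = []
    for rel in CALICO_FILES:
        path = os.path.join(root, rel)
        if os.path.isfile(path):
            os.remove(path)
            removed.append(path)
    return removed
```

On a real node, run it as root with the default `root="/"` and print the returned list to see what was deleted.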

2) Edit /etc/kubernetes/kubelet.env

KUBELET_ADDRESS="--address=0.0.0.0 --node-ip=12.12.12.182", where node-ip is the IP of the sdn-vrouter data NIC on this node
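Setting `--node-ip` by hand on each node is easy to get wrong, so here is the same edit as a small Python sketch. It assumes kubelet.env contains a single `KUBELET_ADDRESS="..."` line in the form shown above; `set_node_ip` is a hypothetical helper that returns the new file content instead of writing it:

```python
import ipaddress
import re

# Matches the whole KUBELET_ADDRESS=... line, wherever it sits in the file.
_ADDRESS_LINE = re.compile(r"^KUBELET_ADDRESS=.*$", re.MULTILINE)


def set_node_ip(env_text, node_ip):
    """Return kubelet.env content whose KUBELET_ADDRESS uses `node_ip`."""
    ipaddress.ip_address(node_ip)  # raises ValueError for a malformed address
    new_line = 'KUBELET_ADDRESS="--address=0.0.0.0 --node-ip=%s"' % node_ip
    if _ADDRESS_LINE.search(env_text):
        # A function replacement avoids re's backslash processing of new_line.
        return _ADDRESS_LINE.sub(lambda _m: new_line, env_text, count=1)
    # No KUBELET_ADDRESS line yet: append one.
    if env_text and not env_text.endswith("\n"):
        env_text += "\n"
    return env_text + new_line + "\n"
```

Read /etc/kubernetes/kubelet.env, pass its content with 12.12.12.182 on the master (12.12.12.181 on the slave), and write the result back before restarting kubelet.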

3) Edit /usr/local/bin/kubelet and add the following volume mount:

-v /var/lib/contrail:/var/lib/contrail:shared
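A sketch of that edit, assuming /usr/local/bin/kubelet is a wrapper that starts the kubelet with `docker run` and lists one `-v` flag per line, each ending in a line-continuation backslash (check your own wrapper first; this layout is an assumption). `add_contrail_mount` is a hypothetical helper; it inserts the flag after the last existing `-v` line and leaves the script unchanged if the flag is already there:

```python
MOUNT = "-v /var/lib/contrail:/var/lib/contrail:shared"


def add_contrail_mount(script_text):
    """Insert the Contrail volume flag into a docker-run kubelet wrapper."""
    lines = script_text.splitlines(True)
    if any(MOUNT in line for line in lines):
        return script_text  # already present: nothing to do
    last = None
    for i, line in enumerate(lines):
        if line.lstrip().startswith("-v "):
            last = i  # remember the last volume flag
    if last is None:
        raise ValueError("no '-v' volume flags found; edit the wrapper by hand")
    # Reuse the indentation of the last -v line and keep the continuation backslash.
    indent = lines[last][: len(lines[last]) - len(lines[last].lstrip())]
    lines.insert(last + 1, indent + MOUNT + " \\\n")
    return "".join(lines)
```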

4) Move kube-proxy.manifest to another directory so that the kube-proxy service is no longer started (once the SDN takes over, iptables is no longer involved):

mv /etc/kubernetes/manifests/kube-proxy.manifest /home/

5) Restart the kubelet service

service kubelet restart

3.2 Modify the k8s-slave node configuration

1) Delete the related Calico configuration files on the slave node:

/etc/cni/net.d/10-calico.conflist

/opt/cni/bin/calico

/opt/cni/bin/calico-ipam

2) Edit /etc/kubernetes/kubelet.env

KUBELET_ADDRESS="--address=0.0.0.0 --node-ip=12.12.12.181", where node-ip is the IP of the sdn-vrouter data NIC on this node

3) Edit /usr/local/bin/kubelet and add the following volume mount:

-v /var/lib/contrail:/var/lib/contrail:shared

4) Move kube-proxy.manifest to another directory so that the kube-proxy service is no longer started (once the SDN takes over, iptables is no longer involved):

mv /etc/kubernetes/manifests/kube-proxy.manifest /home/

5) Restart the kubelet service

service kubelet restart

4. Deploy the k8s SDN components

4.1 Deploy the SDN on the k8s-master node

```yaml
# contrail-host-centos-master.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: contrail-config
  namespace: kube-system
data:
  global-config: |-
    [GLOBAL]
    cloud_orchestrator = kubernetes
    sandesh_ssl_enable = False
    enable_config_service = True
    enable_control_service = True
    enable_webui_service = True
    introspect_ssl_enable = False
    # IP of the SDN controller cluster
    config_nodes = 172.16.161.184
    controller_nodes = 172.16.161.184
    analytics_nodes = 172.16.161.184
    analyticsdb_nodes = 172.16.161.184
    opscenter_ip = 172.16.161.184
    # analyticsdb_minimum_diskgb = 50
    # configdb_minimum_diskgb = 5
  agent-config: |-
    [AGENT]
    compile_vrouter_module = False
    # Optional ctrl_data_network, if different from management
    # (here: the dedicated vrouter-agent data subnet and data NIC)
    ctrl_data_network = "12.12.12.0/24"
    vrouter_physical_interface = ens36
  kubemanager-config: |-
    [KUBERNETES]
    # cluster_name can be found in /etc/kubernetes/kubelet.env
    cluster_name = cluster.local
    #cluster_project = {'domain': 'default-domain', 'project': 'k8s'}
    cluster_network = {}
    # Set as needed: service_subnets is the k8s service IP pool,
    # pod_subnets is the default pod subnet
    service_subnets = 10.233.64.0/18
    pod_subnets = 172.168.1.0/24
    # IP of the SDN data NIC on the master node
    api_server = 12.12.12.182
    vnc_public_fip_pool = {'domain':'default-domain','project':'admin','network':'public','name':'default'}
  kubernetes-agent-config: |-
    [AGENT]
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: contrail-kube-manager
  namespace: kube-system
  labels:
    app: contrail-kube-manager
spec:
  template:
    metadata:
      labels:
        app: contrail-kube-manager
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: "opencontrail.org/controller"
                operator: In
                values:
                - "true"
            - matchExpressions:
              # This label places contrail-kube-manager on the master node
              # (check labels with: kubectl get nodes --show-labels)
              - key: "node-role.kubernetes.io/master"
                operator: Exists
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      automountServiceAccountToken: false
      hostNetwork: true
      containers:
      - name: contrail-kube-manager
        # Name of the contrail-kube-manager image as listed by `docker images`
        image: "contrail-kube-manager-centos7:v5.1"
        imagePullPolicy: ""
        securityContext:
          privileged: true
        volumeMounts:
        - mountPath: /tmp/contrailctl
          name: tmp-contrail-config
        - mountPath: /tmp/serviceaccount
          name: pod-secret
      volumes:
      - name: tmp-contrail-config
        configMap:
          name: contrail-config
          items:
          - key: global-config
            path: global.conf
          - key: kubemanager-config
            path: kubemanager.conf
      - name: pod-secret
        secret:
          secretName: contrail-kube-manager-token
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: contrail-agent
  namespace: kube-system
  labels:
    app: contrail-agent
spec:
  template:
    metadata:
      labels:
        app: contrail-agent
    spec:
      #Disable affinity for single node setup
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: "opencontrail.org/controller"
                operator: In
                values:
                - "true"
            - matchExpressions:
              # The contrail-vrouter-agent container is also deployed on the k8s master
              - key: "node-role.kubernetes.io/master"
                operator: Exists
      #Enable tolerations for single node setup
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      automountServiceAccountToken: false
      hostNetwork: true
      initContainers:
      # Runs once (initContainer) to install the SDN CNI plugin
      - name: contrail-kubernetes-agent
        # Name of the contrail-kubernetes-agent image as listed by `docker images`
        image: "contrail-kubernetes-agent-centos7:v5.1"
        securityContext:
          privileged: true
        volumeMounts:
        - mountPath: /tmp/contrailctl
          name: tmp-contrail-config
        - mountPath: /var/lib/contrail/
          name: var-lib-contrail
        - mountPath: /host/etc_cni
          name: etc-cni
        - mountPath: /host/opt_cni_bin
          name: opt-cni-bin
        - mountPath: /var/log/contrail/cni
          name: var-log-contrail-cni
      containers:
      - name: contrail-agent
        # Name of the contrail-agent-centos7 image as listed by `docker images`
        image: "30.20.0.2:7070/flexvisor/contrail-agent-centos7:v5.1"
        imagePullPolicy: ""
        securityContext:
          privileged: true
        volumeMounts:
        - mountPath: /usr/src
          name: usr-src
        - mountPath: /lib/modules
          name: lib-modules
        - mountPath: /tmp/contrailctl
          name: tmp-contrail-config
        - mountPath: /var/lib/contrail/
          name: var-lib-contrail
        - mountPath: /host/etc_cni
          name: etc-cni
        - mountPath: /host/opt_cni_bin
          name: opt-cni-bin
        # This is a workaround just to make sure the directory is created on host
        - mountPath: /var/log/contrail/cni
          name: var-log-contrail-cni
        - mountPath: /tmp/serviceaccount
          name: pod-secret
      volumes:
      - name: tmp-contrail-config
        configMap:
          name: contrail-config
          items:
          - key: global-config
            path: global.conf
          - key: agent-config
            path: agent.conf
          - key: kubemanager-config
            path: kubemanager.conf
          - key: kubernetes-agent-config
            path: kubernetesagent.conf
      - name: pod-secret
        secret:
          secretName: contrail-kube-manager-token
      - name: usr-src
        hostPath:
          path: /usr/src
      - name: usr-src-kernels
        hostPath:
          path: /usr/src/kernels
      - name: lib-modules
        hostPath:
          path: /lib/modules
      - name: var-lib-contrail
        hostPath:
          path: /var/lib/contrail/
      - name: etc-cni
        hostPath:
          path: /etc/cni
      - name: opt-cni-bin
        hostPath:
          path: /opt/cni/bin
      - name: var-log-contrail-cni
        hostPath:
          path: /var/log/contrail/cni/
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: contrail-kube-manager
  namespace: kube-system
rules:
  - apiGroups: ["*"]
    resources: ["*"]
    verbs: ["*"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: contrail-kube-manager
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: contrail-kube-manager
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: contrail-kube-manager
subjects:
- kind: ServiceAccount
  name: contrail-kube-manager
  namespace: kube-system
---
apiVersion: v1
kind: Secret
metadata:
  name: contrail-kube-manager-token
  namespace: kube-system
  annotations:
    kubernetes.io/service-account.name: contrail-kube-manager
type: kubernetes.io/service-account-token
```

kubectl apply -f contrail-host-centos-master.yaml

This deploys the corresponding SDN containers on the master node.
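To check the result from the command line, here is a sketch that lists the contrail-* pods in kube-system together with their node and phase. It assumes kubectl is on PATH and configured where it runs; `contrail_pods` and `fetch_kube_system_pods` are hypothetical helper names:

```python
import json
import subprocess


def contrail_pods(pods_json):
    """Map each contrail-* pod in `kubectl get pods -o json` output to (node, phase)."""
    result = {}
    for pod in json.loads(pods_json)["items"]:
        name = pod["metadata"]["name"]
        if name.startswith("contrail-"):
            result[name] = (pod["spec"].get("nodeName"), pod["status"].get("phase"))
    return result


def fetch_kube_system_pods():
    """Run kubectl and return the JSON description of all kube-system pods."""
    return subprocess.check_output(
        ["kubectl", "get", "pods", "-n", "kube-system", "-o", "json"]
    ).decode()
```

Calling `contrail_pods(fetch_kube_system_pods())` on the master should show a contrail-agent pod and a contrail-kube-manager pod on node-6; anything other than `Running` deserves a look with `kubectl describe pod`.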

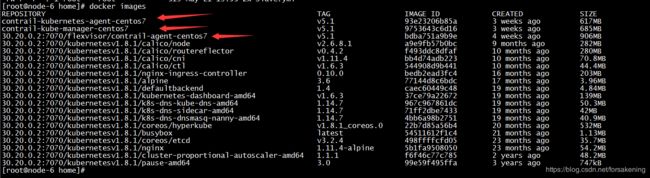

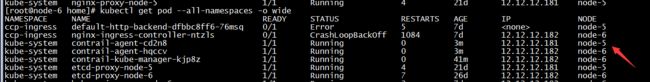

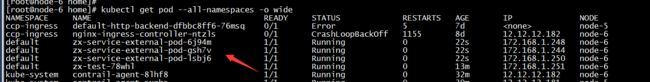

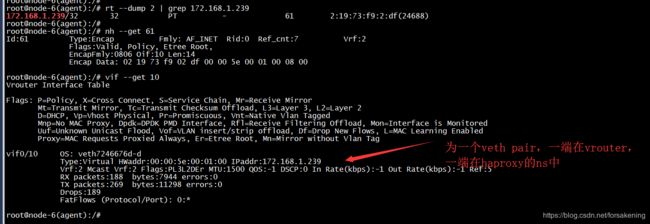

As the screenshots above show, two pods, contrail-agent and contrail-kube-manager, are created on the k8s-master node (node-6).

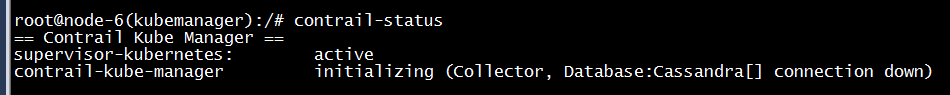

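On the master node, run:

docker exec -it $(docker ps | grep k8s_contrail-kube-manager | awk '{print $1}') bash

to enter the contrail-kube-manager container, then run contrail-status to check the state of contrail-kube-manager.

At the time of writing (2019-06-11, release 5.1), contrail-kube-manager does not come up correctly because the generated /etc/contrail/contrail-kubernetes.conf is wrong, so fix it manually: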

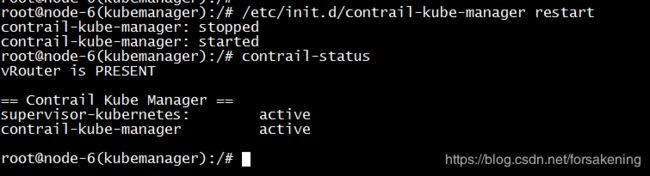

Restart contrail-kube-manager and check its status again:

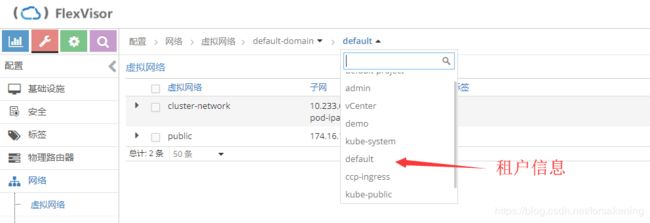

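Once contrail-kube-manager is running normally, the corresponding tenant (project) information is synchronized to Contrail, and the matching service-network and pod-network are created.

4.2 Deploy the SDN on the k8s-slave nodes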

The YAML file for the slave nodes:

```yaml
# contrail-host-centos-slave.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: contrail-config
  namespace: kube-system
data:
  global-config: |-
    [GLOBAL]
    cloud_orchestrator = kubernetes
    sandesh_ssl_enable = False
    enable_config_service = True
    enable_control_service = True
    enable_webui_service = True
    introspect_ssl_enable = False
    config_nodes = 172.16.161.184
    controller_nodes = 172.16.161.184
    analytics_nodes = 172.16.161.184
    analyticsdb_nodes = 172.16.161.184
    opscenter_ip = 172.16.161.184
    # analyticsdb_minimum_diskgb = 50
    # configdb_minimum_diskgb = 5
  agent-config: |-
    [AGENT]
    compile_vrouter_module = False
    # Optional ctrl_data_network, if different from management
    ctrl_data_network = "12.12.12.0/24"
    vrouter_physical_interface = ens36
  kubemanager-config: |-
    [KUBERNETES]
    cluster_name = cluster.local
    #cluster_project = {'domain': 'default-domain', 'project': 'default'}
    #cluster_project = {'domain': 'default-domain', 'project': 'k8s'}
    cluster_network = {}
    service_subnets = 10.233.64.0/18
    pod_subnets = 172.168.1.0/24
    api_server = 12.12.12.182
  kubernetes-agent-config: |-
    [AGENT]
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: contrail-agent
  namespace: kube-system
  labels:
    app: contrail-agent
spec:
  template:
    metadata:
      labels:
        app: contrail-agent
    spec:
      #Disable affinity for single node setup
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: "opencontrail.org/controller"
                operator: In
                values:
                - "true"
            - matchExpressions:
              - key: "node-role.kubernetes.io/node"
                operator: Exists
      #Enable tolerations for single node setup
      tolerations:
      - key: node-role.kubernetes.io/node
        operator: Exists
        effect: NoSchedule
      automountServiceAccountToken: false
      hostNetwork: true
      initContainers:
      - name: contrail-kubernetes-agent
        image: "contrail-kubernetes-agent-centos7:v5.1"
        securityContext:
          privileged: true
        volumeMounts:
        - mountPath: /tmp/contrailctl
          name: tmp-contrail-config
        - mountPath: /var/lib/contrail/
          name: var-lib-contrail
        - mountPath: /host/etc_cni
          name: etc-cni
        - mountPath: /host/opt_cni_bin
          name: opt-cni-bin
        - mountPath: /var/log/contrail/cni
          name: var-log-contrail-cni
      containers:
      - name: contrail-agent
        image: "30.20.0.2:7070/flexvisor/contrail-agent-centos7:v5.1"
        imagePullPolicy: ""
        securityContext:
          privileged: true
        volumeMounts:
        - mountPath: /usr/src
          name: usr-src
        - mountPath: /lib/modules
          name: lib-modules
        - mountPath: /tmp/contrailctl
          name: tmp-contrail-config
        - mountPath: /var/lib/contrail/
          name: var-lib-contrail
        - mountPath: /host/etc_cni
          name: etc-cni
        - mountPath: /host/opt_cni_bin
          name: opt-cni-bin
        # This is a workaround just to make sure the directory is created on host
        - mountPath: /var/log/contrail/cni
          name: var-log-contrail-cni
        - mountPath: /tmp/serviceaccount
          name: pod-secret
      volumes:
      - name: tmp-contrail-config
        configMap:
          name: contrail-config
          items:
          - key: global-config
            path: global.conf
          - key: agent-config
            path: agent.conf
          - key: kubemanager-config
            path: kubemanager.conf
          - key: kubernetes-agent-config
            path: kubernetesagent.conf
      - name: pod-secret
        secret:
          secretName: contrail-kube-manager-token
      - name: usr-src
        hostPath:
          path: /usr/src
      - name: usr-src-kernels
        hostPath:
          path: /usr/src/kernels
      - name: lib-modules
        hostPath:
          path: /lib/modules
      - name: var-lib-contrail
        hostPath:
          path: /var/lib/contrail/
      - name: etc-cni
        hostPath:
          path: /etc/cni
      - name: opt-cni-bin
        hostPath:
          path: /opt/cni/bin
      - name: var-log-contrail-cni
        hostPath:
          path: /var/log/contrail/cni/
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: contrail-kube-manager
  namespace: kube-system
rules:
  - apiGroups: ["*"]
    resources: ["*"]
    verbs: ["*"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: contrail-kube-manager
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: contrail-kube-manager
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: contrail-kube-manager
subjects:
- kind: ServiceAccount
  name: contrail-kube-manager
  namespace: kube-system
---
apiVersion: v1
kind: Secret
metadata:
  name: contrail-kube-manager-token
  namespace: kube-system
  annotations:
    kubernetes.io/service-account.name: contrail-kube-manager
type: kubernetes.io/service-account-token
```

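kubectl apply -f contrail-host-centos-slave.yaml

This deploys the SDN agent on the slave nodes. Both manifests define the same ConfigMap (kube-system/contrail-config), so applying this file replaces the ConfigMap data created by the master manifest; keep the two [KUBERNETES] sections consistent (the slave version, for example, has no vnc_public_fip_pool line).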

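5. Adjust the k8s configuration after deploying the SDN

1) Add the following routes on every k8s node:

route add -net 10.233.64.0/18 dev vhost0 (lets the host reach the k8s service-network subnet)

route add -net 172.168.1.0/24 dev vhost0 (lets the host reach the k8s pod-network subnet; for example, the kube-dns health check requires the host to reach the DNS pod IP)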
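Both routes follow directly from the subnets configured in the `[KUBERNETES]` section of the ConfigMap, so they can be generated from it. A sketch under that assumption (comments kept on their own lines in the INI text); `host_routes` is a hypothetical helper and `vhost0` is the interface used above:

```python
import configparser
import ipaddress

# The [KUBERNETES] section from the ConfigMap above (comments removed).
KUBEMANAGER_CONF = """
[KUBERNETES]
cluster_name = cluster.local
cluster_network = {}
service_subnets = 10.233.64.0/18
pod_subnets = 172.168.1.0/24
api_server = 12.12.12.182
"""


def host_routes(conf_text, dev="vhost0"):
    """Build `route add` commands for the service and pod subnets."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    section = parser["KUBERNETES"]
    commands = []
    for key in ("service_subnets", "pod_subnets"):
        # ip_network() rejects malformed values and values with host bits set.
        net = ipaddress.ip_network(section[key].strip())
        commands.append("route add -net %s dev %s" % (net, dev))
    return commands


for cmd in host_routes(KUBEMANAGER_CONF):
    print(cmd)
```

Routes added with `route add` do not survive a reboot, so they also need to go into the node's persistent network configuration.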

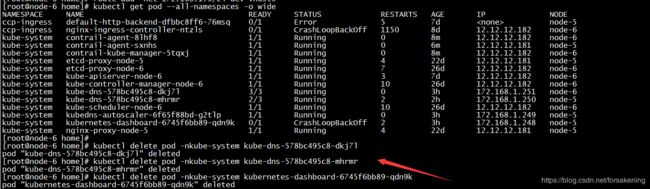

2) Recreate the DNS, dashboard, and other existing pods

6. Verify the integration

1) Check the DNS service

Create a pod in the default namespace and run nslookup on a k8s service domain name inside it to see whether the correct IP is returned.

```console
[root@node-6 home]# cat zx-master.yml
apiVersion: v1
kind: ReplicationController
metadata:
  name: zx-test
spec:
  replicas: 1
  template:
    metadata:
      labels:
        run: zx-test
    spec:
      containers:
      - name: zx-test
        image: 30.20.0.2:7070/kubernetesv1.8.1/nginx:1.11.4-alpine
      nodeSelector:
        slave: "k8master"
[root@node-6 home]# kubectl apply -f zx-master.yml
replicationcontroller "zx-test" created
```
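To make the DNS check repeatable, here is a sketch that parses the output of `nslookup kubernetes.default` run inside the test pod (for example `kubectl exec <pod-name> -- nslookup kubernetes.default`, where `<pod-name>` is a placeholder) and checks that every answer address lies in the service subnet 10.233.64.0/18. It assumes the busybox nslookup layout (a `Server:` block followed by a `Name:` block); `answer_ips` and `resolves_into` are hypothetical helpers:

```python
import ipaddress
import re

IPV4 = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3})\b")


def answer_ips(nslookup_output):
    """Return the IPv4 addresses after the first 'Name:' line (the answer part)."""
    _, sep, answer = nslookup_output.partition("Name:")
    if not sep:
        return []  # no answer block: the name did not resolve
    return [ipaddress.ip_address(ip) for ip in IPV4.findall(answer)]


def resolves_into(nslookup_output, subnet):
    """True if the name resolved and every answer address lies in `subnet`."""
    ips = answer_ips(nslookup_output)
    net = ipaddress.ip_network(subnet)
    return bool(ips) and all(ip in net for ip in ips)
```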

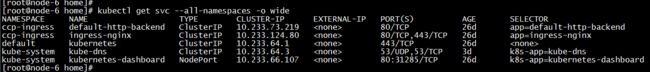

2) Check Services

- On the SDN side, create a public network used to bind the service's external IP.

- In the contrail-kube-manager configuration, set public_fip_pool = {'domain':'default-domain', 'project':'admin', 'network':'public', 'name':'default'} and restart the contrail-kube-manager process.
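The public_fip_pool value is a Python-style dict naming the floating-IP pool by domain, project, virtual network and pool name, which together form the pool's fully qualified (FQ) name in Contrail. A sketch that parses and checks such a value before restarting contrail-kube-manager; `fip_pool_fq_name` is a hypothetical helper:

```python
import ast

# Order of the FQ name components: domain, project, virtual network, pool.
REQUIRED_KEYS = ("domain", "project", "network", "name")


def fip_pool_fq_name(value):
    """Turn a public_fip_pool value into the floating-ip-pool FQ name list."""
    pool = ast.literal_eval(value)  # safe: only evaluates Python literals
    if not isinstance(pool, dict):
        raise ValueError("public_fip_pool must be a dict literal")
    missing = [k for k in REQUIRED_KEYS if k not in pool]
    if missing:
        raise ValueError("public_fip_pool is missing keys: %s" % ", ".join(missing))
    return [pool[k] for k in REQUIRED_KEYS]
```

For the value above it returns `['default-domain', 'admin', 'public', 'default']`, which can be passed to vnc_api's `fq_name_to_id('floating-ip-pool', ...)` (the same call the appendix script uses for projects) to confirm that the pool exists.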

Create a service with an external IP:

```console
[root@node-6 home]# cat zx-service-external.yml
kind: Service
apiVersion: v1
metadata:
  name: zx-external-svc
spec:
  #type: LoadBalancer
  externalIPs: [174.16.161.6]
  selector:
    app: zx-external-svc
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 80
[root@node-6 home]# kubectl apply -f zx-service-external.yml
service "zx-external-svc" created
```

Create the pods behind this service:

```console
[root@node-6 home]# cat zx-service-external-master-rc.yml
apiVersion: v1
kind: ReplicationController
metadata:
  name: zx-service-external-pod
spec:
  replicas: 3
  template:
    metadata:
      name: zx-service-external-pod
      labels:
        app: zx-external-svc
    spec:
      containers:
      - name: zx-service-external-pod
        image: 30.20.0.2:7070/kubernetesv1.8.1/nginx:1.11.4-alpine
      nodeSelector:
        slave: "k8master"
[root@node-6 home]# kubectl apply -f zx-service-external-master-rc.yml
replicationcontroller "zx-service-external-pod" created
```

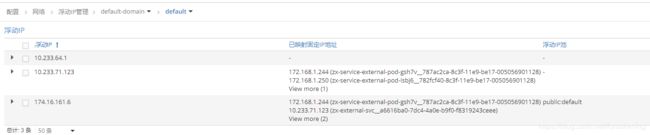

On the SDN side, this looks as follows:

The service IP and the public IP are each bound to every endpoint in the form of floating IPs.

Test results:

3) Check Namespaces

Create an isolated namespace from a YAML file:

```console
[root@node-6 home]# cat zx-iso-ns.yml
apiVersion: v1
kind: Namespace
metadata:
  name: "isolated-sdn-ns"
  annotations:
    "opencontrail.org/isolation": "true"
[root@node-6 home]# kubectl apply -f zx-iso-ns.yml
namespace "isolated-sdn-ns" created
```

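At this point, a project corresponding to the namespace is synchronized on the SDN side, and a virtual network is created for it that uses the same IPAM as the global pod-ipam.

Although it uses pod-ipam, the isolation attribute means that, by default, pods created in this namespace cannot communicate with pods in the default namespace.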

Create a pod on the master node:

```console
[root@node-6 home]# cat zx-master-ns.yml
apiVersion: v1
kind: ReplicationController
metadata:
  name: zx-test
  namespace: isolated-sdn-ns
spec:
  replicas: 1
  template:
    metadata:
      labels:
        run: zx-test
    spec:
      containers:
      - name: zx-test
        image: 30.20.0.2:7070/kubernetesv1.8.1/nginx:1.11.4-alpine
      nodeSelector:
        slave: "k8master"
[root@node-6 home]# kubectl apply -f zx-master-ns.yml
replicationcontroller "zx-test" created
```

Create a pod on the slave node:

```console
[root@node-6 home]# cat zx-slave-ns.yml
apiVersion: v1
kind: ReplicationController
metadata:
  name: zx-test-slave
  namespace: isolated-sdn-ns
spec:
  replicas: 1
  template:
    metadata:
      labels:
        run: zx-test-slave
    spec:
      containers:
      - name: zx-test-slave
        image: 30.20.0.2:7070/kubernetesv1.8.1/nginx:1.11.4-alpine
      nodeSelector:
        slave: "k8slave"
[root@node-6 home]# kubectl apply -f zx-slave-ns.yml
replicationcontroller "zx-test-slave" created
```

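On the SDN side, this isolation is implemented with security groups. For pods in different namespaces to communicate, add a k8s NetworkPolicy; Contrail also implements NetworkPolicies as security groups.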
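As a sketch of such a policy (not verified against how Contrail 5.1 translates it into security groups), the function below builds a standard networking.k8s.io/v1 NetworkPolicy that admits traffic into every pod of isolated-sdn-ns from namespaces carrying a given label. The policy name and the `name=default` label are hypothetical; the default namespace has to be labelled first, and `kubectl apply -f` accepts the printed JSON:

```python
import json


def allow_from_namespace(policy_name, target_ns, source_ns_labels):
    """Build a NetworkPolicy letting pods in namespaces that match
    `source_ns_labels` reach every pod in `target_ns`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": policy_name, "namespace": target_ns},
        "spec": {
            "podSelector": {},  # empty selector: applies to all pods in target_ns
            "policyTypes": ["Ingress"],
            "ingress": [
                {"from": [{"namespaceSelector": {"matchLabels": source_ns_labels}}]}
            ],
        },
    }


# Hypothetical label, added beforehand with:
#   kubectl label namespace default name=default
policy = allow_from_namespace("allow-from-default", "isolated-sdn-ns", {"name": "default"})
print(json.dumps(policy, indent=2))
```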

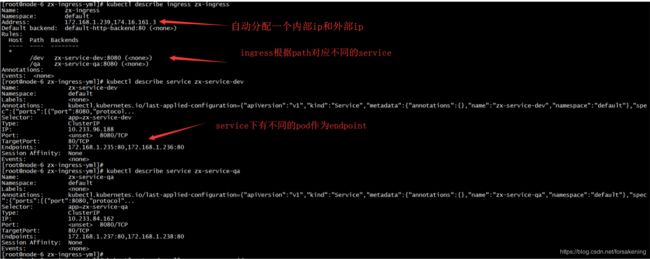

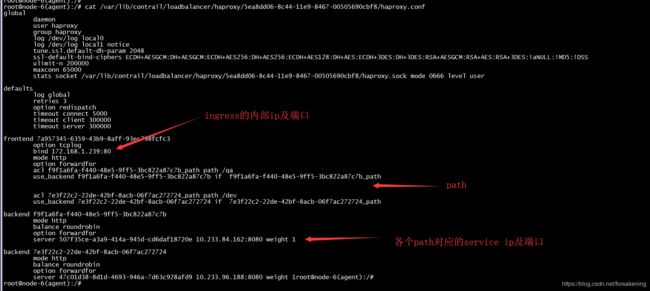

4) Check Ingress

Create the ingress and its services and pods from YAML files:

```console
[root@node-6 zx-ingress-yml]# cat zx-ingress.yml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: zx-ingress
spec:
  rules:
  - host: ingress.zx-service.com
  - http:
      paths:
      - path: /dev
        backend:
          serviceName: zx-service-dev
          servicePort: 8080
      - path: /qa
        backend:
          serviceName: zx-service-qa
          servicePort: 8080
[root@node-6 zx-ingress-yml]# cat zx-service-dev.yml
kind: Service
apiVersion: v1
metadata:
  name: zx-service-dev
spec:
  selector:
    app: zx-service-dev
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 80
[root@node-6 zx-ingress-yml]# cat zx-service-qa.yml
kind: Service
apiVersion: v1
metadata:
  name: zx-service-qa
spec:
  selector:
    app: zx-service-qa
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 80
[root@node-6 zx-ingress-yml]# cat zx-service-dev-rc.yml
apiVersion: v1
kind: ReplicationController
metadata:
  name: zx-service-dev-pod
spec:
  replicas: 2
  template:
    metadata:
      name: zx-service-dev-pod
      labels:
        app: zx-service-dev
    spec:
      containers:
      - name: zx-service-dev-pod
        image: 30.20.0.2:7070/kubernetesv1.8.1/nginx:1.11.4-alpine
[root@node-6 zx-ingress-yml]# cat zx-service-qa-rc.yml
apiVersion: v1
kind: ReplicationController
metadata:
  name: zx-service-qa-pod
spec:
  replicas: 2
  template:
    metadata:
      name: zx-service-qa-pod
      labels:
        app: zx-service-qa
    spec:
      containers:
      - name: zx-service-qa-pod
        image: 30.20.0.2:7070/kubernetesv1.8.1/nginx:1.11.4-alpine
[root@node-6 zx-ingress-yml]# kubectl apply -f zx-ingress.yml
ingress "zx-ingress" created
[root@node-6 zx-ingress-yml]# kubectl apply -f zx-service-dev.yml
service "zx-service-dev" created
[root@node-6 zx-ingress-yml]# kubectl apply -f zx-service-qa.yml
service "zx-service-qa" created
[root@node-6 zx-ingress-yml]# kubectl apply -f zx-service-qa-rc.yml
replicationcontroller "zx-service-qa-pod" created
[root@node-6 zx-ingress-yml]# kubectl apply -f zx-service-dev-rc.yml
replicationcontroller "zx-service-dev-pod" created
```

Note that, as written, `rules` contains two separate entries (one with only `host`, one with only `http`), so the /dev and /qa paths apply to any host rather than only to ingress.zx-service.com.
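To see how the Ingress rules are interpreted, a sketch that flattens an Ingress (the JSON from `kubectl get ingress zx-ingress -o json`, with the extensions/v1beta1 field names used above) into (host, path, service, port) tuples, where `*` stands for a rule without a host. `ingress_routes` is a hypothetical helper. Applied to zx-ingress, it shows that /dev and /qa belong to a rule without a host, because `host` and `http` are written as two separate list items:

```python
import json


def ingress_routes(ingress_json):
    """Flatten one Ingress object into (host, path, serviceName, servicePort) tuples."""
    ing = json.loads(ingress_json)
    routes = []
    for rule in ing["spec"].get("rules", []):
        host = rule.get("host", "*")  # a rule without host matches every host
        for p in rule.get("http", {}).get("paths", []):
            backend = p["backend"]
            # An omitted path matches all paths; it is shown here as "/".
            routes.append((host, p.get("path", "/"), backend["serviceName"], backend["servicePort"]))
    return routes
```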

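On the SDN side, this looks as follows:

Appendix:

1) If the integration is removed after it has been set up, leftover objects may remain in Contrail. Use the following Python script to clean them up: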

```python
# Purge Contrail objects left behind by contrail-kube-manager.
# Requires the vnc_api package shipped with Contrail (Python 2 in release 5.1);
# VncApi() with no arguments takes its connection settings from the default
# vnc_api_lib.ini.
from __future__ import print_function

import vnc_api.vnc_api

# Contrail projects to purge; adjust to the project names in your environment.
PROJECT_PURGE = [
    'k8s',
    'default',
    'vCenter',
    'demo',
    'kube-system',
    'ccp-ingress',
    'kube-public',
    'sdn-isolated-ns',
]

# (label, <type>s_list method, response key, <type>_delete method),
# processed in this order for each project.
RESOURCES = [
    ('LB Member', 'loadbalancer_members_list', 'loadbalancer-members', 'loadbalancer_member_delete'),
    ('LB Pool', 'loadbalancer_pools_list', 'loadbalancer-pools', 'loadbalancer_pool_delete'),
    ('LB Listener', 'loadbalancer_listeners_list', 'loadbalancer-listeners', 'loadbalancer_listener_delete'),
    ('LB', 'loadbalancers_list', 'loadbalancers', 'loadbalancer_delete'),
    ('FIP-Pool', 'floating_ip_pools_list', 'floating-ip-pools', 'floating_ip_pool_delete'),
    ('Instance IP', 'instance_ips_list', 'instance-ips', 'instance_ip_delete'),
    ('FIP', 'floating_ips_list', 'floating-ips', 'floating_ip_delete'),
    ('VM-Intf', 'virtual_machine_interfaces_list', 'virtual-machine-interfaces', 'virtual_machine_interface_delete'),
    ('VM', 'virtual_machines_list', 'virtual-machines', 'virtual_machine_delete'),
    ('NetWork', 'virtual_networks_list', 'virtual-networks', 'virtual_network_delete'),
    ('NetPolicy', 'network_policys_list', 'network-policys', 'network_policy_delete'),
    ('SG', 'security_groups_list', 'security-groups', 'security_group_delete'),
    ('IPAM', 'network_ipams_list', 'network-ipams', 'network_ipam_delete'),
]


def purge():
    client = vnc_api.vnc_api.VncApi()

    for prj in PROJECT_PURGE:
        try:
            uuid = client.fq_name_to_id('project', ['default-domain', prj])
            print('GET Project:%s and uuid:%s' % (prj, uuid))

            for label, list_method, key, delete_method in RESOURCES:
                resp = getattr(client, list_method)(parent_id=uuid)
                for ref in resp[key]:
                    print('%s: Delete %s' % (label, ':'.join(ref['fq_name'])))
                    getattr(client, delete_method)(id=ref['uuid'])
                print('')

            client.project_delete(id=uuid)
            print('Project: Delete %s' % uuid)
        except Exception as exc:
            # Any failure skips the rest of this project and moves on to the next one.
            print('Get Exception for project %s: %s' % (prj, exc))


if __name__ == '__main__':
    purge()
```