Real-time backup and recovery for hadoop-2.7.7 and hbase-2.0.4

1. Base environment

1.1 System environment

OS version: CentOS release 6.4 (Final)

Java: java version "1.8.0_201" (Hadoop 2.7 needs Java 7 or later; HBase 2.x needs Java 8)

ZooKeeper: the instance bundled with HBase

Installation reference: https://blog.csdn.net/jisen_huang/article/details/48490295

Hadoop: hadoop-2.7.7.tar.gz

http://apache.fayea.com/hadoop/common/hadoop-2.7.7/hadoop-2.7.7.tar.gz

HBase: hbase-2.0.4-bin.tar.gz

Server list:

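| Hostname | IP address | JDK | myid |
|----------|------------|-----|------|
| ca | 192.168.253.10 | 1.8.0_201 | 0 |
| cb | 192.168.253.11 | 1.8.0_201 | 1 |
| cc | 192.168.253.12 | 1.8.0_201 | 2 |
| cd | 192.168.253.13 | 1.8.0_201 | 3 |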
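
Every node has to resolve the four hostnames to the addresses in the server list. A minimal sketch of that check, assuming `getent` is available (it ships with glibc on CentOS); the function names `expected_hosts` and `check_hosts` are made up for illustration:

```shell
#!/bin/sh
# Hostname-to-IP mapping copied from the server list.
expected_hosts() {
  cat <<'EOF'
192.168.253.10 ca
192.168.253.11 cb
192.168.253.12 cc
192.168.253.13 cd
EOF
}

# Report whether each hostname resolves to the listed address on this node.
# Returns non-zero if any name is unresolved or points elsewhere.
check_hosts() {
  rc=0
  while read -r ip host; do
    resolved=$(getent hosts "$host" | awk '{print $1; exit}')
    if [ "$resolved" = "$ip" ]; then
      echo "OK       $host -> $ip"
    else
      echo "MISMATCH $host -> ${resolved:-unresolved} (expected $ip)"
      rc=1
    fi
  done <<EOF
$(expected_hosts)
EOF
  return "$rc"
}
```

Calling `check_hosts` on each of the four nodes shows at a glance whether `/etc/hosts` (or DNS) matches the table.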

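1.2 Real-time backup environment

info2soft-ctrlcenter-6.1-28439.el6.x86_64 (commercial software)

Backup IP: 192.168.253.81

Info2soft deploys a lightweight client module on the production system that performs system-level I/O bypass monitoring of the protected data. It captures changes as fine-grained, byte-level increments and transmits them in real time to a disaster recovery site at any distance. Its serialized transfer technology, Data Order Transfer (DOT), is designed to strictly preserve the consistency and integrity of data between the production system and the disaster recovery center.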

2. Hadoop installation

For Hadoop installation, see the article "hadoop-2.7.1 For CentOS6.5 Installation":

https://blog.csdn.net/jisen_huang/article/details/48490045

3. HBase installation (with bundled ZooKeeper)

https://blog.csdn.net/jisen_huang/article/details/87814720

4. Start HBase

[hdp@ca ~]$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

19/02/21 19:13:53 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [ca]

ca: starting namenode, logging to /hadoop/hadoop/logs/hadoop-hdp-namenode-ca.out

cb: starting datanode, logging to /hadoop/hadoop/logs/hadoop-hdp-datanode-cb.out

cd: starting datanode, logging to /hadoop/hadoop/logs/hadoop-hdp-datanode-cd.out

cc: starting datanode, logging to /hadoop/hadoop/logs/hadoop-hdp-datanode-cc.out

Starting secondary namenodes [ca]

ca: starting secondarynamenode, logging to /hadoop/hadoop/logs/hadoop-hdp-secondarynamenode-ca.out

19/02/21 19:14:34 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

starting yarn daemons

starting resourcemanager, logging to /hadoop/hadoop/logs/yarn-hdp-resourcemanager-ca.out

cc: starting nodemanager, logging to /hadoop/hadoop/logs/yarn-hdp-nodemanager-cc.out

cb: starting nodemanager, logging to /hadoop/hadoop/logs/yarn-hdp-nodemanager-cb.out

cd: starting nodemanager, logging to /hadoop/hadoop/logs/yarn-hdp-nodemanager-cd.out

[hdp@ca bin]$ start-hbase.sh

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/hadoop/hbase-2.0.4/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/hadoop/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/hadoop/hbase-2.0.4/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/hadoop/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

192.168.253.10: running zookeeper, logging to /hadoop/hbase-2.0.4/bin/../logs/hbase-hdp-zookeeper-ca.out

192.168.253.12: running zookeeper, logging to /hadoop/hbase-2.0.4/bin/../logs/hbase-hdp-zookeeper-cc.out

192.168.253.11: running zookeeper, logging to /hadoop/hbase-2.0.4/bin/../logs/hbase-hdp-zookeeper-cb.out

192.168.253.13: running zookeeper, logging to /hadoop/hbase-2.0.4/bin/../logs/hbase-hdp-zookeeper-cd.out

running master, logging to /hadoop/hbase-2.0.4/logs/hbase-hdp-master-ca.out

cc: running regionserver, logging to /hadoop/hbase-2.0.4/bin/../logs/hbase-hdp-regionserver-cc.out

cd: running regionserver, logging to /hadoop/hbase-2.0.4/bin/../logs/hbase-hdp-regionserver-cd.out

cb: running regionserver, logging to /hadoop/hbase-2.0.4/bin/../logs/hbase-hdp-regionserver-cb.out

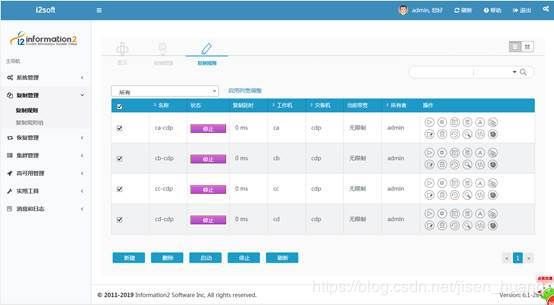
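
The first line of the log notes that `start-all.sh` is deprecated in favor of `start-dfs.sh` and `start-yarn.sh`. Once everything is up, `jps` on each node should list the daemons named in the log. The sketch below encodes that expectation; the role layout is read off the log above, while passwordless `ssh` with `jps` on the remote PATH and the function names are assumptions.

```shell
#!/bin/sh
# Daemons each host should run, based on the startup log:
# ca is the master; cb, cc, cd are workers; HBase-managed ZooKeeper runs on all four.
expected_daemons() {
  case "$1" in
    ca)       echo "NameNode SecondaryNameNode ResourceManager HMaster HQuorumPeer" ;;
    cb|cc|cd) echo "DataNode NodeManager HRegionServer HQuorumPeer" ;;
    *)        return 1 ;;
  esac
}

# Print every expected daemon that does not appear in the given jps output.
# usage: missing_daemons HOST "JPS_OUTPUT"
missing_daemons() {
  for daemon in $(expected_daemons "$1"); do
    printf '%s\n' "$2" | grep -qw "$daemon" || echo "$daemon"
  done
}

# Start the stack with the non-deprecated scripts, then check all nodes.
start_and_check() {
  start-dfs.sh && start-yarn.sh && start-hbase.sh || return 1
  for host in ca cb cc cd; do
    missing=$(missing_daemons "$host" "$(ssh "$host" jps)")
    echo "$host: ${missing:-all expected daemons running}"
  done
}
```

Running `start_and_check` on ca starts the cluster and prints one line per host, listing any daemon that failed to come up.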

5. Real-time backup status

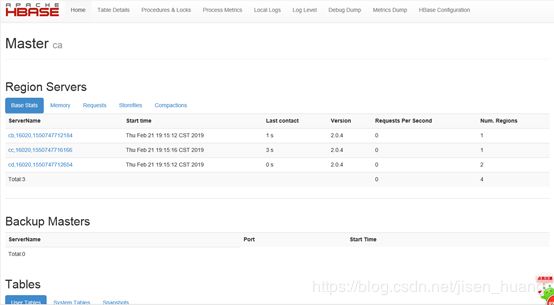

5.1 Node status

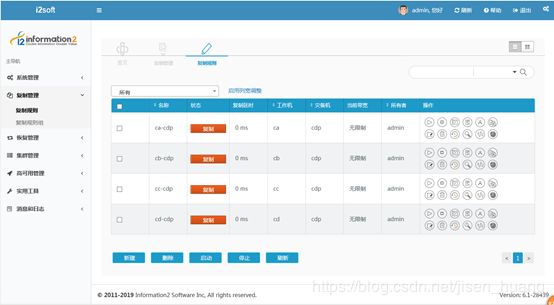

5.2 Replication status

6. HBase recovery test

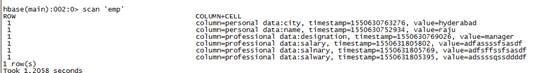

6.1 Create a table and test it
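
This step can also be scripted by piping commands into the HBase shell. A minimal sketch; the table name `backup_test`, the column family `cf`, and the row contents are placeholders, not values from the original test:

```shell
#!/bin/sh
TABLE=backup_test   # hypothetical table name

# Emit HBase shell commands that create a table, write two rows, and read them back.
create_test_table_cmds() {
  cat <<EOF
create '$TABLE', 'cf'
put '$TABLE', 'row1', 'cf:msg', 'written before the disaster'
put '$TABLE', 'row2', 'cf:msg', 'second row'
scan '$TABLE'
count '$TABLE'
EOF
}

# Run on a node where the hbase command is on the PATH:
#   create_test_table_cmds | hbase shell -n
```

Keeping the commands in a function makes it easy to rerun exactly the same writes when repeating the test.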

6.2 Table deletion test
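
In HBase a table must be disabled before it can be dropped. A sketch of the deletion test, assuming a hypothetical test table named `backup_test`:

```shell
#!/bin/sh
TABLE=backup_test   # hypothetical table name

# Emit HBase shell commands that remove the table and confirm it is gone.
drop_test_table_cmds() {
  cat <<EOF
disable '$TABLE'
drop '$TABLE'
exists '$TABLE'
list
EOF
}

# Run on a node where the hbase command is on the PATH:
#   drop_test_table_cmds | hbase shell -n
```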

6.3 Delete Hadoop, HDFS, and HBase outright on every node
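
This step is deliberately destructive: it simulates losing every node. The sketch below only prints the commands unless `DRY_RUN=0` is set. The two install paths in the usage comment come from the startup log; the HDFS storage directories (whatever `dfs.namenode.name.dir` and `dfs.datanode.data.dir` point to) are not shown in this article and would have to be passed in as well. Passwordless `ssh` and the function name `wipe_node` are assumptions.

```shell
#!/bin/sh
# usage: wipe_node HOST DIR...
# Prints the rm command for each directory; executes it only when DRY_RUN=0.
wipe_node() {
  host=$1
  shift
  for dir in "$@"; do
    if [ "${DRY_RUN:-1}" = "0" ]; then
      ssh "$host" rm -rf -- "$dir"
    else
      echo "ssh $host rm -rf -- $dir"
    fi
  done
}

# Dry run for all four nodes:
#   for h in ca cb cc cd; do wipe_node "$h" /hadoop/hadoop /hadoop/hbase-2.0.4; done
```

Defaulting to a dry run means the command list can be reviewed before anything is removed.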


6.4 Stop the backup software

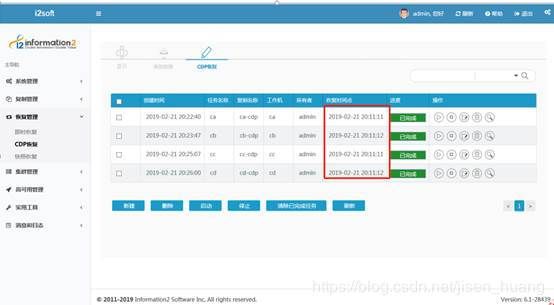

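6.5 Perform CDP data recovery

For the recovery time, choose the closest point in time that applies to the entire environment, so that every node is restored to the same moment.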

6.6 Start HDFS on the master
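
After the restore, HDFS is started from the master and should leave safe mode with all three DataNodes (cb, cc, cd) reporting in. A sketch of that check; it assumes the `hdfs dfsadmin -report` output contains a line of the form `Live datanodes (N):`, as Hadoop 2.x prints, and the function names are illustrative:

```shell
#!/bin/sh
# Extract N from the "Live datanodes (N):" line of a dfsadmin report read on stdin.
live_datanodes() {
  sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p'
}

# Start HDFS, wait until the NameNode leaves safe mode, then compare the number
# of live DataNodes with the expected count (default 3 for this cluster).
start_and_check_hdfs() {
  expected=${1:-3}
  start-dfs.sh || return 1
  hdfs dfsadmin -safemode wait || return 1
  live=$(hdfs dfsadmin -report | live_datanodes)
  echo "live datanodes: ${live:-0} (expected $expected)"
  [ "${live:-0}" -eq "$expected" ]
}
```

Note that `hdfs dfsadmin -safemode wait` blocks until safe mode ends, so a restore with missing blocks would show up as a hang at that step; `hdfs fsck /` is the usual follow-up in that case.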

6.7 Start HBase
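
Once HBase is back up, the shell can confirm that the cluster is healthy and that restored data is readable. A sketch, assuming a hypothetical test table named `backup_test`; the function name `verify_cmds` is illustrative:

```shell
#!/bin/sh
# Emit HBase shell commands that show cluster status, list tables, and read one table.
# usage: verify_cmds TABLE
verify_cmds() {
  cat <<EOF
status 'simple'
list
scan '$1'
EOF
}

# Start HBase on the master, then run the checks:
#   start-hbase.sh
#   verify_cmds backup_test | hbase shell -n
```

The master may need a short while to finish initializing after `start-hbase.sh`, so the commands can fail if they are run immediately.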

6.8 Open the web UI in a browser: HDFS has been restored
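
The same check can be made from the command line. The sketch assumes the default web ports for these versions (50070 for the NameNode UI in Hadoop 2.7, 16010 for the HBase Master UI), that WebHDFS is left enabled, and that `curl` is installed; adjust the ports if they were changed in the configuration.

```shell
#!/bin/sh
# Build the web UI address for a service on the given host.
# usage: ui_url HOST SERVICE   (SERVICE is namenode or hmaster)
ui_url() {
  case "$2" in
    namenode) echo "http://$1:50070/" ;;
    hmaster)  echo "http://$1:16010/" ;;
    *)        return 1 ;;
  esac
}

# Print the HTTP status code returned by each UI (200 means the page is served).
check_uis() {
  for svc in namenode hmaster; do
    url=$(ui_url "$1" "$svc")
    code=$(curl -s -L -o /dev/null -w '%{http_code}' --max-time 5 "$url")
    echo "$svc $url -> $code"
  done
}

# List the HDFS root directory through WebHDFS to confirm restored files are visible.
list_hdfs_root() {
  curl -s --max-time 5 "$(ui_url "$1" namenode)webhdfs/v1/?op=LISTSTATUS"
}
```

For this cluster the calls would be `check_uis ca` and `list_hdfs_root ca`, since ca hosts both the NameNode and the HBase master.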