Linux: Kubernetes Single-Node Deployment

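The etcd Database in Detail

Architecture

- HTTP Server: handles API requests from users as well as synchronization and heartbeat requests from other etcd nodes.

- Store: handles the transactions behind the features etcd supports, including data indexing, node state changes, watching and notification, and event handling and execution. It is the concrete implementation of most of the API functionality etcd offers to users.

- Raft: the concrete implementation of the Raft strong-consistency algorithm, and the core of etcd.

- WAL: Write Ahead Log, the way etcd stores data. Besides keeping the state of all data and the node index in memory, etcd persists data through the WAL. In the WAL, every piece of data is logged before it is committed. A Snapshot is a state snapshot taken to keep the log from growing too large; an Entry is the actual log content that is stored.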

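etcd Glossary

- Raft: the algorithm etcd uses to guarantee strong consistency in a distributed system.

- Node: an instance of the Raft state machine.

- Member: an etcd instance. It manages one Node and can serve client requests.

- Cluster: an etcd cluster made up of multiple Members that work together.

- Peer: the name for another Member in the same etcd cluster.

- Client: a client that sends HTTP requests to the etcd cluster.

- WAL: write-ahead log, the log format etcd uses for persistent storage.

- Snapshot: a snapshot of the etcd data state, taken so that the WAL files do not grow too numerous.

- Proxy: an etcd mode that provides a reverse-proxy service for the etcd cluster.

- Leader: the node elected in Raft that handles all data commits.

- Follower: a node that lost the election and acts as a subordinate in Raft, providing the algorithm's strong-consistency guarantee.

- Candidate: a Follower that has received no heartbeat from the Leader for longer than a set time becomes a Candidate and starts an election.

- Term: the period from a node becoming Leader until the next election.

- Index: the sequence number of a data entry. Raft locates data by Term and Index.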
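The Leader, Follower, and Candidate roles above rest on majority voting: a Candidate becomes Leader only with votes from more than half of the members. A minimal sketch of that arithmetic follows; the function names are illustrative and not part of etcd.

```shell
# Hypothetical helpers (not part of etcd): majority arithmetic for a Raft cluster.

# quorum N: smallest number of members that forms a majority of N.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# fault_tolerance N: members that can fail while a majority still remains.
fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 3 5; do
  echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

For the three-member cluster built later in this post, the quorum is 2, so the cluster keeps working with one member down.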

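Kubernetes Network Model

Kubernetes networking differs somewhat from Docker networking. It has to solve the following four problems:

- Inside the cluster:

  - Communication between containers

  - Communication between Pods

  - Communication between Pods and Services

- Outside the cluster:

  - Communication between external applications and Services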

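Communication between containers in the same Pod

Kubernetes handles this case by design. When it creates a Pod, it first creates a pause container and assigns the Pod a unique IP address. It then creates the Pod's other containers on top of the pause container's network namespace (--net=container:xxx). All containers in a Pod therefore share one network namespace and can talk to each other directly over localhost.

Communication between containers in different Pods

Flannel runs an agent named flanneld on every host. The agent pre-allocates a subnet for the host, and Pods on that host get their IP addresses from it. Flannel uses Kubernetes or etcd to store the network configuration, the allocated subnets, and the hosts' public IPs. Packets are forwarded through backends such as VXLAN, UDP, or host-gw.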

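Flannel Networking in Detail

Overview

- Flannel runs a daemon named flanneld on every node. It allocates the node a small subnet out of one large, cluster-wide network range, and stores its data either through the Kubernetes API server or directly in etcd. Packets travel between nodes through backends such as VXLAN and host-gw.

- Kubernetes assigns every Pod an IP address that is unique within the cluster and directly routable. This model avoids the complexity of schemes such as port mapping, where containers share the node's single IP address. Flannel provides a layer-3 network across the nodes of a cluster; it does not itself connect the Pods on a node to that node (CNI plugins such as flannel and gateway can implement that part).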

Lab environment

| Role | IP address | Operating system | Packages |
| --- | --- | --- | --- |
| master | 192.168.179.150 | CentOS 7.4 | etcd-v3.3.10-linux-amd64.tar.gz kubernetes-server-linux-amd64.tar.gz |
| node1 | 192.168.179.151 | CentOS 7.4 | flannel-v0.10.0-linux-amd64.tar.gz |
| node2 | 192.168.179.152 | CentOS 7.4 | flannel-v0.10.0-linux-amd64.tar.gz |

Set up the network environment: configure a static IP address (the master's ens33 interface is shown)

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=61a00dac-a101-4f57-9af4-c4bc792aecde

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.179.150

NETMASK=255.255.255.0

GATEWAY=192.168.179.2

DNS1=192.168.179.2

node1 IP

192.168.179.151

node2 IP

192.168.179.152

Stop and disable NetworkManager so that the IP address cannot change

systemctl stop NetworkManager

systemctl disable NetworkManager

Flush the firewall rules

iptables -F

Disable SELinux permanently

setenforce 0

vim /etc/selinux/config

SELINUX=disabled (change enforcing to disabled)
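The manual edit above can also be scripted. The sketch below rewrites only the SELINUX= line and leaves SELINUXTYPE= alone; the function name is hypothetical, and the demo runs on a temporary copy rather than on /etc/selinux/config.

```shell
# disable_selinux_in FILE (hypothetical helper): set the SELINUX= line to "disabled".
# The pattern requires "=" directly after SELINUX, so SELINUXTYPE= is not touched.
disable_selinux_in() {
  sed 's/^SELINUX=.*/SELINUX=disabled/' "$1" > "$1.new" &&
    cat "$1.new" > "$1" &&
    rm -f "$1.new"
}

# Try it on a throwaway file first.
tmp=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$tmp"
disable_selinux_in "$tmp"
cat "$tmp"
rm -f "$tmp"
```

On a real host the call would be disable_selinux_in /etc/selinux/config, run as root on each of the three servers.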

Make these changes on all three servers.

Install docker-ce on node1 and node2

Install the dependencies

yum install -y yum-utils device-mapper-persistent-data lvm2

Add the Docker repository

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install Docker

yum install -y docker-ce

Start the Docker service

systemctl start docker

systemctl enable docker

Registry mirror

cd /etc/docker   # this directory is created once the Docker service has started

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://o0lhkgnw.mirror.aliyuncs.com"]

}

EOF

Reload the systemd configuration

systemctl daemon-reload

Restart the service

systemctl restart docker

Master server configuration

Create the working directory

mkdir /root/k8s

Create the certificate directory

cd /root/k8s

mkdir etcd-cert

// Download the certificate tools

vim cfssl.sh

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

// Run the script to download the official cfssl binaries

bash cfssl.sh

[root@localhost k8s]# ls /usr/local/bin/

cfssl cfssl-certinfo cfssljson

Define the CA configuration

cd /root/k8s/etcd-cert/

vim ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

Create the CA certificate signing request

vim ca-csr.json

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

Generate the CA key and certificate, ca-key.pem and ca.pem

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

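Define the certificate used for communication among the three etcd nodes; pay attention to the IP addresses

cat > server-csr.json <<EOF

[...]

cat <<EOF >$WORK_DIR/cfg/etcd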

#[Member]

ETCD_NAME="${ETCD_NAME}"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://${ETCD_IP}:2380,${ETCD_CLUSTER}"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

cat <<EOF >/usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=${WORK_DIR}/cfg/etcd

ExecStart=${WORK_DIR}/bin/etcd \

--name=\${ETCD_NAME} \

--data-dir=\${ETCD_DATA_DIR} \

--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=\${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=new \

--cert-file=${WORK_DIR}/ssl/server.pem \

--key-file=${WORK_DIR}/ssl/server-key.pem \

--peer-cert-file=${WORK_DIR}/ssl/server.pem \

--peer-key-file=${WORK_DIR}/ssl/server-key.pem \

--trusted-ca-file=${WORK_DIR}/ssl/ca.pem \

--peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable etcd

systemctl restart etcd

Run the script. It blocks while waiting for etcd02 and etcd03 to join the cluster, and stops waiting once a timeout expires

bash etcd.sh etcd01 192.168.179.150 etcd02=https://192.168.179.151:2380,etcd03=https://192.168.179.152:2380
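The third argument to etcd.sh is a comma-separated list of name=https://IP:2380 entries, which is easy to mistype. A small sketch that builds it from name=IP pairs; peer_list is a hypothetical helper, not part of etcd or of the script above.

```shell
# peer_list NAME=IP... (hypothetical helper): join members into the
# name=https://IP:2380 form that etcd.sh takes as its third argument.
# The body runs in a subshell, so its variables do not leak into the caller.
peer_list() (
  out=""
  for pair in "$@"; do
    name=${pair%%=*}   # text before the first "="
    ip=${pair#*=}      # text after the first "="
    out="${out:+$out,}${name}=https://${ip}:2380"
  done
  echo "$out"
)

CLUSTER=$(peer_list etcd02=192.168.179.151 etcd03=192.168.179.152)
echo "bash etcd.sh etcd01 192.168.179.150 $CLUSTER"
```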

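Check the service state at this point

ps -ef | grep etcd

Copy the etcd directory, certificates included, to node1 and node2

scp -r /opt/etcd/ root@192.168.179.151:/opt/

scp -r /opt/etcd/ root@192.168.179.152:/opt/

Node server configuration (both nodes are configured the same way; node1 is shown)

Edit the etcd configuration file on both nodes

vim /opt/etcd/cfg/etcd

Change the IP addresses: four entries take the local IP, and the cluster member list stays unchanged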

#[Member]

ETCD_NAME="etcd02" #change to this node's name (etcd02 on node1, etcd03 on node2)

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.179.151:2380" #change to the local IP

ETCD_LISTEN_CLIENT_URLS="https://192.168.179.151:2379" #change to the local IP

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.179.151:2380" #change to the local IP

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.179.151:2379" #change to the local IP

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.179.150:2380,etcd02=https://192.168.179.151:2380,etcd03=https://192.168.179.152:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"
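The same edit can be done with sed instead of vim. This is a sketch under the assumption that the file copied from the master still holds the master's name and IP: it renames the member, swaps the IP on every line except ETCD_INITIAL_CLUSTER, and prints the result. retarget_etcd_cfg is a hypothetical helper name.

```shell
# retarget_etcd_cfg FILE NAME OLD_IP NEW_IP (hypothetical helper).
# Prints FILE with ETCD_NAME set to NAME and OLD_IP replaced by NEW_IP,
# except on the ETCD_INITIAL_CLUSTER= line, which must list every member.
# Note: the dots in OLD_IP are regex wildcards here, which is harmless for
# this file but not a strict match.
retarget_etcd_cfg() {
  sed -e "s/^ETCD_NAME=.*/ETCD_NAME=\"$2\"/" \
      -e '/^ETCD_INITIAL_CLUSTER=/!'"s/$3/$4/g" "$1"
}

# Demo on a shortened sample of the master's config.
tmp=$(mktemp)
printf '%s\n' \
  'ETCD_NAME="etcd01"' \
  'ETCD_LISTEN_PEER_URLS="https://192.168.179.150:2380"' \
  'ETCD_INITIAL_CLUSTER="etcd01=https://192.168.179.150:2380,etcd02=https://192.168.179.151:2380"' \
  > "$tmp"
retarget_etcd_cfg "$tmp" etcd02 192.168.179.150 192.168.179.151
rm -f "$tmp"
```

Redirect the output to a new file and review it before replacing /opt/etcd/cfg/etcd on the node.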

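Copy the systemd unit file to the node servers (node1 shown; repeat for 192.168.179.152)

scp /usr/lib/systemd/system/etcd.service root@192.168.179.151:/usr/lib/systemd/system/

[root@localhost cfg]# ls /usr/lib/systemd/system/etcd.service

/usr/lib/systemd/system/etcd.service

On the master, check that the etcd service file was generated

vim /usr/lib/systemd/system/etcd.service

Run the script on the master again (it waits for the peers), then start etcd on the node servers

bash etcd.sh etcd01 192.168.179.150 etcd02=https://192.168.179.151:2380,etcd03=https://192.168.179.152:2380

Start the etcd service on both node servers

systemctl start etcd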

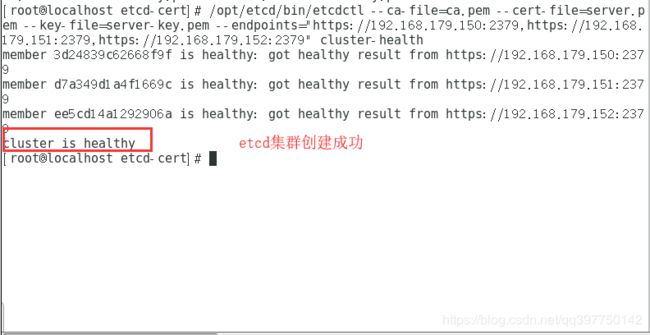

Check the cluster health from the master

cd /root/k8s/etcd-cert

/opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.179.150:2379,https://192.168.179.151:2379,https://192.168.179.152:2379" cluster-health

Master node operations

On the master, write the network range to etcd so that flannel can allocate subnets from it

cd /root/k8s/etcd-cert

/opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.179.150:2379,https://192.168.179.151:2379,https://192.168.179.152:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

View the stored configuration

/opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.179.150:2379,https://192.168.179.151:2379,https://192.168.179.152:2379" get /coreos.com/network/config

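Send the flannel archive to both nodes (it can also be downloaded from the official release page)

scp /mnt/flannel-v0.10.0-linux-amd64.tar.gz root@192.168.179.151:/root/

scp /mnt/flannel-v0.10.0-linux-amd64.tar.gz root@192.168.179.152:/root/

Install and configure flannel on the node servers (node1 shown; the steps are identical on node2)

Extract the archive on the node

tar zxvf flannel-v0.10.0-linux-amd64.tar.gz

Create the Kubernetes working directory

mkdir -p /opt/kubernetes/{cfg,bin,ssl}

mv mk-docker-opts.sh flanneld /opt/kubernetes/bin

Write the flannel startup script

cd /root/

vim flannel.sh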

#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \

-etcd-cafile=/opt/etcd/ssl/ca.pem \

-etcd-certfile=/opt/etcd/ssl/server.pem \

-etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld

Start flannel; run this on both node servers

bash flannel.sh https://192.168.179.150:2379,https://192.168.179.151:2379,https://192.168.179.152:2379

Edit docker.service so that Docker uses the flannel subnet; do this on both node servers

vim /usr/lib/systemd/system/docker.service

In the [Service] section:

EnvironmentFile=/run/flannel/subnet.env #add this line

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H #insert the variable after dockerd

Check that the subnet was assigned

cat /run/flannel/subnet.env

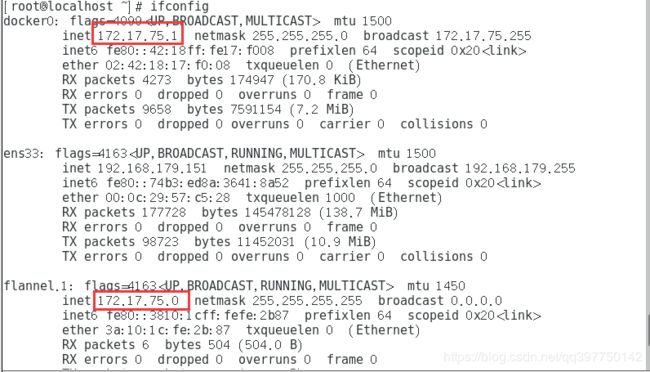

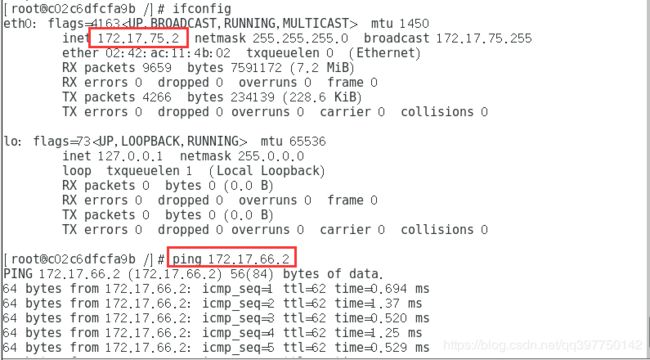

node1 server

[root@localhost ~]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.75.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.75.1/24 --ip-masq=false --mtu=1450"

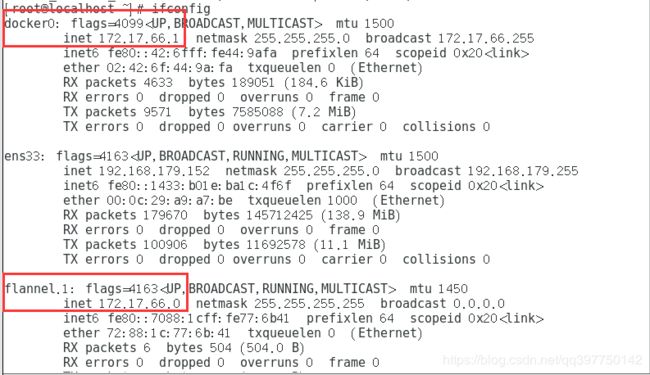

node2 server

[root@localhost ~]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.66.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.66.1/24 --ip-masq=false --mtu=1450"
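Each node's --bip address should fall inside the Network range written to etcd earlier (172.17.0.0/16). A quick sanity check can be sketched in plain shell arithmetic; ip_to_int and in_cidr are hypothetical helpers, not flannel tools.

```shell
# ip_to_int A.B.C.D (hypothetical helper): dotted quad to a 32-bit integer.
# The body runs in a subshell, so the IFS change stays local.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# in_cidr IP NETWORK/PREFIX (hypothetical helper): succeeds if IP is in the block.
in_cidr() (
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
)

# The two bip addresses shown above, checked against the flannel Network.
for bip in 172.17.75.1 172.17.66.1; do
  if in_cidr "$bip" 172.17.0.0/16; then
    echo "$bip is inside 172.17.0.0/16"
  else
    echo "$bip is OUTSIDE 172.17.0.0/16"
  fi
done
```

With a /16 network split into /24 node subnets, as in the output above, there is room for 2^(24-16) = 256 node subnets.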

Restart the Docker service

systemctl daemon-reload

systemctl restart docker

Network interface information on node1

Network interface information on node2

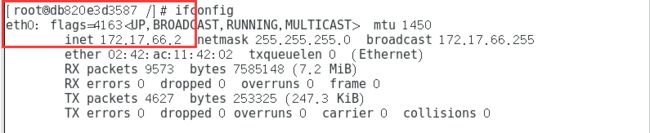

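Create a container on each node server and install net-tools to check the container's IP address

docker run -it centos:7 /bin/bash

yum install -y net-tools

Container network interface information on node1

Container network interface information on node2

Verify that the containers can reach each other

Master node configuration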

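// Generate the api-server certificates

cp /mnt/master.zip /root/k8s/

// Extract the archive

unzip master.zip

chmod +x controller-manager.sh

// Create the Kubernetes certificate directory

mkdir k8s-cert

// Edit the script that generates the certificates

cd k8s-cert/

vim master.sh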

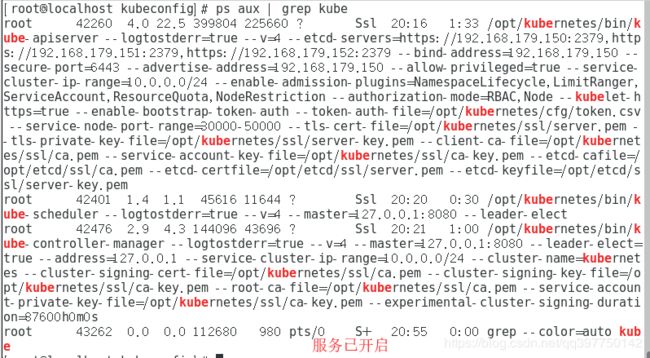

cat > ca-config.json <<EOF

[...]

cat > ca-csr.json <<EOF

[...]

cat > server-csr.json <<EOF

[...]

cat > admin-csr.json <<EOF

[...]

cat > kube-proxy-csr.json <<EOF

[...]

netstat -ntap | grep 6443

ps aux | grep kube

// Start the controller-manager component

./controller-manager.sh 127.0.0.1

// Check the status of the master components

/opt/kubernetes/bin/kubectl get cs

[root@localhost k8s]# /opt/kubernetes/bin/kubectl get cs

NAME STATUS MESSAGE ERROR

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

scheduler Healthy ok

On the master: configure the authorized user

// Copy the binaries to the nodes

cd /root/k8s/kubernetes/server/bin/

scp kubelet kube-proxy root@192.168.179.151:/opt/kubernetes/bin/

scp kubelet kube-proxy root@192.168.179.152:/opt/kubernetes/bin/

// Create the working directory

cd /root/k8s/

mkdir kubeconfig/

// Write the configuration script

cd kubeconfig/

vim kubeconfig

APISERVER=$1

SSL_DIR=$2

# Create the kubelet bootstrapping kubeconfig

export KUBE_APISERVER="https://$APISERVER:6443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=$SSL_DIR/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters

kubectl config set-credentials kubelet-bootstrap \

--token=d2b8b9fe6d91ed4b604831ea4b3344ca \

--kubeconfig=bootstrap.kubeconfig

# Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=$SSL_DIR/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=$SSL_DIR/kube-proxy.pem \

--client-key=$SSL_DIR/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

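Pay attention to the token passed to kubectl config set-credentials kubelet-bootstrap in the script; use the bootstrap token of your own cluster there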
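The token in the script is a 32-character hexadecimal string. If a fresh one is needed, it can be produced from /dev/urandom as sketched below. This assumes the same value is also configured on the kube-apiserver side (that step is not shown in this post); new_bootstrap_token is a hypothetical helper name.

```shell
# new_bootstrap_token (hypothetical helper): 16 random bytes printed as
# 32 lowercase hex characters, the same shape as the token used above.
new_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

TOKEN=$(new_bootstrap_token)
echo "token length: ${#TOKEN}"
```

The value would then replace the --token argument in the kubeconfig script.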

// Update the PATH environment variable

vim /etc/profile

export PATH=$PATH:/opt/kubernetes/bin/

source /etc/profile

// Generate the kubeconfig files

bash kubeconfig 192.168.179.150 /root/k8s/k8s-cert

[root@localhost kubeconfig]# ls

bootstrap.kubeconfig kubeconfig kube-proxy.kubeconfig

// Copy them to the nodes

scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.179.151:/opt/kubernetes/cfg/

scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.179.152:/opt/kubernetes/cfg/

Node deployment

// Edit the script file (note the line continuations)

vim kubelet.sh

#!/bin/bash

NODE_ADDRESS=$1

DNS_SERVER_IP=${2:-"10.0.0.2"}

cat <<EOF >/opt/kubernetes/cfg/kubelet

KUBELET_OPTS="--logtostderr=true \\

--v=4 \\

--hostname-override=${NODE_ADDRESS} \\

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\

--config=/opt/kubernetes/cfg/kubelet.config \\

--cert-dir=/opt/kubernetes/ssl \\

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

EOF

cat <<EOF >/opt/kubernetes/cfg/kubelet.config

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: ${NODE_ADDRESS}

port: 10250

bash kubelet.sh 192.168.179.151 master服务器查看

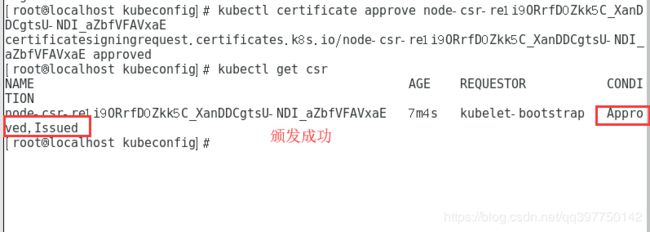

master同意颁发证书

kubectl certificate approve node-csr-re1i9ORrfD0Zkk5C_XanDDCgtsU-NDI_aZbfVFAVxaE

注意序列号

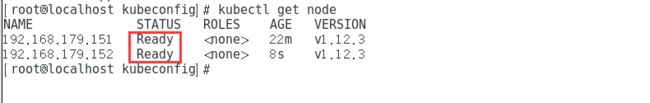

查看节点信息

[root@localhost kubeconfig]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.179.151 Ready 2m9s v1.12.3

Ready为成功加入 node节点操作

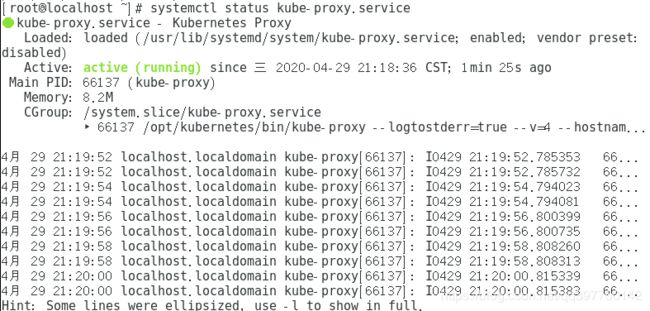

Start the kube-proxy service

[root@localhost ~]# bash proxy.sh 192.168.179.151

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

- Check the service status

node2 deployment

// Copy the working directory from node1 to node2 (run on node1)

scp -r /opt/kubernetes/ root@192.168.179.152:/opt/

// Copy the systemd unit files from node1 to node2

scp /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.179.152:/usr/lib/systemd/system/

// Delete the certificates copied from node1; node2 must request its own (run on node2)

cd /opt/kubernetes/ssl/

rm -rf *

// Change the IP address to the local IP in three configuration files: kubelet, kubelet.config, and kube-proxy

vim /opt/kubernetes/cfg/kubelet

vim /opt/kubernetes/cfg/kube-proxy

vim /opt/kubernetes/cfg/kubelet.config

// Start the kubelet service

systemctl start kubelet.service

systemctl enable kubelet.service

On the master, view the certificate requests

kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-__J1o7P9oBnophUQ1-YR0SVxCV3rTFei3zOGh8fhbwo 49s kubelet-bootstrap Pending

node-csr-re1i9ORrfD0Zkk5C_XanDDCgtsU-NDI_aZbfVFAVxaE 26m kubelet-bootstrap Approve
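Copying long CSR names by hand is error-prone. A small filter can pick the Pending names out of the kubectl get csr listing; pending_csrs is a hypothetical helper, and the sample rows below are modeled on the output above rather than captured from a live cluster.

```shell
# pending_csrs (hypothetical helper): read `kubectl get csr` output on stdin
# and print the NAME column of every row whose last column is "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Demo with sample rows in the same column layout.
printf '%s\n' \
  'NAME AGE REQUESTOR CONDITION' \
  'node-csr-__J1o7P9oBnophUQ1-YR0SVxCV3rTFei3zOGh8fhbwo 49s kubelet-bootstrap Pending' \
  'node-csr-re1i9ORrfD0Zkk5C_XanDDCgtsU-NDI_aZbfVFAVxaE 26m kubelet-bootstrap Approved,Issued' |
  pending_csrs

# On a real master the listing would come from kubectl instead:
#   kubectl get csr | pending_csrs
```

Each printed name can then be passed to kubectl certificate approve after checking that the request really comes from one of your nodes.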

Approve the request

kubectl certificate approve node-csr-__J1o7P9oBnophUQ1-YR0SVxCV3rTFei3zOGh8fhbwo

On the master, check the node status