Recommended Mask R-CNN explanations and code implementations

Explanations: https://blog.csdn.net/zziahgf/article/details/78730859

https://blog.csdn.net/baobei0112/article/details/79130855

https://zhuanlan.zhihu.com/p/37998710

Difference between ROI Align and ROI Pooling: http://blog.leanote.com/post/[email protected]/b5f4f526490b

ROI Align code: https://github.com/katotetsuro/roi_align/blob/master/roi_align_2d.py

Bilinear interpolation: https://blog.csdn.net/sinat_37011812/article/details/81842957

Mask R-CNN code: https://github.com/facebookresearch/maskrcnn-benchmark

Training MaskRCNN-Benchmark (PyTorch version) on your own data, plus a guide to common pitfalls: https://juejin.im/post/5cd0172a518825420068639a
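The ROI Align and bilinear interpolation links above revolve around one idea: ROI Pooling rounds box coordinates to the feature grid, while ROI Align keeps them fractional, reads each sample point by bilinear interpolation, and averages a few sample points per output bin. A minimal NumPy sketch of that idea, assuming a single-channel feature map, a box already given in feature-map coordinates (no spatial scale), and sample points that fall inside the map; `bilinear_sample` and `roi_align` are hypothetical helpers, not the maskrcnn-benchmark `ROIAlign` layer.

```python
import numpy as np


def bilinear_sample(feature, y, x):
    """Read feature[y, x] at a fractional location by bilinear interpolation.

    feature: 2-D array (H, W); (y, x) is assumed to lie inside [0, H-1] x [0, W-1].
    """
    h, w = feature.shape
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    # Clamp the upper neighbours so points on the last row/column stay in bounds.
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    ly, lx = y - y0, x - x0  # fractional offsets
    hy, hx = 1.0 - ly, 1.0 - lx
    # Weighted sum of the four surrounding grid values.
    return (hy * hx * feature[y0, x0] + hy * lx * feature[y0, x1]
            + ly * hx * feature[y1, x0] + ly * lx * feature[y1, x1])


def roi_align(feature, box, out_size=2, sampling_ratio=2):
    """Pool box = (x1, y1, x2, y2), in feature-map coordinates, to out_size x out_size.

    Each output bin averages sampling_ratio x sampling_ratio bilinear samples
    taken at evenly spaced points inside the bin; no coordinate is rounded.
    """
    x1, y1, x2, y2 = box
    bin_h = (y2 - y1) / out_size
    bin_w = (x2 - x1) / out_size
    out = np.zeros((out_size, out_size))
    for i in range(out_size):
        for j in range(out_size):
            samples = []
            for iy in range(sampling_ratio):
                for ix in range(sampling_ratio):
                    y = y1 + i * bin_h + (iy + 0.5) * bin_h / sampling_ratio
                    x = x1 + j * bin_w + (ix + 0.5) * bin_w / sampling_ratio
                    samples.append(bilinear_sample(feature, y, x))
            out[i, j] = np.mean(samples)
    return out


feature = np.arange(16, dtype=np.float64).reshape(4, 4)  # feature[y, x] = 4y + x
pooled = roi_align(feature, (0.5, 0.5, 2.5, 2.5))
print(pooled)
```

Because this toy feature map is linear in y and x, each bin's average equals the value at the bin centre, so `pooled` holds [[5, 6], [9, 10]]. ROI Pooling would instead snap the box to integer cells and take a max per bin.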

generalized_rcnn.py

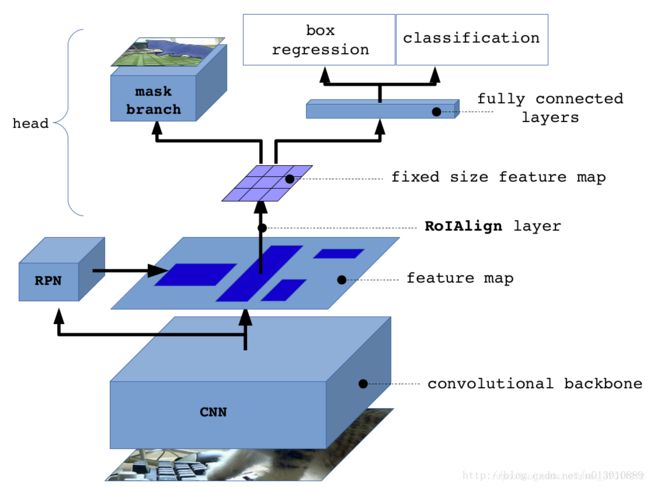

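This file defines the framework of the whole network, which has three parts: the backbone, the RPN and the ROI heads.

The backbone extracts features, the RPN generates candidate boxes (proposals), and the ROI heads produce the final results.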
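The data flow in `GeneralizedRCNN.forward` can be traced with stand-in stages. A pure-Python sketch, assuming toy callables that only tag strings (`toy_backbone`, `toy_rpn`, `toy_roi_heads` and `generalized_rcnn_forward` are all hypothetical), which shows the one behavioural switch in the real method: during training it returns the merged loss dict, at inference the detections.

```python
def generalized_rcnn_forward(backbone, rpn, roi_heads, images, targets=None, training=False):
    """Toy mirror of GeneralizedRCNN.forward: backbone -> RPN -> ROI heads."""
    if training and targets is None:
        raise ValueError("In training mode, targets should be passed")
    features = backbone(images)                                  # feature extraction
    proposals, proposal_losses = rpn(images, features, targets)  # candidate boxes
    if roi_heads:
        _, result, detector_losses = roi_heads(features, proposals, targets)
    else:  # RPN-only model: the proposals are the final output
        result, detector_losses = proposals, {}
    if training:
        # Training returns only the merged loss dict.
        return {**detector_losses, **proposal_losses}
    # Inference returns the detections.
    return result


# Hypothetical stand-ins for the three stages; they only tag strings.
def toy_backbone(images):
    return [f"feat({img})" for img in images]


def toy_rpn(images, features, targets):
    return [f"props({f})" for f in features], {"loss_objectness": 0.7}


def toy_roi_heads(features, proposals, targets):
    return None, [f"dets({p})" for p in proposals], {"loss_mask": 0.3}


print(generalized_rcnn_forward(toy_backbone, toy_rpn, toy_roi_heads, ["img0"]))
# ['dets(props(feat(img0)))']
print(generalized_rcnn_forward(toy_backbone, toy_rpn, toy_roi_heads, ["img0"],
                               targets=["gt0"], training=True))
# {'loss_mask': 0.3, 'loss_objectness': 0.7}
```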

```python
from torch import nn

from maskrcnn_benchmark.structures.image_list import to_image_list

# Relative imports: this file lives in maskrcnn_benchmark/modeling/detector/
from ..backbone import build_backbone
from ..rpn.rpn import build_rpn
from ..roi_heads.roi_heads import build_roi_heads


class GeneralizedRCNN(nn.Module):
    """
    Main class for Generalized R-CNN. Currently supports boxes and masks.
    It consists of three main parts:
    - backbone
    - rpn
    - heads: takes the features + the proposals from the RPN and computes
        detections / masks from it.
    """

    def __init__(self, cfg):
        super(GeneralizedRCNN, self).__init__()

        self.backbone = build_backbone(cfg)
        self.rpn = build_rpn(cfg, self.backbone.out_channels)
        self.roi_heads = build_roi_heads(cfg, self.backbone.out_channels)

    def forward(self, images, targets=None):
        """
        Arguments:
            images (list[Tensor] or ImageList): images to be processed
            targets (list[BoxList]): ground-truth boxes present in the image (optional)

        Returns:
            result (list[BoxList] or dict[Tensor]): the output from the model.
                During training, it returns a dict[Tensor] which contains the losses.
                During testing, it returns list[BoxList] that contains additional fields
                like `scores`, `labels` and `mask` (for Mask R-CNN models).
        """
        if self.training and targets is None:
            raise ValueError("In training mode, targets should be passed")
        images = to_image_list(images)
        features = self.backbone(images.tensors)
        proposals, proposal_losses = self.rpn(images, features, targets)
        if self.roi_heads:
            x, result, detector_losses = self.roi_heads(features, proposals, targets)
        else:
            # RPN-only models don't have roi_heads
            x = features
            result = proposals
            detector_losses = {}

        if self.training:
            losses = {}
            losses.update(detector_losses)
            losses.update(proposal_losses)
            return losses

        return result
```

backbone.py

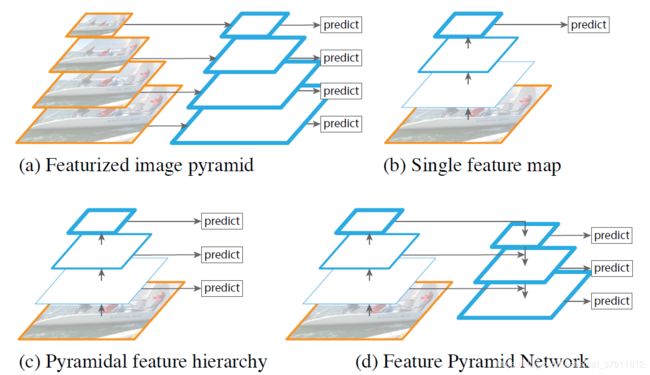

This builds the whole feature-extraction network; FPN stands for Feature Pyramid Network.
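An FPN adds a top-down pathway to the backbone. The coarsest backbone map goes through a 1×1 lateral convolution, is upsampled 2× and added to the lateral projection of the next finer map, and so on, so every output level has the same channel count. A NumPy sketch of just that merge, assuming nearest-neighbour upsampling and treating each 1×1 convolution as a channel-mixing matrix. The 3×3 smoothing convolutions on each output and any extra top level are left out, and all function names are hypothetical.

```python
import numpy as np


def conv1x1(x, weight):
    """1x1 convolution on a (C_in, H, W) map with a (C_out, C_in) weight matrix."""
    return np.einsum("oc,chw->ohw", weight, x)


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def fpn_top_down(features, lateral_weights):
    """features: bottom-up maps ordered fine to coarse, each half the size of the previous.

    Returns the merged maps in the same order, all with the same channel count.
    """
    # Start from the coarsest level and walk towards the finest one.
    last = conv1x1(features[-1], lateral_weights[-1])
    outputs = [last]
    for feat, w in zip(features[-2::-1], lateral_weights[-2::-1]):
        last = conv1x1(feat, w) + upsample2x(last)  # lateral + top-down
        outputs.insert(0, last)
    return outputs


rng = np.random.default_rng(0)
channels_in = [8, 16, 32]  # hypothetical widths of three backbone stages
sizes = [16, 8, 4]
feats = [rng.standard_normal((c, s, s)) for c, s in zip(channels_in, sizes)]
weights = [rng.standard_normal((4, c)) for c in channels_in]  # 4 output channels
outs = fpn_top_down(feats, weights)
print([o.shape for o in outs])  # [(4, 16, 16), (4, 8, 8), (4, 4, 4)]
```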

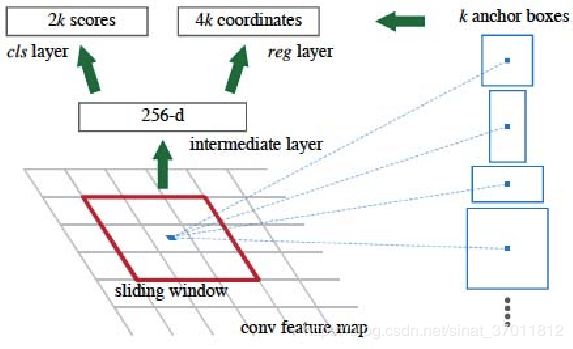

rpn.py

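This file builds the region proposal network (RPN). Its head computes the objectness (the confidence that an anchor contains an object) and the anchor box regression (offsets that refine each anchor into a candidate box).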
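For every feature level, the RPN head predicts `A` objectness logits and `4A` box offsets at each location (`A` = anchors per location). In other words, `cls_logits` outputs `(N, A, H, W)` and `bbox_pred` outputs `(N, 4A, H, W)`. Before the loss, these are flattened into one row per anchor across all levels. A NumPy sketch of that reshaping, assuming an anchor-major channel layout (`A` groups of `C` values). That is how I understand the `concat_box_prediction_layers` helper used in rpn/loss.py to work. The function names and the sizes below are hypothetical.

```python
import numpy as np


def permute_and_flatten(layer, num_anchors, channels):
    """(N, A*C, H, W) -> (N, H*W*A, C): one row per anchor, ordered by location then anchor."""
    n, _, h, w = layer.shape
    layer = layer.reshape(n, num_anchors, channels, h, w)
    layer = layer.transpose(0, 3, 4, 1, 2)  # (N, H, W, A, C)
    return layer.reshape(n, h * w * num_anchors, channels)


def flatten_rpn_outputs(objectness, box_regression, num_anchors):
    """Concatenate per-level outputs into (N, total_anchors) and (N, total_anchors, 4)."""
    obj = [permute_and_flatten(o, num_anchors, 1) for o in objectness]
    reg = [permute_and_flatten(r, num_anchors, 4) for r in box_regression]
    return np.concatenate(obj, axis=1)[..., 0], np.concatenate(reg, axis=1)


# Hypothetical setup: batch of 2, 3 anchors per location, two levels (8x8 and 4x4).
A = 3
objectness = [np.random.rand(2, A, 8, 8), np.random.rand(2, A, 4, 4)]
box_regression = [np.arange(2 * 4 * A * 8 * 8, dtype=float).reshape(2, 4 * A, 8, 8),
                  np.random.rand(2, 4 * A, 4, 4)]
obj_flat, reg_flat = flatten_rpn_outputs(objectness, box_regression, A)
print(obj_flat.shape, reg_flat.shape)  # (2, 240) (2, 240, 4)
```

With this layout, anchor `a` at location `(y, x)` of a level with width `W` lands in row `(y * W + x) * A + a` of that level's slice.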

```python
# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved.
import torch
import torch.nn.functional as F
from torch import nn

from maskrcnn_benchmark.modeling import registry
from maskrcnn_benchmark.modeling.box_coder import BoxCoder
from maskrcnn_benchmark.modeling.rpn.retinanet.retinanet import build_retinanet
from .loss import make_rpn_loss_evaluator
from .anchor_generator import make_anchor_generator
from .inference import make_rpn_postprocessor


class RPNHeadConvRegressor(nn.Module):
    """
    A simple RPN Head for classification and bbox regression
    """

    def __init__(self, cfg, in_channels, num_anchors):
        """
        Arguments:
            cfg              : config
            in_channels (int): number of channels of the input feature
            num_anchors (int): number of anchors to be predicted
        """
        super(RPNHeadConvRegressor, self).__init__()
        self.cls_logits = nn.Conv2d(in_channels, num_anchors, kernel_size=1, stride=1)
        self.bbox_pred = nn.Conv2d(
            in_channels, num_anchors * 4, kernel_size=1, stride=1
        )

        for l in [self.cls_logits, self.bbox_pred]:
            torch.nn.init.normal_(l.weight, std=0.01)
            torch.nn.init.constant_(l.bias, 0)

    def forward(self, x):
        assert isinstance(x, (list, tuple))
        logits = [self.cls_logits(y) for y in x]
        bbox_reg = [self.bbox_pred(y) for y in x]

        return logits, bbox_reg


class RPNHeadFeatureSingleConv(nn.Module):
    """
    Adds a simple RPN Head with one conv to extract the feature
    """

    def __init__(self, cfg, in_channels):
        """
        Arguments:
            cfg              : config
            in_channels (int): number of channels of the input feature
        """
        super(RPNHeadFeatureSingleConv, self).__init__()
        self.conv = nn.Conv2d(
            in_channels, in_channels, kernel_size=3, stride=1, padding=1
        )

        for l in [self.conv]:
            torch.nn.init.normal_(l.weight, std=0.01)
            torch.nn.init.constant_(l.bias, 0)

        self.out_channels = in_channels

    def forward(self, x):
        assert isinstance(x, (list, tuple))
        x = [F.relu(self.conv(z)) for z in x]

        return x


@registry.RPN_HEADS.register("SingleConvRPNHead")
class RPNHead(nn.Module):
    """
    Adds a simple RPN Head with classification and regression heads
    """

    def __init__(self, cfg, in_channels, num_anchors):
        """
        Arguments:
            cfg              : config
            in_channels (int): number of channels of the input feature
            num_anchors (int): number of anchors to be predicted
        """
        super(RPNHead, self).__init__()
        self.conv = nn.Conv2d(
            in_channels, in_channels, kernel_size=3, stride=1, padding=1
        )
        self.cls_logits = nn.Conv2d(in_channels, num_anchors, kernel_size=1, stride=1)
        self.bbox_pred = nn.Conv2d(
            in_channels, num_anchors * 4, kernel_size=1, stride=1
        )

        for l in [self.conv, self.cls_logits, self.bbox_pred]:
            torch.nn.init.normal_(l.weight, std=0.01)
            torch.nn.init.constant_(l.bias, 0)

    def forward(self, x):
        logits = []
        bbox_reg = []
        for feature in x:
            t = F.relu(self.conv(feature))
            logits.append(self.cls_logits(t))
            bbox_reg.append(self.bbox_pred(t))
        return logits, bbox_reg


class RPNModule(torch.nn.Module):
    """
    Module for RPN computation. Takes feature maps from the backbone and outputs
    RPN proposals and losses. Works for both FPN and non-FPN.
    """

    def __init__(self, cfg, in_channels):
        super(RPNModule, self).__init__()

        self.cfg = cfg.clone()

        anchor_generator = make_anchor_generator(cfg)

        rpn_head = registry.RPN_HEADS[cfg.MODEL.RPN.RPN_HEAD]
        head = rpn_head(
            cfg, in_channels, anchor_generator.num_anchors_per_location()[0]
        )

        rpn_box_coder = BoxCoder(weights=(1.0, 1.0, 1.0, 1.0))

        box_selector_train = make_rpn_postprocessor(cfg, rpn_box_coder, is_train=True)
        box_selector_test = make_rpn_postprocessor(cfg, rpn_box_coder, is_train=False)

        loss_evaluator = make_rpn_loss_evaluator(cfg, rpn_box_coder)

        self.anchor_generator = anchor_generator
        self.head = head
        self.box_selector_train = box_selector_train
        self.box_selector_test = box_selector_test
        self.loss_evaluator = loss_evaluator

    def forward(self, images, features, targets=None):
        """
        Arguments:
            images (ImageList): images for which we want to compute the predictions
            features (list[Tensor]): features computed from the images that are
                used for computing the predictions. Each tensor in the list
                corresponds to a different feature level
            targets (list[BoxList]): ground-truth boxes present in the image (optional)

        Returns:
            boxes (list[BoxList]): the predicted boxes from the RPN, one BoxList per
                image.
            losses (dict[Tensor]): the losses for the model during training. During
                testing, it is an empty dict.
        """
        objectness, rpn_box_regression = self.head(features)
        anchors = self.anchor_generator(images, features)

        if self.training:
            return self._forward_train(anchors, objectness, rpn_box_regression, targets)
        else:
            return self._forward_test(anchors, objectness, rpn_box_regression)

    def _forward_train(self, anchors, objectness, rpn_box_regression, targets):
        if self.cfg.MODEL.RPN_ONLY:
            # When training an RPN-only model, the loss is determined by the
            # predicted objectness and rpn_box_regression values and there is
            # no need to transform the anchors into predicted boxes; this is an
            # optimization that avoids the unnecessary transformation.
            boxes = anchors
        else:
            # For end-to-end models, anchors must be transformed into boxes and
            # sampled into a training batch.
            with torch.no_grad():
                boxes = self.box_selector_train(
                    anchors, objectness, rpn_box_regression, targets
                )
        loss_objectness, loss_rpn_box_reg = self.loss_evaluator(
            anchors, objectness, rpn_box_regression, targets
        )
        losses = {
            "loss_objectness": loss_objectness,
            "loss_rpn_box_reg": loss_rpn_box_reg,
        }
        return boxes, losses

    def _forward_test(self, anchors, objectness, rpn_box_regression):
        boxes = self.box_selector_test(anchors, objectness, rpn_box_regression)
        if self.cfg.MODEL.RPN_ONLY:
            # For end-to-end models, the RPN proposals are an intermediate state
            # and don't bother to sort them in decreasing score order. For RPN-only
            # models, the proposals are the final output and we return them in
            # high-to-low confidence order.
            inds = [
                box.get_field("objectness").sort(descending=True)[1] for box in boxes
            ]
            boxes = [box[ind] for box, ind in zip(boxes, inds)]
        return boxes, {}


def build_rpn(cfg, in_channels):
    """
    This gives the gist of it. Not super important because it doesn't change as much
    """
    if cfg.MODEL.RETINANET_ON:
        return build_retinanet(cfg, in_channels)

    return RPNModule(cfg, in_channels)
```
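`RPNModule` builds a `BoxCoder(weights=(1.0, 1.0, 1.0, 1.0))`. Its `decode` turns anchors plus predicted offsets (dx, dy, dw, dh) into boxes, and its `encode` produces the regression targets used in rpn/loss.py. A NumPy sketch of the usual R-CNN box parameterization with per-coordinate weights. The `+1` width convention and the clamp value log(1000/16) follow my reading of maskrcnn-benchmark's box_coder.py and should be treated as assumptions. `encode` and `decode` here are hypothetical stand-ins for the library methods.

```python
import math

import numpy as np

BBOX_XFORM_CLIP = math.log(1000.0 / 16)  # assumed clamp for dw/dh before exp()


def _widths_centers(boxes):
    # Boxes are (x1, y1, x2, y2) with inclusive pixel coordinates, hence the +1.
    w = boxes[:, 2] - boxes[:, 0] + 1
    h = boxes[:, 3] - boxes[:, 1] + 1
    return w, h, boxes[:, 0] + 0.5 * w, boxes[:, 1] + 0.5 * h


def encode(gt, anchors, weights=(1.0, 1.0, 1.0, 1.0)):
    """Regression targets (dx, dy, dw, dh) that move each anchor onto its gt box."""
    wx, wy, ww, wh = weights
    aw, ah, ax, ay = _widths_centers(anchors)
    gw, gh, gx, gy = _widths_centers(gt)
    return np.stack([wx * (gx - ax) / aw, wy * (gy - ay) / ah,
                     ww * np.log(gw / aw), wh * np.log(gh / ah)], axis=1)


def decode(codes, anchors, weights=(1.0, 1.0, 1.0, 1.0)):
    """Inverse of encode: apply predicted offsets to anchors to get boxes."""
    wx, wy, ww, wh = weights
    aw, ah, ax, ay = _widths_centers(anchors)
    dx, dy = codes[:, 0] / wx, codes[:, 1] / wy
    # Clamp the log-scale offsets so exp() cannot blow up.
    dw = np.minimum(codes[:, 2] / ww, BBOX_XFORM_CLIP)
    dh = np.minimum(codes[:, 3] / wh, BBOX_XFORM_CLIP)
    cx, cy = dx * aw + ax, dy * ah + ay
    w, h = np.exp(dw) * aw, np.exp(dh) * ah
    return np.stack([cx - 0.5 * w, cy - 0.5 * h,
                     cx + 0.5 * w - 1, cy + 0.5 * h - 1], axis=1)


anchors = np.array([[0.0, 0.0, 15.0, 15.0], [10.0, 20.0, 41.0, 35.0]])
gt = np.array([[2.0, 3.0, 19.0, 14.0], [8.0, 18.0, 45.0, 40.0]])
codes = encode(gt, anchors)
print(np.allclose(decode(codes, anchors), gt))  # True
```

Encoding offsets relative to the anchor's size keeps the regression targets scale-invariant, which is why one set of head weights can serve anchors of very different sizes.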

roi_heads.py

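This is the final output stage: the ROI heads take the RPN proposals and output the final boxes with class labels and scores, plus masks (and keypoints) when those heads are enabled.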

```python
# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved.
import torch

from .box_head.box_head import build_roi_box_head
from .mask_head.mask_head import build_roi_mask_head
from .keypoint_head.keypoint_head import build_roi_keypoint_head


class CombinedROIHeads(torch.nn.ModuleDict):
    """
    Combines a set of individual heads (for box prediction or masks) into a single
    head.
    """

    def __init__(self, cfg, heads):
        super(CombinedROIHeads, self).__init__(heads)
        self.cfg = cfg.clone()
        if cfg.MODEL.MASK_ON and cfg.MODEL.ROI_MASK_HEAD.SHARE_BOX_FEATURE_EXTRACTOR:
            self.mask.feature_extractor = self.box.feature_extractor
        if cfg.MODEL.KEYPOINT_ON and cfg.MODEL.ROI_KEYPOINT_HEAD.SHARE_BOX_FEATURE_EXTRACTOR:
            self.keypoint.feature_extractor = self.box.feature_extractor

    def forward(self, features, proposals, targets=None):
        losses = {}
        # TODO rename x to roi_box_features, if it doesn't increase memory consumption
        x, detections, loss_box = self.box(features, proposals, targets)
        losses.update(loss_box)
        if self.cfg.MODEL.MASK_ON:
            mask_features = features
            # optimization: during training, if we share the feature extractor between
            # the box and the mask heads, then we can reuse the features already computed
            if (
                self.training
                and self.cfg.MODEL.ROI_MASK_HEAD.SHARE_BOX_FEATURE_EXTRACTOR
            ):
                mask_features = x
            # During training, self.box() will return the unaltered proposals as "detections"
            # this makes the API consistent during training and testing
            x, detections, loss_mask = self.mask(mask_features, detections, targets)
            losses.update(loss_mask)

        if self.cfg.MODEL.KEYPOINT_ON:
            keypoint_features = features
            # optimization: during training, if we share the feature extractor between
            # the box and the keypoint heads, then we can reuse the features already computed
            if (
                self.training
                and self.cfg.MODEL.ROI_KEYPOINT_HEAD.SHARE_BOX_FEATURE_EXTRACTOR
            ):
                keypoint_features = x
            # During training, self.box() will return the unaltered proposals as "detections"
            # this makes the API consistent during training and testing
            x, detections, loss_keypoint = self.keypoint(keypoint_features, detections, targets)
            losses.update(loss_keypoint)
        return x, detections, losses


def build_roi_heads(cfg, in_channels):
    # individually create the heads, that will be combined together
    # afterwards
    roi_heads = []
    if cfg.MODEL.RETINANET_ON:
        return []

    if not cfg.MODEL.RPN_ONLY:
        roi_heads.append(("box", build_roi_box_head(cfg, in_channels)))
    if cfg.MODEL.MASK_ON:
        roi_heads.append(("mask", build_roi_mask_head(cfg, in_channels)))
    if cfg.MODEL.KEYPOINT_ON:
        roi_heads.append(("keypoint", build_roi_keypoint_head(cfg, in_channels)))

    # combine individual heads in a single module
    if roi_heads:
        roi_heads = CombinedROIHeads(cfg, roi_heads)

    return roi_heads
```

rpn/loss.py

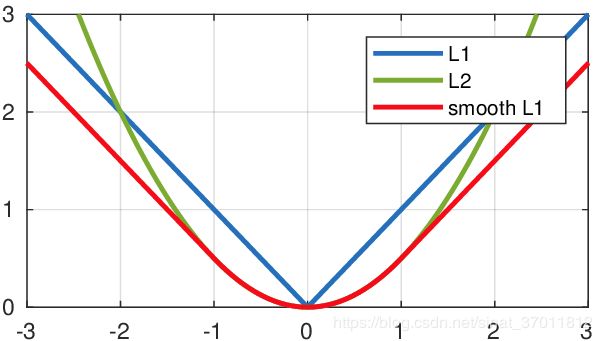

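This file computes the RPN loss. It first finds the matching target for each anchor, then sets the label of background anchors to 0, object anchors to 1, and discarded anchors to -1 (anchors that fall outside the image or whose IoU lies between the two thresholds). After sampling a balanced batch of positive and negative anchors, it computes the box regression loss with smooth L1 (on positive anchors only) and the objectness loss with binary cross-entropy.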
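The labelling step can be reproduced on a handful of boxes. A NumPy sketch of IoU matching with two thresholds plus the "low-quality match" rule (every ground-truth box keeps its best anchor even if that IoU is below the foreground threshold), followed by the 1 / 0 / -1 labels. It assumes thresholds of 0.7 and 0.3 as illustrative values for `FG_IOU_THRESHOLD` / `BG_IOU_THRESHOLD` and the `+1` pixel convention for box areas. `box_iou`, `match` and `rpn_labels` are hypothetical helpers modelled on `Matcher` and `prepare_targets`.

```python
import numpy as np

BELOW_LOW_THRESHOLD, BETWEEN_THRESHOLDS = -1, -2


def box_iou(a, b):
    """Pairwise IoU between (M, 4) and (N, 4) boxes in (x1, y1, x2, y2), +1 pixel convention."""
    area_a = (a[:, 2] - a[:, 0] + 1) * (a[:, 3] - a[:, 1] + 1)
    area_b = (b[:, 2] - b[:, 0] + 1) * (b[:, 3] - b[:, 1] + 1)
    lt = np.maximum(a[:, None, :2], b[None, :, :2])
    rb = np.minimum(a[:, None, 2:], b[None, :, 2:])
    wh = np.clip(rb - lt + 1, 0, None)
    inter = wh[..., 0] * wh[..., 1]
    return inter / (area_a[:, None] + area_b[None, :] - inter)


def match(iou, high=0.7, low=0.3, allow_low_quality_matches=True):
    """iou: (num_gt, num_anchors). Returns the matched gt index per anchor, or -1 / -2."""
    best_iou = iou.max(axis=0)
    matches = iou.argmax(axis=0)
    original = matches.copy()
    matches[best_iou < low] = BELOW_LOW_THRESHOLD
    matches[(best_iou >= low) & (best_iou < high)] = BETWEEN_THRESHOLDS
    if allow_low_quality_matches:
        # For every gt, restore the anchor(s) with its highest IoU, even below `high`.
        _, anchor_idx = np.nonzero(iou == iou.max(axis=1, keepdims=True))
        matches[anchor_idx] = original[anchor_idx]
    return matches


def rpn_labels(matches, visible):
    """1 = object, 0 = background, -1 = ignored (outside the image or between thresholds)."""
    labels = (matches >= 0).astype(np.float32)
    labels[matches == BELOW_LOW_THRESHOLD] = 0
    labels[~visible] = -1
    labels[matches == BETWEEN_THRESHOLDS] = -1
    return labels


gt = np.array([[0, 0, 9, 9], [20, 20, 34, 34]], dtype=float)
anchors = np.array([[0, 0, 9, 9], [0, 0, 9, 4], [20, 20, 29, 29],
                    [5, 0, 14, 9], [50, 50, 59, 59]], dtype=float)
iou = box_iou(gt, anchors)
matches = match(iou)
labels = rpn_labels(matches, np.ones(len(anchors), dtype=bool))
print(matches.tolist())  # [0, -2, 1, -2, -1]
print(labels.tolist())   # [1.0, -1.0, 1.0, -1.0, 0.0]
```

Anchor 2 only reaches IoU 4/9 with the second box, so it would be ignored, but the low-quality rule keeps it as that box's positive. Without the rule, a ground-truth box with no well-aligned anchor would get no positive anchor at all.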

```python
# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved.
"""
This file contains specific functions for computing losses on the RPN
"""

import torch
from torch.nn import functional as F

from .utils import concat_box_prediction_layers

from ..balanced_positive_negative_sampler import BalancedPositiveNegativeSampler
from ..utils import cat

from maskrcnn_benchmark.layers import smooth_l1_loss
from maskrcnn_benchmark.modeling.matcher import Matcher
from maskrcnn_benchmark.structures.boxlist_ops import boxlist_iou
from maskrcnn_benchmark.structures.boxlist_ops import cat_boxlist


class RPNLossComputation(object):
    """
    This class computes the RPN loss.
    """

    def __init__(self, proposal_matcher, fg_bg_sampler, box_coder,
                 generate_labels_func):
        """
        Arguments:
            proposal_matcher (Matcher)
            fg_bg_sampler (BalancedPositiveNegativeSampler)
            box_coder (BoxCoder)
            generate_labels_func (callable): turns matched targets into labels
        """
        # self.target_preparator = target_preparator
        self.proposal_matcher = proposal_matcher
        self.fg_bg_sampler = fg_bg_sampler
        self.box_coder = box_coder
        self.copied_fields = []
        self.generate_labels_func = generate_labels_func
        self.discard_cases = ['not_visibility', 'between_thresholds']

    def match_targets_to_anchors(self, anchor, target, copied_fields=[]):
        match_quality_matrix = boxlist_iou(target, anchor)
        matched_idxs = self.proposal_matcher(match_quality_matrix)
        # RPN doesn't need any fields from target
        # for creating the labels, so clear them all
        target = target.copy_with_fields(copied_fields)
        # get the targets corresponding GT for each anchor
        # NB: need to clamp the indices because we can have a single
        # GT in the image, and matched_idxs can be -2, which goes
        # out of bounds
        matched_targets = target[matched_idxs.clamp(min=0)]
        matched_targets.add_field("matched_idxs", matched_idxs)
        return matched_targets

    def prepare_targets(self, anchors, targets):
        labels = []
        regression_targets = []
        for anchors_per_image, targets_per_image in zip(anchors, targets):
            matched_targets = self.match_targets_to_anchors(
                anchors_per_image, targets_per_image, self.copied_fields
            )

            matched_idxs = matched_targets.get_field("matched_idxs")
            labels_per_image = self.generate_labels_func(matched_targets)
            labels_per_image = labels_per_image.to(dtype=torch.float32)

            # Background (negative examples)
            bg_indices = matched_idxs == Matcher.BELOW_LOW_THRESHOLD
            labels_per_image[bg_indices] = 0

            # discard anchors that go out of the boundaries of the image
            if "not_visibility" in self.discard_cases:
                labels_per_image[~anchors_per_image.get_field("visibility")] = -1

            # discard indices that are between thresholds
            if "between_thresholds" in self.discard_cases:
                inds_to_discard = matched_idxs == Matcher.BETWEEN_THRESHOLDS
                labels_per_image[inds_to_discard] = -1

            # compute regression targets
            regression_targets_per_image = self.box_coder.encode(
                matched_targets.bbox, anchors_per_image.bbox
            )

            labels.append(labels_per_image)
            regression_targets.append(regression_targets_per_image)

        return labels, regression_targets

    def __call__(self, anchors, objectness, box_regression, targets):
        """
        Arguments:
            anchors (list[BoxList])
            objectness (list[Tensor])
            box_regression (list[Tensor])
            targets (list[BoxList])

        Returns:
            objectness_loss (Tensor)
            box_loss (Tensor)
        """
        anchors = [cat_boxlist(anchors_per_image) for anchors_per_image in anchors]
        labels, regression_targets = self.prepare_targets(anchors, targets)
        sampled_pos_inds, sampled_neg_inds = self.fg_bg_sampler(labels)
        sampled_pos_inds = torch.nonzero(torch.cat(sampled_pos_inds, dim=0)).squeeze(1)
        sampled_neg_inds = torch.nonzero(torch.cat(sampled_neg_inds, dim=0)).squeeze(1)

        sampled_inds = torch.cat([sampled_pos_inds, sampled_neg_inds], dim=0)

        objectness, box_regression = concat_box_prediction_layers(objectness, box_regression)

        objectness = objectness.squeeze()

        labels = torch.cat(labels, dim=0)
        regression_targets = torch.cat(regression_targets, dim=0)

        box_loss = smooth_l1_loss(
            box_regression[sampled_pos_inds],
            regression_targets[sampled_pos_inds],
            beta=1.0 / 9,
            size_average=False,
        ) / (sampled_inds.numel())

        objectness_loss = F.binary_cross_entropy_with_logits(
            objectness[sampled_inds], labels[sampled_inds]
        )

        return objectness_loss, box_loss


# This function should be overwritten in RetinaNet
def generate_rpn_labels(matched_targets):
    matched_idxs = matched_targets.get_field("matched_idxs")
    labels_per_image = matched_idxs >= 0
    return labels_per_image


def make_rpn_loss_evaluator(cfg, box_coder):
    matcher = Matcher(
        cfg.MODEL.RPN.FG_IOU_THRESHOLD,
        cfg.MODEL.RPN.BG_IOU_THRESHOLD,
        allow_low_quality_matches=True,
    )

    fg_bg_sampler = BalancedPositiveNegativeSampler(
        cfg.MODEL.RPN.BATCH_SIZE_PER_IMAGE, cfg.MODEL.RPN.POSITIVE_FRACTION
    )

    loss_evaluator = RPNLossComputation(
        matcher,
        fg_bg_sampler,
        box_coder,
        generate_rpn_labels
    )
    return loss_evaluator
```
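The end of `RPNLossComputation.__call__` combines two terms. The first is smooth L1 (with `beta=1/9`) on the box offsets of positive anchors only, summed and divided by the number of all sampled anchors. The second is binary cross-entropy on the objectness logits of the sampled positives and negatives. A NumPy sketch of that arithmetic, assuming the piecewise smooth L1 form used below (0.5·d²/β when d < β, d − 0.5·β otherwise), which is my understanding of maskrcnn-benchmark's `smooth_l1_loss`. The balanced sampler is replaced by fixed, hypothetical index arrays.

```python
import numpy as np


def smooth_l1(pred, target, beta=1.0 / 9):
    """Elementwise smooth L1 (quadratic below beta, linear above), summed."""
    diff = np.abs(pred - target)
    loss = np.where(diff < beta, 0.5 * diff ** 2 / beta, diff - 0.5 * beta)
    return loss.sum()


def bce_with_logits(logits, labels):
    """Mean binary cross-entropy on raw logits, in the numerically stable form."""
    return np.mean(np.maximum(logits, 0) - logits * labels
                   + np.log1p(np.exp(-np.abs(logits))))


def rpn_loss(objectness, box_regression, labels, regression_targets, pos_inds, neg_inds):
    sampled = np.concatenate([pos_inds, neg_inds])
    # Box loss: positives only, but normalised by ALL sampled anchors.
    box_loss = smooth_l1(box_regression[pos_inds], regression_targets[pos_inds]) / sampled.size
    objectness_loss = bce_with_logits(objectness[sampled], labels[sampled])
    return objectness_loss, box_loss


objectness = np.array([2.0, -1.0, 0.0, 3.0])
labels = np.array([1.0, 0.0, 0.0, -1.0])  # anchor 3 was discarded, so it is never sampled
box_regression = np.zeros((4, 4))
regression_targets = np.array([[0.05, 0.5, 0.0, 0.0]] + [[9.0, 9.0, 9.0, 9.0]] * 3)
pos_inds, neg_inds = np.array([0]), np.array([1, 2])
obj_loss, box_loss = rpn_loss(objectness, box_regression, labels,
                              regression_targets, pos_inds, neg_inds)
print(obj_loss, box_loss)
```

The small `beta` makes the loss behave almost like L1 except very close to zero. Dividing by all sampled anchors rather than only the positives keeps the box term on the same per-anchor scale as the objectness term.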

mask_head/loss.py

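The mask head loss is computed as follows:

Match targets to proposals: pair each RPN proposal with the ground-truth target that has the highest IoU with it, giving `matched_targets`. Proposals whose best IoU falls below the background threshold are labeled as background.

Prepare targets: using the matched indices stored in `matched_targets`, select the non-background (positive) entries from the category labels, the proposals and the segmentation masks. Then crop each positive ground-truth mask to its positive proposal to obtain the mask targets used in the loss.

The loss is computed only for objects: only the positive proposals above contribute, and for each one only the mask predicted for its ground-truth class.

Note that the masks used to compute the mask loss here are 28×28 (the `discretization_size`, which comes from `cfg.MODEL.ROI_MASK_HEAD.RESOLUTION`).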
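The key step is `mask_logits[positive_inds, labels_pos]`. The mask head predicts one M×M mask per class for every proposal, but only the channel of the proposal's ground-truth class enters the binary cross-entropy, so classes do not compete for pixels. A NumPy sketch of that selection, assuming a single image, M = 28 and four classes (both illustrative), with the matched and projected targets already prepared. `mask_loss` is a hypothetical stand-in for `MaskRCNNLossComputation.__call__`.

```python
import numpy as np


def bce_with_logits(x, z):
    """Mean binary cross-entropy on logits, in the numerically stable form."""
    return np.mean(np.maximum(x, 0) - x * z + np.log1p(np.exp(-np.abs(x))))


def mask_loss(mask_logits, labels, mask_targets):
    """mask_logits: (R, num_classes, M, M); labels: (R,), 0 = background;
    mask_targets: (P, M, M) binary targets for the P positive proposals, in order."""
    if mask_targets.size == 0:
        return 0.0  # no positive proposal (the library returns mask_logits.sum() * 0)
    positive_inds = np.nonzero(labels > 0)[0]
    # Keep only the channel of each positive proposal's ground-truth class.
    selected = mask_logits[positive_inds, labels[positive_inds]]  # (P, M, M)
    return bce_with_logits(selected, mask_targets)


rng = np.random.default_rng(0)
M, num_classes = 28, 4
labels = np.array([2, 0, 1])  # proposal 1 is background
mask_logits = rng.standard_normal((3, num_classes, M, M))
mask_targets = (rng.random((2, M, M)) > 0.5).astype(np.float64)
loss = mask_loss(mask_logits, labels, mask_targets)
print(loss)
```

Changing the logits of any other class channel, or of the background proposal, leaves `loss` unchanged.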

```python
# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved.
import torch
from torch.nn import functional as F

from maskrcnn_benchmark.layers import smooth_l1_loss
from maskrcnn_benchmark.modeling.matcher import Matcher
from maskrcnn_benchmark.structures.boxlist_ops import boxlist_iou
from maskrcnn_benchmark.modeling.utils import cat


def project_masks_on_boxes(segmentation_masks, proposals, discretization_size):
    """
    Given segmentation masks and the bounding boxes corresponding
    to the location of the masks in the image, this function
    crops and resizes the masks in the position defined by the
    boxes. This prepares the masks for them to be fed to the
    loss computation as the targets.

    Arguments:
        segmentation_masks: an instance of SegmentationMask
        proposals: an instance of BoxList
        discretization_size (int): side length M of the M x M mask targets
    """
    masks = []
    M = discretization_size
    device = proposals.bbox.device
    proposals = proposals.convert("xyxy")
    assert segmentation_masks.size == proposals.size, "{}, {}".format(
        segmentation_masks, proposals
    )

    # FIXME: CPU computation bottleneck, this should be parallelized
    proposals = proposals.bbox.to(torch.device("cpu"))
    for segmentation_mask, proposal in zip(segmentation_masks, proposals):
        # crop the masks, resize them to the desired resolution and
        # then convert them to the tensor representation.
        cropped_mask = segmentation_mask.crop(proposal)
        scaled_mask = cropped_mask.resize((M, M))
        mask = scaled_mask.get_mask_tensor()
        masks.append(mask)
    if len(masks) == 0:
        return torch.empty(0, dtype=torch.float32, device=device)
    return torch.stack(masks, dim=0).to(device, dtype=torch.float32)


class MaskRCNNLossComputation(object):
    def __init__(self, proposal_matcher, discretization_size):
        """
        Arguments:
            proposal_matcher (Matcher)
            discretization_size (int)
        """
        self.proposal_matcher = proposal_matcher
        self.discretization_size = discretization_size

    def match_targets_to_proposals(self, proposal, target):
        match_quality_matrix = boxlist_iou(target, proposal)
        matched_idxs = self.proposal_matcher(match_quality_matrix)
        # Mask RCNN needs "labels" and "masks" fields for creating the targets
        target = target.copy_with_fields(["labels", "masks"])
        # get the targets corresponding GT for each proposal
        # NB: need to clamp the indices because we can have a single
        # GT in the image, and matched_idxs can be -2, which goes
        # out of bounds
        matched_targets = target[matched_idxs.clamp(min=0)]
        matched_targets.add_field("matched_idxs", matched_idxs)
        return matched_targets

    def prepare_targets(self, proposals, targets):
        labels = []
        masks = []
        for proposals_per_image, targets_per_image in zip(proposals, targets):
            matched_targets = self.match_targets_to_proposals(
                proposals_per_image, targets_per_image
            )
            matched_idxs = matched_targets.get_field("matched_idxs")

            labels_per_image = matched_targets.get_field("labels")
            labels_per_image = labels_per_image.to(dtype=torch.int64)

            # this can probably be removed, but is left here for clarity
            # and completeness
            neg_inds = matched_idxs == Matcher.BELOW_LOW_THRESHOLD
            labels_per_image[neg_inds] = 0

            # mask scores are only computed on positive samples
            positive_inds = torch.nonzero(labels_per_image > 0).squeeze(1)

            segmentation_masks = matched_targets.get_field("masks")
            segmentation_masks = segmentation_masks[positive_inds]

            positive_proposals = proposals_per_image[positive_inds]

            masks_per_image = project_masks_on_boxes(
                segmentation_masks, positive_proposals, self.discretization_size
            )

            labels.append(labels_per_image)
            masks.append(masks_per_image)

        return labels, masks

    def __call__(self, proposals, mask_logits, targets):
        """
        Arguments:
            proposals (list[BoxList])
            mask_logits (Tensor)
            targets (list[BoxList])

        Return:
            mask_loss (Tensor): scalar tensor containing the loss
        """
        labels, mask_targets = self.prepare_targets(proposals, targets)

        labels = cat(labels, dim=0)
        mask_targets = cat(mask_targets, dim=0)

        positive_inds = torch.nonzero(labels > 0).squeeze(1)
        labels_pos = labels[positive_inds]

        # torch.mean (in binary_cross_entropy_with_logits) doesn't
        # accept empty tensors, so handle it separately
        if mask_targets.numel() == 0:
            return mask_logits.sum() * 0

        mask_loss = F.binary_cross_entropy_with_logits(
            mask_logits[positive_inds, labels_pos], mask_targets
        )
        return mask_loss


def make_roi_mask_loss_evaluator(cfg):
    matcher = Matcher(
        cfg.MODEL.ROI_HEADS.FG_IOU_THRESHOLD,
        cfg.MODEL.ROI_HEADS.BG_IOU_THRESHOLD,
        allow_low_quality_matches=False,
    )

    loss_evaluator = MaskRCNNLossComputation(
        matcher, cfg.MODEL.ROI_MASK_HEAD.RESOLUTION
    )

    return loss_evaluator
```
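`project_masks_on_boxes` crops each positive ground-truth mask to its proposal box and resizes the crop to `discretization_size` × `discretization_size`, so the target is expressed in the proposal's frame rather than the image's. The library does this on its `SegmentationMask` structure (e.g. polygons). The NumPy sketch below instead assumes a dense binary image mask and nearest-neighbour sampling at each output cell centre, so it only approximates the real resampling. `project_mask_on_box` is a hypothetical helper.

```python
import numpy as np


def project_mask_on_box(mask, box, size=28):
    """Crop a full-image binary mask to box = (x1, y1, x2, y2) and resize it to size x size.

    Uses nearest-neighbour sampling at the centre of each output cell (a simplification).
    """
    x1, y1, x2, y2 = box
    h, w = mask.shape
    # Centres of the size x size output cells, mapped back into image coordinates.
    ys = y1 + (np.arange(size) + 0.5) * (y2 - y1) / size
    xs = x1 + (np.arange(size) + 0.5) * (x2 - x1) / size
    rows = np.clip(np.floor(ys).astype(int), 0, h - 1)
    cols = np.clip(np.floor(xs).astype(int), 0, w - 1)
    return mask[np.ix_(rows, cols)].astype(np.float32)


mask = np.zeros((100, 100))
mask[20:60, 20:60] = 1  # object occupies rows and columns 20..59
full = project_mask_on_box(mask, (20, 20, 60, 60))  # proposal fits the object
half = project_mask_on_box(mask, (40, 20, 80, 60))  # proposal shifted right by 20 px
print(full.shape, full.mean(), half.mean())
```

A proposal shifted half a box to the right yields a target whose left half is foreground and right half is background. The mask head is therefore trained to predict the object's extent relative to the proposal it is given.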