Linux Network Namespace: Introduction, Usage, and Detailed Connectivity Examples

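- 1. Introduction to Network Namespace

- 1.1 Basic concepts

- 1.2 Command help for Network Namespace

- 1.3 Creating a Network Namespace and related commands

- 1.3.1 About ip netns exec

- 1.3.2 Bringing up the lo interface in a new namespace

- 2. Communication between Network Namespaces

- 2.1 Connecting network namespaces with a veth pair

- 2.1.1 Create a new namespace for the experiment

- 2.1.2 Create a veth pair

- 2.1.2.1 Create a veth pair with the default names

- 2.1.2.2 Create a veth pair with specified names

- 2.1.3 Attach the veth pair to the namespaces

- 2.1.4 Assign IP addresses to the veth interfaces in the namespaces and bring them up

- 2.1.5 Ping between the interface IP addresses of the two namespaces: reachable

- 2.2 Connecting network namespaces with a bridge

- 2.2.1 Topology

- 2.2.2 Create 3 veth pairs

- 2.2.3 Check ip link

- 2.2.4 Create 3 new network namespaces

- 2.2.5 Create a new bridge interface and bring it up

- 2.2.6 Attach the 3 veth pairs to the Network Namespaces and the bridge

- 2.2.6.1 First veth pair

- 2.2.6.2 Second veth pair

- 2.2.6.3 Third veth pair

- 2.2.7 Ping between the network namespaces: reachable

- 2.2.8 Assign an IP to the bridge interface; every network namespace can reach it

- 2.2.9 Let a network namespace access the network the host is connected to

- 2.2.9.1 Configure the default route in network namespace tang3

- 2.2.9.2 Configure forwarding and SNAT on the host

- 2.2.9.3 Access the 172.16.141.X network from network namespace tang3

- 2.2.10 Other notes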

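1. Introduction to Network Namespace

1.1 Basic concepts

Network Namespace is a key building block of network virtualization. It creates multiple isolated network spaces, each with its own network stack. Whether the workload is a virtual machine or a container, it runs as if it were on its own independent network.

Network Namespace is a feature of the Linux kernel, and the ip command can perform all of the related operations. The ip command comes from the iproute2 package (packaged as iproute on this system), which is usually installed by default.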

[root@Tang ~]# yum info iproute

Installed Packages

Name : iproute

Arch : x86_64

Version : 4.11.0

Release : 14.el7

Size : 1.7 M

Repo : installed

From repo : anaconda

Summary : Advanced IP routing and network device configuration tools

URL : http://kernel.org/pub/linux/utils/net/iproute2/

License : GPLv2+ and Public Domain

Description : The iproute package contains networking utilities (ip and rtmon, for example)

: which are designed to use the advanced networking capabilities of the Linux

: kernel.

1.2 Command help for Network Namespace

The ip command manages many things; everything related to Network Namespace lives under the ip netns subcommand. Run ip netns help to see the help for all of its operations.

[root@Tang ~]# ip netns help

Usage: ip netns list

ip netns add NAME

ip netns set NAME NETNSID

ip [-all] netns delete [NAME]

ip netns identify [PID]

ip netns pids NAME

ip [-all] netns exec [NAME] cmd ...

ip netns monitor

ip netns list-id

1.3 Creating a Network Namespace and related commands

Add a network namespace with the following commands:

[root@Tang ~]# ip netns add neo

[root@Tang ~]# ip netns list

neo

A Network Namespace created by ip netns appears under /var/run/netns/. To manage a Network Namespace that was not created by ip netns, create a link in this directory that points to the file of that Network Namespace.

[root@Tang ~]# ll /var/run/netns/

total 0

-r--r--r--. 1 root root 0 Nov 19 13:46 neo
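The link described above can be sketched as follows. This is a minimal illustration, assuming the namespace belongs to a running process and is therefore reachable through /proc/<PID>/ns/net. The function name netns_link_cmds, the PID 1234, and the name demo are hypothetical. The function only prints the commands so they can be reviewed before being run as root.

```shell
#!/bin/sh
# Print (do not run) the commands that expose the network namespace of a
# running process to `ip netns`. PID and NAME are caller-supplied examples.
netns_link_cmds() {
    pid=$1
    name=$2
    echo "mkdir -p /var/run/netns"
    echo "ln -sf /proc/${pid}/ns/net /var/run/netns/${name}"
}

# Example with a hypothetical PID 1234 and namespace name "demo".
netns_link_cmds 1234 demo
```

After reviewing the output, it can be piped to sh as root; the namespace should then show up in ip netns list under the chosen name.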

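Now that we have a Network Namespace of our own, let's look at what it contains.

Each Network Namespace has its own network interfaces, routing table, ARP table, iptables rules, and other network-related resources.

1.3.1 About ip netns exec

The ip command provides the ip netns exec subcommand, which runs a command inside the given Network Namespace. For example, the following command lists the addresses inside network namespace neo: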

[root@Tang ~]# ip netns exec neo ip addr

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

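If you start bash through ip netns exec NAME, every subsequent command runs inside that Network Namespace. With this approach:

- Commands in the same namespace no longer need the ip netns exec NAME prefix each time

- It is hard to tell which shell you are currently in, which is easy to confuse (use exit to leave)

- Changing the bash prompt makes the different shells distinguishable

[root@Tang ~]# ip netns exec neo bash # enter a bash inside namespace neo

[root@Tang ~]# ip addr list # commands from here on run inside this namespace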

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

[root@Tang ~]# exit # leave the bash of this namespace

exit

[root@Tang ~]# ip addr list

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether e4:3a:6e:0a:9b:88 brd ff:ff:ff:ff:ff:ff

inet 172.16.141.252/24 brd 172.16.141.255 scope global noprefixroute enp1s0

[root@Tang ~]# ip netns exec neo /bin/bash --rcfile <(echo "PS1=\"namespace neo> \"")

namespace neo> ip addr list # the shell prompt has changed

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

namespace neo> exit # the prompt is lost on exit and must be set again on re-entry

exit

[root@Tang ~]# ip netns exec neo bash

[root@Tang ~]# ip addr list

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

[root@Tang ~]# exit

exit

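1.3.2 Bringing up the lo interface in a new namespace

Every namespace is created with an lo interface. It plays the same role as the lo seen by default on a Linux system: loopback communication. It starts in the DOWN state, so bring it up if you want it to work.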

[root@Tang ~]# ip netns exec neo ip link set lo up

[root@Tang ~]# ip netns exec neo ip addr list

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2. Communication between Network Namespaces

By default, a network namespace cannot communicate with the host network or with other network namespaces.

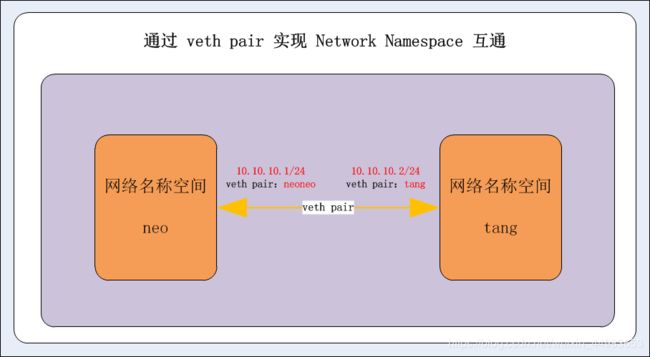

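2.1 Connecting network namespaces with a veth pair

Separate network namespaces give you network isolation, but they are of little practical use if they cannot communicate at all. To connect two of them, Linux provides the veth pair. Think of a veth pair as a bidirectional pipe: network data sent into one end is received directly at the other. Alternatively, picture two namespaces joined directly by a special virtual NIC so that they can talk to each other.

2.1.1 Create a new namespace for the experiment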

[root@Tang ~]# ip netns add tang

[root@Tang ~]# ip netns list

tang

neo

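2.1.2 Create a veth pair

Use ip link add type veth to create a veth pair. Keep in mind that the two ends of a veth pair cannot exist separately: deleting one end automatically removes the other, as shown here: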

[root@Tang ~]# ip link

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:a3:89:ef:df brd ff:ff:ff:ff:ff:ff

5: veth0@veth1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether de:ee:db:c3:26:f1 brd ff:ff:ff:ff:ff:ff

6: veth1@veth0: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether a6:23:e6:80:cc:7f brd ff:ff:ff:ff:ff:ff

[root@Tang ~]# ip link del veth0 type veth

[root@Tang ~]# ip link

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:a3:89:ef:df brd ff:ff:ff:ff:ff:ff

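When creating a veth pair, you can either specify the names or use the defaults.

If one end of the pair is DOWN, the other end detects this automatically and sets its own state to NO-CARRIER.

ip link belongs to the iproute2 command family. For a detailed explanation of the commands in this family, see the following blog post.

Linux iproute2 command family (ip / ss)

2.1.2.1 Create a veth pair with the default names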

[root@Tang ~]# ip link add type veth

[root@Tang ~]# ip link

5: veth0@veth1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether de:ee:db:c3:26:f1 brd ff:ff:ff:ff:ff:ff

6: veth1@veth0: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether a6:23:e6:80:cc:7f brd ff:ff:ff:ff:ff:ff

2.1.2.2 Create a veth pair with specified names

[root@Tang ~]# ip link add neoneo type veth peer name tangtang

[root@Tang ~]# ip link

5: veth0@veth1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether de:ee:db:c3:26:f1 brd ff:ff:ff:ff:ff:ff

6: veth1@veth0: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether a6:23:e6:80:cc:7f brd ff:ff:ff:ff:ff:ff

7: tangtang@neoneo: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether ba:f0:37:d9:a9:27 brd ff:ff:ff:ff:ff:ff

8: neoneo@tangtang: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether f6:be:88:dc:6d:79 brd ff:ff:ff:ff:ff:ff

2.1.3 Attach the veth pair to the namespaces

### Move neoneo of the veth pair into namespace neo ###

[root@Tang ~]# ip link set neoneo netns neo

### Move tangtang of the veth pair into namespace tang ###

[root@Tang ~]# ip link set tangtang netns tang

[root@Tang ~]# ip netns exec neo ip addr

8: neoneo@if7: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether f6:be:88:dc:6d:79 brd ff:ff:ff:ff:ff:ff link-netnsid 1

[root@Tang ~]# ip netns exec tang ip addr

7: tangtang@if8: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether ba:f0:37:d9:a9:27 brd ff:ff:ff:ff:ff:ff link-netnsid 0

2.1.4 Assign IP addresses to the veth interfaces in the namespaces and bring them up

### Show the interface address in the namespace ###

[root@Tang ~]# ip netns exec neo ip addr list dev neoneo

8: neoneo@if7: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether f6:be:88:dc:6d:79 brd ff:ff:ff:ff:ff:ff link-netnsid 1

### Bring up the interface in the namespace ###

[root@Tang ~]# ip netns exec neo ip link set neoneo up

### Assign an IP address to the interface in the namespace ###

[root@Tang ~]# ip netns exec neo ip addr add 10.10.10.1/24 dev neoneo

### Show the interface address in the namespace ###

[root@Tang ~]# ip netns exec neo ip addr list dev neoneo

8: neoneo@if7: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state LOWERLAYERDOWN group default qlen 1000

link/ether f6:be:88:dc:6d:79 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet 10.10.10.1/24 scope global neoneo

valid_lft forever preferred_lft forever

### Show the interface address in the namespace ###

[root@Tang ~]# ip netns exec tang ip addr list tangtang

7: tangtang@if8: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether ba:f0:37:d9:a9:27 brd ff:ff:ff:ff:ff:ff link-netnsid 0

### Bring up the interface in the namespace ###

[root@Tang ~]# ip netns exec tang ip link set tangtang up

### Assign an IP address to the interface in the namespace ###

[root@Tang ~]# ip netns exec tang ip addr add 10.10.10.2/24 dev tangtang

### Show the interface address in the namespace ###

[root@Tang ~]# ip netns exec tang ip addr list tangtang

7: tangtang@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ba:f0:37:d9:a9:27 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.10.2/24 scope global tangtang

valid_lft forever preferred_lft forever

inet6 fe80::b8f0:37ff:fed9:a927/64 scope link

valid_lft forever preferred_lft forever

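2.1.5 Ping between the interface IP addresses of the two namespaces: reachable

In each namespace, once the IP address is configured, the corresponding route is generated automatically, so packets for network 10.10.10.0/24 travel over the veth pair. Use ping to verify connectivity.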

[root@Tang ~]# ip netns exec neo ping 10.10.10.2

PING 10.10.10.2 (10.10.10.2) 56(84) bytes of data.

64 bytes from 10.10.10.2: icmp_seq=1 ttl=64 time=0.068 ms

64 bytes from 10.10.10.2: icmp_seq=2 ttl=64 time=0.074 ms

64 bytes from 10.10.10.2: icmp_seq=3 ttl=64 time=0.075 ms

^C

--- 10.10.10.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1999ms

rtt min/avg/max/mdev = 0.068/0.072/0.075/0.007 ms

[root@Tang ~]# ip netns exec tang ping 10.10.10.1

PING 10.10.10.1 (10.10.10.1) 56(84) bytes of data.

64 bytes from 10.10.10.1: icmp_seq=1 ttl=64 time=0.047 ms

64 bytes from 10.10.10.1: icmp_seq=2 ttl=64 time=0.036 ms

64 bytes from 10.10.10.1: icmp_seq=3 ttl=64 time=0.032 ms

^C

--- 10.10.10.1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1999ms

rtt min/avg/max/mdev = 0.032/0.038/0.047/0.008 ms

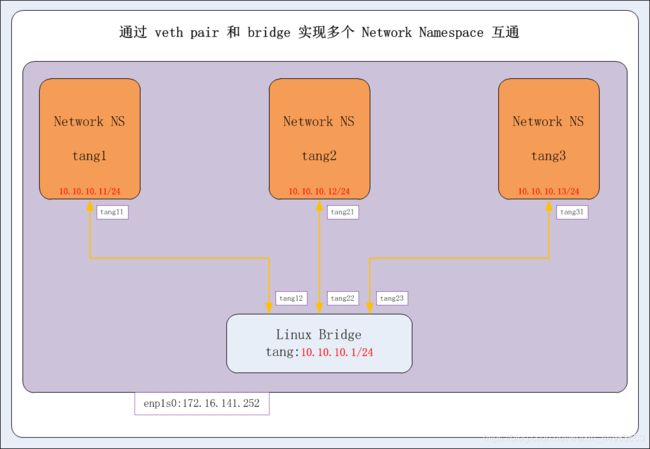
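The steps from 2.1.2 to 2.1.4 can be collected into one helper. This is a minimal sketch: the function name veth_pair_cmds is hypothetical, and it assumes both namespaces already exist. It only prints the ip commands, in the same form as those used above, so they can be checked before being executed as root.

```shell
#!/bin/sh
# Print the command sequence of 2.1.2 to 2.1.4 for one veth pair.
# Arguments: NS_A IF_A CIDR_A NS_B IF_B CIDR_B
veth_pair_cmds() {
    ns_a=$1 if_a=$2 cidr_a=$3
    ns_b=$4 if_b=$5 cidr_b=$6
    # Create the pair in the host namespace, then move one end into each namespace.
    echo "ip link add ${if_a} type veth peer name ${if_b}"
    echo "ip link set ${if_a} netns ${ns_a}"
    echo "ip link set ${if_b} netns ${ns_b}"
    # Address both ends and bring them up inside their namespaces.
    echo "ip netns exec ${ns_a} ip addr add ${cidr_a} dev ${if_a}"
    echo "ip netns exec ${ns_b} ip addr add ${cidr_b} dev ${if_b}"
    echo "ip netns exec ${ns_a} ip link set ${if_a} up"
    echo "ip netns exec ${ns_b} ip link set ${if_b} up"
}

# The values used in this article.
veth_pair_cmds neo neoneo 10.10.10.1/24 tang tangtang 10.10.10.2/24
```

Printing instead of executing keeps the helper safe to run as an ordinary user; append | sh as root to apply the commands.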

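2.2 Connecting network namespaces with a bridge

A veth pair lets two network namespaces communicate, but it falls short when several namespaces need to talk to each other. When multiple network devices have to communicate, the first things that come to mind are switches and routers.

Since only a single network is involved here, the switch function is enough. Linux provides a virtual switch (the bridge), and we still use the ip command for all of the configuration.

The bridge utility brctl is also used below to inspect the bridge; for its usage, see the following blog post.

Linux brctl explained

2.2.1 Topology

2.2.2 Create 3 veth pairs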

[root@Tang ~]# ip link add tang11 type veth peer name tang12

[root@Tang ~]# ip link add tang21 type veth peer name tang22

[root@Tang ~]# ip link add tang31 type veth peer name tang32

2.2.3 Check ip link

[root@Tang ~]# ip link | grep tang -A 1

9: tang12@tang11: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 0a:dd:50:01:7a:84 brd ff:ff:ff:ff:ff:ff

10: tang11@tang12: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 76:2f:f3:78:e5:4f brd ff:ff:ff:ff:ff:ff

11: tang22@tang21: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether ea:51:28:b4:9b:f2 brd ff:ff:ff:ff:ff:ff

12: tang21@tang22: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 2a:74:43:67:56:32 brd ff:ff:ff:ff:ff:ff

13: tang32@tang31: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether fe:cf:e8:14:1e:d2 brd ff:ff:ff:ff:ff:ff

14: tang31@tang32: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 22:57:ae:27:f2:75 brd ff:ff:ff:ff:ff:ff

2.2.4 Create 3 new network namespaces

[root@Tang ~]# ip netns add tang1

[root@Tang ~]# ip netns add tang2

[root@Tang ~]# ip netns add tang3

[root@Tang ~]# ip netns list

tang3

tang2

tang1

2.2.5 Create a new bridge interface and bring it up

[root@Tang ~]# ip link add tang type bridge

[root@Tang ~]# ip link set dev tang up

[root@Tang ~]# ip addr list | grep "tang:" -A 2

15: tang: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether b2:19:8c:58:dc:39 brd ff:ff:ff:ff:ff:ff

inet6 fe80::b019:8cff:fe58:dc39/64 scope link

2.2.6 Attach the 3 veth pairs to the Network Namespaces and the bridge

2.2.6.1 First veth pair

### Move tang11 into network namespace tang1 ###

[root@Tang ~]# ip link set dev tang11 netns tang1

[root@Tang ~]# ip netns exec tang1 ip link set dev tang11 up

[root@Tang ~]# ip netns exec tang1 ip addr add 10.10.10.11/24 dev tang11

[root@Tang ~]# ip netns exec tang1 ip addr

10: tang11@if9: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state LOWERLAYERDOWN group default qlen 1000

link/ether 76:2f:f3:78:e5:4f brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.10.11/24 scope global tang11

valid_lft forever preferred_lft forever

### Attach tang12 to bridge interface tang ###

[root@Tang ~]# ip link set dev tang12 master tang

[root@Tang ~]# ip link set dev tang12 up

[root@Tang ~]# ip link

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:a3:89:ef:df brd ff:ff:ff:ff:ff:ff

9: tang12@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master tang state UP mode DEFAULT group default qlen 1000

link/ether 0a:dd:50:01:7a:84 brd ff:ff:ff:ff:ff:ff link-netnsid 0

15: tang: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 0a:dd:50:01:7a:84 brd ff:ff:ff:ff:ff:ff

### List the interfaces currently attached to the bridges ###

[root@Tang ~]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242a389efdf no

tang 8000.0add50017a84 no tang12

2.2.6.2 Second veth pair

### Move tang21 into network namespace tang2 ###

[root@Tang ~]# ip link set dev tang21 netns tang2

[root@Tang ~]# ip netns exec tang2 ip link set dev tang21 up

[root@Tang ~]# ip netns exec tang2 ip addr add 10.10.10.12/24 dev tang21

[root@Tang ~]# ip netns exec tang2 ip addr

12: tang21@if11: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state LOWERLAYERDOWN group default qlen 1000

link/ether 2a:74:43:67:56:32 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.10.12/24 scope global tang21

valid_lft forever preferred_lft forever

### Attach tang22 to bridge interface tang ###

[root@Tang ~]# ip link set dev tang22 master tang

[root@Tang ~]# ip link set dev tang22 up

[root@Tang ~]# ip link

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:a3:89:ef:df brd ff:ff:ff:ff:ff:ff

9: tang12@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master tang state UP mode DEFAULT group default qlen 1000

link/ether 0a:dd:50:01:7a:84 brd ff:ff:ff:ff:ff:ff link-netnsid 0

11: tang22@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master tang state UP mode DEFAULT group default qlen 1000

link/ether ea:51:28:b4:9b:f2 brd ff:ff:ff:ff:ff:ff link-netnsid 1

15: tang: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 0a:dd:50:01:7a:84 brd ff:ff:ff:ff:ff:ff

### List the interfaces currently attached to the bridges ###

[root@Tang ~]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242a389efdf no

tang 8000.0add50017a84 no tang12

tang22

2.2.6.3 Third veth pair

### Move tang31 into network namespace tang3 ###

[root@Tang ~]# ip link set dev tang31 netns tang3

[root@Tang ~]# ip netns exec tang3 ip link set dev tang31 up

[root@Tang ~]# ip netns exec tang3 ip addr add 10.10.10.13/24 dev tang31

[root@Tang ~]# ip netns exec tang3 ip addr

14: tang31@if13: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state LOWERLAYERDOWN group default qlen 1000

link/ether 22:57:ae:27:f2:75 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.10.13/24 scope global tang31

valid_lft forever preferred_lft forever

### Attach tang32 to bridge interface tang ###

[root@Tang ~]# ip link set dev tang32 master tang

[root@Tang ~]# ip link set dev tang32 up

[root@Tang ~]# ip link

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:a3:89:ef:df brd ff:ff:ff:ff:ff:ff

9: tang12@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master tang state UP mode DEFAULT group default qlen 1000

link/ether 0a:dd:50:01:7a:84 brd ff:ff:ff:ff:ff:ff link-netnsid 0

11: tang22@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master tang state UP mode DEFAULT group default qlen 1000

link/ether ea:51:28:b4:9b:f2 brd ff:ff:ff:ff:ff:ff link-netnsid 1

13: tang32@if14: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master tang state UP mode DEFAULT group default qlen 1000

link/ether fe:cf:e8:14:1e:d2 brd ff:ff:ff:ff:ff:ff link-netnsid 2

15: tang: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 0a:dd:50:01:7a:84 brd ff:ff:ff:ff:ff:ff

### List the interfaces currently attached to the bridges ###

[root@Tang ~]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242a389efdf no

tang 8000.0add50017a84 no tang12

tang22

tang32
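Sections 2.2.2 to 2.2.6 repeat the same commands for each namespace, so they lend themselves to a loop. The sketch below uses a hypothetical function, bridge_ns_cmds, that follows this article's naming scheme: namespace tangN, inner interface tangN1, bridge-side interface tangN2, address 10.10.10.1N/24. It assumes a count of at most 9 so that the generated address stays valid, and it prints the commands rather than running them.

```shell
#!/bin/sh
# Print the commands that attach COUNT namespaces to one bridge.
# Arguments: BRIDGE PREFIX COUNT (COUNT is assumed to be between 1 and 9)
bridge_ns_cmds() {
    bridge=$1
    prefix=$2
    count=$3
    echo "ip link add ${bridge} type bridge"
    echo "ip link set dev ${bridge} up"
    i=1
    while [ "$i" -le "$count" ]; do
        ns="${prefix}${i}"        # e.g. tang1
        inner="${prefix}${i}1"    # end that moves into the namespace, e.g. tang11
        outer="${prefix}${i}2"    # end that stays on the host bridge, e.g. tang12
        echo "ip netns add ${ns}"
        echo "ip link add ${inner} type veth peer name ${outer}"
        echo "ip link set dev ${inner} netns ${ns}"
        echo "ip netns exec ${ns} ip link set dev ${inner} up"
        echo "ip netns exec ${ns} ip addr add 10.10.10.1${i}/24 dev ${inner}"
        echo "ip link set dev ${outer} master ${bridge}"
        echo "ip link set dev ${outer} up"
        i=$((i + 1))
    done
}

# Reproduces the layout of this article: bridge tang, namespaces tang1..tang3.
bridge_ns_cmds tang tang 3
```

The order differs slightly from the walkthrough (each namespace is created just before its veth pair), which does not change the result.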

2.2.7 Ping between the network namespaces: reachable

[root@Tang ~]# ip netns exec tang1 ping -c 1 10.10.10.12

PING 10.10.10.12 (10.10.10.12) 56(84) bytes of data.

64 bytes from 10.10.10.12: icmp_seq=1 ttl=64 time=0.080 ms

--- 10.10.10.12 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.080/0.080/0.080/0.000 ms

[root@Tang ~]# ip netns exec tang1 ping -c 1 10.10.10.13

PING 10.10.10.13 (10.10.10.13) 56(84) bytes of data.

64 bytes from 10.10.10.13: icmp_seq=1 ttl=64 time=0.049 ms

--- 10.10.10.13 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.049/0.049/0.049/0.000 ms

[root@Tang ~]# ip netns exec tang2 ping -c 1 10.10.10.11

PING 10.10.10.11 (10.10.10.11) 56(84) bytes of data.

64 bytes from 10.10.10.11: icmp_seq=1 ttl=64 time=0.046 ms

--- 10.10.10.11 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.046/0.046/0.046/0.000 ms

[root@Tang ~]# ip netns exec tang2 ping -c 1 10.10.10.13

PING 10.10.10.13 (10.10.10.13) 56(84) bytes of data.

64 bytes from 10.10.10.13: icmp_seq=1 ttl=64 time=0.061 ms

--- 10.10.10.13 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.061/0.061/0.061/0.000 ms

[root@Tang ~]# ip netns exec tang3 ping -c 1 10.10.10.11

PING 10.10.10.11 (10.10.10.11) 56(84) bytes of data.

64 bytes from 10.10.10.11: icmp_seq=1 ttl=64 time=0.057 ms

--- 10.10.10.11 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.057/0.057/0.057/0.000 ms

[root@Tang ~]# ip netns exec tang3 ping -c 1 10.10.10.12

PING 10.10.10.12 (10.10.10.12) 56(84) bytes of data.

64 bytes from 10.10.10.12: icmp_seq=1 ttl=64 time=0.048 ms

--- 10.10.10.12 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.048/0.048/0.048/0.000 ms

2.2.8 Assign an IP to the bridge interface; every network namespace can reach it

[root@Tang ~]# ip addr add 10.10.10.1/24 dev tang

[root@Tang ~]# ifconfig tang | grep tang -A 1

tang: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.10.10.1 netmask 255.255.255.0 broadcast 0.0.0.0

[root@Tang ~]# ip netns exec tang1 ping -c 1 10.10.10.1

PING 10.10.10.1 (10.10.10.1) 56(84) bytes of data.

64 bytes from 10.10.10.1: icmp_seq=1 ttl=64 time=0.083 ms

--- 10.10.10.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.083/0.083/0.083/0.000 ms

[root@Tang ~]# ip netns exec tang2 ping -c 1 10.10.10.1

PING 10.10.10.1 (10.10.10.1) 56(84) bytes of data.

64 bytes from 10.10.10.1: icmp_seq=1 ttl=64 time=0.086 ms

--- 10.10.10.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.086/0.086/0.086/0.000 ms

[root@Tang ~]# ip netns exec tang3 ping -c 1 10.10.10.1

PING 10.10.10.1 (10.10.10.1) 56(84) bytes of data.

64 bytes from 10.10.10.1: icmp_seq=1 ttl=64 time=0.086 ms

--- 10.10.10.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.086/0.086/0.086/0.000 ms

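2.2.9 Let a network namespace access the network the host is connected to

Each network namespace can be given a default route that points to the bridge interface IP address. Then configure SNAT on the host's external interface and on the bridge interface, and enable forwarding on the host.

With that in place, the network namespace can access the network the host is connected to.

The following uses network namespace tang3 as the example.

2.2.9.1 Configure the default route in network namespace tang3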

[root@Tang ~]# ip netns exec tang3 route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

10.10.10.0 0.0.0.0 255.255.255.0 U 0 0 0 tang31

[root@Tang ~]# ip netns exec tang3 route add default gw 10.10.10.1

[root@Tang ~]# ip netns exec tang3 route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.10.10.1 0.0.0.0 UG 0 0 0 tang31

10.10.10.0 0.0.0.0 255.255.255.0 U 0 0 0 tang31

2.2.9.2 Configure forwarding and SNAT on the host

[root@Tang ~]# cat /proc/sys/net/ipv4/ip_forward

1

[root@Tang ~]# iptables -t nat -I POSTROUTING -o tang -j MASQUERADE

[root@Tang ~]# iptables -t nat -I POSTROUTING -o enp1s0 -j MASQUERADE

[root@Tang ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.16.141.1 0.0.0.0 UG 100 0 0 enp1s0

10.10.10.0 0.0.0.0 255.255.255.0 U 0 0 0 tang

172.16.141.0 0.0.0.0 255.255.255.0 U 100 0 0 enp1s0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0
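The two MASQUERADE rules above rewrite everything that leaves through tang or enp1s0. A narrower variant, which is not the rule set used in this article, masquerades only traffic sourced from the namespace subnet. The sketch below uses a hypothetical function, snat_cmds, that prints that rule together with the sysctl command that turns forwarding on, for hosts where ip_forward is still 0.

```shell
#!/bin/sh
# Print the commands for forwarding plus source-restricted masquerading.
# Arguments: SUBNET (namespace network) OUT_IF (host uplink interface)
snat_cmds() {
    subnet=$1
    out_if=$2
    echo "sysctl -w net.ipv4.ip_forward=1"
    echo "iptables -t nat -A POSTROUTING -s ${subnet} -o ${out_if} -j MASQUERADE"
}

# Values from this article: namespaces on 10.10.10.0/24, uplink enp1s0.
snat_cmds 10.10.10.0/24 enp1s0
```

Restricting the rule by source address leaves the host's own outgoing traffic and other subnets untouched.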

2.2.9.3 Access the 172.16.141.X network from network namespace tang3

[root@Tang ~]# ip netns exec tang3 ping -c 1 172.16.141.1

PING 172.16.141.1 (172.16.141.1) 56(84) bytes of data.

64 bytes from 172.16.141.1: icmp_seq=1 ttl=63 time=1.61 ms

--- 172.16.141.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 1.616/1.616/1.616/0.000 ms

[root@Tang ~]# ip netns exec tang3 ping -c 1 114.114.114.114

PING 114.114.114.114 (114.114.114.114) 56(84) bytes of data.

64 bytes from 114.114.114.114: icmp_seq=1 ttl=90 time=24.5 ms

--- 114.114.114.114 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 24.577/24.577/24.577/0.000 ms

[root@Tang ~]# ip netns exec tang3 ping -c 1 www.baidu.com

PING www.a.shifen.com (61.135.169.125) 56(84) bytes of data.

64 bytes from 61.135.169.125 (61.135.169.125): icmp_seq=1 ttl=48 time=4.76 ms

--- www.a.shifen.com ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 4.764/4.764/4.764/0.000 ms

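2.2.10 Other notes

If the namespaces cannot reach each other in 2.2.7, iptables may be the cause.

When iptables processing is enabled for bridges, every packet crossing the bridge is subject to the iptables rules. For security, docker (which is installed on my system) sets the default policy of the FORWARD chain in the filter table to DROP, so packets that match no docker rule are not forwarded and the ping fails.

There are two fixes; pick one:

- Disable iptables processing for bridges, so that bridged packets are no longer affected by iptables:

# echo 0 > /proc/sys/net/bridge/bridge-nf-call-iptables

- Add an iptables rule for the bridge interface (tang in this article) so that packets passing through it can be forwarded:

# iptables -A FORWARD -i tang -j ACCEPT

I am not sure whether the first method affects docker, so the second is recommended.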
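Before choosing a fix, it can help to check whether bridge traffic is being passed to iptables at all. The sketch below uses a hypothetical function, bridge_nf_status, that reads the same /proc file as the first fix. The optional path argument exists only so the function can be tried against a test file. It prints unavailable when the file cannot be read, for example when bridge netfilter support is not loaded.

```shell
#!/bin/sh
# Report whether bridged packets are handed to iptables.
# Optional argument: path of the switch file (defaults to the real /proc entry).
bridge_nf_status() {
    f=${1:-/proc/sys/net/bridge/bridge-nf-call-iptables}
    if [ ! -r "$f" ]; then
        echo "unavailable"
        return 0
    fi
    case "$(cat "$f")" in
        1) echo "enabled" ;;
        0) echo "disabled" ;;
        *) echo "unknown" ;;
    esac
}

bridge_nf_status
```

If the result is enabled and the FORWARD policy is DROP, the iptables rule from the second fix is the less intrusive change.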