Multispectral Pedestrian Detection using Deep Fusion Convolutional Neural Networks (A Survey of Deep Learning for Multispectral Pedestrian Detection)

Most current pedestrian detectors are designed for well-lit color images, and they are likely to fail on images captured at night because objects are poorly visible. This limitation rules them out for around-the-clock applications such as self-driving cars and surveillance systems. Color and thermal image channels provide complementary visual information, so it helps to fuse the information from a visible-light camera with the information provided by a long-wavelength infrared (LWIR) camera.

We want to take advantage of both color and thermal images to detect objects with deep neural networks. This raises two questions. First, when strong neural-network detectors are involved, do color and thermal images still provide complementary information? Second, how much improvement should we expect from fusing them? To answer these questions, we can model multispectral pedestrian detection as a neural network fusion problem.

Papers [1-3] study various ConvNet fusion models that combine color and thermal images for pedestrian detection, a canonical case of the general object detection problem.

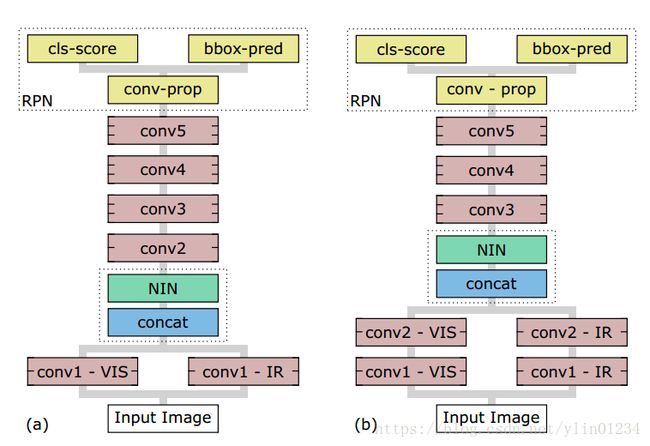

Multispectral pedestrian detectors can be divided into three categories according to the level of abstraction at which fusion takes place: pixel-level, feature-level, and decision-level (score) fusion. Feature-level fusion can be further divided into early fusion (low-level visual features), halfway fusion (middle-level visual features), and late fusion (high-level visual features).
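The fusion levels differ only in *where* the two image streams are merged. The sketch below is a toy, framework-free illustration of that idea, not the architecture of [1], [2], or [3]. It assumes that pixel-level fusion means stacking the raw color and thermal channels at the input, and that feature-level fusion means channel-wise concatenation after `k` stages of two separate branches. The names `conv_like`, `pool_like`, `run`, and `fuse` are hypothetical stand-ins for real network layers. Decision-level fusion, which merges the outputs of two complete detectors, is not covered here.

```python
from typing import Callable, List

Channel = List[float]          # one flattened feature channel
FeatureMap = List[Channel]     # a stack of channels
Stage = Callable[[FeatureMap], FeatureMap]


def conv_like(x: FeatureMap) -> FeatureMap:
    """Hypothetical stand-in for a conv block: ReLU on every value, channel count unchanged."""
    return [[max(0.0, v) for v in ch] for ch in x]


def pool_like(x: FeatureMap) -> FeatureMap:
    """Hypothetical 1-D pooling: average neighbouring pairs, halving the spatial size."""
    return [[(ch[i] + ch[i + 1]) / 2 for i in range(0, len(ch) - 1, 2)] for ch in x]


def run(stages: List[Stage], x: FeatureMap) -> FeatureMap:
    """Apply the given stages in order."""
    for stage in stages:
        x = stage(x)
    return x


def fuse(color: FeatureMap, thermal: FeatureMap, stages: List[Stage], k: int) -> FeatureMap:
    """Run stages[:k] separately per modality, concatenate channels, then run stages[k:].

    k == 0            -> pixel-level fusion (raw channels stacked at the input)
    small k           -> early feature fusion
    middle k          -> halfway fusion
    k == len(stages)  -> late fusion (a detection head would follow the merged features)
    """
    # In a real network each branch would have its own learned weights; these toy
    # stages have no weights, so both branches reuse the same functions.
    color_feat = run(stages[:k], color)
    thermal_feat = run(stages[:k], thermal)
    merged = color_feat + thermal_feat  # channel-wise concatenation
    return run(stages[k:], merged)
```

For example, with a 3-channel color input, a 1-channel thermal input, and `stages = [conv_like, pool_like, conv_like]`, every choice of `k` yields 4 output channels. Because these toy stages act on each channel independently, the fusion point does not change the numbers. In a real ConvNet, the convolutions after the merge mix color and thermal channels, which is exactly why the choice of fusion level matters.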

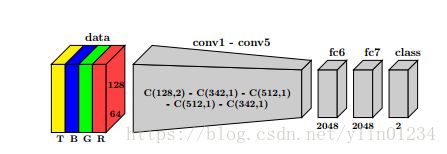

Pixel-level fusion from [1]

Early fusion from [3]

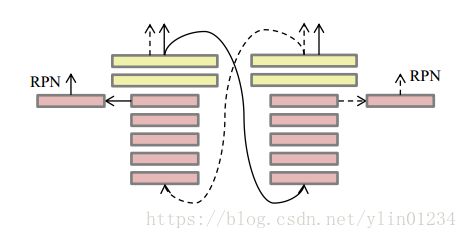

Decision-level fusion from [2]
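In decision-level fusion, two complete detectors (one per modality) each produce scored boxes, and only their outputs are combined. The sketch below shows one plausible scheme, assumed here for illustration and not necessarily the one used in [2]: greedily match color and thermal boxes by intersection over union (IoU), average the scores of matched pairs, and treat a box missing from the other modality as having score 0. The function names `iou` and `fuse_scores` and the 0.5 IoU threshold are hypothetical choices.

```python
from typing import List, Set, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[Box, float]            # (box, confidence)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def fuse_scores(color: List[Detection], thermal: List[Detection],
                thr: float = 0.5) -> List[Detection]:
    """Average the scores of matched color/thermal detections (assumed scheme)."""
    fused: List[Detection] = []
    used: Set[int] = set()
    # Visit color detections from most to least confident and match each greedily.
    for box_c, s_c in sorted(color, key=lambda d: d[1], reverse=True):
        best_j, best_iou = -1, 0.0
        for j, (box_t, _) in enumerate(thermal):
            if j in used:
                continue
            overlap = iou(box_c, box_t)
            if overlap > best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0 and best_iou >= thr:
            used.add(best_j)
            fused.append((box_c, (s_c + thermal[best_j][1]) / 2))
        else:
            fused.append((box_c, s_c / 2))  # unseen by thermal: its score counts as 0
    # Thermal-only detections, e.g. pedestrians invisible in a dark color image.
    for j, (box_t, s_t) in enumerate(thermal):
        if j not in used:
            fused.append((box_t, s_t / 2))
    return fused
```

Averaging with a zero for missing detections is a conservative choice: a pedestrian seen by only one modality is kept but down-weighted. A learned combination or a max rule would be equally reasonable alternatives.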

The experiments show that middle-level convolutional features from the color and thermal branches are more compatible for fusion than low- or high-level features: they carry some semantic meaning while still retaining fine visual details. Compared with a detector based on color images alone, the multispectral pedestrian detector produces more true detections, especially for pedestrians under poor external illumination, and it also removes some false alarms.

Another lesson worth learning is how to select training examples. [2] selects training samples only from RGB-thermal datasets, whereas [3] selects training samples from RGB datasets, thermal datasets, and RGB-thermal datasets. [3] achieves better results than [2]. Although the two papers also differ in network design, this comparison suggests how we might select our own training datasets.

Because our task differs from pedestrian detection, we must also work out the concrete construction of the ConvNet fusion method. This is the first problem we need to address at this stage.

[1] Multispectral Pedestrian Detection using Deep Fusion Convolutional Neural Networks. (ESANN, 2016)

[2] Multispectral Deep Neural Networks for Pedestrian Detection. (BMVC, 2016)

[3] Fully Convolutional Region Proposal Networks for Multispectral Person Detection. (CVPR, 2017)

[4] Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. (NIPS, 2015)

[5] Multispectral pedestrian detection: Benchmark dataset and baseline. (CVPR, 2015)