Scraping League of Legends hero data with python3 + scrapy + selenium

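Following my previous post, which scraped this hero data with nodejs+puppeteer+chromium, this post scrapes the same page. The approach is much the same, just implemented in a different language, so it can serve as a reference. Personally I find nodejs nicer for scraping, though maybe my Python is just too weak.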

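Environment and third-party packages used in this example: python3, pycharm, selenium 2.48.0 (versions 3.0+ raise errors because newer releases dropped PhantomJS; Chrome or Firefox also work, but you may need to install a separate driver), and scrapy 1.6.0.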

If you are not yet familiar with scrapy and phantomjs, these two blog posts explain them in detail:

https://www.cnblogs.com/kongzhagen/p/6549053.html

https://jiayi.space/post/scrapy-phantomjs-seleniumdong-tai-pa-chong

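I won't go into installation in detail: install scrapy first and, if errors come up, install whatever they ask for. On Windows you usually also need pywin32.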
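Before creating the project, it can help to confirm which versions are actually installed. Below is a minimal sketch using only the standard library (Python 3.8+). The helper name `installed_version` is made up for illustration, and the package list simply mirrors the environment described above.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Package names taken from the environment list above; pywin32 only matters on Windows.
    for name in ("scrapy", "selenium", "pywin32"):
        print(name, installed_version(name) or "not installed")
```

If selenium reports a 3.x or later version, the PhantomJS caveat mentioned above applies.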

Create the project:

scrapy startproject loldocument

cd loldocument

scrapy genspider hero lol.qq.com/data/info-heros.shtml

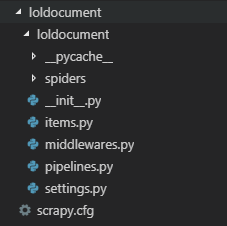

The project directory is then generated:

spiders/hero.py requests the hero list page, extracts the detail-page URL for each hero, then visits each URL in turn to collect the data we want:

# -*- coding: utf-8 -*-
import os
import re
import urllib.request

import scrapy

from loldocument.items import LoldocumentItem


class HeroSpider(scrapy.Spider):
    name = 'hero'
    allowed_domains = ['lol.qq.com']
    start_urls = ['https://lol.qq.com/data/info-heros.shtml']

    def parse(self, response):
        heros = response.xpath('//ul[@id="jSearchHeroDiv"]/li')
        # heros = [heros[0], heros[1]]
        for hero in heros:  # iterate over each <li>
            imgu = 'http:' + hero.xpath("./a/img/@src").extract_first()
            title = hero.xpath("./a/@title").extract_first()
            headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:52.0) Gecko/20100101 Firefox/52.0'}
            req = urllib.request.Request(url=imgu, headers=headers)
            path = r'F:\loldocument\hero_logo'  # directory where hero avatars are saved
            os.makedirs(path, exist_ok=True)
            file_name = os.path.join(path, title + '.jpg')
            # Download the avatar; both the HTTP response and the file are closed afterwards
            with urllib.request.urlopen(req) as res, open(file_name, 'wb') as fp:
                fp.write(res.read())

            url = 'https://lol.qq.com/data/' + hero.xpath("./a/@href").extract_first()
            request = scrapy.Request(url=url, callback=self.parse_detail)
            request.meta['PhantomJS'] = True
            request.meta['title'] = title
            yield request

    def parse_detail(self, response):
        # hero detail page
        item = LoldocumentItem()
        item['title'] = response.meta['title']
        item['DATAname'] = response.xpath('//h1[@id="DATAname"]/text()').extract_first()
        item['DATAtitle'] = response.xpath('//h2[@id="DATAtitle"]/text()').extract_first()
        item['DATAtags'] = response.xpath('//div[@id="DATAtags"]/span/text()').extract()
        infokeys = response.xpath('//dl[@id="DATAinfo"]/dt/text()').extract()
        infovalues = response.xpath('//dl[@id="DATAinfo"]/dd/i/@style').extract()
        item['DATAinfo'] = {}  # hero attributes
        for i, v in enumerate(infokeys):
            # strip the "width:" prefix so only the bar width remains
            item['DATAinfo'][v] = re.sub(r'width:', "", infovalues[i])
        yield item
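The attribute step in parse_detail pairs each attribute name with the width of its bar, read from an inline style string. Here is a small standalone sketch of that idea, not the spider's exact code: the function name `parse_attributes` and the sample values are hypothetical, and it uses `zip` plus a regex capture rather than indexing, so a missing `<dd>` cannot raise an IndexError.

```python
import re


def parse_attributes(keys, styles):
    """Pair attribute names with bar widths taken from inline style strings."""
    attributes = {}
    # zip stops at the shorter list, so mismatched lengths do not raise IndexError.
    for key, style in zip(keys, styles):
        match = re.search(r'width:\s*([^;]+)', style)
        attributes[key] = match.group(1).strip() if match else None
    return attributes


# Hypothetical values, shaped like what the two XPath queries might return.
sample_keys = ['attack', 'magic', 'defense', 'difficulty']
sample_styles = ['width:80%', 'width: 30%;', 'width:40%', 'width:40%']
print(parse_attributes(sample_keys, sample_styles))
```

A style string without a width entry yields None for that attribute instead of an unparsed string.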

items.py defines the fields to store in the item:

# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class LoldocumentItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    title = scrapy.Field()
    DATAname = scrapy.Field()
    DATAtitle = scrapy.Field()
    DATAtags = scrapy.Field()
    DATAinfo = scrapy.Field()

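Because selenium is used to fetch asynchronously loaded data, a separate downloader middleware is needed. Under /loldocument, create a new Python package containing middleware.py (the settings further down refer to it as loldocument.middlewares.middleware):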

import time

from scrapy.http import HtmlResponse
from selenium import webdriver


class JavaScriptMiddleware(object):

    def process_request(self, request, spider):
        print("PhantomJS is starting...")
        driver = webdriver.PhantomJS()  # choose which browser to use
        # driver = webdriver.Firefox()
        try:
            driver.get(request.url)
            time.sleep(1)
            # Run JavaScript to mimic a user scrolling down (sets scrollTop to 1000)
            js = "var q=document.documentElement.scrollTop=1000"
            driver.execute_script(js)
            time.sleep(1)
            body = driver.page_source
            current_url = driver.current_url
        finally:
            driver.quit()  # close the browser so PhantomJS processes do not pile up
        if 'PhantomJS' in request.meta:
            print("Visiting detail page: " + request.url)
        else:
            print("Visiting: " + request.url)
        return HtmlResponse(current_url, body=body, encoding='utf-8', request=request)
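The fixed one-second sleeps in the middleware are a guess: too short on a slow connection, wasted time on a fast one. One alternative is to poll until the content is actually there. The sketch below is a standard-library stand-in for that idea, with a hypothetical helper name `wait_until`; Selenium's own WebDriverWait provides this kind of polling and is probably the better choice in real code.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.2):
    """Poll condition() until it returns a truthy value or timeout seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError('condition not met within %.1f s' % timeout)
        time.sleep(interval)


# Hypothetical usage on the list page, after driver.get() has returned:
#   wait_until(lambda: driver.find_elements_by_xpath('//ul[@id="jSearchHeroDiv"]/li'))
```

The condition is checked at least once, so a page that is already rendered returns immediately without sleeping.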

Change three settings in settings.py:

ROBOTSTXT_OBEY = False

DOWNLOADER_MIDDLEWARES = {
    # key: path to the middleware class; value: its order
    'loldocument.middlewares.middleware.JavaScriptMiddleware': 543,
    # disable the built-in middleware
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,
}

ITEM_PIPELINES = {
    'loldocument.pipelines.LoldocumentPipeline': 100,
}

Data handling in pipelines.py:

# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

import json


class LoldocumentPipeline(object):

    def process_item(self, item, spider):
        # Append each item as one JSON line; UTF-8 keeps the Chinese text readable
        with open('hero_detail.txt', 'a', encoding='utf-8') as txt:
            line = json.dumps(dict(item), ensure_ascii=False)
            txt.write(line + '\n')
        return item  # hand the item on to any later pipeline
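To use the scraped data later, the output file has to be readable again. The sketch below assumes each item is written as one JSON object followed by a newline (the JSON Lines layout); the helper names `append_item` and `load_items` and the demo items are made up for illustration.

```python
import json
import os
import tempfile


def append_item(path, item):
    """Append one item as a single JSON line (UTF-8, non-ASCII kept readable)."""
    with open(path, 'a', encoding='utf-8') as fp:
        fp.write(json.dumps(item, ensure_ascii=False) + '\n')


def load_items(path):
    """Read back every non-empty line as one JSON object."""
    with open(path, encoding='utf-8') as fp:
        return [json.loads(line) for line in fp if line.strip()]


# Demo with made-up items written to a temporary file.
demo_path = os.path.join(tempfile.mkdtemp(), 'hero_detail.txt')
append_item(demo_path, {'title': 'hero-a', 'DATAtags': ['fighter']})
append_item(demo_path, {'title': 'hero-b', 'DATAtags': ['mage', 'support']})
print(load_items(demo_path))
```

Without the newline between records, the file becomes a run of concatenated objects that `json.loads` cannot parse in one call.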

Run scrapy crawl hero to start the crawl. The console prints details and any exceptions; add --nolog to suppress the detail output.

The resulting data file hero_detail.txt and the hero avatar directory hero_logo: