Hive 1.2.2 Environment Setup (MariaDB Edition) [Pitfalls Fixed]

2019.1.23: Added another pitfall fix.

2019.1.11: Updated with fixes for the errors I ran into.

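1. Download

For the Hive download, see the official website.

For installing MariaDB, see the linked article found via Baidu:

Installing MariaDB on CentOS 7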

2. Extract Hive 1.2.2 and rename the directory to hive-1.2.2
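The post gives no command for this step, so here is a minimal sketch. It assumes the download is named apache-hive-1.2.2-bin.tar.gz and unpacks to a top-level directory apache-hive-1.2.2-bin; `unpack_hive` is a hypothetical helper name, and the paths in the example call are only illustrative.

```shell
#!/usr/bin/env bash
# Sketch: unpack a Hive binary tarball and rename the extracted directory.
# Assumption: the archive unpacks to a directory named apache-hive-1.2.2-bin.
unpack_hive() {
  local tarball="$1"     # path to the downloaded archive
  local target_dir="$2"  # directory that will contain hive-1.2.2
  tar -xzf "$tarball" -C "$target_dir" || return 1
  mv "$target_dir/apache-hive-1.2.2-bin" "$target_dir/hive-1.2.2"
}

# Example call (adjust the paths to your own layout):
# unpack_hive ~/apache-hive-1.2.2-bin.tar.gz /usr/local/src
```

Renaming to hive-1.2.2 keeps the directory consistent with the paths used in the later steps.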

3. Edit the configuration files

cd hive-1.2.2/conf

(1) Create hive-site.xml

touch hive-site.xml

(2) Edit hive-site.xml (I'll tidy this step up when I'm in the mood). Each property name below is followed by its value:

javax.jdo.option.ConnectionURL

jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true

javax.jdo.option.ConnectionDriverName

com.mysql.jdbc.Driver

javax.jdo.option.ConnectionUserName

(the username chosen when the database was created)

javax.jdo.option.ConnectionPassword

(the password chosen when the database was created)

hive.metastore.warehouse.dir

/hive/warehouse # this path is created on HDFS

hive.exec.scratchdir

/hive/tmp # this path is created on HDFS

hive.querylog.location

/hive/log
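The settings above are name/value pairs; in an actual hive-site.xml each pair sits inside a `<property>` element under `<configuration>`. A minimal sketch that writes such a file from the shell follows. `write_hive_site` is a hypothetical helper, and the user name and password in the example call are placeholders, not values from this post.

```shell
#!/usr/bin/env bash
# Sketch: generate hive-site.xml with the seven settings listed above.
# write_hive_site is a hypothetical helper; pass your own DB user and password.
# Note: values containing &, < or > would need XML escaping first.
write_hive_site() {
  local conf_dir="$1" db_user="$2" db_password="$3"
  cat > "$conf_dir/hive-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>${db_user}</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>${db_password}</value>
  </property>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/hive/warehouse</value>
  </property>
  <property>
    <name>hive.exec.scratchdir</name>
    <value>/hive/tmp</value>
  </property>
  <property>
    <name>hive.querylog.location</name>
    <value>/hive/log</value>
  </property>
</configuration>
EOF
}

# Example call (placeholder credentials):
# write_hive_site /usr/local/src/hive-1.2.2/conf hive_user hive_password
```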

(3) Create hive-env.sh from the template

cp hive-env.sh.template hive-env.sh

(4) Edit it

vim hive-env.sh

# Add the relevant directories

export JAVA_HOME=/usr/local/src/jdk1.8.0_191

export HADOOP_HOME=/usr/local/src/hadoop-2.7.7

export HIVE_HOME=/usr/local/src/hive-1.2.2

export HIVE_CONF_DIR=/usr/local/src/hive-1.2.2/conf

(5) Copy the logging configuration

cp hive-exec-log4j.properties.template hive-exec-log4j.properties

cp hive-log4j.properties.template hive-log4j.properties

4. Create the related directories (I haven't found any use for them so far; they may well be unnecessary)

/usr/local/src/hive-1.2.2/warehouse

/usr/local/src/hive-1.2.2/tmp

/usr/local/src/hive-1.2.2/log

5. Update the environment variables

vim ~/.bashrc

Add the following:

# hive

export HIVE_HOME=/usr/local/src/hive-1.2.2

export PATH=$PATH:$HIVE_HOME/bin

Apply the changes:

source ~/.bashrc
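If you would rather not edit the file by hand, the same lines can be appended from a script. This is only a sketch: `add_hive_env` is a hypothetical helper, and it skips the append when an `export HIVE_HOME=` line is already present so that running it twice does not duplicate the entries.

```shell
#!/usr/bin/env bash
# Sketch: append the Hive variables to a shell rc file only once.
# add_hive_env is a hypothetical helper, not part of Hive.
add_hive_env() {
  local rc_file="$1"    # e.g. ~/.bashrc
  local hive_home="$2"  # e.g. /usr/local/src/hive-1.2.2
  # Skip if an earlier run already added HIVE_HOME.
  if grep -q '^export HIVE_HOME=' "$rc_file" 2>/dev/null; then
    return 0
  fi
  {
    echo '# hive'
    echo "export HIVE_HOME=$hive_home"
    echo 'export PATH=$PATH:$HIVE_HOME/bin'
  } >> "$rc_file"
}

# Example call:
# add_hive_env ~/.bashrc /usr/local/src/hive-1.2.2 && source ~/.bashrc
```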

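6. Download the MySQL Connector JAR

Since I installed MariaDB, I wanted to use the MariaDB connector JAR, but in practice it did not work, so I downloaded the MySQL one instead.

Fetch it with wget:

wget https://dev.mysql.com/get/Downloads/Connector-J/mysql-connector-java-8.0.13.tar.gz

Then extract it and copy the JAR into Hive's lib directory: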

cp mysql-connector-java-8.0.13/mysql-connector-java-8.0.13.jar hive-1.2.2/lib/

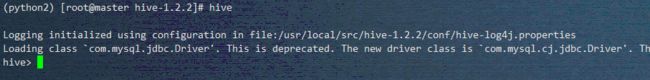
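The extract-and-copy step can be wrapped into one small function. This sketch assumes the archive unpacks to mysql-connector-java-8.0.13/ with the JAR directly inside, as the cp command above implies; `install_mysql_connector` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: unpack the Connector/J tarball and copy its JAR into Hive's lib dir.
# install_mysql_connector is a hypothetical helper, not a MySQL or Hive tool.
install_mysql_connector() {
  local tarball="$1"   # e.g. mysql-connector-java-8.0.13.tar.gz
  local hive_lib="$2"  # e.g. /usr/local/src/hive-1.2.2/lib
  local work_dir
  work_dir=$(mktemp -d) || return 1
  tar -xzf "$tarball" -C "$work_dir" || return 1
  cp "$work_dir/mysql-connector-java-8.0.13/mysql-connector-java-8.0.13.jar" \
     "$hive_lib"/ || return 1
  rm -rf "$work_dir"
  # List the installed JAR so the result is visible.
  ls "$hive_lib"/mysql-connector-java-*.jar
}

# Example call:
# install_mysql_connector mysql-connector-java-8.0.13.tar.gz /usr/local/src/hive-1.2.2/lib
```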

7.简单测试

输入 hive 命令

成功进入hive-cli界面

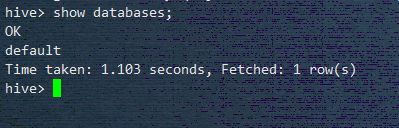

输入 show databases; 注意最后的分号

至此基本完成
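The same check can be run non-interactively with Hive's `-e` option, which executes a quoted query string and exits. A minimal sketch, where `hive_smoke_test` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: run "show databases;" without entering the interactive CLI.
# hive_smoke_test is a hypothetical helper name.
hive_smoke_test() {
  # Fail early with a hint if the PATH change from step 5 is not in effect.
  if ! command -v hive >/dev/null 2>&1; then
    echo "hive not found on PATH; re-check step 5" >&2
    return 127
  fi
  hive -e 'show databases;'
}

# Example call:
# hive_smoke_test
```

On a fresh install the output should include at least the built-in default database.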

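Installation references

Hive1.x

Deploying and Using the Hive 1.2.2 Data Warehouse on a Hadoop 2.7.3 Cluster (for detailed testing, see the testing section of that article)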

Error 1

When loading data into a Hive table:

load data local inpath "/home/ibean/student.txt" into table student;

Error message:

hive> load data local inpath "/home/ibean/student.txt" into table student;

Loading data to table testhive.student

Failed with exception Unable to move source file:/home/ibean/student.txt to destination hdfs://master:9000/usr/local/src/hive-1.2.2/warehouse/testhive.db/student/student.txt

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.MoveTask

Check the Hive log; it lives on the local file system at /tmp/<current username>/hive.log

Caused by: org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /usr/local/src/hive-1.2.2/warehouse/testhive.db/student/student.txt could only be replicated to 0 nodes instead of minReplication (=1). There are 0 datanode(s) running and no node(s) are excluded in this operation.

The key part:

There are 0 datanode(s) running and no node(s) are excluded in this operation

Well... I had forgotten to start the worker nodes. Once they were up and running, the load worked normally.
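To catch this before loading data, you can count the live DataNodes first. The sketch below parses the output of `hdfs dfsadmin -report`; it assumes the report contains a line of the form "Live datanodes (N):" and treats a missing line as zero. `count_live_datanodes` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: read an "hdfs dfsadmin -report" on stdin and print the number of
# live DataNodes. Assumption: the report has a line "Live datanodes (N):".
count_live_datanodes() {
  local n
  n=$(sed -n 's/^Live datanodes (\([0-9][0-9]*\)).*/\1/p' | head -n 1)
  # No matching line is treated as zero live DataNodes.
  echo "${n:-0}"
}

# Example on a real cluster:
# hdfs dfsadmin -report | count_live_datanodes
# If this prints 0, start HDFS (for example with start-dfs.sh) and check
# with jps on each worker that a DataNode process is running.
```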

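Error 2

Log in to MariaDB, run use hive, and execute all of the statements below; they switch the metastore's comment and parameter columns to utf8: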

alter table COLUMNS_V2 modify column COMMENT varchar(256) character set utf8;

alter table TABLE_PARAMS modify column PARAM_VALUE varchar(4000) character set utf8;

alter table PARTITION_PARAMS modify column PARAM_VALUE varchar(4000) character set utf8 ;

alter table PARTITION_KEYS modify column PKEY_COMMENT varchar(4000) character set utf8;

alter table INDEX_PARAMS modify column PARAM_VALUE varchar(4000) character set utf8;

After running these, drop the original table and create it again.

Reference post: https://www.cnblogs.com/qingyunzong/p/8724155.html

A few notes on loading data

When reading data files, you will sometimes run into a header or footer row.

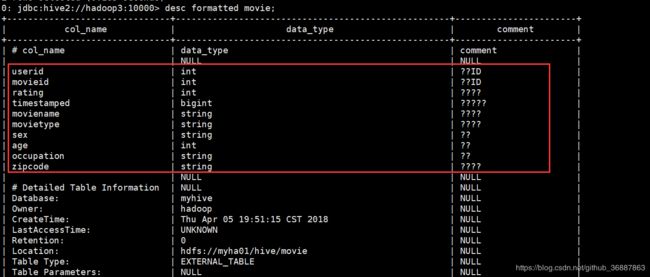

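Loading such a file imports the header row as data too, so add the following when creating the table, right after the table definition and before the semicolon: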

tblproperties(

"skip.header.line.count"="1", -- skip the first line of the file

"skip.footer.line.count"="1" -- skip the last line of the file

);

For example:

create external table if not exists ratings(

userId int comment 'user ID',

movieId int comment 'movie ID',

rating int comment 'rating the user gave the movie',

time string comment 'time'

)

row format delimited fields terminated by ','

stored as textfile

tblproperties ("skip.header.line.count"="1");
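To see which rows would remain with both counts set to 1, you can mimic the effect locally before loading the file. This is only an illustration of the result, not how Hive implements the properties; `preview_without_header_footer` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print a file without its first and last line, mirroring
# skip.header.line.count=1 and skip.footer.line.count=1.
preview_without_header_footer() {
  # 1d deletes the first line, $d deletes the last line.
  sed '1d;$d' "$1"
}

# Example call (file name is a placeholder):
# preview_without_header_footer ratings.csv | head
```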