SparkSql 2.2.x 中 Broadcast Join的陷阱(hint不生效)

问题描述

- 在spark 2.2.0 的sparksql 中使用hint指定广播表,却无法进行指定广播;

前期准备

hive> select * from test.tmp_demo_small;

OK

tmp_demo_small.pas_phone tmp_demo_small.age

156 20

157 22

158 15

hive> analyze table test.tmp_demo_small compute statistics;

Table test.tmp_demo_small stats: [numFiles=1, numRows=3, totalSize=21, rawDataSize=18]

hive> select * from test.tmp_demo_big;

OK

tmp_demo_big.pas_phone tmp_demo_big.ord_id tmp_demo_big.dt

156 aa1 20191111

156 aa2 20191112

157 bb1 20191111

157 bb2 20191112

157 bb3 20191113

157 bb4 20191114

158 cc1 20191111

158 cc2 20191112

158 cc3 20191113

hive> analyze table test.tmp_demo_big compute statistics;

Table test.tmp_demo_big stats: [numFiles=1, numRows=9, totalSize=153, rawDataSize=144]

sparksql解析过程详见:Apache Spark源码走读之11 – sql的解析与执行 不是本篇重点,不过有个解析后的语法树有用,可以比较明显的展示左表右表,不然可能有小伙伴要纳闷buildright是个啥了

验证方式

结论为先: 当小表join小表时(都符合默认广播条件 spark.sql.autoBroadcastJoinThreshold默认10M),无论是否指定广播对象,都是以右表优先匹配;也就是说hint在这种情况下失效。

注释什么的都放在代码里面了

- 使用默认方式join自动广播

select

big.pas_phone,

big.ord_id,

small.age,

sum(1) over(partition by big.pas_phone) as ord_cnt

from

test.tmp_demo_small as small -- 小表 3 行

join

test.tmp_demo_big as big -- 大表 9 行

on

small.pas_phone = big.pas_phone

where

small.age > 21

- 查看执行计划(每个执行过程从下往上读,模拟树结构)

== Parsed Logical Plan == -- 抽象语法树,由ANTLR解析

Project [pas_phone#39, ord_id#40, age#38, ord_cnt#35L]

+- Project [pas_phone#39, ord_id#40, age#38, ord_cnt#35L, ord_cnt#35L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#39, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#35L], [pas_phone#39]

+- Project [pas_phone#39, ord_id#40, age#38] -- 只知道是选择出了属性,却并不知道这些属性属于哪张表,更不知道其数据类型

+- Filter (age#38 > 21)

+- Join Inner, (pas_phone#37 = pas_phone#39)

:- SubqueryAlias small

: +- SubqueryAlias tmp_demo_small

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#37, age#38]

+- SubqueryAlias big

+- SubqueryAlias tmp_demo_big

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#39, ord_id#40, dt#41]

== Analyzed Logical Plan == -- 逻辑语法树

pas_phone: int, ord_id: string, age: int, ord_cnt: bigint -- 数据类型解析

Project [pas_phone#39, ord_id#40, age#38, ord_cnt#35L]

+- Project [pas_phone#39, ord_id#40, age#38, ord_cnt#35L, ord_cnt#35L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#39, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#35L], [pas_phone#39]

+- Project [pas_phone#39, ord_id#40, age#38]

+- Filter (age#38 > 21)

+- Join Inner, (pas_phone#37 = pas_phone#39)

:- SubqueryAlias small

: +- SubqueryAlias tmp_demo_small

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#37, age#38]

+- SubqueryAlias big

+- SubqueryAlias tmp_demo_big

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#39, ord_id#40, dt#41]

== Optimized Logical Plan == -- 逻辑优化

Window [sum(1) windowspecdefinition(pas_phone#39, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#35L], [pas_phone#39]

+- Project [pas_phone#39, ord_id#40, age#38]

+- Join Inner, (pas_phone#37 = pas_phone#39)

:- Filter ((isnotnull(age#38) && (age#38 > 21)) && isnotnull(pas_phone#37)) -- 谓语下推优化

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#37, age#38]

+- Project [pas_phone#39, ord_id#40]

+- Filter isnotnull(pas_phone#39)

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#39, ord_id#40, dt#41]

== Physical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#39, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#35L], [pas_phone#39]

+- *Sort [pas_phone#39 ASC NULLS FIRST], false, 0

+- Exchange(coordinator id: 449256327) hashpartitioning(pas_phone#39, 1000), coordinator[target post-shuffle partition size: 67108864]

+- *Project [pas_phone#39, ord_id#40, age#38]

+- *BroadcastHashJoin [pas_phone#37], [pas_phone#39], Inner, BuildRight -- buildright表示使用右表进行广播

:- *Filter ((isnotnull(age#38) && (age#38 > 21)) && isnotnull(pas_phone#37))

: +- HiveTableScan [pas_phone#37, age#38], HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#37, age#38]

+- BroadcastExchange HashedRelationBroadcastMode(List(cast(input[0, int, false] as bigint)))

+- *Filter isnotnull(pas_phone#39)

+- HiveTableScan [pas_phone#39, ord_id#40], HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#39, ord_id#40, dt#41]

- 使用hint进行指定广播对象

select

/*+ BROADCAST(small) */

big.pas_phone,

big.ord_id,

small.age,

sum(1) over(partition by big.pas_phone) as ord_cnt

from

test.tmp_demo_small as small -- 小表 3 行

join

test.tmp_demo_big as big -- 大表 9 行

on

small.pas_phone = big.pas_phone

where

small.age > 21

- 执行计划

== Parsed Logical Plan ==

Project [pas_phone#61, ord_id#62, age#60, ord_cnt#57L]

+- Project [pas_phone#61, ord_id#62, age#60, ord_cnt#57L, ord_cnt#57L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#61, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#57L], [pas_phone#61]

+- Project [pas_phone#61, ord_id#62, age#60]

+- Filter (age#60 > 21)

+- Join Inner, (pas_phone#59 = pas_phone#61)

:- ResolvedHint isBroadcastable=true

: +- SubqueryAlias small

: +- SubqueryAlias tmp_demo_small

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#59, age#60]

+- SubqueryAlias big

+- SubqueryAlias tmp_demo_big

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#61, ord_id#62, dt#63]

== Analyzed Logical Plan ==

pas_phone: int, ord_id: string, age: int, ord_cnt: bigint

Project [pas_phone#61, ord_id#62, age#60, ord_cnt#57L]

+- Project [pas_phone#61, ord_id#62, age#60, ord_cnt#57L, ord_cnt#57L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#61, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#57L], [pas_phone#61]

+- Project [pas_phone#61, ord_id#62, age#60]

+- Filter (age#60 > 21)

+- Join Inner, (pas_phone#59 = pas_phone#61)

:- ResolvedHint isBroadcastable=true

: +- SubqueryAlias small

: +- SubqueryAlias tmp_demo_small

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#59, age#60]

+- SubqueryAlias big

+- SubqueryAlias tmp_demo_big

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#61, ord_id#62, dt#63]

== Optimized Logical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#61, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#57L], [pas_phone#61]

+- Project [pas_phone#61, ord_id#62, age#60]

+- Join Inner, (pas_phone#59 = pas_phone#61)

:- ResolvedHint isBroadcastable=true -- 这里可以看到在逻辑优化的时候,这个参数是生效的

: +- Filter ((isnotnull(age#60) && (age#60 > 21)) && isnotnull(pas_phone#59))

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#59, age#60]

+- Project [pas_phone#61, ord_id#62]

+- Filter isnotnull(pas_phone#61)

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#61, ord_id#62, dt#63]

== Physical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#61, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#57L], [pas_phone#61]

+- *Sort [pas_phone#61 ASC NULLS FIRST], false, 0

+- Exchange(coordinator id: 1477200907) hashpartitioning(pas_phone#61, 1000), coordinator[target post-shuffle partition size: 67108864]

+- *Project [pas_phone#61, ord_id#62, age#60]

+- *BroadcastHashJoin [pas_phone#59], [pas_phone#61], Inner, BuildRight -- buildright表示仍然使用右表进行广播

:- *Filter ((isnotnull(age#60) && (age#60 > 21)) && isnotnull(pas_phone#59))

: +- HiveTableScan [pas_phone#59, age#60], HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#59, age#60]

+- BroadcastExchange HashedRelationBroadcastMode(List(cast(input[0, int, false] as bigint)))

+- *Filter isnotnull(pas_phone#61)

+- HiveTableScan [pas_phone#61, ord_id#62], HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#61, ord_id#62, dt#63]

刚开始一路走下来,感觉都正常,而且逻辑优化的时候将一些filter条件下推都是符合RBO优化原则;但是到最后的生成物理执行计划的时候出现问题,理论上来说应该会进行比较两个子表,哪一个小广播哪个;为什么会出现这个问题?问题是应该出在物理执行计划中Join的选择方式上,定位spark 2.2.0 源码; 从 apply 开始看

位置:spark-2.2.0/sql/core/src/main/scala/org/apache/spark/sql/execution/SparkStrategies.scala

object JoinSelection extends Strategy with PredicateHelper {

/**

* Matches a plan whose output should be small enough to be used in broadcast join.

*/

// 3. canBroadcast(right), 传入的right是个LogicalPlan对象,也就是一个逻辑计划,其中包含了这个子树节点表的内部信息,包括meta信息,还有解析的hint;这里会进行判断;只需要存在hint语句 或者 满足节点树(这里是右表)filter之后的信息大大于0且小于一个阈值(默认10M) 这两个条件其一就返回true

private def canBroadcast(plan: LogicalPlan): Boolean = {

plan.stats(conf).hints.isBroadcastable.getOrElse(false) ||

(plan.stats(conf).sizeInBytes >= 0 &&

plan.stats(conf).sizeInBytes <= conf.autoBroadcastJoinThreshold)

}

... 隐去一部分代码

// 2. canBuildRight(joinType)判断下,返回 true

private def canBuildRight(joinType: JoinType): Boolean = joinType match {

case _: InnerLike | LeftOuter | LeftSemi | LeftAnti => true

case j: ExistenceJoin => true

case _ => false

}

private def canBuildLeft(joinType: JoinType): Boolean = joinType match {

case _: InnerLike | RightOuter => true

case _ => false

}

def apply(plan: LogicalPlan): Seq[SparkPlan] = plan match {

// --- BroadcastHashJoin --------------------------------------------------------------------

// 1. 广播判断条件 :首先判断(2) canBuildRight(joinType);然后接着判断 (3)canBroadcast(right);当(2)且(3)都true则开始执行broadcast,且广播右表,不理会hint中是否制定广播表

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if canBuildRight(joinType) && canBroadcast(right) =>

Seq(joins.BroadcastHashJoinExec(

leftKeys, rightKeys, joinType, BuildRight, condition, planLater(left), planLater(right)))

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if canBuildLeft(joinType) && canBroadcast(left) =>

Seq(joins.BroadcastHashJoinExec(

leftKeys, rightKeys, joinType, BuildLeft, condition, planLater(left), planLater(right)))

// --- ShuffledHashJoin ---------------------------------------------------------------------

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if !conf.preferSortMergeJoin && canBuildRight(joinType) && canBuildLocalHashMap(right)

&& muchSmaller(right, left) ||

!RowOrdering.isOrderable(leftKeys) =>

...

// --- SortMergeJoin ------------------------------------------------------------

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if RowOrdering.isOrderable(leftKeys) =>

...

// --- Without joining keys ------------------------------------------------------------

...

case _ => Nil

}

}

至此,解释了为什么spark 2.2.0中,hint没有生效的问题;因为判断join方式的时候,优先判断是否使用broadcast join,模式匹配先匹配right的情况,也就是说,如果右表只要足够小且满足广播规则,那么无论hint是否有或者hint左表右表,都会进行广播右表;但是一旦右边太大,而且没有hint的方式标注使用右表,那么就会进入第二个,判断左表是否符合广播条件,是的话就进行广播;一样的代码放在2.4.3中看下情况如何

select

/*+ BROADCAST(small) */

big.pas_phone,

big.ord_id,

small.age,

sum(1) over(partition by big.pas_phone) as ord_cnt

from

test.tmp_demo_small as small -- 小表 3 行

join

test.tmp_demo_big as big -- 大表 9 行

on

small.pas_phone = big.pas_phone

where

small.age > 21

- 执行计划

== Parsed Logical Plan ==

Project [pas_phone#4, ord_id#5, age#3, ord_cnt#0L]

+- Project [pas_phone#4, ord_id#5, age#3, ord_cnt#0L, ord_cnt#0L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#4, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#0L], [pas_phone#4]

+- Project [pas_phone#4, ord_id#5, age#3]

+- Filter (age#3 > 21)

+- Join Inner, (pas_phone#2 = pas_phone#4)

:- ResolvedHint (broadcast)

: +- SubqueryAlias `small`

: +- SubqueryAlias `test`.`tmp_demo_small`

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#2, age#3]

+- SubqueryAlias `big`

+- SubqueryAlias `test`.`tmp_demo_big`

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#4, ord_id#5, dt#6]

== Analyzed Logical Plan ==

pas_phone: int, ord_id: string, age: int, ord_cnt: bigint

Project [pas_phone#4, ord_id#5, age#3, ord_cnt#0L]

+- Project [pas_phone#4, ord_id#5, age#3, ord_cnt#0L, ord_cnt#0L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#4, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#0L], [pas_phone#4]

+- Project [pas_phone#4, ord_id#5, age#3]

+- Filter (age#3 > 21)

+- Join Inner, (pas_phone#2 = pas_phone#4)

:- ResolvedHint (broadcast)

: +- SubqueryAlias `small`

: +- SubqueryAlias `test`.`tmp_demo_small`

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#2, age#3]

+- SubqueryAlias `big`

+- SubqueryAlias `test`.`tmp_demo_big`

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#4, ord_id#5, dt#6]

== Optimized Logical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#4, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#0L], [pas_phone#4]

+- Project [pas_phone#4, ord_id#5, age#3]

+- Join Inner, (pas_phone#2 = pas_phone#4)

:- ResolvedHint (broadcast) -- 解析hint语句,指定广播表

: +- Filter ((isnotnull(age#3) && (age#3 > 21)) && isnotnull(pas_phone#2))

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#2, age#3]

+- Project [pas_phone#4, ord_id#5]

+- Filter isnotnull(pas_phone#4)

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#4, ord_id#5, dt#6]

== Physical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#4, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#0L], [pas_phone#4]

+- *(3) Sort [pas_phone#4 ASC NULLS FIRST], false, 0

+- Exchange(coordinator id: 632554218) hashpartitioning(pas_phone#4, 1000), coordinator[target post-shuffle partition size: 67108864]

+- *(2) Project [pas_phone#4, ord_id#5, age#3]

+- *(2) BroadcastHashJoin [pas_phone#2], [pas_phone#4], Inner, BuildLeft -- BuildLeft hint制定生效

:- BroadcastExchange HashedRelationBroadcastMode(List(cast(input[0, int, false] as bigint)))

: +- *(1) Filter ((isnotnull(age#3) && (age#3 > 21)) && isnotnull(pas_phone#2))

: +- Scan hive test.tmp_demo_small [pas_phone#2, age#3], HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#2, age#3]

+- *(2) Filter isnotnull(pas_phone#4)

+- Scan hive test.tmp_demo_big [pas_phone#4, ord_id#5], HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#4, ord_id#5, dt#6]

- 不指定广播表,默认 join

select

big.pas_phone,

big.ord_id,

small.age,

sum(1) over(partition by big.pas_phone) as ord_cnt

from

test.tmp_demo_small as small -- 小表 3 行

join

test.tmp_demo_big as big -- 大表 9 行

on

small.pas_phone = big.pas_phone

where

small.age > 21

- 执行计划

== Parsed Logical Plan ==

Project [pas_phone#15, ord_id#16, age#14, ord_cnt#11L]

+- Project [pas_phone#15, ord_id#16, age#14, ord_cnt#11L, ord_cnt#11L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#15, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#11L], [pas_phone#15]

+- Project [pas_phone#15, ord_id#16, age#14]

+- Filter (age#14 > 21)

+- Join Inner, (pas_phone#13 = pas_phone#15)

:- SubqueryAlias `small`

: +- SubqueryAlias `test`.`tmp_demo_small`

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#13, age#14]

+- SubqueryAlias `big`

+- SubqueryAlias `test`.`tmp_demo_big`

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#15, ord_id#16, dt#17]

== Analyzed Logical Plan ==

pas_phone: int, ord_id: string, age: int, ord_cnt: bigint

Project [pas_phone#15, ord_id#16, age#14, ord_cnt#11L]

+- Project [pas_phone#15, ord_id#16, age#14, ord_cnt#11L, ord_cnt#11L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#15, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#11L], [pas_phone#15]

+- Project [pas_phone#15, ord_id#16, age#14]

+- Filter (age#14 > 21)

+- Join Inner, (pas_phone#13 = pas_phone#15)

:- SubqueryAlias `small`

: +- SubqueryAlias `test`.`tmp_demo_small`

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#13, age#14]

+- SubqueryAlias `big`

+- SubqueryAlias `test`.`tmp_demo_big`

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#15, ord_id#16, dt#17]

== Optimized Logical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#15, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#11L], [pas_phone#15]

+- Project [pas_phone#15, ord_id#16, age#14]

+- Join Inner, (pas_phone#13 = pas_phone#15)

:- Filter ((isnotnull(age#14) && (age#14 > 21)) && isnotnull(pas_phone#13))

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#13, age#14]

+- Project [pas_phone#15, ord_id#16]

+- Filter isnotnull(pas_phone#15)

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#15, ord_id#16, dt#17]

== Physical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#15, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#11L], [pas_phone#15]

+- *(3) Sort [pas_phone#15 ASC NULLS FIRST], false, 0

+- Exchange(coordinator id: 1731877543) hashpartitioning(pas_phone#15, 1000), coordinator[target post-shuffle partition size: 67108864]

+- *(2) Project [pas_phone#15, ord_id#16, age#14]

+- *(2) BroadcastHashJoin [pas_phone#13], [pas_phone#15], Inner, BuildLeft -- 广播左表成功

:- BroadcastExchange HashedRelationBroadcastMode(List(cast(input[0, int, false] as bigint)))

: +- *(1) Filter ((isnotnull(age#14) && (age#14 > 21)) && isnotnull(pas_phone#13))

: +- Scan hive test.tmp_demo_small [pas_phone#13, age#14], HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#13, age#14]

+- *(2) Filter isnotnull(pas_phone#15)

+- Scan hive test.tmp_demo_big [pas_phone#15, ord_id#16], HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#15, ord_id#16, dt#17]

这就有些意思了,看下2.4.3 的源码

位置:spark-2.4.4/sql/core/src/main/scala/org/apache/spark/sql/execution/SparkStrategies.scala

object JoinSelection extends Strategy with PredicateHelper {

/**

* Matches a plan whose output should be small enough to be used in broadcast join.

*/

private def canBroadcast(plan: LogicalPlan): Boolean = {

plan.stats.sizeInBytes >= 0 && plan.stats.sizeInBytes <= conf.autoBroadcastJoinThreshold

}

/**

* Matches a plan whose single partition should be small enough to build a hash table.

*

* Note: this assume that the number of partition is fixed, requires additional work if it's

* dynamic.

*/

private def canBuildLocalHashMap(plan: LogicalPlan): Boolean = {

plan.stats.sizeInBytes < conf.autoBroadcastJoinThreshold * conf.numShufflePartitions

}

/**

* Returns whether plan a is much smaller (3X) than plan b.

*

* The cost to build hash map is higher than sorting, we should only build hash map on a table

* that is much smaller than other one. Since we does not have the statistic for number of rows,

* use the size of bytes here as estimation.

*/

private def muchSmaller(a: LogicalPlan, b: LogicalPlan): Boolean = {

a.stats.sizeInBytes * 3 <= b.stats.sizeInBytes

}

private def canBuildRight(joinType: JoinType): Boolean = joinType match {

case _: InnerLike | LeftOuter | LeftSemi | LeftAnti | _: ExistenceJoin => true

case _ => false

}

private def canBuildLeft(joinType: JoinType): Boolean = joinType match {

case _: InnerLike | RightOuter => true

case _ => false

}

// 3. 就是简单比较左右两表大小,

private def broadcastSide(

canBuildLeft: Boolean,

canBuildRight: Boolean,

left: LogicalPlan,

right: LogicalPlan): BuildSide = {

def smallerSide =

if (right.stats.sizeInBytes <= left.stats.sizeInBytes) BuildRight else BuildLeft

if (canBuildRight && canBuildLeft) {

// Broadcast smaller side base on its estimated physical size

// if both sides have broadcast hint

smallerSide

} else if (canBuildRight) {

BuildRight

} else if (canBuildLeft) {

BuildLeft

} else {

// for the last default broadcast nested loop join

smallerSide

}

}

// 1 判断 canBroadcastByHints(joinType, left, right) ,接着判断 canBuildLeft(joinType)和canBuildRight(joinType) 两者只需要一个为 true就可以,join类型条件基本囊括;主要是判断针对左右子树表的hint制定广播

private def canBroadcastByHints(joinType: JoinType, left: LogicalPlan, right: LogicalPlan)

: Boolean = {

val buildLeft = canBuildLeft(joinType) && left.stats.hints.broadcast

val buildRight = canBuildRight(joinType) && right.stats.hints.broadcast

buildLeft || buildRight

}

// 2. broadcastSideByHints(joinType, left, right) 再吊起 broadcastSide进行比较,(3)其实就是简单比较两个表的大小

private def broadcastSideByHints(joinType: JoinType, left: LogicalPlan, right: LogicalPlan)

: BuildSide = {

val buildLeft = canBuildLeft(joinType) && left.stats.hints.broadcast

val buildRight = canBuildRight(joinType) && right.stats.hints.broadcast

broadcastSide(buildLeft, buildRight, left, right)

}

private def canBroadcastBySizes(joinType: JoinType, left: LogicalPlan, right: LogicalPlan)

: Boolean = {

val buildLeft = canBuildLeft(joinType) && canBroadcast(left)

val buildRight = canBuildRight(joinType) && canBroadcast(right)

buildLeft || buildRight

}

private def broadcastSideBySizes(joinType: JoinType, left: LogicalPlan, right: LogicalPlan)

: BuildSide = {

val buildLeft = canBuildLeft(joinType) && canBroadcast(left)

val buildRight = canBuildRight(joinType) && canBroadcast(right)

broadcastSide(buildLeft, buildRight, left, right)

}

def apply(plan: LogicalPlan): Seq[SparkPlan] = plan match {

// 区分了两种,当指定hint时和未指定hint时

// --- BroadcastHashJoin --------------------------------------------------------------------

// broadcast hints were specified

// 对于有hint的情况,先判断 canBroadcastByHints(joinType, left, right)(1)为true只是表示有hint语句且囊括的join类型符合条件;然后再吊起 broadcastSideByHints(joinType, left, right) 判断广播哪张表(2)

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if canBroadcastByHints(joinType, left, right) =>

val buildSide = broadcastSideByHints(joinType, left, right)

Seq(joins.BroadcastHashJoinExec(

leftKeys, rightKeys, joinType, buildSide, condition, planLater(left), planLater(right)))

// broadcast hints were not specified, so need to infer it from size and configuration.

// 对于没有hint的情况,直接走到判断两张表大小来决定谁当广播表(当然符合一些前置条件)

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if canBroadcastBySizes(joinType, left, right) =>

val buildSide = broadcastSideBySizes(joinType, left, right)

Seq(joins.BroadcastHashJoinExec(

leftKeys, rightKeys, joinType, buildSide, condition, planLater(left), planLater(right)))

// --- ShuffledHashJoin ---------------------------------------------------------------------

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if !conf.preferSortMergeJoin && canBuildRight(joinType) && canBuildLocalHashMap(right)

&& muchSmaller(right, left) ||

!RowOrdering.isOrderable(leftKeys) =>

...

// --- SortMergeJoin ------------------------------------------------------------

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if RowOrdering.isOrderable(leftKeys) =>

...

// --- Without joining keys ----------------------------------------------------------

...

}

}

所以综上所述

- spark 2.2.2的版本当小表join小表(两表都符合广播条件),hint 指定广播表会失效,默认广播右表;若不hint,则默认广播右表

- spark 2.4.3的版本可以指定(inner join)广播表(即使超过广播阈值,但小心OOM风险);若不hint,则在符合广播阈值的条件下,使用较小的表进行广播

- spark不支持full outer join;对于right outer join 只能广播左表;对于left outer join,left semi join,left anti join,internal join等只能广播右表,inner join 可以指定广播

- 其余的一些join触发条件要求:SparkSQL-有必要坐下来聊聊Join,Spark SQL 之 Join 实现

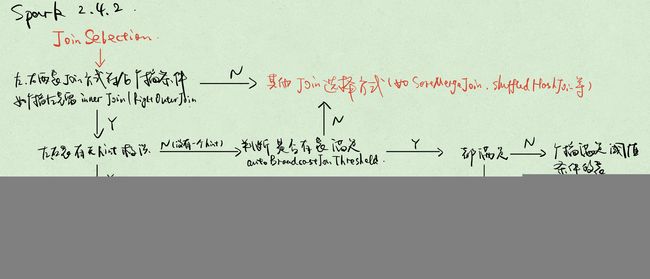

最后放两张收稿图,用于区分2.2和2.4之间的broadcastjoin判断方式

by the way

本来是遇到了一个having的问题,在本地执行没有问题,但是打包好使用spark-submit提交到集群的时候就莫名其妙报错了;

select

big.pas_phone,

big.ord_id,

small.age,

sum(1) over(partition by big.pas_phone) as ord_cnt

from

test.tmp_demo_small as small -- 小表 3 行

join

test.tmp_demo_big as big -- 大表 9 行

on

small.pas_phone = big.pas_phone

where

small.age > 21

having

ord_cnt > 2

Error in query: grouping expressions sequence is empty, and 'big.`pas_phone`' is not an aggregate function. Wrap '()' in windowing function(s) or wrap 'big.`pas_phone`' in first() (or first_value) if you don't care which value you get.;;

'Project [pas_phone#26, ord_id#27, age#25, ord_cnt#22L]

+- 'Project [pas_phone#26, ord_id#27, age#25, ord_cnt#22L, ord_cnt#22L]

+- 'Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#26, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#22L], [pas_phone#26]

+- 'Filter ('ord_cnt > 2)

+- Aggregate [pas_phone#26, ord_id#27, age#25]

+- Filter (age#25 > 21)

+- Join Inner, (pas_phone#24 = pas_phone#26)

:- SubqueryAlias `small`

: +- SubqueryAlias `test`.`tmp_demo_small`

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#24, age#25]

+- SubqueryAlias `big`

+- SubqueryAlias `test`.`tmp_demo_big`

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#26, ord_id#27, dt#28]

问题也查到了,我在本地执行的时候使用的是yarn-client模式,所以我的driver是我的服务器,而我这台服务器spark版本是2.2.2的,所以执行没啥问题,因为driver负责生成DAG,划分task等等,这个都是在sql转化为rdd之后去执行的,所以还有一个就是前置的解析sql的工作,也就是sql -> rdd,这个也是由driver来完成的,而提交到集群的方式是yarn-cluster模式,driver在集群的某一台机器上,这就很尬尬了,公司竟然升级到2.4.3了,导致sql解析的环境已经和我的本地不匹配了,然后查了一下新版的 spark release note

In Spark version 2.3 and earlier, HAVING without GROUP BY is treated as WHERE. This means, SELECT 1 FROM range(10) HAVING true is executed as SELECT 1 FROM range(10) WHERE true and returns 10 rows. This violates SQL standard, and has been fixed in Spark 2.4. Since Spark 2.4, HAVING without GROUP BY is treated as a global aggregate, which means SELECT 1 FROM range(10) HAVING true will return only one row. To restore the previous behavior, set spark.sql.legacy.parser.havingWithoutGroupByAsWhere to true.

cool,问题解决,原因也找到了, 如果非要像以前2.2那样不想改整段代码操作,那么再前面加set spark.sql.legacy.parser.havingWithoutGroupByAsWhere=true;解决问题

set spark.sql.legacy.parser.havingWithoutGroupByAsWhere=true;

select

big.pas_phone,

big.ord_id,

small.age,

sum(1) over(partition by big.pas_phone) as ord_cnt

from

test.tmp_demo_small as small -- 小表 3 行

join

test.tmp_demo_big as big -- 大表 9 行

on

small.pas_phone = big.pas_phone

where

small.age > 21

having

ord_cnt > 2

附录

/**

* Select the proper physical plan for join based on joining keys and size of logical plan.

*

* At first, uses the [[ExtractEquiJoinKeys]] pattern to find joins where at least some of the

* predicates can be evaluated by matching join keys. If found, join implementations are chosen

* with the following precedence:

*

* - Broadcast hash join (BHJ):

* BHJ is not supported for full outer join. For right outer join, we only can broadcast the

* left side. For left outer, left semi, left anti and the internal join type ExistenceJoin,

* we only can broadcast the right side. For inner like join, we can broadcast both sides.

* Normally, BHJ can perform faster than the other join algorithms when the broadcast side is

* small. However, broadcasting tables is a network-intensive operation. It could cause OOM

* or perform worse than the other join algorithms, especially when the build/broadcast side

* is big.

*

* For the supported cases, users can specify the broadcast hint (e.g. the user applied the

* [[org.apache.spark.sql.functions.broadcast()]] function to a DataFrame) and session-based

* [[SQLConf.AUTO_BROADCASTJOIN_THRESHOLD]] threshold to adjust whether BHJ is used and

* which join side is broadcast.

*

* 1) Broadcast the join side with the broadcast hint, even if the size is larger than

* [[SQLConf.AUTO_BROADCASTJOIN_THRESHOLD]]. If both sides have the hint (only when the type

* is inner like join), the side with a smaller estimated physical size will be broadcast.

* 2) Respect the [[SQLConf.AUTO_BROADCASTJOIN_THRESHOLD]] threshold and broadcast the side

* whose estimated physical size is smaller than the threshold. If both sides are below the

* threshold, broadcast the smaller side. If neither is smaller, BHJ is not used.

*

* - Shuffle hash join: if the average size of a single partition is small enough to build a hash

* table.

*

* - Sort merge: if the matching join keys are sortable.

*

* If there is no joining keys, Join implementations are chosen with the following precedence:

* - BroadcastNestedLoopJoin (BNLJ):

* BNLJ supports all the join types but the impl is OPTIMIZED for the following scenarios:

* For right outer join, the left side is broadcast. For left outer, left semi, left anti

* and the internal join type ExistenceJoin, the right side is broadcast. For inner like

* joins, either side is broadcast.

*

* Like BHJ, users still can specify the broadcast hint and session-based

* [[SQLConf.AUTO_BROADCASTJOIN_THRESHOLD]] threshold to impact which side is broadcast.

*

* 1) Broadcast the join side with the broadcast hint, even if the size is larger than

* [[SQLConf.AUTO_BROADCASTJOIN_THRESHOLD]]. If both sides have the hint (i.e., just for

* inner-like join), the side with a smaller estimated physical size will be broadcast.

* 2) Respect the [[SQLConf.AUTO_BROADCASTJOIN_THRESHOLD]] threshold and broadcast the side

* whose estimated physical size is smaller than the threshold. If both sides are below the

* threshold, broadcast the smaller side. If neither is smaller, BNLJ is not used.

*

* - CartesianProduct: for inner like join, CartesianProduct is the fallback option.

*

* - BroadcastNestedLoopJoin (BNLJ):

* For the other join types, BNLJ is the fallback option. Here, we just pick the broadcast

* side with the broadcast hint. If neither side has a hint, we broadcast the side with

* the smaller estimated physical size.

*/