https://kubernetes.io/zh/docs/concepts/architecture/cloud-controller/ #basic concepts

https://v1-14.docs.kubernetes.io/zh/docs/tasks/run-application/run-stateless-application-deployment/ #deployment

https://kubernetes.io/zh/docs/concepts/services-networking/service/ #service

https://v1-14.docs.kubernetes.io/zh/docs/tasks/debug-application-cluster/debug-application/ #troubleshooting

https://kubernetes.io/zh/docs/concepts/policy/resource-quotas/ #resource quotas

https://kubernetes.io/zh/docs/tasks/run-application/rolling-update-replication-controller/ #rolling updates

https://docs.projectcalico.org/v3.8/manifests/calico.yaml #calico yaml

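Create the k8s data directory:

root@master:/opt# mkdir k8s-data

Create a directory for Dockerfiles:

root@master:/opt/k8s-data# mkdir dockerfile

Create a directory for the YAML manifests (named ymal in this setup):

root@master:/opt/k8s-data# mkdir ymal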

root@master:/opt/k8s-data/ymal# mkdir test
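The mkdir steps above can be collapsed into a single call. This is a minimal sketch; the function name make_k8s_layout is hypothetical, and it assumes the same /opt/k8s-data base directory by default.

```shell
# Hypothetical helper: create the k8s-data layout used in this walkthrough
# (dockerfile/ and ymal/test/) under a base directory, /opt/k8s-data by default.
make_k8s_layout() {
    local base="${1:-/opt/k8s-data}"
    # -p creates missing parent directories and does not fail if they already exist
    mkdir -p "$base/dockerfile" "$base/ymal/test"
}
```

Running `make_k8s_layout` as root is equivalent to the four mkdir commands; passing another path builds the same tree elsewhere.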

root@master:/opt/k8s-data/ymal/test# vim nginx-test-v1.yaml

apiVersion: apps/v1   # the original notes used apps/v1beta1, which was removed in Kubernetes 1.16
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:           # required by apps/v1; must match the pod template labels
    matchLabels:
      app: nginx
  replicas: 2 # tells deployment to run 2 pods matching the template
  template: # create pods using pod definition in this template
    metadata:
      # unlike pod-nginx.yaml, the name is not included in the meta data as a unique name is
      # generated from the deployment name
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: harbor.wyh.net/webtest/nginx:1.7.9   # changed to the local registry address
        ports:
        - containerPort: 80

Configure the Alibaba Cloud registry mirror (image accelerator) for Docker:

sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://lcnmouck.mirror.aliyuncs.com"]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
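dockerd refuses to start when daemon.json is not valid JSON, so it can be worth checking the file before the restart. A minimal sketch, assuming python3 is installed (json.tool is part of its standard library and exits non-zero on invalid input); check_daemon_json is a hypothetical name.

```shell
# Hypothetical helper: succeed only if the Docker daemon config parses as JSON.
# Assumes python3 is available on the host.
check_daemon_json() {
    local file="${1:-/etc/docker/daemon.json}"
    python3 -m json.tool "$file" >/dev/null 2>&1
}

# Example: restart Docker only when the file is valid.
# check_daemon_json && sudo systemctl restart docker
```

After the restart, `docker info` should list the mirror under "Registry Mirrors".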

root@master:~# docker pull nginx:1.7.9

下载镜像

打标签

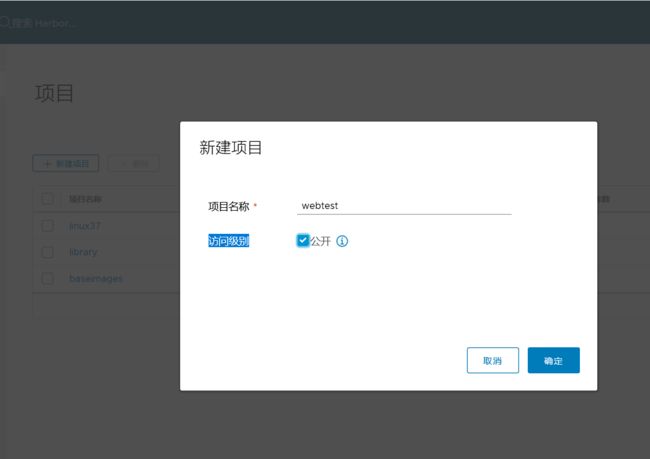

root@master:~# docker tag nginx:1.7.9 harbor.wyh.net/webtest/nginx:1.7.9

上传到本地仓库

root@master:~# docker push harbor.wyh.net/webtest/nginx:1.7.9
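The same pull, tag, push sequence is needed for every image moved into Harbor. A minimal sketch of a helper for it; to_local_ref, mirror_image and the REGISTRY variable are hypothetical names, and it assumes you have already run `docker login harbor.wyh.net`.

```shell
# Hypothetical helper: mirror an upstream image into the local Harbor project.
# REGISTRY defaults to the project used in this walkthrough.
REGISTRY="${REGISTRY:-harbor.wyh.net/webtest}"

# Print the local-registry reference for an image such as nginx:1.7.9
to_local_ref() {
    printf '%s/%s\n' "$REGISTRY" "$1"
}

# Pull from upstream (or the configured mirror), retag, and push to Harbor.
# Stops at the first failing step.
mirror_image() {
    local src="$1" dst
    dst="$(to_local_ref "$src")"
    docker pull "$src" && docker tag "$src" "$dst" && docker push "$dst"
}
```

For example, `mirror_image nginx:1.8` performs the three commands used later for the 1.8 upgrade.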

Create the Deployment, which in turn creates the pods:

root@master:/opt/k8s-data/ymal/test# kubectl create -f nginx-test-v1.yaml
deployment.apps/nginx-deployment created

Check that the Deployment was created successfully:

root@master:/opt/k8s-data/ymal/test# kubectl get deployment
NAME               READY   UP-TO-DATE   AVAILABLE   AGE
net-test           2/4     4            2           4d12h
nginx-deployment   2/2     2            2           2d21h

Check that the pods are running:

root@master:~# kubectl get pod

nginx-deployment-754df65bcf-gjd2v 1/1 Running 0 114s

nginx-deployment-754df65bcf-zncnc 1/1 Running 0 114s

Check the pod IP addresses (and the node each pod runs on):

root@master:~# kubectl get pod -o wide

nginx-deployment-754df65bcf-gjd2v 1/1 Running 0 2m45s 172.31.180.101 192.168.200.197

nginx-deployment-754df65bcf-zncnc 1/1 Running 0 2m45s 172.31.180.103 192.168.200.197
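If only the names and IPs are needed, a JSONPath template avoids reading the wide table. A minimal sketch; pod_ips is a hypothetical name, and the default selector app=nginx is the pod template label from the manifest above.

```shell
# Hypothetical helper: print "<pod name><TAB><pod IP>" for pods matching a selector.
pod_ips() {
    kubectl get pods -l "${1:-app=nginx}" \
        -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.podIP}{"\n"}{end}'
}
```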

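Access the nginx pods by IP, either from the net-test pod (an Alpine-based image, so curl is installed with apk) or directly from a node. Use the pod IPs that `kubectl get pod -o wide` reports in your cluster; the addresses in these commands differ from the listing above.

root@master:~# kubectl exec -it net-test-cd766cb69-5xcj5 -- sh
/ # apk add curl
/ # wget 172.31.104.146

root@node2:~# curl 172.31.167.75
Welcome to nginx!
Welcome to nginx!
If you see this page, the nginx web server is successfully installed and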
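To check every replica rather than one IP at a time, a small loop can request each address and look for the default page. A minimal sketch; probe_nginx is a hypothetical name, and it must run somewhere that can reach pod IPs (a node or a pod, as above).

```shell
# Hypothetical helper: request / from each IP and report whether the
# default nginx page ("Welcome to nginx!") came back within 3 seconds.
probe_nginx() {
    local ip
    for ip in "$@"; do
        if curl -s --max-time 3 "http://$ip/" | grep -q 'Welcome to nginx!'; then
            echo "$ip ok"
        else
            echo "$ip FAIL"
        fi
    done
}
```

For example, `probe_nginx 172.31.180.101 172.31.180.103` checks both pods from the listing above.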

Check the Deployment's status:

root@master:~# kubectl describe deployment nginx-deployment
Name:                   nginx-deployment
Namespace:              default
CreationTimestamp:      Thu, 28 Nov 2019 07:28:22 +0000
Labels:                 app=nginx
Annotations:            deployment.kubernetes.io/revision: 1
Selector:               app=nginx
Replicas:               2 desired | 2 updated | 2 total | 2 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=nginx
  Containers:
   nginx:
    Image:        harbor.wyh.net/webtest/nginx:1.7.9
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   nginx-deployment-754df65bcf (2/2 replicas created)
Events:
  Type    Reason             Age   From                   Message
  ----    ------             ----  ----                   -------
  Normal  ScalingReplicaSet  21m   deployment-controller  Scaled up replica set nginx-deployment-754df65bcf to 2
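The RollingUpdateStrategy line above translates into concrete pod counts. Kubernetes rounds a percentage maxSurge up and a percentage maxUnavailable down, so the bounds during an update can be worked out with integer arithmetic. A minimal sketch; rollout_bounds is a hypothetical name.

```shell
# Hypothetical helper: worked arithmetic for "25% max unavailable, 25% max surge".
# maxSurge is rounded up (ceiling), maxUnavailable is rounded down (floor).
rollout_bounds() {
    local replicas="$1" surge_pct="${2:-25}" unavail_pct="${3:-25}"
    local surge=$(( (replicas * surge_pct + 99) / 100 ))   # ceiling
    local unavail=$(( replicas * unavail_pct / 100 ))      # floor
    echo "max_pods=$(( replicas + surge )) min_available=$(( replicas - unavail ))"
}
```

With 2 replicas, `rollout_bounds 2` gives max_pods=3 min_available=2: one extra pod is started before an old one is removed. With 4 replicas the bounds are 5 and 3.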

Pull the nginx 1.8 image, tag it, and push it to the local registry:

root@master:~# docker pull nginx:1.8

root@master:~# docker tag nginx:1.8 harbor.wyh.net/webtest/nginx:1.8

root@master:~# docker push harbor.wyh.net/webtest/nginx:1.8

Change the image version in the manifest to 1.8:

root@master:/opt/k8s-data/ymal/test# vim nginx-test-v1.yaml

        image: harbor.wyh.net/webtest/nginx:1.8

Apply the updated manifest:

root@master:/opt/k8s-data/ymal/test# kubectl apply -f nginx-test-v1.yaml

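Check the nginx version inside one of the new pods; it has been upgraded:

root@master:/opt/k8s-data/ymal/test# kubectl exec -it nginx-deployment-56fcb9f4dc-8w72m -- bash
root@nginx-deployment-56fcb9f4dc-8w72m:/# nginx -v
nginx version: nginx/1.8.1

Kubernetes recreates the containers from the new image version: the Deployment rolls out a new ReplicaSet (note the new hash in the pod name, 56fcb9f4dc instead of 754df65bcf) and scales the old one down, following the RollingUpdate strategy shown by kubectl describe. Once the new pods are running, the new version of the code is live.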
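The same upgrade can also be driven imperatively with standard kubectl subcommands, which also give a way back. A minimal sketch; upgrade_nginx and rollback_nginx are hypothetical names, while the Deployment name, container name and registry path are the ones used above.

```shell
# Hypothetical helper: point the nginx container at a new tag in the local
# registry, then wait until the rolling update has finished.
upgrade_nginx() {
    local tag="$1"
    kubectl set image deployment/nginx-deployment "nginx=harbor.wyh.net/webtest/nginx:${tag}"
    kubectl rollout status deployment/nginx-deployment
}

# Hypothetical helper: return to the previous revision of the Deployment.
rollback_nginx() {
    kubectl rollout undo deployment/nginx-deployment
}
```

`kubectl rollout history deployment/nginx-deployment` lists the revisions. After an imperative change the YAML file no longer matches the cluster, so a later `kubectl apply -f nginx-test-v1.yaml` sets the image back to whatever the file says.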

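Change the replica count to 4; this is horizontal scaling in Kubernetes:

root@master:/opt/k8s-data/ymal/test# vim nginx-test-v1.yaml

  replicas: 4 # tells deployment to run 4 pods matching the template

root@master:/opt/k8s-data/ymal/test# kubectl apply -f nginx-test-v1.yaml
root@master:/opt/k8s-data/ymal/test# kubectl get pod | grep nginx | wc -l
4

There are now 4 nginx pods.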
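Scaling can also be done without editing the file, and the ready count read from the Deployment's status instead of counting pod lines. A minimal sketch; scale_nginx and ready_nginx are hypothetical names.

```shell
# Hypothetical helper: set the replica count directly (kubectl scale).
scale_nginx() {
    kubectl scale deployment/nginx-deployment --replicas="$1"
}

# Hypothetical helper: print how many replicas the Deployment reports as ready.
ready_nginx() {
    kubectl get deployment nginx-deployment -o jsonpath='{.status.readyReplicas}'
}
```

As with `kubectl set image`, the next `kubectl apply` of the manifest resets the replica count to the value in the file.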

Service

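An abstract way to expose an application running on a set of Pods as a network service.

With Kubernetes, you don't need to modify your application to use an unfamiliar service discovery mechanism. Kubernetes gives Pods their own IP addresses and a single DNS name for a set of Pods, and can load-balance across them.

Defining a Service

In Kubernetes, a `Service` is a REST object, similar to a `Pod`. Like all REST objects, you can `POST` a `Service` definition to the API server to create a new instance.

For example, suppose you have a set of `Pod`s that each expose port 9376 and carry the label `app=MyApp`.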
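The upstream Service documentation follows this with a manifest named my-service that selects those Pods. A sketch of it applied from the shell via a here-document; the wrapper name apply_my_service is hypothetical.

```shell
# Hypothetical wrapper: apply the docs' example Service, which selects pods
# labelled app=MyApp and forwards Service port 80 to the pods' port 9376.
apply_my_service() {
    kubectl apply -f - <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: MyApp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 9376
EOF
}
```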

Add another label to the pod template:

root@master:/opt/k8s-data/ymal/test# vim nginx-test-v1.yaml

      labels:
        app: nginx
        group: linux37

root@master:/opt/k8s-data/ymal/test# kubectl apply -f nginx-test-v1.yaml

The new pods now carry both labels, plus the pod-template-hash label that the Deployment controller adds:

root@master:/opt/k8s-data/ymal/test# kubectl describe pod nginx-deployment-995964986-4dvrr
Labels:       app=nginx
              group=linux37
              pod-template-hash=995964986

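Write a NodePort Service that selects the pods by the new group=linux37 label:

root@master:/opt/k8s-data/ymal/test# vim nginx-test-v1-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: NodePort
  selector:
    group: linux37
  ports:
  - protocol: TCP
    port: 80          # the Service's own port on its cluster IP
    targetPort: 80    # the port the backend pods actually listen on
    nodePort: 30002   # the port opened on every node (host)

The nodePort must fall inside the port range specified in the cluster's hosts file (the deployment inventory), which sets kube-apiserver's --service-node-port-range; the upstream default is 30000-32767. It is a real port on each host.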
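A quick range check before applying can catch a nodePort the API server would reject. A minimal sketch; in_node_port_range is a hypothetical name, and 30000-32767 is only the upstream default, so pass your cluster's configured range if it differs.

```shell
# Hypothetical helper: succeed if PORT lies within RANGE ("low-high").
# Defaults to the kube-apiserver default NodePort range.
in_node_port_range() {
    local port="$1" range="${2:-30000-32767}"
    local lo="${range%-*}" hi="${range#*-}"
    [ "$port" -ge "$lo" ] && [ "$port" -le "$hi" ]
}
```

For example, `in_node_port_range 30002` succeeds, while `in_node_port_range 80` fails.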

Create the Service:

root@master:/opt/k8s-data/ymal/test# kubectl apply -f nginx-test-v1-service.yaml
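Whether the selector actually matched anything shows up in the Service's endpoints: they should list the IPs of the pods labelled group=linux37 on port 80. A minimal sketch; show_service_backends is a hypothetical name.

```shell
# Hypothetical helper: compare the pods the selector should match with the
# endpoints the Service actually picked up.
show_service_backends() {
    kubectl get pods -l group=linux37 -o wide
    kubectl get endpoints nginx-service
}
```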

Scale back to 2 replicas and apply the YAML file:

root@master:/opt/k8s-data/ymal/test# vim nginx-test-v1.yaml

  replicas: 2

root@master:/opt/k8s-data/ymal/test# kubectl apply -f nginx-test-v1.yaml

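Exec into one of the pods and modify the page it serves (the change lives only in that container's filesystem and is lost when the pod is recreated):

root@master:/opt/k8s-data/ymal/test# kubectl exec -it nginx-deployment-995964986-4dvrr -- bash
root@nginx-deployment-995964986-4dvrr:/# echo "linux37" >> /usr/share/nginx/html/index.html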

Configure HAProxy to forward port 80 on 192.168.200.248 to the Service on each node; the backend port must be the Service's nodePort, 30002:

root@haproxy1:~# vim /etc/haproxy/haproxy.cfg

listen linux37-nginx-80
    bind 192.168.200.248:80
    mode tcp
    server 192.168.200.198 192.168.200.198:30002 check fall 3 rise 3 inter 3s
    server 192.168.200.197 192.168.200.197:30002 check fall 3 rise 3 inter 3s

root@haproxy1:~# systemctl restart haproxy
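A typo in haproxy.cfg takes every listener down on restart, so checking the file first is cheap insurance. A minimal sketch; reload_haproxy is a hypothetical name, and `haproxy -c -f FILE` only validates the configuration without starting a proxy.

```shell
# Hypothetical helper: restart HAProxy only if the configuration validates.
reload_haproxy() {
    local cfg="${1:-/etc/haproxy/haproxy.cfg}"
    haproxy -c -f "$cfg" && systemctl restart haproxy
}
```

Once it is running, requests to http://192.168.200.248/ should be spread across the nodePort on both nodes.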

root@master:/opt/k8s-data/ymal/test# kubectl cluster-info

Kubernetes master is running at https://192.168.200.248:6443

KubeDNS is running at https://192.168.200.248:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

kubernetes-dashboard is running at https://192.168.200.248:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy

monitoring-grafana is running at https://192.168.200.248:6443/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy

monitoring-influxdb is running at https://192.168.200.248:6443/api/v1/namespaces/kube-system/services/monitoring-influxdb/proxy