Dynamic Multimodal Instance Segmentation Guided by Natural Language Queries

2018-09-18 09:58:50

Paper:http://openaccess.thecvf.com/content_ECCV_2018/papers/Edgar_Margffoy-Tuay_Dynamic_Multimodal_Instance_ECCV_2018_paper.pdf

GitHub:https://github.com/BCV-Uniandes/query-objseg (PyTorch)

Related papers:

1. Recurrent Multimodal Interaction for Referring Image Segmentation ICCV 2017

Code: https://github.com/chenxi116/TF-phrasecut-public (TensorFlow)

2. Segmentation from Natural Language Expressions ECCV 2016

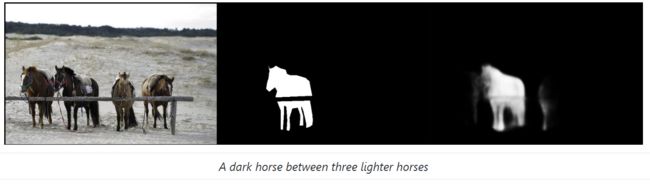

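Given a natural language expression, this paper segments the corresponding target object from the image. The proposed model consists of the following modules: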

1. Visual Module (VM):

The paper uses Dual Path Network 92 (DPN92) to extract visual features.

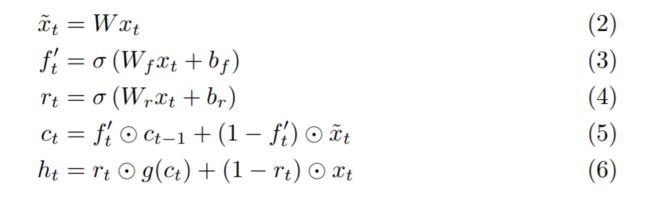

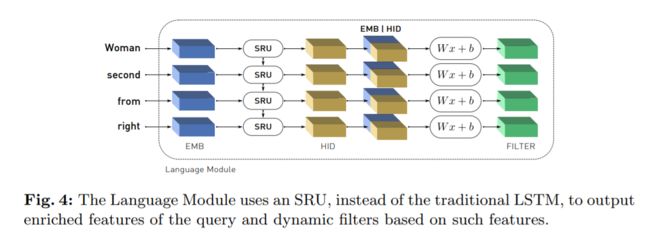

2. Language Module (LM):

The paper uses SRU (Simple Recurrent Unit), a recent and fast sequential network architecture. SRU is defined as:
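The defining equations did not survive in these notes. As a rough illustration, here is a minimal pure-Python sketch of one common SRU formulation (forget gate, reset gate, and a highway connection to the input). The function names, the `tanh` activation, and the assumption that input and hidden sizes are equal are choices made for this sketch, not something confirmed by the notes or the repository.

```python
import math

def _sigmoid(v):
    return [1.0 / (1.0 + math.exp(-a)) for a in v]

def _matvec(W, x):
    # W is a list of rows; returns W @ x
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def _add(a, b):
    return [p + q for p, q in zip(a, b)]

def sru_step(x, c_prev, W, Wf, bf, Wr, br):
    """One SRU time step (sketch; assumes len(x) == hidden size)."""
    x_tilde = _matvec(W, x)                      # linear transform of the input
    f = _sigmoid(_add(_matvec(Wf, x), bf))       # forget gate
    r = _sigmoid(_add(_matvec(Wr, x), br))       # reset gate
    # internal state: gated mix of the previous state and the transformed input
    c = [fi * cp + (1.0 - fi) * xt for fi, cp, xt in zip(f, c_prev, x_tilde)]
    # output: gated mix of the activated state and the raw input (highway)
    h = [ri * math.tanh(ci) + (1.0 - ri) * xi for ri, ci, xi in zip(r, c, x)]
    return h, c

def sru(xs, W, Wf, bf, Wr, br):
    """Run the cell over a sequence of input vectors, starting from c = 0."""
    c = [0.0] * len(bf)
    hs = []
    for x in xs:
        h, c = sru_step(x, c, W, Wf, bf, Wr, br)
        hs.append(h)
    return hs
```

Because the gates depend only on the current input and not on the previous hidden state, all matrix products can be computed for the whole sequence at once, which is where the speed advantage over an LSTM comes from.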

We concatenate the word embedding and the hidden state to obtain a representation of each word in the text, denoted r_t. Based on r_t, we then compute a set of dynamic filters f_{k,t}, defined as:
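The filter equation is also missing from these notes. The sketch below assumes that each of the K filters comes from its own linear layer applied to r_t, followed by a sigmoid. The sigmoid and the names `dynamic_filters`, `weights`, and `biases` are assumptions for illustration only.

```python
import math

def dynamic_filters(e_t, h_t, weights, biases):
    """Build r_t = [e_t, h_t] and K filters from it (hypothetical helper).

    weights: K matrices, each with one row per filter coefficient
    biases:  K vectors, one bias per filter coefficient
    """
    r_t = list(e_t) + list(h_t)  # concatenate embedding and hidden state
    filters = []
    for W_k, b_k in zip(weights, biases):
        pre = [sum(w * v for w, v in zip(row, r_t)) + b
               for row, b in zip(W_k, b_k)]
        # assumed sigmoid non-linearity
        filters.append([1.0 / (1.0 + math.exp(-p)) for p in pre])
    return r_t, filters
```

Each filter has one coefficient per channel of the feature map it will later be applied to, so it can act as a 1x1 convolution kernel that changes with every word.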

In this way, given the text w, we obtain both the feature representation of the text and the corresponding dynamic filters, i.e.:

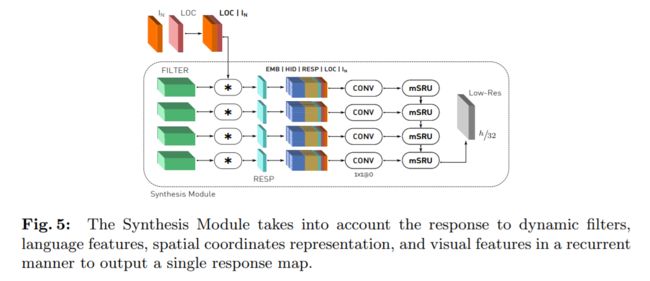

3. Synthesis Module (SM):

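SM is the core of the framework and fuses the information from the different modalities. As shown in Figure 5, we first concatenate I_N with a representation of the spatial location (LOC), then convolve the result with the dynamic filters to obtain a response map, RESP, made up of K channels. Next, we concatenate I_N, LOC, and F_t along the channel dimension to obtain a representation I'. Finally, a 1×1 convolution fuses all of this information. Each time step produces one output, M_t, which is expressed as: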
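The steps above can be sketched for a single time step as follows. This is a minimal pure-Python illustration on nested lists shaped `[channels][height][width]`. It assumes the dynamic filters act as 1x1 kernels and that the response map is what gets concatenated with I_N and LOC. The names `conv1x1` and `synthesis_step` are hypothetical.

```python
def conv1x1(feat, kernels):
    """Apply 1x1 kernels to feat ([C][H][W]); kernels is [K][C] -> [K][H][W]."""
    channels = len(feat)
    height, width = len(feat[0]), len(feat[0][0])
    return [
        [[sum(kernel[c] * feat[c][y][x] for c in range(channels))
          for x in range(width)]
         for y in range(height)]
        for kernel in kernels
    ]

def synthesis_step(i_n, loc, filters_t, fuse_kernels):
    """One time step of the fusion described above (sketch)."""
    vis_loc = i_n + loc                     # concatenate along channels
    resp = conv1x1(vis_loc, filters_t)      # K-channel response map
    i_prime = i_n + loc + resp              # I' = [I_N, LOC, response]
    return conv1x1(i_prime, fuse_kernels)   # M_t via a 1x1 convolution

# Tiny example: 1 visual channel, 1 location channel, K = 1 filter.
m_t = synthesis_step(
    i_n=[[[1.0, 2.0]]],
    loc=[[[0.5, -0.5]]],
    filters_t=[[1.0, 2.0]],
    fuse_kernels=[[1.0, 1.0, 1.0]],
)
```

A real implementation would use batched tensor operations, but the data flow is the same: the language side supplies the kernels, the visual side supplies the feature map.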

Next, an mSRU is used to produce a 3D tensor.

4. Upsampling Module (UM):

Finally, upsampling is applied to obtain the final segmentation map.
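To make the upsampling step concrete, here is a small sketch of bilinear interpolation on a single-channel map. The corner-aligned sampling convention and the function name `bilinear_upsample` are assumptions for illustration. The actual module may interleave convolutions with upsampling and may use a different alignment convention.

```python
def bilinear_upsample(grid, out_h, out_w):
    """Bilinearly resize a 2D list, mapping corners to corners (sketch)."""
    in_h, in_w = len(grid), len(grid[0])
    out = []
    for i in range(out_h):
        # source row coordinate for output row i
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        wy = y - y0
        row = []
        for j in range(out_w):
            # source column coordinate for output column j
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            wx = x - x0
            top = grid[y0][x0] * (1 - wx) + grid[y0][x1] * wx
            bottom = grid[y1][x0] * (1 - wx) + grid[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out

upsampled = bilinear_upsample([[0.0, 1.0], [2.0, 3.0]], 3, 3)
```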

===== A few questions:

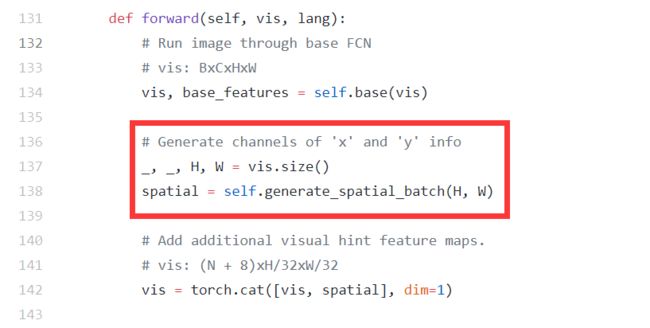

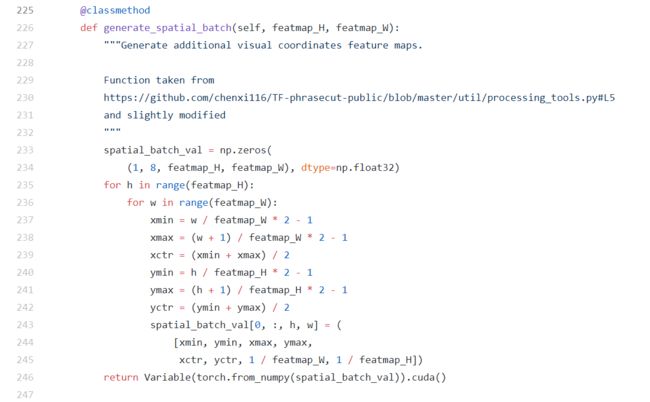

1. Why do the authors also feed the spatial location (LOC) information into the network?

The same operation can also be found in the related papers:

1. Segmentation from Natural Language Expressions ECCV 2016

2. Recurrent Multimodal Interaction for Referring Image Segmentation ICCV 2017

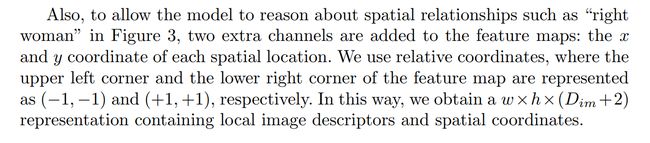

In the paper "Segmentation from Natural Language Expressions", I found the part that explains why the spatial location information is used and concatenated with the image feature maps.
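As a rough illustration of what such a location representation can look like, the sketch below builds two extra channels that hold the relative x and y coordinate of every cell, with the top-left corner at (-1, -1) and the bottom-right corner at (+1, +1). The two-channel layout and the name `coord_channels` are assumptions for this example. The papers above may use a richer encoding.

```python
def coord_channels(height, width):
    """Return [x_map, y_map], each [height][width], with values in [-1, 1]."""
    def rel(i, n):
        # map index 0..n-1 to -1..+1 (a single cell maps to 0)
        return 2.0 * i / (n - 1) - 1.0 if n > 1 else 0.0

    x_map = [[rel(x, width) for x in range(width)] for _ in range(height)]
    y_map = [[rel(y, height) for _ in range(width)] for y in range(height)]
    return [x_map, y_map]

loc = coord_channels(2, 3)
```

Convolutional features are largely translation invariant, so without these channels the network has little to go on for expressions such as "the person on the left". Concatenating the coordinates with the image feature maps gives every position an explicit notion of where it is.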

2. Run the code successfully.

    wangxiao@AHU:/DMS$ python3 -u -m dmn_pytorch.train --backend dpn92 --num-filters 10 --lang-layers 3 --mix-we --accum-iters 1
    /usr/local/lib/python3.6/site-packages/torch/utils/cpp_extension.py:118: UserWarning:

    !! WARNING !!

    !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
    Your compiler (c++) may be ABI-incompatible with PyTorch!
    Please use a compiler that is ABI-compatible with GCC 4.9 and above.
    See https://gcc.gnu.org/onlinedocs/libstdc++/manual/abi.html.

    See https://gist.github.com/goldsborough/d466f43e8ffc948ff92de7486c5216d6
    for instructions on how to install GCC 4.9 or higher.
    !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

    !! WARNING !!

    warnings.warn(ABI_INCOMPATIBILITY_WARNING.format(compiler))
    Argument list to program
    --data /DMS/referit_data
    --split_root /DMS/referit_data/referit/splits/referit
    --save_folder weights/
    --snapshot weights/qseg_weights.pth
    --num_workers 2
    --dataset unc
    --split train
    --val None
    --eval_first False
    --workers 4
    --no_cuda False
    --log_interval 200
    --backup_iters 10000
    --batch_size 1
    --epochs 40
    --lr 1e-05
    --patience 2
    --seed 1111
    --iou_loss False
    --start_epoch 1
    --optim_snapshot weights/qsegnet_optim.pth
    --accum_iters 1
    --pin_memory False
    --size 512
    --time -1
    --emb_size 1000
    --hid_size 1000
    --vis_size 2688
    --num_filters 10
    --mixed_size 1000
    --hid_mixed_size 1005
    --lang_layers 3
    --mixed_layers 3
    --backend dpn92
    --mix_we True
    --lstm False
    --high_res False
    --upsamp_mode bilinear
    --upsamp_size 3
    --upsamp_amplification 32
    --dmn_freeze False
    --visdom None
    --env DMN-train

    Processing unc: train set
    loading dataset refcoco into memory...
    creating index...
    index created.
    DONE (t=5.78s)
    Saving dataset corpus dictionary...
    100%|██████████| 42404/42404 [10:18<00:00, 68.56it/s]
    Processing unc: val set
    loading dataset refcoco into memory...
    creating index...
    index created.
    DONE (t=21.52s)
    100%|██████████| 3811/3811 [00:53<00:00, 71.45it/s]
    Processing unc: trainval set
    loading dataset refcoco into memory...
    creating index...
    index created.
    DONE (t=4.97s)
    0it [00:00, ?it/s]
    Processing unc: testA set
    loading dataset refcoco into memory...
    creating index...
    index created.
    DONE (t=5.24s)
    100%|██████████| 1975/1975 [00:27<00:00, 72.62it/s]
    Processing unc: testB set
    loading dataset refcoco into memory...
    creating index...
    index created.
    DONE (t=5.06s)
    100%|██████████| 1810/1810 [00:31<00:00, 57.91it/s]
    Train begins...
    [ 1] ( 0/120624) | ms/batch 456.690311 | loss 3.530792 | lr 0.0000100
    [ 1] ( 200/120624) | ms/batch 273.972313 | loss 1.487153 | lr 0.0000100
    [ 1] ( 400/120624) | ms/batch 257.813077 | loss 1.036689 | lr 0.0000100
    [ 1] ( 600/120624) | ms/batch 251.565860 | loss 1.047311 | lr 0.0000100
    [ 1] ( 800/120624) | ms/batch 249.070073 | loss 1.657688 | lr 0.0000100
    [ 1] ( 1000/120624) | ms/batch 246.906650 | loss 1.815347 | lr 0.0000100
    [ 1] ( 1200/120624) | ms/batch 245.645234 | loss 2.601908 | lr 0.0000100
    [ 1] ( 1400/120624) | ms/batch 245.039105 | loss 1.495383 | lr 0.0000100
    [ 1] ( 1600/120624) | ms/batch 244.460579 | loss 1.441855 | lr 0.0000100
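For keeping an eye on a run like this, a small standard-library sketch can pull the numbers out of the training lines and estimate how long one epoch will take. The regular expression is written against the line format shown in the log above. The helper names are hypothetical, and the estimate assumes the per-batch time stays constant.

```python
import re

# Matches lines such as:
# [ 1] ( 1600/120624) | ms/batch 244.460579 | loss 1.441855 | lr 0.0000100
LOG_LINE = re.compile(
    r"\[\s*(\d+)\]\s*\(\s*(\d+)/(\d+)\)\s*\|\s*ms/batch\s+([\d.]+)"
    r"\s*\|\s*loss\s+([\d.]+)\s*\|\s*lr\s+([\d.eE+-]+)"
)

def parse_log(text):
    """Extract one record per training line found in the text."""
    records = []
    for m in LOG_LINE.finditer(text):
        records.append({
            "epoch": int(m.group(1)),
            "iteration": int(m.group(2)),
            "total": int(m.group(3)),
            "ms_per_batch": float(m.group(4)),
            "loss": float(m.group(5)),
            "lr": float(m.group(6)),
        })
    return records

def estimated_epoch_hours(record):
    """Hours per epoch if every batch took record['ms_per_batch']."""
    return record["total"] * record["ms_per_batch"] / 1000.0 / 3600.0

sample = "[ 1] ( 1600/120624) | ms/batch 244.460579 | loss 1.441855 | lr 0.0000100"
last = parse_log(sample)[-1]
hours = estimated_epoch_hours(last)
```

With about 245 ms per batch and 120624 iterations per epoch, this comes to roughly eight hours per epoch on this machine.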