Training Mask_Rcnn on your own data under Win10

All rights reserved; unauthorized reproduction is prohibited. https://blog.csdn.net/fightingxyz/article/details/106094147

Environment: WIN10, pycharm, the matching CUDA and CUDNN, tensorflow1.15.0, tensorflow-gpu-1.14.0, Anaconda3

Open-source Mask RCNN project: https://github.com/matterport/Mask_RCNN

Step 1: Data preparation

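First install labelme to annotate the data: pip install labelme. Because of problems that show up in the scripts later on, some people recommend installing labelme 3.2 instead. (There are plenty of usage guides online if you have not used it before.)

For background on the mask-rcnn algorithm, see https://blog.csdn.net/linolzhang/article/details/71774168 (reading the paper itself is better still, and it is easy to find online).

I annotated my data with a recent version of labelme. For now I have a single class, which makes two classes once the background is counted.

After annotation, each image has one matching json file.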
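To make the image-to-json pairing concrete, here is a minimal sketch of the fields the parsing scripts read from a labelme file. The dictionary is a hand-written stand-in (the label name `your_class`, the file name and the polygon points are made up), and `summarize_annotation` is a hypothetical helper, not part of labelme.

```python
import json

# Hand-written stand-in for one labelme annotation file (all values are made up).
example_annotation = {
    "shapes": [
        {"label": "your_class", "points": [[10, 10], [60, 10], [60, 50]], "shape_type": "polygon"},
        {"label": "your_class", "points": [[80, 20], [120, 20], [120, 70]], "shape_type": "polygon"},
    ],
    "imagePath": "0001.jpg",
    "imageData": None,  # labelme normally stores the base64-encoded image here
}


def summarize_annotation(annotation):
    """Count the labelled shapes per class name in one labelme-style dict."""
    counts = {}
    for shape in annotation["shapes"]:
        counts[shape["label"]] = counts.get(shape["label"], 0) + 1
    return counts


# Round-trip through JSON text, as if the annotation had been read from disk.
loaded = json.loads(json.dumps(example_annotation))
summary = summarize_annotation(loaded)
print(summary)
```

Running a check like this over a folder is a quick way to spot misspelled label names before they reach the label map.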

The json files are then parsed with the following script, json_datasets.py:

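'''
This script processes a folder of json files and generates the corresponding 5 output files for each image.
json_file: input path of the json data
json_file1: output path for the generated data
'''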

# -*- coding: UTF-8 -*-

import argparse

import json

import os

import os.path as osp

import warnings

import os.path

import subprocess

import numpy as np

import PIL.Image

import cv2

import yaml

from labelme import utils

import draw_label

def main():
    # Change this to the folder that holds your annotated json files
    # json_file = 'C:/Users/QJ/Desktop/hh/total'
    json_file = 'D:/lingyun/Mask_RCNN-master/img'
    # Output root; one sub-folder per json file is created here
    json_file1 = 'D:/lingyun/Mask_RCNN-master/data/labelme_json'
    file_names = os.listdir(json_file)
    for file_name in file_names:
        path = os.path.join(json_file, file_name)
        print(path)
        if os.path.isfile(path):
            with open(path, encoding='utf-8') as fp:
                data = json.load(fp)
            img = utils.img_b64_to_arr(data['imageData'])
            lbl, lbl_names = utils.labelme_shapes_to_label(img.shape, data['shapes'])
            captions = ['%d: %s' % (l, name) for l, name in enumerate(lbl_names)]
            # lbl_viz = utils.draw_label(lbl, img, captions)
            lbl_viz = draw_label.draw_label(lbl, img, captions)
            # e.g. "0001.json" becomes "<json_file1>/0001_json"
            out_dir = osp.basename(file_name).replace('.', '_')
            # out_dir = json_file + "/" + out_dir  # original
            out_dir = json_file1 + "/" + out_dir  # changed: write under the output root
            if not osp.exists(out_dir):
                os.mkdir(out_dir)
            PIL.Image.fromarray(img).save(osp.join(out_dir, 'img.png'))
            # Save the label map as an 8-bit single-channel png
            labelpath = osp.join(out_dir, 'label.png')
            lbl8u = lbl.astype(np.uint8)
            PIL.Image.fromarray(lbl8u).save(labelpath)
            PIL.Image.fromarray(lbl_viz).save(osp.join(out_dir, 'label_viz.png'))
            with open(osp.join(out_dir, 'label_names.txt'), 'w') as f:
                for lbl_name in lbl_names:
                    f.write(lbl_name + '\n')
            warnings.warn('info.yaml is being replaced by label_names.txt')
            # Write info.yaml by hand: the "label_names:" key, then one indented entry per label
            info = dict(label_names=lbl_names)
            with open(osp.join(out_dir, 'info.yaml'), 'w') as fov:
                for key in info:
                    fov.write(key)
                    fov.write(':\n')
                for k, v in lbl_names.items():
                    fov.write(' ')
                    fov.write(k)
                    fov.write(':\n')
            print('Saved to: %s' % out_dir)

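if __name__ == '__main__':
    main()

If utils has no draw_label function, check whether Anaconda3\Lib\site-packages\labelme\utils contains a draw.py file. If that is missing too, use the code below, which is that draw.py. I saved it under the name draw_label.py, and json_datasets.py calls the draw_label function from it.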

'''I named this file draw_label so that the previous script can call its draw_label function'''

import io

import numpy as np

import PIL.Image

import PIL.ImageDraw

def label_colormap(N=256):
    def bitget(byteval, idx):
        return ((byteval & (1 << idx)) != 0)

    cmap = np.zeros((N, 3))
    for i in range(0, N):
        id = i
        r, g, b = 0, 0, 0
        for j in range(0, 8):
            r = np.bitwise_or(r, (bitget(id, 0) << 7 - j))
            g = np.bitwise_or(g, (bitget(id, 1) << 7 - j))
            b = np.bitwise_or(b, (bitget(id, 2) << 7 - j))
            id = (id >> 3)
        cmap[i, 0] = r
        cmap[i, 1] = g
        cmap[i, 2] = b
    cmap = cmap.astype(np.float32) / 255
    return cmap

# similar function as skimage.color.label2rgb
def label2rgb(lbl, img=None, n_labels=None, alpha=0.5, thresh_suppress=0):
    if n_labels is None:
        n_labels = len(np.unique(lbl))
    cmap = label_colormap(n_labels)
    cmap = (cmap * 255).astype(np.uint8)
    lbl_viz = cmap[lbl]
    lbl_viz[lbl == -1] = (0, 0, 0)  # unlabeled
    if img is not None:
        img_gray = PIL.Image.fromarray(img).convert('LA')
        img_gray = np.asarray(img_gray.convert('RGB'))
        # img_gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)
        # img_gray = cv2.cvtColor(img_gray, cv2.COLOR_GRAY2RGB)
        lbl_viz = alpha * lbl_viz + (1 - alpha) * img_gray
        lbl_viz = lbl_viz.astype(np.uint8)
    return lbl_viz

def draw_label(label, img=None, label_names=None, colormap=None):
    import matplotlib.pyplot as plt
    backend_org = plt.rcParams['backend']
    plt.switch_backend('agg')
    plt.subplots_adjust(left=0, right=1, top=1, bottom=0,
                        wspace=0, hspace=0)
    plt.margins(0, 0)
    plt.gca().xaxis.set_major_locator(plt.NullLocator())
    plt.gca().yaxis.set_major_locator(plt.NullLocator())
    if label_names is None:
        label_names = [str(l) for l in range(label.max() + 1)]
    if colormap is None:
        colormap = label_colormap(len(label_names))
    label_viz = label2rgb(label, img, n_labels=len(label_names))
    plt.imshow(label_viz)
    plt.axis('off')
    plt_handlers = []
    plt_titles = []
    for label_value, label_name in enumerate(label_names):
        if label_value not in label:
            continue
        if label_name.startswith('_'):
            continue
        fc = colormap[label_value]
        p = plt.Rectangle((0, 0), 1, 1, fc=fc)
        plt_handlers.append(p)
        plt_titles.append('{value}: {name}'
                          .format(value=label_value, name=label_name))
    plt.legend(plt_handlers, plt_titles, loc='lower right', framealpha=.5)
    f = io.BytesIO()
    plt.savefig(f, bbox_inches='tight', pad_inches=0)
    plt.cla()
    plt.close()
    plt.switch_backend(backend_org)
    out_size = (label_viz.shape[1], label_viz.shape[0])
    out = PIL.Image.open(f).resize(out_size, PIL.Image.BILINEAR).convert('RGB')
    out = np.asarray(out)
    return out

Run json_datasets.py (the img folder must contain only the json files).
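The script treats every regular file in the input folder as a json file, which is why the folder has to hold nothing else. If you would rather keep the images next to their annotations, a filter along these lines could be applied first. This is only a sketch: `list_json_files` is a hypothetical helper, and the file names in the demo are made up.

```python
import os
import tempfile


def list_json_files(folder):
    """Return the sorted names of regular files in `folder` that end in .json."""
    return sorted(
        name for name in os.listdir(folder)
        if name.lower().endswith(".json") and os.path.isfile(os.path.join(folder, name))
    )


# Demo on a throwaway folder that mixes annotations with other files.
with tempfile.TemporaryDirectory() as demo_dir:
    for name in ("a.json", "a.jpg", "b.json", "notes.txt"):
        open(os.path.join(demo_dir, name), "w").close()
    found = list_json_files(demo_dir)

print(found)
```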

The results are written to the output folder, one sub-folder per json file.

Each sub-folder contains five files.
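A quick way to confirm that a sample folder is complete is to compare its contents against the five file names that json_datasets.py writes. The sketch below does that; `missing_outputs` is a hypothetical helper, and the demo folder is created only for illustration.

```python
import os
import tempfile

# The five files that json_datasets.py writes for each annotated image.
EXPECTED_FILES = {"img.png", "label.png", "label_viz.png", "label_names.txt", "info.yaml"}


def missing_outputs(sample_dir):
    """Return the expected file names that are absent from `sample_dir`, sorted."""
    return sorted(EXPECTED_FILES - set(os.listdir(sample_dir)))


# Demo: a folder where only the three png files were written.
with tempfile.TemporaryDirectory() as sample_dir:
    for name in ("img.png", "label.png", "label_viz.png"):
        open(os.path.join(sample_dir, name), "w").close()
    missing = missing_outputs(sample_dir)

print(missing)
```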

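Further reading: https://blog.csdn.net/xjtdw/article/details/94741984 gives a very good summary.

There is also another approach, although it only suits data with a small number of classes.

'''This version is written for the two-class case'''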

import argparse

import json

import os

import os.path as osp

import warnings

import copy

import shutil

import numpy as np

import PIL.Image

from skimage import io

import yaml

from labelme import utils

NAME_LABEL_MAP = {
    '_background_': 0,
    'your_class': 1,  # replace with your own class name
    # 'Rock': 2,
    # 'coal': 3,
}

def main():
    json_file = 'location of your data'  # placeholder: folder with the images and json files
    out_dir = 'output location for the data'  # placeholder: folder for the generated masks
    if not os.path.exists(out_dir):
        os.mkdir(out_dir)
    print(out_dir)
    file_names = os.listdir(json_file)
    for file_name in file_names:
        path = os.path.join(json_file, file_name)
        if file_name.split(".")[-1] != "json":
            continue
        filename = file_name[:-5]  # strip ".json"
        print(filename)
        # label_name_to_value = {'_background_': 0}
        if os.path.isfile(path):
            with open(path, encoding='utf-8') as fp:
                data = json.load(fp)
            img = utils.image.img_b64_to_arr(data['imageData'])
            lbl, lbl_names = utils.shape.labelme_shapes_to_label(img.shape, data['shapes'])
            # lbl, lbl_names = utils.shape.shapes_to_label(img.shape, data['shapes'])
            # lbl = utils.shapes_to_label(img.shape, data['shapes'], label_name_to_value)
            # modify labels according to NAME_LABEL_MAP
            lbl_tmp = copy.copy(lbl)
            for key_name in lbl_names:
                old_lbl_val = lbl_names[key_name]
                new_lbl_val = NAME_LABEL_MAP[key_name]
                lbl_tmp[lbl == old_lbl_val] = new_lbl_val
            lbl_names_tmp = {}
            for key_name in lbl_names:
                lbl_names_tmp[key_name] = NAME_LABEL_MAP[key_name]
            # Assign the new label to lbl and lbl_names dict
            lbl = np.array(lbl_tmp, dtype=np.int8)
            lbl_names = lbl_names_tmp
            captions = ['%d: %s' % (l, name) for l, name in enumerate(lbl_names)]
            # lbl_viz = utils.draw.draw_label(lbl, img, captions)
            utils.lblsave(osp.join(out_dir, '{}.png'.format(filename)), lbl)
            print('Saved to: %s' % out_dir)

if __name__ == '__main__':
    main()

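This script parses the json files and generates the corresponding mask images, but they are not single-channel and need one more processing step. (Note: the data folder here must contain both the images and the json files.)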
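Why "not single-channel"? My understanding, which you should treat as an assumption about labelme rather than a guarantee, is that `utils.lblsave` writes a palette png: each pixel stores a class index, and a colour table turns it into RGB when the file is viewed. The sketch below builds such a png in memory with Pillow and shows that reading it with `np.array` gives the indices back, while converting to RGB gives three channels. The tiny mask and the palette colours are made up.

```python
import io

import numpy as np
from PIL import Image

# A tiny class-index map: 0 is background, 1 is the single foreground class.
class_indices = np.zeros((4, 6), dtype=np.uint8)
class_indices[1:3, 2:5] = 1

# Build a palette ("P" mode) image: index 0 is black, index 1 is dark red.
palette = [0, 0, 0, 128, 0, 0] + [0] * (768 - 6)
palette_img = Image.frombytes("P", (6, 4), class_indices.tobytes())
palette_img.putpalette(palette)

# Save to an in-memory png and read it back, as if it came from disk.
buffer = io.BytesIO()
palette_img.save(buffer, format="PNG")
buffer.seek(0)
reloaded = Image.open(buffer)

as_indices = np.array(reloaded)             # 2-D array of palette indices
as_rgb = np.array(reloaded.convert("RGB"))  # 3-channel colour image

print(as_indices.shape, as_rgb.shape)
```

Tools that expand the palette on load behave like the `convert("RGB")` branch, which is where the colour channels come from.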

Then use the code below to convert them to grayscale images:

import glob

import os.path

import numpy as np

from PIL import Image

import tensorflow as tf

FLAGS = tf.compat.v1.flags.FLAGS
tf.compat.v1.flags.DEFINE_string('original_gt_folder',
                                 'your mask data',
                                 'Original ground truth annotations.')
tf.compat.v1.flags.DEFINE_string('segmentation_format', 'png', 'Segmentation format.')
tf.compat.v1.flags.DEFINE_string('output_dir',
                                 'output folder for the single-channel mask data',
                                 'folder to save modified ground truth annotations.')

def _remove_colormap(filename):
    """Removes the color map from the annotation.

    Args:
      filename: Ground truth annotation filename.

    Returns:
      Annotation without color map.
    """
    return np.array(Image.open(filename))

def _save_annotation(annotation, filename):
    """Saves the annotation as png file.

    Args:
      annotation: Segmentation annotation.
      filename: Output filename.
    """
    pil_image = Image.fromarray(annotation.astype(dtype=np.uint8))
    with tf.io.gfile.GFile(filename, mode='w') as f:
        pil_image.save(f, 'PNG')

def main(unused_argv):
    # Create the output directory if not exists.
    if not tf.io.gfile.isdir(FLAGS.output_dir):
        tf.io.gfile.makedirs(FLAGS.output_dir)
    annotations = glob.glob(os.path.join(FLAGS.original_gt_folder,
                                         '*.' + FLAGS.segmentation_format))
    for annotation in annotations:
        raw_annotation = _remove_colormap(annotation)
        filename = os.path.basename(annotation)[:-4]
        _save_annotation(raw_annotation,
                         os.path.join(
                             FLAGS.output_dir,
                             filename + '.' + FLAGS.segmentation_format))

if __name__ == '__main__':
    tf.compat.v1.app.run()

This yields the grayscale mask images. They look completely blank when opened, but that is only how they look: the data is there.
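The masks look blank because the pixel values are class indices such as 0 and 1, which are nearly indistinguishable on a 0 to 255 grey scale. A small sketch with a synthetic mask (the array below is made up) shows how to confirm that the data is present and how to stretch the values for viewing only:

```python
import numpy as np

# Synthetic single-channel mask: 0 is background, 1 is the object class.
mask = np.zeros((4, 6), dtype=np.uint8)
mask[1:3, 2:5] = 1

# Count how many pixels carry each value.
values, counts = np.unique(mask, return_counts=True)
pixel_counts = {int(v): int(c) for v, c in zip(values, counts)}

# Stretch to the full 0-255 range for viewing only; train on the original mask.
preview = (mask.astype(np.float32) / max(int(mask.max()), 1) * 255).astype(np.uint8)

print(pixel_counts, preview.max())
```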

Step 2: Training

Put your images and the corresponding mask images in the matching folders. Here is the code:

import os

import sys

import random

import math

import re

import time

import numpy as np

import cv2

import matplotlib

import matplotlib.pyplot as plt

# Root directory of the project

ROOT_DIR = os.path.abspath("../../")

print(ROOT_DIR)

# Import Mask RCNN

sys.path.append(ROOT_DIR) # To find local version of the library

from mrcnn.config import Config

from mrcnn import utils

import mrcnn.model as modellib

from mrcnn import visualize

from mrcnn.model import log

from PIL import Image

# %matplotlib inline

# Directory to save logs and trained model
MODEL_DIR = os.path.join(ROOT_DIR, "model save location")
print(MODEL_DIR)
# Local path to trained weights file
COCO_MODEL_PATH = os.path.join(ROOT_DIR, "location of the pretrained model, mask_rcnn_coco.h5")
# Download COCO trained weights from Releases if needed
if not os.path.exists(COCO_MODEL_PATH):
    utils.download_trained_weights(COCO_MODEL_PATH)

class ShapesConfig(Config):

"""Configuration for training on the toy shapes dataset.

Derives from the base Config class and overrides values specific

to the toy shapes dataset.

"""

# Give the configuration a recognizable name

NAME = "shapes"

# Train on 1 GPU and 8 images per GPU. We can put multiple images on each

# GPU because the images are small. Batch size is 8 (GPUs * images/GPU).

GPU_COUNT = 1

IMAGES_PER_GPU = 8

# Number of classes (including background)

# NUM_CLASSES = 1 + 3 # background + 3 shapes

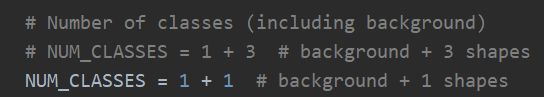

NUM_CLASSES = 1 + 1 # background + 1 shapes #此处需要更改!!!!

# Use small images for faster training. Set the limits of the small side

# the large side, and that determines the image shape.

IMAGE_MIN_DIM = 128

IMAGE_MAX_DIM = 128

# Use smaller anchors because our image and objects are small

RPN_ANCHOR_SCALES = (8, 16, 32, 64, 128) # anchor side in pixels

# Reduce training ROIs per image because the images are small and have

# few objects. Aim to allow ROI sampling to pick 33% positive ROIs.

TRAIN_ROIS_PER_IMAGE = 32

# Use a small epoch since the data is simple

# STEPS_PER_EPOCH = 100

STEPS_PER_EPOCH = 20

# use small validation steps since the epoch is small

VALIDATION_STEPS = 5

config = ShapesConfig()

config.display()

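def get_ax(rows=1, cols=1, size=8):
    """
    Return a Matplotlib Axes array to be used in all visualizations.
    Provides a central point to control graph sizes.
    Change the default size attribute to control the size of rendered images.
    """
    _, ax = plt.subplots(rows, cols, figsize=(size * cols, size * rows))
    return ax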

class ShapesDataset(utils.Dataset):
    """
    count: number of images to load
    img_floder: path of the image data
    mask_floder: path of the mask data
    imglist: list of image file names
    dataset_root_path: root path of the dataset
    """
    def load_shapes_process(self, count, img_floder, mask_floder, imglist, dataset_root_path):
        # Register your classes here; this needs to be changed
        self.add_class("shapes", 1, "your_class")
        for i in range(count):
            filestr = imglist[i].split(".")[0]
            mask_path = mask_floder + "/" + filestr + ".png"
            # Read img.png only to get the image width and height; other ways work just as well
            cv_img = cv2.imread(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")
            self.add_image("shapes", image_id=i, path=img_floder + "/" + imglist[i],
                           width=cv_img.shape[1], height=cv_img.shape[0], mask_path=mask_path)

    def load_mask(self, image_id):
        """Generate instance masks for the shapes of the given image ID."""
        # Override: you must write this yourself, otherwise utils.Dataset.load_mask() is used
        info = self.image_info[image_id]
        count = 1  # number of object
        img = Image.open(info['mask_path'])  # open the mask image from its path
        num_obj = np.max(img)  # largest pixel value in the mask
        mask = np.zeros([info['height'], info['width'], num_obj], dtype=np.uint8)  # h * w * num_obj
        mask = self.draw_shape_process(num_obj, mask, img, image_id)
        # np.logical_not returns booleans; convert them to np.uint8
        occlusion = np.logical_not(mask[:, :, -1]).astype(np.uint8)
        for i in range(count - 2, -1, -1):
            mask[:, :, i] = mask[:, :, i] * occlusion
            occlusion = np.logical_and(occlusion, np.logical_not(mask[:, :, i]))
        # Placeholders: use one entry per mask channel, and only names registered with add_class
        labels = ["class_name_1", "class_name_2"]
        labels_form = []
        for i in range(len(labels)):
            if labels[i].find("class_name_1") != -1:
                labels_form.append("class_name_1")
            elif labels[i].find("class_name_2") != -1:
                labels_form.append("class_name_2")
        class_ids = np.array([self.class_names.index(s) for s in labels_form])
        return mask, class_ids.astype(np.int32)

    def draw_shape_process(self, num_obj, mask, image, image_id):
        """Fill the h * w * num_obj mask: channel `index` marks the pixels whose value is index + 1."""
        info = self.image_info[image_id]
        for index in range(num_obj):
            for i in range(info['width']):
                for j in range(info['height']):
                    at_pixel = image.getpixel((i, j))
                    if at_pixel == index + 1:
                        mask[j, i, index] = 1
        return mask

# Basic settings
dataset_root_path = "data/"
img_floder = "location of the original images"
mask_floder = "location of the masks"
# yaml_floder = dataset_root_path
imglist = os.listdir(img_floder)
count = len(imglist)
# print(1)
# Training dataset
dataset_train = ShapesDataset()
# dataset_train.load_shapes(count, img_floder, mask_floder, imglist, dataset_root_path)
dataset_train.load_shapes_process(count, img_floder, mask_floder, imglist, dataset_root_path)
dataset_train.prepare()
# print(2)
# Validation dataset (here simply the first 7 images of the same list)
dataset_val = ShapesDataset()
# dataset_val.load_shapes(7, img_floder, mask_floder, imglist, dataset_root_path)
dataset_val.load_shapes_process(7, img_floder, mask_floder, imglist, dataset_root_path)
dataset_val.prepare()

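# Create model in training mode
model = modellib.MaskRCNN(mode="training", config=config,
                          model_dir=MODEL_DIR)
# Which weights to start with?
init_with = "coco"  # imagenet, coco, or last
if init_with == "imagenet":
    model.load_weights(model.get_imagenet_weights(), by_name=True)
elif init_with == "coco":
    model.load_weights(COCO_MODEL_PATH, by_name=True,
                       exclude=["mrcnn_class_logits", "mrcnn_bbox_fc",
                                "mrcnn_bbox", "mrcnn_mask"])
elif init_with == "last":
    # Load the last model you trained and continue training
    model.load_weights(model.find_last(), by_name=True)
# Training schedule
# Train the head branches. Passing layers="heads" freezes all layers except the heads.
# You can also pass a regular expression to select which layers to train by name pattern.
model.train(dataset_train, dataset_val,
            learning_rate=config.LEARNING_RATE,
            epochs=10,
            layers='heads')
# Fine-tune all layers. Passing layers="all" trains all layers. You can also pass a
# regular expression to select which layers to train by name pattern.
# Note: in matterport's train(), epochs is the cumulative epoch number to reach, so it
# has to exceed the 10 above for this stage to run; 15 gives 5 more epochs.
model.train(dataset_train, dataset_val,
            learning_rate=config.LEARNING_RATE / 10,
            epochs=15,
            layers="all")

The places that need changing are marked in the code; adapt them to your own situation. This code also needs the pretrained model, just as in my previous blog post, which you can refer to.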
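One detail that is easy to miss in a two-stage schedule: as far as I can tell from the matterport implementation, the `epochs` argument of `model.train` is the epoch number to train up to, counted across calls, not the number of additional epochs. The helper below is hypothetical and does not call Mask_RCNN at all; it only illustrates that bookkeeping so you can check your own schedule.

```python
def epochs_actually_run(schedule):
    """Given (stage_name, epochs_arg) pairs, return how many epochs each stage trains
    when `epochs_arg` is interpreted as a cumulative target epoch number."""
    done = 0
    result = []
    for stage, target in schedule:
        ran = max(0, target - done)  # nothing runs if the target was already reached
        result.append((stage, ran))
        done = max(done, target)
    return result


# A second stage whose target is below the first one never trains.
too_small = epochs_actually_run([("heads", 10), ("all", 5)])
# Raising the target above the first stage gives the intended 5 extra epochs.
cumulative = epochs_actually_run([("heads", 10), ("all", 15)])

print(too_small)
print(cumulative)
```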

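The pretrained weights are available at: https://github.com/matterport/Mask_RCNN/releases

The results look like this:

load_mask() from utils.py must be overridden so that it loads our own mask data. Otherwise errors keep being raised.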
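The per-pixel loops used to fill the mask work, but they are slow on large images. As a possible alternative, here is a NumPy sketch that produces the same kind of h * w * num_obj stack in one comparison. `split_index_mask` is a hypothetical helper, not part of Mask_RCNN, and it assumes the mask png stores integer values 1..N with 0 as background.

```python
import numpy as np


def split_index_mask(index_mask):
    """Turn an H x W array of integer labels into an H x W x N stack of binary masks,
    one channel per label value 1..N (0 is treated as background)."""
    num_obj = int(index_mask.max())
    values = np.arange(1, num_obj + 1)
    # Broadcasting compares every pixel against every label value at once.
    return (index_mask[:, :, None] == values[None, None, :]).astype(np.uint8)


# Made-up 2 x 3 mask containing label values 1 and 2.
index_mask = np.array([[0, 1, 1],
                       [2, 2, 0]], dtype=np.uint8)
masks = split_index_mask(index_mask)

print(masks.shape)
```

Inside a `load_mask` override, the input would be `np.array(Image.open(info['mask_path']))`, and the returned class ids still need one entry per channel.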

To test on your own data, use your own .h5 file in place of the pretrained model and run detection on your data.

Step 3: Testing

# -*- coding: utf-8 -*-

import os

import sys

import random

import math

import numpy as np

import skimage.io

import matplotlib

import matplotlib.pyplot as plt

import cv2

import time

from mrcnn.config import Config

from datetime import datetime

# Root directory of the project

ROOT_DIR = os.getcwd()

# Import Mask RCNN

sys.path.append(ROOT_DIR) # To find local version of the library

from mrcnn import utils

import mrcnn.model as modellib

from mrcnn import visualize

# Import COCO config

sys.path.append(os.path.join(ROOT_DIR, "samples/coco/")) # To find local version

from samples.coco import coco

# Directory to save logs and trained model
MODEL_DIR = os.path.join(ROOT_DIR, "logs_process")
# Local path to trained weights file
'''Put the path of your own .h5 file here'''
COCO_MODEL_PATH = os.path.join(MODEL_DIR, "shapes20200512T1706", "mask_rcnn_shapes_0005.h5")
# Download COCO trained weights from Releases if needed
# Caution: if the file above is missing, this downloads the COCO weights to that path instead
if not os.path.exists(COCO_MODEL_PATH):
    utils.download_trained_weights(COCO_MODEL_PATH)
print("cuiwei***********************")
# Directory of images to run detection on
IMAGE_DIR = os.path.join(ROOT_DIR, "data/pic")  # location of your data

class ShapesConfig(Config):
    """Configuration for training on the toy shapes dataset.
    Derives from the base Config class and overrides values specific
    to the toy shapes dataset.
    """
    # Give the configuration a recognizable name
    NAME = "shapes"
    # Run on 1 GPU with 1 image per GPU, so the batch size is 1 (GPUs * images/GPU).
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1
    # Number of classes (including background); remember to change this
    NUM_CLASSES = 1 + 1  # background + 1 shape
    # Use small images for faster training. Set the limits of the small side
    # the large side, and that determines the image shape.
    IMAGE_MIN_DIM = 320
    IMAGE_MAX_DIM = 384
    # Anchor sizes, scaled up 6x from the toy-shapes defaults
    RPN_ANCHOR_SCALES = (8 * 6, 16 * 6, 32 * 6, 64 * 6, 128 * 6)  # anchor side in pixels
    # Reduce training ROIs per image because the images are small and have
    # few objects. Aim to allow ROI sampling to pick 33% positive ROIs.
    TRAIN_ROIS_PER_IMAGE = 100
    # Use a small epoch since the data is simple
    STEPS_PER_EPOCH = 100
    # use small validation steps since the epoch is small
    VALIDATION_STEPS = 50

class InferenceConfig(ShapesConfig):
    # Set batch size to 1 since we'll be running inference on
    # one image at a time. Batch size = GPU_COUNT * IMAGES_PER_GPU
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1

config = InferenceConfig()
# Create model object in inference mode.
model = modellib.MaskRCNN(mode="inference", model_dir=MODEL_DIR, config=config)
# Load the weights from your own training run (the variable name is kept from the COCO demo)
model.load_weights(COCO_MODEL_PATH, by_name=True)

# IMAGE_DIR = os.path.join(ROOT_DIR, "data/pic")
file_names_path = r"D:\lingyun\new_data_program\data\no_light_red"
file_names_img = os.listdir(file_names_path)
# Class names indexed by class id; index 0 is the background.
# 'your_class' is a placeholder: use the name registered with add_class during training.
class_names = ['BG', 'your_class']
for file_names_img_i in file_names_img:
    img_path = os.path.join(file_names_path, file_names_img_i)
    image = skimage.io.imread(img_path)
    a = datetime.now()
    # Run detection
    results = model.detect([image], verbose=1)
    b = datetime.now()
    # total_seconds() keeps the fractional part; .seconds would truncate to whole seconds
    print("elapsed seconds", (b - a).total_seconds())
    # Visualize results
    r = results[0]
    visualize.display_instances(image, r['rois'], r['masks'], r['class_ids'],
                                class_names, r['scores'])
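Besides the visualization, it can help to print the detections as text. The sketch below assumes a result dictionary shaped like the one used above, with `class_ids` and `scores` arrays. `summarize_detections` is a hypothetical helper, the values in `fake_result` are made up, and `class_names` is a placeholder list whose index 0 is the background. It also shows why `total_seconds()` is safer than `.seconds` for sub-second timings.

```python
from datetime import timedelta

import numpy as np

class_names = ["BG", "your_class"]  # placeholder names; index 0 is the background


def summarize_detections(result, names):
    """Return (class name, score rounded to 3 decimals) for every detection."""
    return [
        (names[int(class_id)], round(float(score), 3))
        for class_id, score in zip(result["class_ids"], result["scores"])
    ]


# Made-up stand-in for one entry of the list returned by model.detect().
fake_result = {
    "class_ids": np.array([1, 1], dtype=np.int32),
    "scores": np.array([0.98, 0.76], dtype=np.float32),
}
summary = summarize_detections(fake_result, class_names)

# A detection that took 850 ms: .seconds drops the fraction, total_seconds() keeps it.
elapsed = timedelta(milliseconds=850)
print(summary, elapsed.seconds, elapsed.total_seconds())
```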