DPDK Study Notes 6 - eth dev Initialization, Part 2: rte_eal_init

After main starts, it calls rte_eal_init. Within rte_eal_init, the parts relevant to ether dev are rte_bus_scan and rte_bus_probe.

1 rte_bus_scan

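This is the initial bus scan. The function below walks the rte_bus_list linked list and calls each bus's scan callback in turn. Note that a failed scan is only logged: the loop continues and the function still returns 0.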

1.1 rte_bus_scan

/* Scan all the buses for registered devices */

int

rte_bus_scan(void)

{

int ret;

struct rte_bus *bus = NULL;

TAILQ_FOREACH(bus, &rte_bus_list, next) {

ret = bus->scan();

if (ret)

RTE_LOG(ERR, EAL, "Scan for (%s) bus failed.\n",

bus->name);

}

return 0;

}
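The list walk above can be reproduced outside DPDK with the same `TAILQ` macros from `<sys/queue.h>`. The following is a minimal sketch, not DPDK code: `toy_bus`, `toy_bus_scan_all` and the two scan callbacks are hypothetical names, and unlike the listing above the sketch returns the number of failed scans so the behaviour can be observed.

```c
#include <assert.h>
#include <stdio.h>
#include <sys/queue.h>

/* Hypothetical, simplified stand-in for struct rte_bus. */
struct toy_bus {
    TAILQ_ENTRY(toy_bus) next;   /* list linkage */
    const char *name;
    int (*scan)(void);           /* returns 0 on success */
};

TAILQ_HEAD(toy_bus_list, toy_bus);

static int scan_ok(void)   { return 0; }
static int scan_fail(void) { return -1; }

/*
 * Scan every bus on the list. A failure is logged but does not stop the
 * walk. Returns the number of buses whose scan failed (an assumption of
 * this sketch; the listing above always returns 0).
 */
static int toy_bus_scan_all(struct toy_bus_list *list)
{
    struct toy_bus *bus;
    int failed = 0;

    assert(list != NULL);
    TAILQ_FOREACH(bus, list, next) {
        if (bus->scan() != 0) {
            fprintf(stderr, "Scan for (%s) bus failed.\n", bus->name);
            failed++;
        }
    }
    return failed;
}

/* Build a two-bus list (one scan succeeds, one fails) and scan it. */
static int toy_bus_demo(void)
{
    struct toy_bus_list list = TAILQ_HEAD_INITIALIZER(list);
    struct toy_bus pci  = { .name = "pci",  .scan = scan_ok };
    struct toy_bus vdev = { .name = "vdev", .scan = scan_fail };

    TAILQ_INSERT_TAIL(&list, &pci, next);
    TAILQ_INSERT_TAIL(&list, &vdev, next);
    return toy_bus_scan_all(&list);
}
```

Calling `toy_bus_demo()` visits both buses even though the second scan fails, which is the same "log and continue" policy as the loop above.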

1.2 rte_pci_scan

The scan callback of rte_pci_bus.bus is rte_pci_scan:

struct rte_pci_bus rte_pci_bus = {

.bus = {

.scan = rte_pci_scan,

.probe = rte_pci_probe,

},

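1.3 Debugging with gdb

rte_pci_scan scans every PCI device in the system and inserts each one into the rte_pci_bus.device_list list. The system exposes its PCI devices under /sys/bus/pci/devices. Of these, I bound 00:03.0 and 00:08.0 to igb_uio, and they serve as the ether devs that DPDK uses to send and receive packets.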

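The gdb session went as follows:

1.3.2 pci_scan_one is the function that actually handles each PCI device

The first device processed is 00:08.0. Before it is processed, the head pointer of rte_pci_bus.device_list is 0.

After 00:08.0 has been processed and before the next device, 00:0d.0, is handled, the head pointer of rte_pci_bus.device_list is set. Inspecting the first device in the list shows that it is the 00:08.0 just processed. Its tqe_next is 0, meaning no device follows it, and its kdrv is RTE_KDRV_IGB_UIO.

After three devices have been inserted, only 00:08.0 has kdrv set to IGB UIO.

Once all devices have been inserted, 00:03.0 and 00:08.0 are the two PCI devices that I bound to IGB UIO.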

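1.3.3 Before entering rte_bus_probe

Once rte_bus_scan finishes, every PCI device has been inserted into the rte_pci_bus.device_list list. The PCI PMD drivers, which are registered from GCC constructor functions, are stored in the rte_pci_bus.driver_list list; one of them is the e1000 em PMD driver we need.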
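Registration from a constructor means the driver list is already populated before main runs. The sketch below shows the general mechanism with the GCC/Clang `constructor` attribute; the `toy_*` names are hypothetical, and DPDK wraps this pattern in its own registration macros rather than exposing it in this form.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/queue.h>

/* Hypothetical, simplified stand-in for a PCI driver entry. */
struct toy_driver {
    TAILQ_ENTRY(toy_driver) next;
    const char *name;
};

TAILQ_HEAD(toy_driver_list, toy_driver);

/* Global driver list, analogous in spirit to rte_pci_bus.driver_list. */
static struct toy_driver_list toy_drivers =
    TAILQ_HEAD_INITIALIZER(toy_drivers);

static void toy_register(struct toy_driver *drv)
{
    assert(drv != NULL);
    TAILQ_INSERT_TAIL(&toy_drivers, drv, next);
}

static struct toy_driver toy_em_driver = { .name = "toy_em" };

/* GCC/Clang run this before main(), so no explicit call is needed. */
__attribute__((constructor))
static void toy_em_register(void)
{
    toy_register(&toy_em_driver);
}

/* Count the registered drivers. */
static size_t toy_driver_count(void)
{
    const struct toy_driver *drv;
    size_t n = 0;

    TAILQ_FOREACH(drv, &toy_drivers, next)
        n++;
    return n;
}
```

By the time main starts, `toy_driver_count()` already reports the one driver registered by the constructor, which is why the probe step can assume the driver list is complete.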

2 rte_bus_probe

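After the steps above, rte_pci_bus.driver_list holds all the PMD drivers and rte_pci_bus.device_list holds the information for all PCI devices.

rte_bus_probe probes the buses in detail. It iterates over all buses; when handling the PCI bus it iterates over all devices, and for each device it iterates over all PMD drivers. Only when a PCI device matches an entry in a driver's id_table is it identified as a port that DPDK needs. An eth dev entry is then allocated from the global array; once processing finishes, that entry represents the port and holds its device and driver information.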

struct rte_pci_bus rte_pci_bus = {

.bus = {

.scan = rte_pci_scan,

.probe = rte_pci_probe,

},

static struct rte_pci_driver rte_em_pmd = {

.id_table = pci_id_em_map,

.drv_flags = RTE_PCI_DRV_NEED_MAPPING | RTE_PCI_DRV_INTR_LSC |

RTE_PCI_DRV_IOVA_AS_VA,

.probe = eth_em_pci_probe,

.remove = eth_em_pci_remove,

};

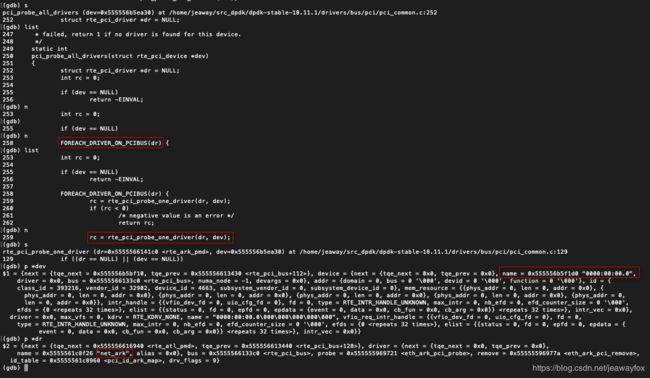

2.1 rte_pci_probe_one_driver

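In rte_bus_probe, all bus types are iterated through the global list head rte_bus_list. The PCI bus handler rte_pci_probe then iterates over all devices, and pci_probe_all_drivers, which probes the PMD drivers for each device, iterates over all PMD drivers.

As the figure below shows, by the time rte_pci_probe_one_driver runs, both the device and the driver are fixed.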

2.2 rte_pci_match

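2.2.1 Filtering out non-matching (device, driver) pairs

The device has an id struct that stores vendor_id, device_id and related fields.

The driver has an id_table, which is an array of such structs. The code walks this array and compares each entry with the device's id; pairs that do not match are filtered out.

2.2.2 rte_pci_match succeeds

This finds the device we need, 00:03.0, and the matching em PMD driver. As the figure below shows, the device's id matches an entry in the driver's id_table. The values 16777215 (0xFFFFFF) and 65535 (0xFFFF) in the driver entry are all Fs, meaning they match any value.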

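2.2.3 After rte_pci_match

After a successful match, rte_pci_probe_one_driver continues as follows:

- Attach the driver to the device struct.

- Call rte_pci_map_device (I did not look into this in depth).

- Call the driver's probe function, which here is eth_em_pci_probe.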

if (!already_probed)

dev->driver = dr;

if (!already_probed && (dr->drv_flags & RTE_PCI_DRV_NEED_MAPPING)) {

/* map resources for devices that use igb_uio */

ret = rte_pci_map_device(dev);

if (ret != 0) {

dev->driver = NULL;

return ret;

}

}

/* call the driver probe() function */

ret = dr->probe(dr, dev);

static int eth_em_pci_probe(struct rte_pci_driver *pci_drv __rte_unused,

struct rte_pci_device *pci_dev)

{

return rte_eth_dev_pci_generic_probe(pci_dev,

sizeof(struct e1000_adapter), eth_em_dev_init);

}

2.3 eth_em_pci_probe and rte_eth_dev_pci_generic_probe

rte_eth_dev_pci_generic_probe first obtains the first free entry from the global array rte_eth_devices[], then calls the PMD-specific dev_init (here eth_em_dev_init).

static inline int

rte_eth_dev_pci_generic_probe(struct rte_pci_device *pci_dev,

size_t private_data_size, eth_dev_pci_callback_t dev_init)

{

struct rte_eth_dev *eth_dev;

int ret;

eth_dev = rte_eth_dev_pci_allocate(pci_dev, private_data_size);

if (!eth_dev)

return -ENOMEM;

RTE_FUNC_PTR_OR_ERR_RET(*dev_init, -EINVAL);

ret = dev_init(eth_dev);

if (ret)

rte_eth_dev_pci_release(eth_dev);

else

rte_eth_dev_probing_finish(eth_dev);

return ret;

}
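The shape of this function is allocate, initialize, then either release on failure or mark probing as finished. Below is a minimal sketch of that pattern with plain `calloc`/`free`. All `toy_*` names are hypothetical, and unlike the listing above the sketch checks the callback pointer before allocating, so that path has nothing to undo.

```c
#include <assert.h>
#include <errno.h>
#include <stdlib.h>

/* Hypothetical, simplified stand-in for struct rte_eth_dev. */
struct toy_eth_dev {
    void *dev_private;      /* driver-specific area */
    int probing_finished;   /* set once init succeeded */
};

typedef int (*toy_dev_init_t)(struct toy_eth_dev *dev);

static int toy_live_devs;   /* number of currently allocated devices */

static struct toy_eth_dev *toy_alloc(size_t priv_size)
{
    struct toy_eth_dev *dev = calloc(1, sizeof(*dev));

    if (dev == NULL)
        return NULL;
    if (priv_size != 0) {
        dev->dev_private = calloc(1, priv_size);
        if (dev->dev_private == NULL) {
            free(dev);
            return NULL;
        }
    }
    toy_live_devs++;
    return dev;
}

static void toy_release(struct toy_eth_dev *dev)
{
    free(dev->dev_private);
    free(dev);
    toy_live_devs--;
}

/* Allocate a device, run the driver's init, and undo on failure. */
static int toy_generic_probe(size_t priv_size, toy_dev_init_t init,
                             struct toy_eth_dev **out)
{
    struct toy_eth_dev *dev;
    int ret;

    assert(out != NULL);
    *out = NULL;
    if (init == NULL)
        return -EINVAL;
    dev = toy_alloc(priv_size);
    if (dev == NULL)
        return -ENOMEM;
    ret = init(dev);
    if (ret != 0) {
        toy_release(dev);   /* init failed: give the device back */
        return ret;
    }
    dev->probing_finished = 1;
    *out = dev;
    return 0;
}

static int toy_init_ok(struct toy_eth_dev *dev)   { (void)dev; return 0; }
static int toy_init_fail(struct toy_eth_dev *dev) { (void)dev; return -EIO; }
```

Passing `toy_init_fail` leaves no device allocated afterwards, which is the property the release branch in the listing is there to guarantee.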

2.4 rte_eth_dev_pci_allocate

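Let's look at how rte_eth_dev_pci_allocate obtains an eth dev. It first allocates a new eth dev keyed by the device name, which must be unique; it then allocates private_data_size bytes of private data; finally it copies the device's information into the eth dev.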

static inline struct rte_eth_dev *

rte_eth_dev_pci_allocate(struct rte_pci_device *dev, size_t private_data_size)

{

struct rte_eth_dev *eth_dev;

const char *name;

if (!dev)

return NULL;

name = dev->device.name;

if (rte_eal_process_type() == RTE_PROC_PRIMARY) {

eth_dev = rte_eth_dev_allocate(name);

if (!eth_dev)

return NULL;

if (private_data_size) {

eth_dev->data->dev_private = rte_zmalloc_socket(name,

private_data_size, RTE_CACHE_LINE_SIZE,

dev->device.numa_node);

if (!eth_dev->data->dev_private) {

rte_eth_dev_release_port(eth_dev);

return NULL;

}

}

} else {

eth_dev = rte_eth_dev_attach_secondary(name);

if (!eth_dev)

return NULL;

}

eth_dev->device = &dev->device;

rte_eth_copy_pci_info(eth_dev, dev);

return eth_dev;

}

rte_eth_dev_allocate is the function that actually acquires the eth dev resource:

struct rte_eth_dev *

rte_eth_dev_allocate(const char *name)

{

uint16_t port_id;

struct rte_eth_dev *eth_dev = NULL;

// Prepare the data shared between processes; it is later attached to eth_dev->data.

rte_eth_dev_shared_data_prepare();

/* Synchronize port creation between primary and secondary threads. */

rte_spinlock_lock(&rte_eth_dev_shared_data->ownership_lock);

// Check whether this name has already been allocated.

if (_rte_eth_dev_allocated(name) != NULL) {

RTE_ETHDEV_LOG(ERR,

"Ethernet device with name %s already allocated\n",

name);

goto unlock;

}

// Find the first free port id.

port_id = rte_eth_dev_find_free_port();

if (port_id == RTE_MAX_ETHPORTS) {

RTE_ETHDEV_LOG(ERR,

"Reached maximum number of Ethernet ports\n");

goto unlock;

}

// Return the entry rte_eth_devices[port_id] of the global array, and attach the shared data prepared above to eth_dev->data.

eth_dev = eth_dev_get(port_id);

snprintf(eth_dev->data->name, sizeof(eth_dev->data->name), "%s", name);

eth_dev->data->port_id = port_id;

eth_dev->data->mtu = ETHER_MTU;

unlock:

rte_spinlock_unlock(&rte_eth_dev_shared_data->ownership_lock);

return eth_dev;

}
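Stripped of locking and shared memory, the allocation logic is a duplicate-name check followed by a first-free-slot search in a fixed-size array. A minimal single-process sketch, assuming hypothetical `toy_*` names and a table of only four ports:

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define TOY_MAX_PORTS 4    /* stands in for RTE_MAX_ETHPORTS */
#define TOY_NAME_LEN  64

/* Hypothetical, simplified port entry. */
struct toy_port {
    int used;
    unsigned int port_id;
    char name[TOY_NAME_LEN];
};

/* Global port table, analogous in spirit to rte_eth_devices[]. */
static struct toy_port toy_ports[TOY_MAX_PORTS];

/* Return the port already allocated under this name, or NULL. */
static struct toy_port *toy_port_by_name(const char *name)
{
    unsigned int i;

    for (i = 0; i < TOY_MAX_PORTS; i++)
        if (toy_ports[i].used && strcmp(toy_ports[i].name, name) == 0)
            return &toy_ports[i];
    return NULL;
}

/*
 * Allocate the first free port for this name. Returns NULL if the name
 * is already taken or the table is full. No locking: this sketch assumes
 * a single thread, unlike the spinlock-protected listing above.
 */
static struct toy_port *toy_port_allocate(const char *name)
{
    unsigned int i;

    assert(name != NULL);
    if (toy_port_by_name(name) != NULL)
        return NULL;
    for (i = 0; i < TOY_MAX_PORTS; i++) {
        if (!toy_ports[i].used) {
            toy_ports[i].used = 1;
            toy_ports[i].port_id = i;
            snprintf(toy_ports[i].name, sizeof(toy_ports[i].name),
                     "%s", name);
            return &toy_ports[i];
        }
    }
    return NULL;
}
```

Allocating two different names in sequence yields port ids 0 and 1, and a second request for an existing name is refused, mirroring the "already allocated" branch above.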

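2.4.1 Allocating the shared data with memzone_reserve

2.4.2 Allocating private_data

private_data is allocated with rte_zmalloc_socket and attached under the eth dev's shared data, as eth_dev->data->dev_private.

2.4.3 Copying the device information into the eth dev

Once the eth dev has been allocated successfully, the data from the device is copied into it.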

eth_dev->device = &dev->device;

rte_eth_copy_pci_info(eth_dev, dev);

static inline void

rte_eth_copy_pci_info(struct rte_eth_dev *eth_dev,

struct rte_pci_device *pci_dev)

{

if ((eth_dev == NULL) || (pci_dev == NULL)) {

RTE_ETHDEV_LOG(ERR, "NULL pointer eth_dev=%p pci_dev=%p",

(void *)eth_dev, (void *)pci_dev);

return;

}

eth_dev->intr_handle = &pci_dev->intr_handle;

eth_dev->data->dev_flags = 0;

if (pci_dev->driver->drv_flags & RTE_PCI_DRV_INTR_LSC)

eth_dev->data->dev_flags |= RTE_ETH_DEV_INTR_LSC;

if (pci_dev->driver->drv_flags & RTE_PCI_DRV_INTR_RMV)

eth_dev->data->dev_flags |= RTE_ETH_DEV_INTR_RMV;

eth_dev->data->kdrv = pci_dev->kdrv;

eth_dev->data->numa_node = pci_dev->device.numa_node;

}

2.5 dev_init (eth_em_dev_init)

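By this point, the data field of the eth dev struct has been allocated, and its device field points to the rte_device of the corresponding PCI device.

eth_em_dev_init interprets the void private data region as the em PMD's own struct and then configures it further.

It then installs the em PMD driver's handlers in the dev_ops, rx_pkt_burst and tx_pkt_burst function pointers.

It also sets some other parameters, including the port's MAC address.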

rte_eth_copy_pci_info(eth_dev, pci_dev);

hw->hw_addr = (void *)pci_dev->mem_resource[0].addr;

hw->device_id = pci_dev->id.device_id;

adapter->stopped = 0;

/* Allocate memory for storing MAC addresses */

eth_dev->data->mac_addrs = rte_zmalloc("e1000", ETHER_ADDR_LEN *

hw->mac.rar_entry_count, 0);

if (eth_dev->data->mac_addrs == NULL) {

PMD_INIT_LOG(ERR, "Failed to allocate %d bytes needed to "

"store MAC addresses",

ETHER_ADDR_LEN * hw->mac.rar_entry_count);

return -ENOMEM;

}

/* Copy the permanent MAC address */

ether_addr_copy((struct ether_addr *) hw->mac.addr,

eth_dev->data->mac_addrs);

rx_pkt_burst and tx_pkt_burst are the packet receive and transmit functions; dev_ops holds the operations used to configure the port.

static const struct eth_dev_ops eth_em_ops = {

.dev_configure = eth_em_configure,

.dev_start = eth_em_start,

.dev_stop = eth_em_stop,

.dev_close = eth_em_close,

.promiscuous_enable = eth_em_promiscuous_enable,

.promiscuous_disable = eth_em_promiscuous_disable,

.allmulticast_enable = eth_em_allmulticast_enable,

.allmulticast_disable = eth_em_allmulticast_disable,

.link_update = eth_em_link_update,

.stats_get = eth_em_stats_get,

.stats_reset = eth_em_stats_reset,

.dev_infos_get = eth_em_infos_get,

.mtu_set = eth_em_mtu_set,

.vlan_filter_set = eth_em_vlan_filter_set,

.vlan_offload_set = eth_em_vlan_offload_set,

.rx_queue_setup = eth_em_rx_queue_setup,

.rx_queue_release = eth_em_rx_queue_release,

.rx_queue_count = eth_em_rx_queue_count,

.rx_descriptor_done = eth_em_rx_descriptor_done,

.rx_descriptor_status = eth_em_rx_descriptor_status,

.tx_descriptor_status = eth_em_tx_descriptor_status,

.tx_queue_setup = eth_em_tx_queue_setup,

.tx_queue_release = eth_em_tx_queue_release,

.rx_queue_intr_enable = eth_em_rx_queue_intr_enable,

.rx_queue_intr_disable = eth_em_rx_queue_intr_disable,

.dev_led_on = eth_em_led_on,

.dev_led_off = eth_em_led_off,

.flow_ctrl_get = eth_em_flow_ctrl_get,

.flow_ctrl_set = eth_em_flow_ctrl_set,

.mac_addr_set = eth_em_default_mac_addr_set,

.mac_addr_add = eth_em_rar_set,

.mac_addr_remove = eth_em_rar_clear,

.set_mc_addr_list = eth_em_set_mc_addr_list,

.rxq_info_get = em_rxq_info_get,

.txq_info_get = em_txq_info_get,

};
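The combination of a const table of function pointers and a void private pointer is what lets generic code drive a specific PMD. The following sketch shows the idea with two operations and one burst function. Everything named `toy_*` is hypothetical and far smaller than the real structures.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

struct toy_dev;

/* Hypothetical, two-entry version of an ops table. */
struct toy_dev_ops {
    int  (*dev_start)(struct toy_dev *dev);
    void (*dev_stop)(struct toy_dev *dev);
};

typedef uint16_t (*toy_rx_burst_t)(void *rxq, void **pkts, uint16_t nb);

/* Generic device: knows nothing about the driver behind the pointers. */
struct toy_dev {
    const struct toy_dev_ops *dev_ops;
    toy_rx_burst_t rx_pkt_burst;
    void *dev_private;
};

/* Driver-specific state stored behind dev_private. */
struct toy_em_adapter {
    int stopped;
};

static int toy_em_start(struct toy_dev *dev)
{
    struct toy_em_adapter *ad = dev->dev_private;

    ad->stopped = 0;
    return 0;
}

static void toy_em_stop(struct toy_dev *dev)
{
    struct toy_em_adapter *ad = dev->dev_private;

    ad->stopped = 1;
}

/* Placeholder receive function: this sketch never returns packets. */
static uint16_t toy_em_rx(void *rxq, void **pkts, uint16_t nb)
{
    (void)rxq; (void)pkts; (void)nb;
    return 0;
}

static const struct toy_dev_ops toy_em_ops = {
    .dev_start = toy_em_start,
    .dev_stop  = toy_em_stop,
};

/* Driver init: interpret the private area and install the handlers. */
static int toy_em_dev_init(struct toy_dev *dev)
{
    struct toy_em_adapter *ad;

    assert(dev != NULL && dev->dev_private != NULL);
    ad = dev->dev_private;
    dev->dev_ops = &toy_em_ops;
    dev->rx_pkt_burst = toy_em_rx;
    ad->stopped = 0;
    return 0;
}

/* Generic entry point: dispatches through the ops table. */
static int toy_dev_start(struct toy_dev *dev)
{
    return dev->dev_ops->dev_start(dev);
}
```

A caller only ever uses `toy_dev_start` and the pointers in `struct toy_dev`; which driver runs is decided once, at init time.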

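3 Summary

After the first port is probed, the port with port id 0 is initialized and stored in rte_eth_devices[0]. As the figure below shows, rte_eth_devices[0] is populated. Compared with the still-unset rte_eth_devices[1], its rx_pkt_burst, tx_pkt_burst and dev_ops are set, its state is ATTACHED, and its device field points to the corresponding device data, through which the driver data can also be reached. In the important data field, the MAC address, MTU and port id are all configured, and the dev_private void pointer points to the PMD-specific part, including its HW information.

We can also see that rx_queues, tx_queues, nb_rx_queues and nb_tx_queues in data are all 0. These are left for the user to configure, and dev_ops is likewise driven by the user; that is the topic of the next chapter.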