作者:李毓

首先我们要思考一下,K8S中收集日志与传统条件下的日志收集有什么区别。他一般是收集哪些日志?确定收集日志类型之后又是怎么去收集的?如果日志还是放到容器内部,会随着容器删除而被删除。容器数量很多,按照传统的查看日志方式已变不太现实。K8s本身特性是容器日志输出控制台,本身Docker提供了一种日志采集能力。如果落地到本地文件,目前还没有一种好的采集方式。所以新扩容Pod属性信息(日志文件路径,日志源)可能发生变化

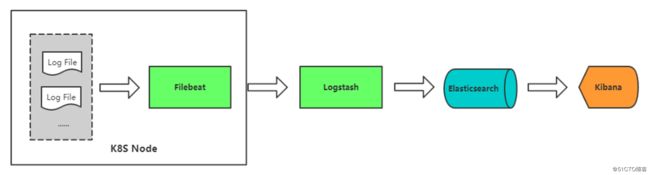

流程和传统采集是类似的,如下图。

一般来说,我们用的日志收集方案有两种,一种是node上部署日志的收集程序,比如用daemonset方式部署,对本节点容器下的目录进行采集。并且把容器内的目录挂载到宿主机目录上面。目录为对本节点/var/log/kubelet/pods和

/var/lib/docker/containers/两个目录下的日志进行采集。还有一种是Pod中附加专用日志收集的容器 每个运行应用程序的Pod中增加一个日志收集容器,使用emtyDir共享日志目录让日志收集程序读取到。这里先探讨第二种方案。

约定K8S集群为三台

k8s-mater:192.168,.187.137

k8s-node1:192.168,.187.138

k8s-node2:192.168,.187.139

执行以下脚本之前要先创建存储,这个参考之前的监控文档。

在master上执行

elasticsearch.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: elasticsearch

namespace: kube-system

labels:

k8s-app: elasticsearch

spec:

serviceName: elasticsearch

selector:

matchLabels:

k8s-app: elasticsearch

template:

metadata:

labels:

k8s-app: elasticsearch

spec:

containers:

- image: elasticsearch:7.3.2

name: elasticsearch

resources:

limits:

cpu: 1

memory: 2Gi

requests:

cpu: 0.5

memory: 500Mi

env:

- name: "discovery.type"

value: "single-node"

- name: ES_JAVA_OPTS

value: "-Xms512m -Xmx2g"

ports:

- containerPort: 9200

name: db

protocol: TCP

volumeMounts:

- name: elasticsearch-data

mountPath: /usr/share/elasticsearch/data

volumeClaimTemplates:

- metadata:

name: elasticsearch-data

spec:

storageClassName: "managed-nfs-storage"

accessModes: [ "ReadWriteOnce" ]

resources:

requests:

storage: 20Gi

---

apiVersion: v1

kind: Service

metadata:

name: elasticsearch

namespace: kube-system

spec:

clusterIP: None

ports:

- port: 9200

protocol: TCP

targetPort: db

selector:

k8s-app: elasticsearch

filebeat.yaml

---

apiVersion: v1

kind: ConfigMap

metadata:

name: filebeat-config

namespace: kube-system

labels:

k8s-app: filebeat

data:

filebeat.yml: |-

filebeat.config:

inputs:

# Mounted `filebeat-inputs` configmap:

path: ${path.config}/inputs.d/*.yml

# Reload inputs configs as they change:

reload.enabled: false

modules:

path: ${path.config}/modules.d/*.yml

# Reload module configs as they change:

reload.enabled: false

# To enable hints based autodiscover, remove `filebeat.config.inputs` configuration and uncomment this:

#filebeat.autodiscover:

# providers:

# - type: kubernetes

# hints.enabled: true

output.elasticsearch:

hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']

---

apiVersion: v1

kind: ConfigMap

metadata:

name: filebeat-inputs

namespace: kube-system

labels:

k8s-app: filebeat

data:

kubernetes.yml: |-

- type: docker

containers.ids:

- "*"

processors:

- add_kubernetes_metadata:

in_cluster: true

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: filebeat

namespace: kube-system

labels:

k8s-app: filebeat

spec:

selector:

matchLabels:

k8s-app: filebeat

template:

metadata:

labels:

k8s-app: filebeat

spec:

serviceAccountName: filebeat

terminationGracePeriodSeconds: 30

containers:

- name: filebeat

image: elastic/filebeat:7.3.2

args: [

"-c", "/etc/filebeat.yml",

"-e",

]

env:

- name: ELASTICSEARCH_HOST

value: elasticsearch

- name: ELASTICSEARCH_PORT

value: "9200"

securityContext:

runAsUser: 0

# If using Red Hat OpenShift uncomment this:

#privileged: true

resources:

limits:

memory: 200Mi

requests:

cpu: 100m

memory: 100Mi

volumeMounts:

- name: config

mountPath: /etc/filebeat.yml

readOnly: true

subPath: filebeat.yml

- name: inputs

mountPath: /usr/share/filebeat/inputs.d

readOnly: true

- name: data

mountPath: /usr/share/filebeat/data

- name: varlibdockercontainers

mountPath: /var/lib/docker/containers

readOnly: true

volumes:

- name: config

configMap:

defaultMode: 0600

name: filebeat-config

- name: varlibdockercontainers

hostPath:

path: /var/lib/docker/containers

- name: inputs

configMap:

defaultMode: 0600

name: filebeat-inputs

# data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart

- name: data

hostPath:

path: /var/lib/filebeat-data

type: DirectoryOrCreate

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: filebeat

subjects:

- kind: ServiceAccount

name: filebeat

namespace: kube-system

roleRef:

kind: ClusterRole

name: filebeat

apiGroup: rbac.authorization.k8s.io

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRole

metadata:

name: filebeat

labels:

k8s-app: filebeat

rules:

- apiGroups: [""] # "" indicates the core API group

resources:

- namespaces

- pods

verbs:

- get

- watch

- list

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: filebeat

namespace: kube-system

labels:

k8s-app: filebeat

---kibana.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: kibana

namespace: kube-system

labels:

k8s-app: kibana

spec:

replicas: 1

selector:

matchLabels:

k8s-app: kibana

template:

metadata:

labels:

k8s-app: kibana

spec:

containers:

- name: kibana

image: kibana:7.3.2

resources:

limits:

cpu: 1

memory: 500Mi

requests:

cpu: 0.5

memory: 200Mi

env:

- name: ELASTICSEARCH_HOSTS

value: http://elasticsearch-0.elasticsearch.kube-system:9200

- name: I18N_LOCALE

value: zh-CN

ports:

- containerPort: 5601

name: ui

protocol: TCP

---

apiVersion: v1

kind: Service

metadata:

name: kibana

namespace: kube-system

spec:

type: NodePort

ports:

- port: 5601

protocol: TCP

targetPort: ui

nodePort: 30601

selector:

k8s-app: kibana

新建的时候一直建不上,发现是由于之前实验环境PV没有删除,先将PV删除。

一般用常规方法难以删除,可以尝试强制从数据库删除。

kubectl patch pv pvc-4163c9d4-44cb-4c50-aa20-412f388d989e -p '{"metadata":{"finalizers":null}}'登录进去之后创建索引

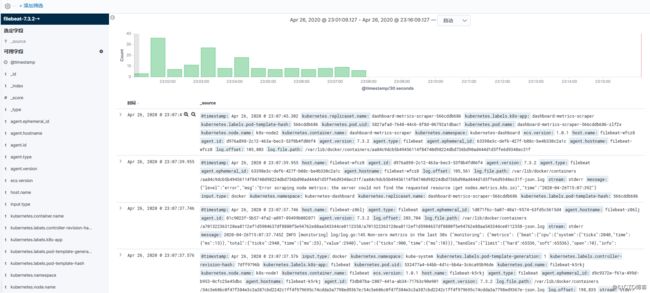

在查看K8S一些pod,命名空间,service的日志,全都收集到了。

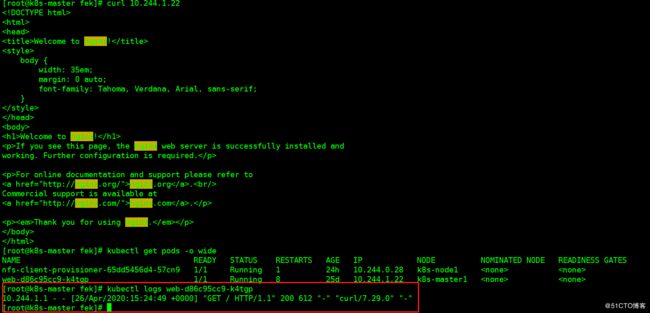

尝试安装一个nginx,然后捕捉他的日志。

kubectl create deployment web --image=nginx

kubectl expose deployment web --port=80 --target-port=80 --name=web --type=NodePort再去查看kinbana

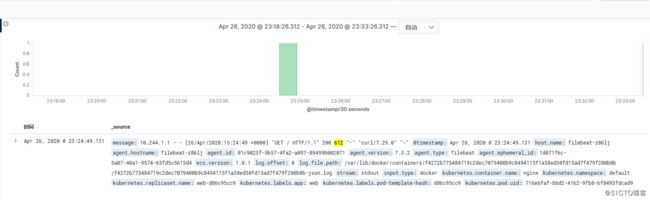

成功获取日志

我们再看看K8S组件日志的收集

apiVersion: v1

kind: ConfigMap

metadata:

name: k8s-logs-filebeat-config

namespace: kube-system

data:

filebeat.yml: |

filebeat.inputs:

- type: log

paths:

- /var/log/messages

fields:

app: k8s

type: module

fields_under_root: true

setup.ilm.enabled: false

setup.template.name: "k8s-module"

setup.template.pattern: "k8s-module-*"

output.elasticsearch:

hosts: ['elasticsearch.kube-system:9200']

index: "k8s-module-%{+yyyy.MM.dd}"

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: k8s-logs

namespace: kube-system

spec:

selector:

matchLabels:

project: k8s

app: filebeat

template:

metadata:

labels:

project: k8s

app: filebeat

spec:

containers:

- name: filebeat

image: elastic/filebeat:7.3.2

args: [

"-c", "/etc/filebeat.yml",

"-e",

]

resources:

requests:

cpu: 100m

memory: 100Mi

limits:

cpu: 500m

memory: 500Mi

securityContext:

runAsUser: 0

volumeMounts:

- name: filebeat-config

mountPath: /etc/filebeat.yml

subPath: filebeat.yml

- name: k8s-logs

mountPath: /var/log/messages

volumes:

- name: k8s-logs

hostPath:

path: /var/log/messages

- name: filebeat-config

configMap:

name: k8s-logs-filebeat-config

组件日志也可以查看到了。