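Classification and feature visualization with the Caffe Python API

The code here comes from 00-classification.ipynb under $caffe-root/examples. Running it yourself in Jupyter is a good way to make the material stick.

Import the required libraries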

# set up Python environment: numpy for numerical routines, and matplotlib for plotting
import numpy as np
import matplotlib.pyplot as plt

# display plots inline in this notebook
%matplotlib inline

# set display defaults
plt.rcParams['figure.figsize'] = (10, 10)        # large images
plt.rcParams['image.interpolation'] = 'nearest'  # interpolation method
plt.rcParams['image.cmap'] = 'gray'              # grayscale output
# For more on the rcParams settings, see: http://blog.csdn.net/iamzhangzhuping/article/details/50792208

# The caffe module needs to be on the Python path;
# we'll add it here explicitly.
import sys
caffe_root = '../'  # this file should be run from {caffe_root}/examples (otherwise change this line)
sys.path.insert(0, caffe_root + 'python')

import caffe
# If you get "No module named _caffe", either you have not built pycaffe or you have the wrong path.
# If import caffe fails, see http://blog.csdn.net/m0_37477175/article/details/78295072 and rebuild.

Because this notebook lives in $caffe_root/examples, $caffe_root/python has to be added to the Python path before import caffe will work.
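If you run the code from somewhere other than the examples directory, a relative '../' may not point where you expect. A minimal sketch of a slightly more defensive version, assuming the Caffe checkout is the parent of the current working directory (the variable name pycaffe_dir is only for illustration):

```python
import os
import sys

# Assumption: the Caffe checkout is one level above the current directory.
# Replace this with the real location on your machine.
caffe_root = os.path.abspath('..')
pycaffe_dir = os.path.join(caffe_root, 'python')

# Prepend the directory so this copy of pycaffe is found first,
# and avoid adding it twice when the cell is re-run.
if pycaffe_dir not in sys.path:
    sys.path.insert(0, pycaffe_dir)

print(pycaffe_dir)
```

Using an absolute path makes the setup independent of later os.chdir calls in the same session.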

If the model definition and trained weights are not available locally, download them:

import os
if os.path.isfile(caffe_root + 'models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel'):
    print 'CaffeNet found.'
else:
    print 'Downloading pre-trained CaffeNet model...'
    !../scripts/download_model_binary.py ../models/bvlc_reference_caffenet

Load the network and its trained weights

caffe.set_mode_cpu()

model_def = caffe_root + 'models/bvlc_reference_caffenet/deploy.prototxt'
model_weights = caffe_root + 'models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel'

net = caffe.Net(model_def,      # defines the structure of the model
                model_weights,  # contains the trained weights
                caffe.TEST)     # use test mode (e.g., don't perform dropout)

Note: the network definition used here is not the original training file. Compared with the training network, this test network drops the parameter initialization settings, the accuracy layer, and the loss layer, adds a prob layer (softmax probabilities), and replaces the data input layer. The input layer changes as follows.

Original:

layer {
  name: "data"
  type: "Data"
  top: "data"
  top: "label"
  include {
    phase: TRAIN
  }
  transform_param {
    mirror: true
    crop_size: 227
    mean_file: "data/ilsvrc12/imagenet_mean.binaryproto"
  }
# mean pixel / channel-wise mean instead of mean image
#  transform_param {
#    crop_size: 227
#    mean_value: 104
#    mean_value: 117
#    mean_value: 123
#    mirror: true
#  }
  data_param {
    source: "examples/imagenet/ilsvrc12_train_lmdb"
    batch_size: 256
    backend: LMDB
  }
}
layer {
  name: "data"
  type: "Data"
  top: "data"
  top: "label"
  include {
    phase: TEST
  }
  transform_param {
    mirror: false
    crop_size: 227
    mean_file: "data/ilsvrc12/imagenet_mean.binaryproto"
  }
# mean pixel / channel-wise mean instead of mean image
#  transform_param {
#    crop_size: 227
#    mean_value: 104
#    mean_value: 117
#    mean_value: 123
#    mirror: false
#  }
  data_param {
    source: "examples/imagenet/ilsvrc12_val_lmdb"
    batch_size: 50
    backend: LMDB
  }
}

Modified:

layer {
  name: "data"
  type: "Input"
  top: "data"
  input_param { shape: { dim: 10 dim: 3 dim: 227 dim: 227 } }
}

Preprocessing the input

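This step uses caffe.io.Transformer. Any other method works too, as long as the resulting preprocessing matches what was applied to the data during training.

- CaffeNet expects its input images in BGR channel order by default.

- Input pixel values lie in the range [0, 255], and the ImageNet mean is then subtracted from them.

- A loaded image has its channel dimension in the third position; it must be moved to the first, i.e. from [227 227 3] to [3 227 227].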

# load the mean ImageNet image (as distributed with Caffe) for subtraction
mu = np.load(caffe_root + 'python/caffe/imagenet/ilsvrc_2012_mean.npy')
mu = mu.mean(1).mean(1)  # average over pixels to obtain the mean (BGR) pixel values
print 'mean-subtracted values:', zip('BGR', mu)
# mean-subtracted values: [('B', 104.0069879317889), ('G', 116.66876761696767), ('R', 122.6789143406786)]

# create transformer for the input called 'data'
transformer = caffe.io.Transformer({'data': net.blobs['data'].data.shape})

transformer.set_transpose('data', (2,0,1))     # move image channels to outermost dimension
transformer.set_mean('data', mu)               # subtract the dataset-mean value in each channel
transformer.set_raw_scale('data', 255)         # rescale from [0, 1] to [0, 255]
transformer.set_channel_swap('data', (2,1,0))  # swap channels from RGB to BGR

Note the channel swap: RGB -> BGR.
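To make the transformer settings above less of a black box, here is a rough NumPy equivalent. It is a sketch, not Caffe's own code: it assumes the image is already 227x227, uses the rounded channel means 104/117/123 instead of the exact values printed above, and applies the steps in the order transpose, channel swap, rescale, mean subtraction. The function name preprocess_manually is made up for this example.

```python
import numpy as np

def preprocess_manually(image_rgb, mean_bgr):
    """Approximate the transformer settings for an (H, W, 3) RGB image in [0, 1].

    Assumes the image already has the network's input size (no resizing here).
    """
    chw = image_rgb.astype(np.float32).transpose(2, 0, 1)  # (H, W, C) -> (C, H, W)
    chw = chw[[2, 1, 0], :, :]                              # RGB -> BGR
    chw = chw * 255.0                                       # [0, 1] -> [0, 255]
    chw = chw - mean_bgr.reshape(3, 1, 1)                   # subtract per-channel mean
    return chw

# Synthetic stand-in for a loaded image: pure red, 227x227.
image = np.zeros((227, 227, 3), dtype=np.float32)
image[:, :, 0] = 1.0

# Rounded BGR means, for illustration only.
mean_bgr = np.array([104.0, 117.0, 123.0], dtype=np.float32)

blob = preprocess_manually(image, mean_bgr)
print(blob.shape)
```

In the result, the red values end up in the last channel (index 2), which is the point of the RGB to BGR swap.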

Classification on the CPU

Only a single image is classified here.

# set the size of the input (we can skip this if we're happy
#  with the default; we can also change it later, e.g., for different batch sizes)
net.blobs['data'].reshape(50,        # batch size
                          3,         # 3-channel (BGR) images
                          227, 227)  # image size is 227x227

This resizes the input blob. The step can be skipped, because the network definition file already specifies an input shape.

image = caffe.io.load_image(caffe_root + 'examples/images/cat.jpg')
transformed_image = transformer.preprocess('data', image)
plt.imshow(image)

# copy the image data into the memory allocated for the net
net.blobs['data'].data[...] = transformed_image

### perform classification
output = net.forward()

output_prob = output['prob'][0]  # probability vector for the first image in the batch (1000 classes in total)

print 'predicted class is:', output_prob.argmax()
# index of the largest value in the probability vector
# predicted class is: 281

For a detailed introduction to the rest of the Caffe Python API, see:

http://www.cnblogs.com/denny402/p/5686257.html
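One detail in the classification code is easy to miss: the input blob holds a batch of 50 images, yet only one image is assigned to it. The assignment with [...] relies on NumPy broadcasting, so the same image is copied into every slot of the batch, which is why reading row 0 of prob is enough. A small sketch with plain arrays standing in for the blob and the preprocessed image:

```python
import numpy as np

# Stand-ins for net.blobs['data'].data and transformed_image.
batch = np.zeros((50, 3, 227, 227), dtype=np.float32)
single = np.random.rand(3, 227, 227).astype(np.float32)

# Broadcasting: the (3, 227, 227) image fills all 50 batch slots in place.
batch[...] = single

print(np.array_equal(batch[0], batch[49]))
```

To classify different images in one forward pass, you would instead assign each preprocessed image to its own slot, e.g. batch[i] = image_i.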

# load ImageNet labels
labels_file = caffe_root + 'data/ilsvrc12/synset_words.txt'
if not os.path.exists(labels_file):
    !../data/ilsvrc12/get_ilsvrc_aux.sh

labels = np.loadtxt(labels_file, str, delimiter='\t')

print 'output label:', labels[output_prob.argmax()]
# output label: n02123045 tabby, tabby cat

# "Tabby cat" is correct! But let's also look at other top (but less confident) predictions.

Show the five highest class probabilities (confidences) together with their labels

# sort top five predictions from softmax output
top_inds = output_prob.argsort()[::-1][:5]  # reverse sort and take five largest items

print 'probabilities and labels:'
zip(output_prob[top_inds], labels[top_inds])

probabilities and labels:
[(0.31243637, 'n02123045 tabby, tabby cat'),
 (0.2379719, 'n02123159 tiger cat'),
 (0.12387239, 'n02124075 Egyptian cat'),
 (0.10075711, 'n02119022 red fox, Vulpes vulpes'),
 (0.070957087, 'n02127052 lynx, catamount')]
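The argsort()[::-1][:5] idiom and the label format can be tried without loading the network. The sketch below uses made-up probabilities and four labels copied from the output above; the helper name top_k is hypothetical, and splitting each label on the first space is an assumption based on the "n02123045 tabby, tabby cat" format.

```python
import numpy as np

def top_k(probs, labels, k=5):
    """Return the k most probable (probability, wnid, description) triples."""
    order = np.argsort(probs)[::-1][:k]  # indices by descending probability
    results = []
    for i in order:
        # Each label looks like "n02123045 tabby, tabby cat".
        wnid, description = labels[i].split(' ', 1)
        results.append((float(probs[i]), wnid, description))
    return results

labels = ['n02123045 tabby, tabby cat',
          'n02123159 tiger cat',
          'n02124075 Egyptian cat',
          'n02119022 red fox, Vulpes vulpes']
probs = np.array([0.5, 0.1, 0.3, 0.1])  # made-up values for illustration

for p, wnid, description in top_k(probs, labels, k=2):
    print('{:.2f}  {}  {}'.format(p, wnid, description))
```

Separating the WordNet ID from the description makes it easier to print a readable class name or to look the class up elsewhere.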

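Running in GPU mode

Compare the time taken by a single forward pass on the CPU and on the GPU.

%timeit net.forward()
# 1 loop, best of 3: 1.42 s per loop

# switch to GPU mode
caffe.set_device(0)  # if we have multiple GPUs, pick the first one
caffe.set_mode_gpu()
net.forward()  # run once before timing to set up memory
%timeit net.forward()
# 10 loops, best of 3: 70.2 ms per loop
# the forward pass is clearly faster, roughly 20x in this run

Inspecting intermediate outputs and visualizing parameters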

A net is not just a black box; let’s take a look at some of the parameters and intermediate activations.

First we’ll see how to read out the structure of the net in terms of activation and parameter shapes.

For each layer, let’s look at the activation shapes, which typically have the form (batch_size, channel_dim, height, width).

The activations are exposed as an OrderedDict, net.blobs.

# for each layer, show the output shape
for layer_name, blob in net.blobs.iteritems():
    print layer_name + '\t' + str(blob.data.shape)

data    (50, 3, 227, 227)
conv1   (50, 96, 55, 55)
pool1   (50, 96, 27, 27)
norm1   (50, 96, 27, 27)
conv2   (50, 256, 27, 27)
pool2   (50, 256, 13, 13)
norm2   (50, 256, 13, 13)
conv3   (50, 384, 13, 13)
conv4   (50, 384, 13, 13)
conv5   (50, 256, 13, 13)
pool5   (50, 256, 6, 6)
fc6     (50, 4096)
fc7     (50, 4096)
fc8     (50, 1000)
prob    (50, 1000)
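The spatial sizes in this listing (227, 55, 27, 13, 6) follow from the usual output-size formulas. The sketch below reproduces them; note that the kernel, stride, and padding values are not shown in this post and are assumed from the standard CaffeNet definition, so check deploy.prototxt for the authoritative numbers. It also assumes convolution rounds down and pooling rounds up.

```python
import math

def conv_out(size, kernel, stride=1, pad=0):
    """Spatial output size of a convolution (rounded down)."""
    return (size + 2 * pad - kernel) // stride + 1

def pool_out(size, kernel, stride):
    """Spatial output size of a pooling layer (rounded up)."""
    return int(math.ceil((size - kernel) / float(stride))) + 1

# Assumed layer settings; the norm layers do not change the spatial size.
s = 227
s = conv1 = conv_out(s, kernel=11, stride=4)
s = pool1 = pool_out(s, kernel=3, stride=2)
s = conv2 = conv_out(s, kernel=5, pad=2)
s = pool2 = pool_out(s, kernel=3, stride=2)
s = conv3 = conv_out(s, kernel=3, pad=1)   # conv4 and conv5 use the same settings
s = pool5 = pool_out(s, kernel=3, stride=2)

print([conv1, pool1, conv2, pool2, conv3, pool5])
```

With these assumptions the results match the blob shapes above, and 256 channels times 6 x 6 gives the 9216 inputs that fc6 receives.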

Shapes of the weights w and biases b:

for layer_name, param in net.params.iteritems():
    print layer_name + '\t' + str(param[0].data.shape), str(param[1].data.shape)

conv1   (96, 3, 11, 11) (96,)
conv2   (256, 48, 5, 5) (256,)
conv3   (384, 256, 3, 3) (384,)
conv4   (384, 192, 3, 3) (384,)
conv5   (256, 192, 3, 3) (256,)
fc6     (4096, 9216) (4096,)
fc7     (4096, 4096) (4096,)
fc8     (1000, 4096) (1000,)
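These shapes are enough to count the learnable parameters per layer. The sketch below does only that arithmetic, with the shapes copied from the listing above. One observation, stated as an assumption rather than read from the prototxt: conv2, conv4, and conv5 see only half of the previous layer's channels (48 instead of 96, 192 instead of 384), which is what grouped convolution with two groups would produce.

```python
# (weight shape, bias shape) pairs copied from the listing above.
param_shapes = {
    'conv1': ((96, 3, 11, 11), (96,)),
    'conv2': ((256, 48, 5, 5), (256,)),
    'conv3': ((384, 256, 3, 3), (384,)),
    'conv4': ((384, 192, 3, 3), (384,)),
    'conv5': ((256, 192, 3, 3), (256,)),
    'fc6': ((4096, 9216), (4096,)),
    'fc7': ((4096, 4096), (4096,)),
    'fc8': ((1000, 4096), (1000,)),
}

def count(shape):
    """Number of elements in an array of the given shape."""
    n = 1
    for dim in shape:
        n *= dim
    return n

per_layer = {name: count(w) + count(b) for name, (w, b) in param_shapes.items()}
total = sum(per_layer.values())
fc_total = per_layer['fc6'] + per_layer['fc7'] + per_layer['fc8']

print(total)
print(round(fc_total / float(total), 2))
```

The total comes to about 61 million parameters, and the three fully connected layers account for roughly 96% of them.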

可视化特征图

def vis_square(data):

"""Take an array of shape (n, height, width) or (n, height, width, 3)

and visualize each (height, width) thing in a grid of size approx. sqrt(n) by sqrt(n)"""

# normalize data for display

data = (data - data.min()) / (data.max() - data.min())

# force the number of filters to be square

n = int(np.ceil(np.sqrt(data.shape[0])))

padding = (((0, n ** 2 - data.shape[0]),

(0, 1), (0, 1)) # add some space between filters

+ ((0, 0),) * (data.ndim - 3)) # don't pad the last dimension (if there is one)

data = np.pad(data, padding, mode='constant', constant_values=1) # pad with ones (white)

# tile the filters into an image

data = data.reshape((n, n) + data.shape[1:]).transpose((0, 2, 1, 3) + tuple(range(4, data.ndim + 1)))

data = data.reshape((n * data.shape[1], n * data.shape[3]) + data.shape[4:])

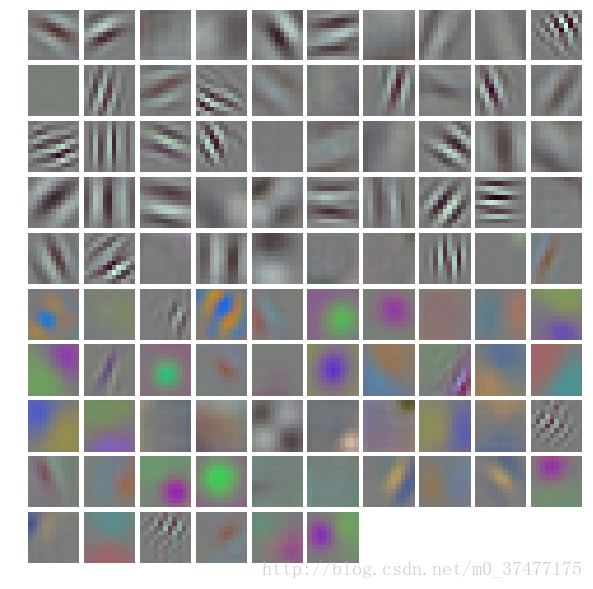

plt.imshow(data); plt.axis('off')对第一层卷积层w参数的可视化
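Before plotting the conv1 filters, it may help to see what the padding and tiling steps in vis_square do to the array shape. The sketch below repeats those steps without normalization or plotting and returns the grid; tile_grid is a made-up name, and random arrays stand in for the real weights and activations.

```python
import numpy as np

def tile_grid(data):
    """Pad and tile (n, h, w) or (n, h, w, 3) data into one grid image."""
    n = int(np.ceil(np.sqrt(data.shape[0])))          # grid is n x n tiles
    padding = (((0, n ** 2 - data.shape[0]), (0, 1), (0, 1))
               + ((0, 0),) * (data.ndim - 3))
    data = np.pad(data, padding, mode='constant', constant_values=1)
    data = data.reshape((n, n) + data.shape[1:])      # (row, col, h, w[, 3])
    data = data.transpose((0, 2, 1, 3) + tuple(range(4, data.ndim)))
    return data.reshape((n * data.shape[1], n * data.shape[3]) + data.shape[4:])

# 96 filters of 11x11x3, like conv1 weights after transpose(0, 2, 3, 1).
filters = np.random.rand(96, 11, 11, 3)
grid = tile_grid(filters)
print(grid.shape)

# 36 single-channel maps of 55x55, like the first 36 conv1 feature maps.
feature_maps = np.random.rand(36, 55, 55)
print(tile_grid(feature_maps).shape)
```

The 96 filters are padded to 100 tiles of 12x12 pixels and laid out in a 10x10 grid; the extra row and column of ones per tile become the white separators in the plot.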

# the parameters are a list of [weights, biases]

filters = net.params['conv1'][0].data

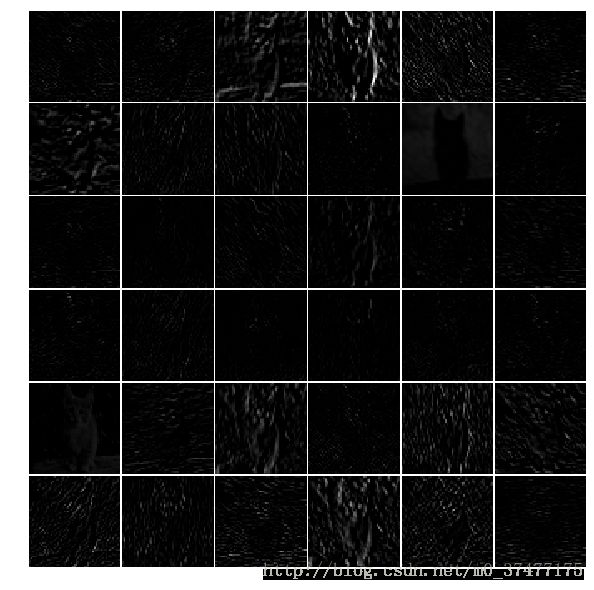

vis_square(filters.transpose(0, 2, 3, 1))对前36个feature map 的可视化

feat = net.blobs['conv1'].data[0, :36]
vis_square(feat)

Visualizing the feature maps of the pooling layer

feat = net.blobs['pool5'].data[0]
vis_square(feat)

For the fully connected layer fc6, plot the output values and a histogram of the positive values

feat = net.blobs['fc6'].data[0]
plt.subplot(2, 1, 1)
plt.plot(feat.flat)
plt.subplot(2, 1, 2)
_ = plt.hist(feat.flat[feat.flat > 0], bins=100)

Visualizing the output probabilities:
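The prob blob holds softmax probabilities, so its values are non-negative and sum to 1 across the 1000 classes. As a reminder of what that computation looks like, here is a minimal NumPy sketch. The scores are made up, and this is not how Caffe implements its softmax layer internally.

```python
import numpy as np

def softmax(scores):
    """Numerically stable softmax over a 1-D score vector."""
    shifted = scores - np.max(scores)  # subtracting the max avoids overflow in exp
    exps = np.exp(shifted)
    return exps / np.sum(exps)

scores = np.array([2.0, 1.0, 0.1])  # made-up stand-in for one row of class scores
prob = softmax(scores)

print(prob.argmax())
```

Because softmax preserves the ordering of the scores, the predicted class is the same whether you take the argmax before or after it.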

feat = net.blobs['prob'].data[0]
plt.figure(figsize=(15, 3))
plt.plot(feat.flat)

Trying your own image

# download an image
my_image_url = "..."  # paste your URL here
# for example:
# my_image_url = "https://upload.wikimedia.org/wikipedia/commons/b/be/Orang_Utan%2C_Semenggok_Forest_Reserve%2C_Sarawak%2C_Borneo%2C_Malaysia.JPG"
!wget -O image.jpg $my_image_url

# transform it and copy it into the net
image = caffe.io.load_image('image.jpg')
net.blobs['data'].data[...] = transformer.preprocess('data', image)

# perform classification
net.forward()

# obtain the output probabilities
output_prob = net.blobs['prob'].data[0]

# sort top five predictions from softmax output
top_inds = output_prob.argsort()[::-1][:5]

plt.imshow(image)

print 'probabilities and labels:'
zip(output_prob[top_inds], labels[top_inds])