Python Scrapy 爬虫入门: 爬取豆瓣电影top250

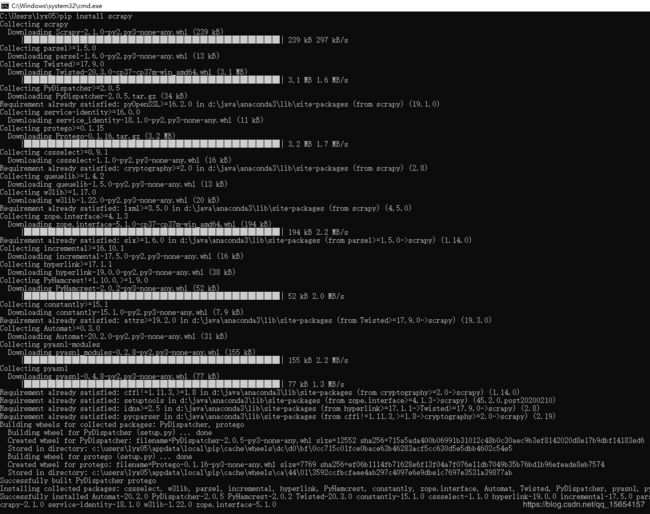

一、安装Scrapy

cmd 命令执行

pip install scrapy二、Scrapy介绍

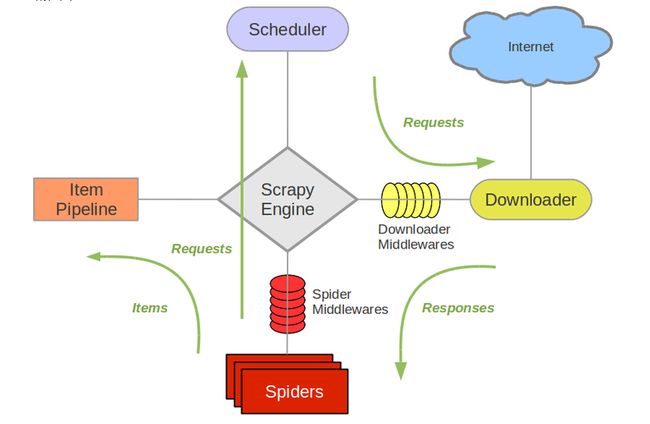

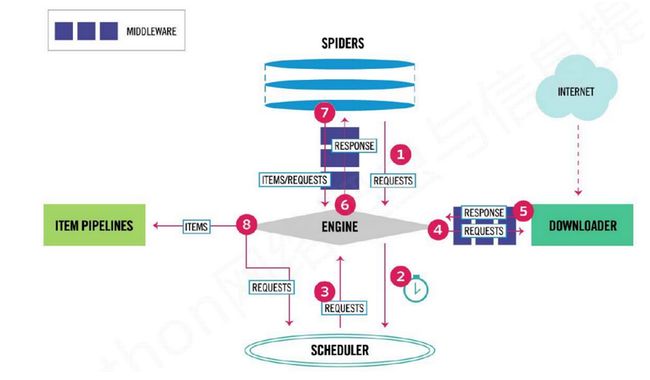

Scrapy是一套基于Twisted的异步处理框架,是纯python实现的爬虫框架,用户只需要定制开发几个模块就可以轻松的实现一个爬虫,用来抓取网页内容或者各种图片。

- Scrapy Engine(引擎):Scrapy框架的核心部分。负责在Spider和ItemPipeline、Downloader、Scheduler中间通信、传递数据等。

- Spider(爬虫):发送需要爬取的链接给引擎,最后引擎把其他模块请求回来的数据再发送给爬虫,爬虫就去解析想要的数据。这个部分是我们开发者自己写的,因为要爬取哪些链接,页面中的哪些数据是我们需要的,都是由程序员自己决定。

- Scheduler(调度器):负责接收引擎发送过来的请求,并按照一定的方式进行排列和整理,负责调度请求的顺序等。

- Downloader(下载器):负责接收引擎传过来的下载请求,然后去网络上下载对应的数据再交还给引擎。

- Item Pipeline(管道):负责将Spider(爬虫)传递过来的数据进行保存。具体保存在哪里,应该看开发者自己的需求。

- Downloader Middlewares(下载中间件):可以扩展下载器和引擎之间通信功能的中间件。

- Spider Middlewares(Spider中间件):可以扩展引擎和爬虫之间通信功能的中间件。

架构图:

三、安装MongoDB

https://www.mongodb.com/download-center/community 选择版本下载

我安装的是zip解压版:

1.解压、重命名文件夹,我放在 : D:\Java\NoSQL\mongodb4.2.7

2.新建文件夹: D:\Java\NoSQL\mongodb_data\log 、 D:\Java\NoSQL\mongodb_data\db

3.在mongodb4.2.7文件夹下新建 mongod.cfg , 编辑内容

systemLog:

destination: file

path: D:\Java\NoSQL\mongodb_data\log\mongod.log

storage:

dbPath: D:\Java\NoSQL\mongodb_data\db4.安装服务:

cd D:\Java\NoSQL\mongodb4.2.7\bin

mongod.exe --config D:\Java\NoSQL\mongodb4.2.7\mongod.cfg --install5.启动mongodb

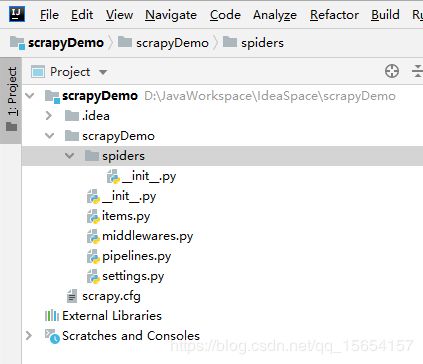

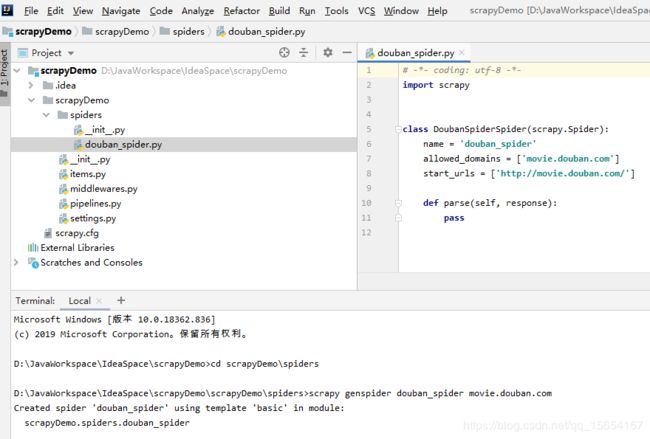

四、新建scrapy 项目

scrapy startproject scrapy_demo创建后的项目结构:

- cfg 项目的配置文件

- settings.py 项目的设置文件

- items.py 定义item数据结构的地方

- 生成spider.py ,用来定义正则表达式 : 进入spiders文件夹,执行命令:scrapy genspider douban_spider movie.douban.com 域名为要爬的网站

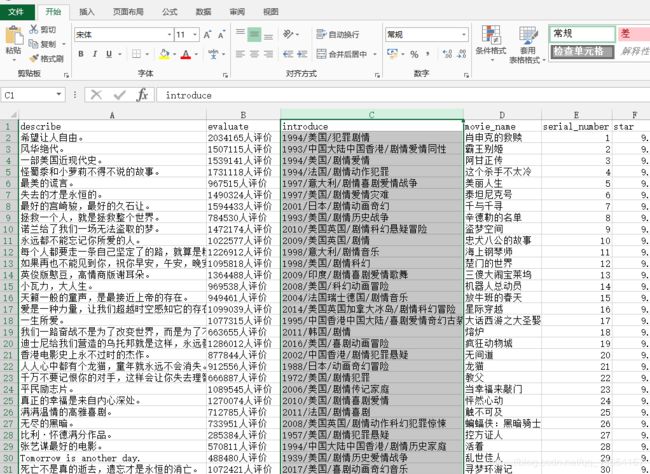

明确目标

编辑items.py,定义数据结构

import scrapy

class ScrapydemoItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

# 序号

serial_number = scrapy.Field()

# 电影名称

movie_name = scrapy.Field()

# 电影介绍

introduce = scrapy.Field()

# 星级

star = scrapy.Field()

# 电影评论数

evaluate = scrapy.Field()

# 电影描述

describe = scrapy.Field()编辑 Spider.py

import scrapy

class DoubanSpiderSpider(scrapy.Spider):

# 这里是爬虫名称

name = 'douban_spider'

# 允许的域名

allowed_domains = ['movie.douban.com']

# 入口url ,扔到调度器里面

start_urls = ['https://movie.douban.com/top250']

def parse(self, response):

print(response.text)

修改setting.py

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.61 Safari/537.36'爬取内容

执行:scrapy crawl douban_spider

进一步编辑

在项目应用文件夹下新增main.py

from scrapy import cmdline

cmdline.execute('scrapy crawl douban_spider'.split())修改douban_spider.py

# -*- coding: utf-8 -*-

import scrapy

from scrapyDemo.items import ScrapydemoItem

class DoubanSpiderSpider(scrapy.Spider):

# 这里是爬虫名称

name = 'douban_spider'

# 允许的域名

allowed_domains = ['movie.douban.com']

# 入口url ,扔到调度器里面

start_urls = ['https://movie.douban.com/top250']

# 默认的解析方法

def parse(self, response):

movie_list = response.xpath("//div[@class='article']//ol[@class='grid_view']/li")

# 循环电影的条目

for item in movie_list:

douban_item = ScrapydemoItem()

douban_item['serial_number'] = item.xpath(".//div[@class='item']//em/text()").extract_first()

douban_item['movie_name'] = item.xpath(".//div[@class='info']/div[@class='hd']/a/span[1]/text()").extract_first()

content = item.xpath(".//div[@class='info']//div[@class='bd']/p[1]/text()").extract()

for i_content in content:

content_s = "".join(i_content.split())

douban_item['introduce'] = content_s

douban_item['star'] = item.xpath(".//span[@class='rating_num']/text()").extract_first()

douban_item['evaluate'] = item.xpath(".//div[@class='star']//span[4]/text()").extract_first()

douban_item['describe'] = item.xpath(".//p[@class='quote']/span/text()").extract_first()

yield douban_item

# 解析下一页

next_link = response.xpath("//span[@class='next']/link/@href").extract()

if next_link:

next_link = next_link[0]

yield scrapy.Request("https://movie.douban.com/top250" + next_link, callback=self.parse)

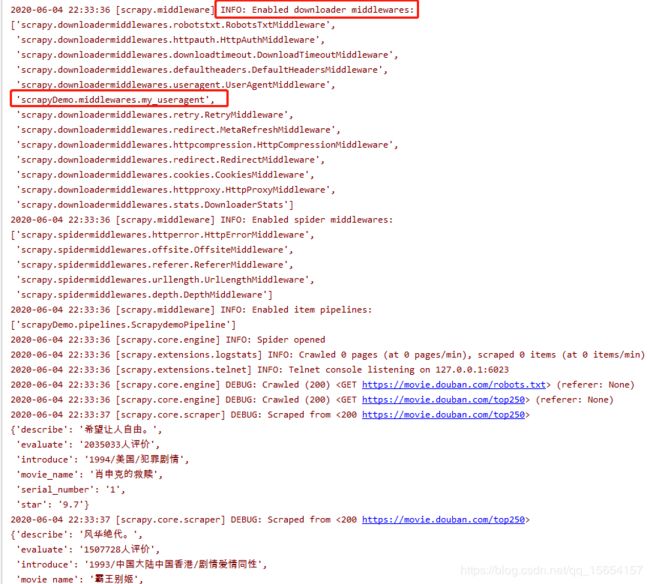

运行main.py , 可以看到已经爬到了数据

保存数据

导出到json

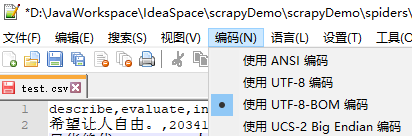

scrapy crawl douban_spider -o test.json导出到CSV : 到处到CSV的需要转 UTF-8-BOM

scrapy crawl douban_spider -o test.csv保存到MongoDB

修改settings.py 找到下面代码,取消注释

ITEM_PIPELINES = {

'scrapyDemo.pipelines.ScrapydemoPipeline': 300,

}修改pipelines.py

import pymongo

class ScrapydemoPipeline:

def __init__(self):

host = "localhost"

port = 27017

dbname = "douban"

sheetname = "movie"

client = pymongo.MongoClient(host=host,port=port)

mydb = client[dbname]

self.post = mydb[sheetname]

def process_item(self, item, spider):

data = dict(item)

self.post.insert(data)

return item

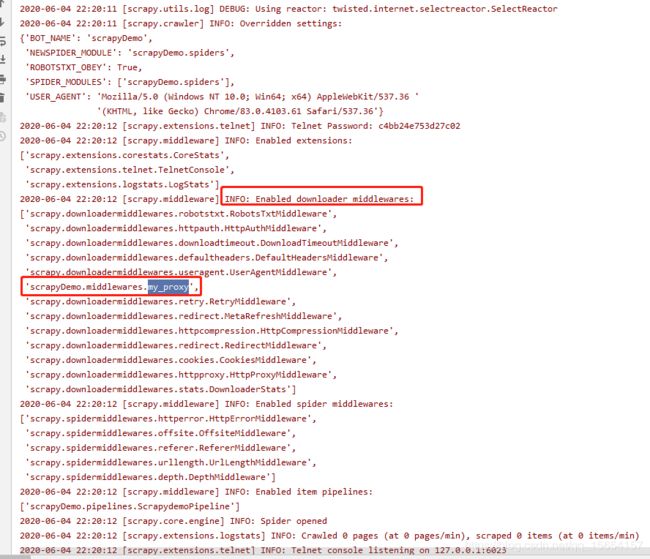

IP代理中间件

如果直接爬,可能会被反爬,就无法抓取网页,所以需要使用ip代理中间件,这里使用的是阿布云

编辑middlewares.py

class my_proxy(object):

def process_request(self,request,spider):

request.meta['proxy'] = 'http-cla.abuyun.com:9030'

proxy_name_pass = b'XXXXXXXXXXXXXX:XXXXXXXXXXXXXX' # 账号:密码

encode_pass_name = base64.b64encode(proxy_name_pass)

request.headers['Proxy-Authorization'] = 'Basic ' + encode_pass_name.decode()编辑settings.py

DOWNLOADER_MIDDLEWARES = {

# 'scrapyDemo.middlewares.ScrapydemoDownloaderMiddleware': 543,

'scrapyDemo.middlewares.my_proxy': 543,

}运行main.py 。如果有自定义的my_proxy就代表启动ip代理中间件成功了

user-agent中间件 反爬

继续编辑 middlewares.py

class my_useragent(object):

def process_request(self,request,spider):

USER_AGENT_LIST = [

'MSIE (MSIE 6.0; X11; Linux; i686) Opera 7.23',

'Opera/9.20 (Macintosh; Intel Mac OS X; U; en)',

'Opera/9.0 (Macintosh; PPC Mac OS X; U; en)',

'iTunes/9.0.3 (Macintosh; U; Intel Mac OS X 10_6_2; en-ca)',

'Mozilla/4.76 [en_jp] (X11; U; SunOS 5.8 sun4u)',

'iTunes/4.2 (Macintosh; U; PPC Mac OS X 10.2)',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv:5.0) Gecko/20100101 Firefox/5.0',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv:9.0) Gecko/20100101 Firefox/9.0',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.8; rv:16.0) Gecko/20120813 Firefox/16.0',

'Mozilla/4.77 [en] (X11; I; IRIX;64 6.5 IP30)',

'Mozilla/4.8 [en] (X11; U; SunOS; 5.7 sun4u)'

]

agent = random.choice(USER_AGENT_LIST)

request.headers['User_Agent'] = agent

编辑 settings.py

DOWNLOADER_MIDDLEWARES = {

# 'scrapyDemo.middlewares.ScrapydemoDownloaderMiddleware': 543,

# 'scrapyDemo.middlewares.my_proxy': 543,

'scrapyDemo.middlewares.my_useragent':543 # 可以和其他中间件同时生效,但是优先级不能一样

}启动 main.py , 这样就能成功爬取了

注意事项

- 中间件定义完要在settings文件内启用

- 爬虫文件名和爬虫名称不能相同、spiders目录内不能存在相同爬虫名称的项目文件

- 要做一个文明守法的好公民,不要爬取公民的隐私数据,不要给对方的系统带来不必要的麻烦