Deploying a MySQL cluster with Rancher 2.3.6

Author: lizhonglin

github: https://github.com/Leezhonglin/

blog: https://leezhonglin.github.io

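First, you need a storage class that supports dynamic provisioning. I use nfs-provisioner from the Rancher app catalog, installed with its default settings. Once it is installed, the machine running the provisioner's container has a /srv directory, and all persisted data ends up in that directory.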

Installing NFS

Images to prepare:

gcr.azk8s.cn/google-samples/xtrabackup:1.0

192.169.0.247:5000/dasp/mysql:5.7

If gcr.azk8s.cn/google-samples/xtrabackup:1.0 cannot be pulled from inside mainland China, pull twoeo/gcr.io-google-samples-xtrabackup:1.0 from Docker Hub and retag it as a substitute.
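The retagging step could look roughly like the sketch below. The helper name `retag_commands` is hypothetical, and the choice to retag under the original gcr.azk8s.cn name (so the manifests later in this post work unchanged) is an assumption. The helper only prints the `docker pull` and `docker tag` commands so they can be reviewed before being run.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print (do not run) the commands that pull a
# substitute image and tag it under the name the manifests expect.
retag_commands() {
  local src="$1" dst="$2"
  printf 'docker pull %s\n' "$src"
  printf 'docker tag %s %s\n' "$src" "$dst"
}

# Assumption: the Docker Hub copy is retagged to the gcr.azk8s.cn name.
retag_commands \
  twoeo/gcr.io-google-samples-xtrabackup:1.0 \
  gcr.azk8s.cn/google-samples/xtrabackup:1.0
```

Piping the printed lines to `bash` on each node (or on a machine that can push to your private registry) performs the actual pull and tag.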

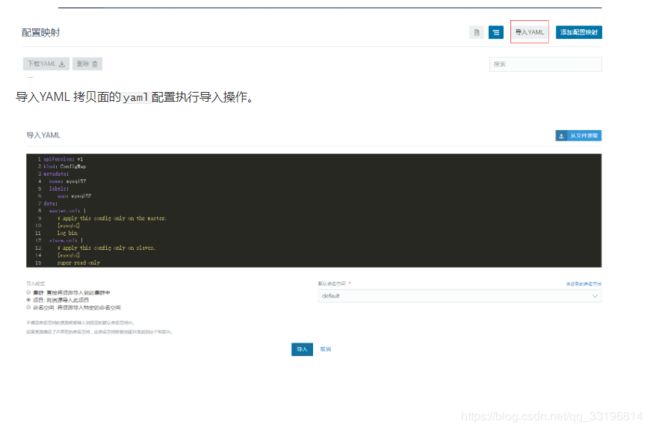

1. Deploy the ConfigMap

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: mysql57
      labels:
        app: mysql57
    data:
      master.cnf: |
        # Apply this config only on the master.
        [mysqld]
        log-bin
      slave.cnf: |
        # Apply this config only on slaves.
        [mysqld]
        super-read-only

Verify the ConfigMap with the following command:

kubectl describe configmap mysql57

The output should list the master.cnf and slave.cnf entries defined above.

2. Deploy the Services

service1:

    # Headless service for stable DNS entries of StatefulSet members.
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql57
      labels:
        app: mysql57
    spec:
      ports:
      - name: mysql57
        port: 3306
      clusterIP: None
      selector:
        app: mysql57

service2:

    # Client service for connecting to any MySQL instance for reads.
    # For writes, you must instead connect to the master: mysql57-0.mysql57.
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql57-read
      labels:
        app: mysql57
    spec:
      ports:
      - name: mysql57
        port: 3306
      selector:
        app: mysql57

Once these are applied, both services appear under Service Discovery in the Rancher UI.

They can also be listed with the following command:

kubectl get svc | grep mysql57
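Because mysql57 is a headless service, each StatefulSet pod gets a stable DNS name of the form `<statefulset>-<ordinal>.<service>`. The sketch below just builds those names for the three replicas used in this post; the function name `pod_dns_name` is hypothetical and nothing here talks to the cluster.

```shell
#!/usr/bin/env bash

# Hypothetical helper: build the stable DNS name of one StatefulSet member.
# Arguments: StatefulSet name, pod ordinal, headless service name
# (the service name defaults to the StatefulSet name, as in this post).
pod_dns_name() {
  local set_name="$1" ordinal="$2" service="${3:-$1}"
  printf '%s-%s.%s\n' "$set_name" "$ordinal" "$service"
}

# The three replicas deployed in this post.
for i in 0 1 2; do
  pod_dns_name mysql57 "$i"
done
```

Writes should go to the first name (mysql57-0.mysql57, the master), while read-only clients can use the mysql57-read service, which balances across all members.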

3. Deploy the StatefulSet

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: mysql57
    spec:
      selector:
        matchLabels:
          app: mysql57
      serviceName: mysql57
      replicas: 3
      template:
        metadata:
          labels:
            app: mysql57
        spec:
          initContainers:
          - name: init-mysql57
            image: 192.169.0.247:5000/dasp/mysql:5.7
            command:
            - bash
            - "-c"
            - |
              set -ex
              # Generate mysql server-id from pod ordinal index.
              [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
              ordinal=${BASH_REMATCH[1]}
              echo [mysqld] > /mnt/conf.d/server-id.cnf
              # Add an offset to avoid reserved server-id=0 value.
              echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf
              # Copy appropriate conf.d files from config-map to emptyDir.
              if [[ $ordinal -eq 0 ]]; then
                cp /mnt/config-map/master.cnf /mnt/conf.d/
              else
                cp /mnt/config-map/slave.cnf /mnt/conf.d/
              fi
            volumeMounts:
            - name: conf
              mountPath: /mnt/conf.d
            - name: config-map
              mountPath: /mnt/config-map
          - name: clone-mysql57
            image: gcr.azk8s.cn/google-samples/xtrabackup:1.0
            command:
            - bash
            - "-c"
            - |
              set -ex
              # Skip the clone if data already exists.
              [[ -d /var/lib/mysql/mysql ]] && exit 0
              # Skip the clone on master (ordinal index 0).
              [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
              ordinal=${BASH_REMATCH[1]}
              [[ $ordinal -eq 0 ]] && exit 0
              # Clone data from previous peer.
              ncat --recv-only mysql57-$(($ordinal-1)).mysql57 3307 | xbstream -x -C /var/lib/mysql
              # Prepare the backup.
              xtrabackup --prepare --target-dir=/var/lib/mysql
            volumeMounts:
            - name: mysql57-data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
          containers:
          - name: mysql57
            image: 192.169.0.247:5000/dasp/mysql:5.7
            #command: ['/bin/bash','-c','docker-entrypoint.sh mysqld']
            env:
            - name: MYSQL_ALLOW_EMPTY_PASSWORD
              value: "1"
            ports:
            - name: mysql57
              containerPort: 3306
            - containerPort: 3306
              hostPort: 3306
              name: 3306tcp33060
              protocol: TCP
            volumeMounts:
            - name: mysql57-data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
            resources:
              requests:
                cpu: 500m
                memory: 1Gi
            livenessProbe:
              exec:
                command: ["mysqladmin", "ping"]
              initialDelaySeconds: 30
              periodSeconds: 10
              timeoutSeconds: 5
            readinessProbe:
              exec:
                # Check we can execute queries over TCP (skip-networking is off).
                command: ["mysql", "-h", "127.0.0.1", "-e", "SELECT 1"]
              initialDelaySeconds: 5
              periodSeconds: 2
              timeoutSeconds: 1
          - name: xtrabackup
            image: gcr.azk8s.cn/google-samples/xtrabackup:1.0
            ports:
            - name: xtrabackup
              containerPort: 3307
            command:
            - bash
            - "-c"
            - |
              set -ex
              cd /var/lib/mysql
              # Determine binlog position of cloned data, if any.
              if [[ -f xtrabackup_slave_info && "x$(<xtrabackup_slave_info)" != "x" ]]; then
                # XtraBackup already generated a partial "CHANGE MASTER TO" query
                # because we're cloning from an existing slave. (Need to remove the trailing semicolon!)
                cat xtrabackup_slave_info | sed -E 's/;$//g' > change_master_to.sql.in
                # Ignore xtrabackup_binlog_info in this case (it's useless).
                rm -f xtrabackup_slave_info xtrabackup_binlog_info
              elif [[ -f xtrabackup_binlog_info ]]; then
                # We're cloning directly from master. Parse binlog position.
                [[ `cat xtrabackup_binlog_info` =~ ^(.*?)[[:space:]]+(.*?)$ ]] || exit 1
                rm -f xtrabackup_binlog_info xtrabackup_slave_info
                echo "CHANGE MASTER TO MASTER_LOG_FILE='${BASH_REMATCH[1]}',\
                      MASTER_LOG_POS=${BASH_REMATCH[2]}" > change_master_to.sql.in
              fi
              # Check if we need to complete a clone by starting replication.
              if [[ -f change_master_to.sql.in ]]; then
                echo "Waiting for mysqld to be ready (accepting connections)"
                until mysql -h 127.0.0.1 -e "SELECT 1"; do sleep 1; done
                echo "Initializing replication from clone position"
                mysql -h 127.0.0.1 \
                      -e "$(<change_master_to.sql.in), \
                              MASTER_HOST='mysql57-0.mysql57', \
                              MASTER_USER='root', \
                              MASTER_PASSWORD='', \
                              MASTER_CONNECT_RETRY=10; \
                            START SLAVE;" || exit 1
                # In case of container restart, attempt this at-most-once.
                mv change_master_to.sql.in change_master_to.sql.orig
              fi
              # Start a server to send backups when requested by peers.
              exec ncat --listen --keep-open --send-only --max-conns=1 3307 -c \
                "xtrabackup --backup --slave-info --stream=xbstream --host=127.0.0.1 --user=root"
            volumeMounts:
            - name: mysql57-data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
          volumes:
          - name: conf
            emptyDir: {}
          - name: config-map
            configMap:
              name: mysql57
      volumeClaimTemplates:
      - metadata:
          name: mysql57-data
        spec:
          accessModes: ["ReadWriteOnce"]
          storageClassName: "nfs-provisioner"
          resources:
            requests:
              storage: 10Gi
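Two pieces of shell logic embedded in the StatefulSet are easier to follow when tried on their own: deriving server-id from the pod's ordinal, and turning the contents of xtrabackup_binlog_info into a partial CHANGE MASTER TO statement. The following is a minimal sketch of both, not the manifest's exact code. The function names are hypothetical, the binlog file name and position are made-up sample values, and the regular expression is a stricter one than the manifest uses.

```shell
#!/usr/bin/env bash

# Derive the MySQL server-id from a pod hostname such as "mysql57-2".
# Like the init container, add 100 to the ordinal so server-id is never 0.
server_id_for() {
  local host="$1"
  local re='-([0-9]+)$'
  [[ $host =~ $re ]] || return 1
  echo $((100 + 10#${BASH_REMATCH[1]}))
}

# Turn one line of xtrabackup_binlog_info ("<binlog file> <position>")
# into the partial CHANGE MASTER TO statement the sidecar writes out.
change_master_from_binlog_info() {
  local info="$1"
  local re='^([^[:space:]]+)[[:space:]]+([0-9]+)'
  [[ $info =~ $re ]] || return 1
  printf "CHANGE MASTER TO MASTER_LOG_FILE='%s', MASTER_LOG_POS=%s\n" \
    "${BASH_REMATCH[1]}" "${BASH_REMATCH[2]}"
}

server_id_for mysql57-2
# Sample values only; a real file name and position come from the backup.
change_master_from_binlog_info "mysql57-0-bin.000003 154"
```

In the manifest, the sidecar later appends MASTER_HOST, MASTER_USER, MASTER_PASSWORD and MASTER_CONNECT_RETRY to this partial statement and runs START SLAVE.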

volumeClaimTemplates: adjust this section to match your own storage class.

volumes: adjust this section to match your own environment.

4. Verify the deployment

kubectl get pods -l app=mysql57 --watch

    > kubectl get pods -l app=mysql57 --watch
    NAME        READY   STATUS    RESTARTS   AGE
    mysql57-0   2/2     Running   0          89m
    mysql57-1   2/2     Running   0          90m
    mysql57-2   2/2     Running   0          91m
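For scripting, the same check can be automated by reading the READY column. The sketch below is one possible approach: the function name `all_pods_ready` is hypothetical, and it is fed the listing shown above rather than live cluster output.

```shell
#!/usr/bin/env bash

# Hypothetical helper: read `kubectl get pods` output on stdin and succeed
# only if at least one pod is listed and every READY value is full (n/n).
all_pods_ready() {
  awk '
    NR == 1 { next }                     # skip the header row
    NF >= 2 {
      n++
      split($2, ready, "/")              # READY looks like "2/2"
      if (ready[1] != ready[2]) bad = 1
    }
    END { exit (bad || n == 0) ? 1 : 0 }
  '
}

# Fed with the listing from this post instead of a live cluster.
all_pods_ready <<'EOF' && echo "all pods ready"
NAME READY STATUS RESTARTS AGE
mysql57-0 2/2 Running 0 89m
mysql57-1 2/2 Running 0 90m
mysql57-2 2/2 Running 0 91m
EOF
```

Against a real cluster, pipe `kubectl get pods -l app=mysql57` into the function, without `--watch`, since the watch never ends and the check would never return.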

With that, a MySQL cluster with master-slave replication is deployed on Rancher.