Elastic-job Series (3) -------- Console Job Event Tracing TODO

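I. Introduction

Elastic-Job (esjob) provides job event tracing. Once a data source is configured and an event listener is registered, two tables, job_execution_log and job_status_trace_log, are created in that data source when a job runs. After the same event-trace data source is added in the console, the execution records can be viewed under the job history.

This post reuses the simple-type job configured in the previous post.

II. Integration Steps

2.1 pom.xml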

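<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.1.5.RELEASE</version>
    </parent>
    <groupId>com.elasticjob</groupId>
    <artifactId>demo</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>demo</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
        <java.version>1.8</java.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <dependency>
            <artifactId>elastic-job-common-core</artifactId>
            <groupId>com.dangdang</groupId>
            <version>2.1.5</version>
        </dependency>
        <dependency>
            <artifactId>elastic-job-lite-core</artifactId>
            <groupId>com.dangdang</groupId>
            <version>2.1.5</version>
        </dependency>
        <dependency>
            <artifactId>elastic-job-lite-spring</artifactId>
            <groupId>com.dangdang</groupId>
            <version>2.1.5</version>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <version>1.16.18</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <scope>runtime</scope>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>druid-spring-boot-starter</artifactId>
            <version>1.1.9</version>
        </dependency>
        <dependency>
            <groupId>org.mybatis.spring.boot</groupId>
            <artifactId>mybatis-spring-boot-starter</artifactId>
            <version>1.3.2</version>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>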

2.2 application.yml

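# Port
server:
  port: 8080

# Spring configuration
spring:
  # Application name
  application:
    name: elastic-job-demo
  # Elastic-Job data source
  datasource:
    elasticjob:
      driverClassName: com.mysql.jdbc.Driver
      username: root
      password: 123456
      # With Spring Boot 2.0 this key is jdbc-url
      jdbc-url: jdbc:mysql://localhost:3306/elastic-job?useUnicode=true&characterEncoding=UTF-8&allowMultiQueries=true&serverTimezone=GMT%2B8
      type: com.alibaba.druid.pool.DruidDataSource

# ZooKeeper registry center configuration
reg-center:
  # ZooKeeper server list in IP:port form; separate multiple addresses with commas
  server-list: "IP:2181,IP:2182,IP:2183"
  # ZooKeeper namespace
  namespace: zk-elastic-job
  # Initial retry wait interval in milliseconds (default 1000)
  baseSleepTimeMilliseconds: 1000
  # Maximum retry wait interval in milliseconds (default 3000)
  maxSleepTimeMilliseconds: 3000
  # Maximum number of retries (default 3)
  maxRetries: 3
  # Session timeout in milliseconds (default 60000)
  sessionTimeoutMilliseconds: 60000
  # Connection timeout in milliseconds (default 15000)
  connectionTimeoutMilliseconds: 15000
  # Digest (permission token) for connecting to ZooKeeper; not required by default
  #digest:

# simpleJobDemo job configuration
simpleJobDemo:
  # Cron expression that controls when the job fires
  cron: 0 * * * * ?
  # Total number of shards
  sharding-total-count: 3
  # Sharding item and parameter are joined by "="; pairs are separated by commas.
  # Sharding items start at 0 and must be less than the total shard count.
  # (上海 = Shanghai, 北京 = Beijing, 深圳 = Shenzhen)
  sharding-item-parameters: 0=上海,1=北京,2=深圳
  # Custom job parameter, passed to the job's business method to implement parameterized jobs
  job-parameter: "simpleJobDemo作业参数"
  # Whether failover is enabled. If a job node crashes partway through an execution,
  # the unfinished work may be re-executed on another job node. Default: false
  failover: true
  # Whether missed executions are re-run. Default: true
  misfire: true
  # Job description
  job-description: "simpleJobDemo作业描述"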
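The format of sharding-item-parameters is easier to see in code. The following is a minimal sketch in plain Java of how such a string could be split into item/parameter pairs while enforcing the "starts at 0, less than the total count" rule from the comment above. The class and method names are hypothetical and are not part of Elastic-Job; the framework does its own parsing internally.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper for illustration only; not an Elastic-Job class.
class ShardingItemParametersSketch {

    // Parses "0=a,1=b" into {0=a, 1=b} and rejects items outside [0, shardingTotalCount).
    static Map<Integer, String> parse(String raw, int shardingTotalCount) {
        Map<Integer, String> result = new LinkedHashMap<>();
        for (String pair : raw.split(",")) {
            String[] kv = pair.trim().split("=", 2);
            if (kv.length != 2) {
                throw new IllegalArgumentException("Expected item=parameter but got: " + pair);
            }
            int item = Integer.parseInt(kv[0].trim());
            if (item < 0 || item >= shardingTotalCount) {
                throw new IllegalArgumentException("Sharding item out of range: " + item);
            }
            result.put(item, kv[1].trim());
        }
        return result;
    }

    public static void main(String[] args) {
        // English city names stand in for the values used in application.yml.
        System.out.println(parse("0=Shanghai,1=Beijing,2=Shenzhen", 3));
    }
}
```

With sharding-total-count set to 3, an entry such as 3=... would be rejected, which matches the constraint stated in the configuration comment.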

2.3 ZooKeeper registry center configuration

package com.elasticjob.demo.config.esjob;

import com.dangdang.ddframe.job.reg.zookeeper.ZookeeperConfiguration;
import com.dangdang.ddframe.job.reg.zookeeper.ZookeeperRegistryCenter;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

/**
 * ZooKeeper registry center configuration
 */
@Configuration
public class ZkRegistryCenterConfig {

    @Value("${reg-center.server-list}")
    private String serverList;

    @Value("${reg-center.namespace}")
    private String namespace;

    // init() is called after construction to connect to ZooKeeper
    @Bean(initMethod = "init")
    public ZookeeperRegistryCenter regCenter() {
        return new ZookeeperRegistryCenter(new ZookeeperConfiguration(serverList, namespace));
    }
}

2.4 Elastic-Job data source

package com.elasticjob.demo.config.esjob;

import org.apache.ibatis.session.SqlSessionFactory;
import org.mybatis.spring.SqlSessionFactoryBean;
import org.mybatis.spring.SqlSessionTemplate;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.boot.jdbc.DataSourceBuilder;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.jdbc.datasource.DataSourceTransactionManager;

import javax.sql.DataSource;

/**
 * @author qjwyss
 * @date 2019/5/23
 * @description Elastic-Job data source
 */
@Configuration
public class ElasticJobDatabase {

    // Bound to the spring.datasource.elasticjob.* properties in application.yml
    @Bean
    @ConfigurationProperties(prefix = "spring.datasource.elasticjob")
    public DataSource elasticjobDataSource() {
        return DataSourceBuilder.create().build();
    }

    @Bean
    public SqlSessionFactory elasticjobSqlSessionFactory(@Qualifier("elasticjobDataSource") DataSource dataSource) throws Exception {
        SqlSessionFactoryBean bean = new SqlSessionFactoryBean();
        bean.setDataSource(dataSource);
        return bean.getObject();
    }

    @Bean
    public DataSourceTransactionManager elasticjobTransactionManager(@Qualifier("elasticjobDataSource") DataSource dataSource) {
        return new DataSourceTransactionManager(dataSource);
    }

    @Bean
    public SqlSessionTemplate elasticjobSqlSessionTemplate(@Qualifier("elasticjobSqlSessionFactory") SqlSessionFactory sqlSessionFactory) throws Exception {
        return new SqlSessionTemplate(sqlSessionFactory);
    }
}

Note: a dedicated data source is registered for Elastic-Job here. When there are many jobs and they run frequently, a large number of trace records is generated, so the trace database can be deployed separately.

2.5 JobEventConfig event listener configuration

package com.elasticjob.demo.config.esjob;

import com.dangdang.ddframe.job.event.JobEventConfiguration;
import com.dangdang.ddframe.job.event.rdb.JobEventRdbConfiguration;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

import javax.sql.DataSource;

@Configuration
public class JobEventConfig {

    // The data source defined in section 2.4
    @Autowired
    private DataSource dataSource;

    // Writes job events to the relational database behind dataSource
    @Bean
    public JobEventConfiguration jobEventConfiguration() {
        return new JobEventRdbConfiguration(dataSource);
    }
}

2.6 Global job configuration

package com.elasticjob.demo.config.esjob;

import com.dangdang.ddframe.job.event.JobEventConfiguration;
import com.dangdang.ddframe.job.lite.api.JobScheduler;
import com.dangdang.ddframe.job.lite.config.LiteJobConfiguration;
import com.dangdang.ddframe.job.lite.spring.api.SpringJobScheduler;
import com.dangdang.ddframe.job.reg.zookeeper.ZookeeperRegistryCenter;
import com.elasticjob.demo.taskjob.simplejob.SimpleJobDemo;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

/**
 * Global job configuration
 */
@Configuration
public class JobConfig {

    @Autowired
    private ZookeeperRegistryCenter regCenter;

    @Autowired
    private LiteJobConfiguration liteJobConfiguration;

    @Autowired
    private JobEventConfiguration jobEventConfiguration;

    @Autowired
    private SimpleJobDemo simpleJobDemo;

    // Passing jobEventConfiguration to the scheduler enables event tracing for this job
    @Bean(initMethod = "init")
    public JobScheduler simpleJobScheduler() {
        return new SpringJobScheduler(simpleJobDemo, regCenter, liteJobConfiguration, jobEventConfiguration);
    }
}

2.7 SimpleJobDemo job configuration

package com.elasticjob.demo.taskjob.simplejob;

import com.dangdang.ddframe.job.config.JobCoreConfiguration;
import com.dangdang.ddframe.job.config.simple.SimpleJobConfiguration;
import com.dangdang.ddframe.job.lite.config.LiteJobConfiguration;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

/**
 * SimpleJobDemo job configuration
 */
@Configuration
public class SimpleJobDemoJobProperties {

    @Value("${simpleJobDemo.cron}")
    private String cron;

    @Value("${simpleJobDemo.sharding-total-count}")
    private int shardingTotalCount;

    @Value("${simpleJobDemo.sharding-item-parameters}")
    private String shardingItemParameters;

    @Value("${simpleJobDemo.job-description}")
    private String jobDescription;

    @Value("${simpleJobDemo.job-parameter}")
    private String jobParameter;

    @Autowired
    private SimpleJobDemo simpleJobDemo;

    @Bean
    public LiteJobConfiguration liteJobConfiguration() {
        // Core settings: job name (the job's class name), cron expression, shard count
        JobCoreConfiguration.Builder builder =
                JobCoreConfiguration.newBuilder(simpleJobDemo.getClass().getName(), cron, shardingTotalCount);
        JobCoreConfiguration jobCoreConfiguration = builder
                .shardingItemParameters(shardingItemParameters)
                .description(jobDescription)
                .jobParameter(jobParameter)
                .build();
        SimpleJobConfiguration simpleJobConfiguration = new SimpleJobConfiguration(jobCoreConfiguration, simpleJobDemo.getClass().getCanonicalName());
        // overwrite(true): the local configuration replaces the one stored in the registry center
        return LiteJobConfiguration
                .newBuilder(simpleJobConfiguration)
                .overwrite(true)
                .build();
    }
}

2.8 SimpleJobDemo

package com.elasticjob.demo.taskjob.simplejob;

import com.dangdang.ddframe.job.api.ShardingContext;
import com.dangdang.ddframe.job.api.simple.SimpleJob;
import lombok.extern.slf4j.Slf4j;
import org.springframework.stereotype.Component;

@Component
@Slf4j
public class SimpleJobDemo implements SimpleJob {

    @Override
    public void execute(ShardingContext shardingContext) {
        // Log the sharding context of this execution
        log.info("shardingContext的信息为:" + shardingContext);
        switch (shardingContext.getShardingItem()) {
            case 0:
                // Shanghai shard: sum of 1 to 3 is 6
                System.out.println("上海分片执行的任务:求和1到3完成,结果为:6");
                break;
            case 1:
                // Beijing shard: sum of 4 to 7 is 22
                System.out.println("北京分片执行的任务:求和4到7完成,结果为:22");
                break;
            case 2:
                // Shenzhen shard: sum of 8 to 10 is 27
                System.out.println("深圳分片执行的任务:求和8到10完成,结果为:27");
                break;
        }
    }
}
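The job above prints hard-coded results. As a minimal sketch of how each shard could compute its own slice instead, the following plain-Java class maps a sharding item to the same ranges used in the messages (1 to 3, 4 to 7, 8 to 10) and sums them. The class and method names are hypothetical, and the range mapping is an assumption read off the printed text; in the real job the sharding item would come from shardingContext.getShardingItem().

```java
import java.util.stream.IntStream;

// Hypothetical illustration; not part of the demo project or of Elastic-Job.
class ShardSumSketch {

    // Returns the inclusive {from, to} range handled by the given sharding item.
    static int[] rangeFor(int shardingItem) {
        switch (shardingItem) {
            case 0: return new int[] {1, 3};
            case 1: return new int[] {4, 7};
            case 2: return new int[] {8, 10};
            default: throw new IllegalArgumentException("Unknown sharding item: " + shardingItem);
        }
    }

    // Sums the integers in the range assigned to the given sharding item.
    static int sumFor(int shardingItem) {
        int[] range = rangeFor(shardingItem);
        return IntStream.rangeClosed(range[0], range[1]).sum();
    }

    public static void main(String[] args) {
        for (int item = 0; item < 3; item++) {
            System.out.println("shard " + item + " -> " + sumFor(item));
        }
    }
}
```

The three partial sums are 6, 22 and 27, which together give 55, the sum of 1 to 10. Each shard works only on its own slice of the data.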

2.9 Running

- Start the project. Output like the following appears:

2019-05-23 15:19:30.382 INFO 15492 --- [SimpleJobDemo-1] c.e.d.taskjob.simplejob.SimpleJobDemo : shardingContext的信息为:ShardingContext(jobName=com.elasticjob.demo.taskjob.simplejob.SimpleJobDemo, taskId=com.elasticjob.demo.taskjob.simplejob.SimpleJobDemo@-@0,1,2@-@READY@[email protected]@-@15492, shardingTotalCount=3, jobParameter=simpleJobDemo作业参数, shardingItem=0, shardingParameter=上海)
上海分片执行的任务:求和1到3完成,结果为:6
2019-05-23 15:19:30.383 INFO 15492 --- [SimpleJobDemo-2] c.e.d.taskjob.simplejob.SimpleJobDemo : shardingContext的信息为:ShardingContext(jobName=com.elasticjob.demo.taskjob.simplejob.SimpleJobDemo, taskId=com.elasticjob.demo.taskjob.simplejob.SimpleJobDemo@-@0,1,2@-@READY@[email protected]@-@15492, shardingTotalCount=3, jobParameter=simpleJobDemo作业参数, shardingItem=1, shardingParameter=北京)
北京分片执行的任务:求和4到7完成,结果为:22
2019-05-23 15:19:30.385 INFO 15492 --- [SimpleJobDemo-3] c.e.d.taskjob.simplejob.SimpleJobDemo : shardingContext的信息为:ShardingContext(jobName=com.elasticjob.demo.taskjob.simplejob.SimpleJobDemo, taskId=com.elasticjob.demo.taskjob.simplejob.SimpleJobDemo@-@0,1,2@-@READY@[email protected]@-@15492, shardingTotalCount=3, jobParameter=simpleJobDemo作业参数, shardingItem=2, shardingParameter=深圳)
深圳分片执行的任务:求和8到10完成,结果为:27
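The taskId in the log packs several fields into one string joined by "@-@". The same value is stored in the task_id column of the trace tables, so it helps to be able to take it apart. The sketch below splits such a string into five parts. The field meanings (job name, sharding items, state, IP, process ID) are inferred from the log output rather than taken from the library documentation, the class name is hypothetical, and the IP in the example is a placeholder because the address in the log is masked.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

// Hypothetical parser for illustration; field meanings are inferred from the log output.
class TaskIdSketch {

    static final String DELIMITER = "@-@";

    final String jobName;
    final List<Integer> shardingItems;
    final String state;
    final String ip;
    final String pid;

    private TaskIdSketch(String jobName, List<Integer> shardingItems, String state, String ip, String pid) {
        this.jobName = jobName;
        this.shardingItems = shardingItems;
        this.state = state;
        this.ip = ip;
        this.pid = pid;
    }

    // Splits "job@-@0,1,2@-@READY@-@ip@-@pid" into its five parts.
    static TaskIdSketch parse(String taskId) {
        String[] parts = taskId.split(Pattern.quote(DELIMITER));
        if (parts.length != 5) {
            throw new IllegalArgumentException("Expected 5 fields but got " + parts.length);
        }
        List<Integer> items = Arrays.stream(parts[1].split(","))
                .map(String::trim)
                .map(Integer::valueOf)
                .collect(Collectors.toList());
        return new TaskIdSketch(parts[0], items, parts[2], parts[3], parts[4]);
    }

    public static void main(String[] args) {
        // 192.0.2.10 is a placeholder address, not the one from the original log.
        TaskIdSketch id = parse("com.elasticjob.demo.taskjob.simplejob.SimpleJobDemo@-@0,1,2@-@READY@-@192.0.2.10@-@15492");
        System.out.println(id.jobName + " items=" + id.shardingItems + " state=" + id.state + " pid=" + id.pid);
    }
}
```

The last field, 15492 in the log, matches the process ID that Spring Boot prints at the start of each log line.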

三、查看控制台

3.1 数据库

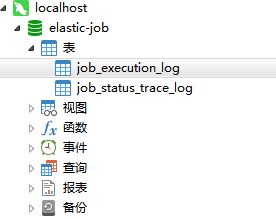

- 当项目启动并有任务执行的时候,会生成一下俩张表,如下图:

- job_execution_log table structure (detailed explanation TODO)

CREATE TABLE `job_execution_log` (
  `id` varchar(40) NOT NULL,
  `job_name` varchar(100) NOT NULL,
  `task_id` varchar(255) NOT NULL,
  `hostname` varchar(255) NOT NULL,
  `ip` varchar(50) NOT NULL,
  `sharding_item` int(11) NOT NULL,
  `execution_source` varchar(20) NOT NULL,
  `failure_cause` varchar(4000) DEFAULT NULL,
  `is_success` int(11) NOT NULL,
  `start_time` timestamp NULL DEFAULT NULL,
  `complete_time` timestamp NULL DEFAULT NULL,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

- job_status_trace_log table structure (detailed explanation TODO)

CREATE TABLE `job_status_trace_log` (
  `id` varchar(40) NOT NULL,
  `job_name` varchar(100) NOT NULL,
  `original_task_id` varchar(255) NOT NULL,
  `task_id` varchar(255) NOT NULL,
  `slave_id` varchar(50) NOT NULL,
  `source` varchar(50) NOT NULL,
  `execution_type` varchar(20) NOT NULL,
  `sharding_item` varchar(100) NOT NULL,
  `state` varchar(20) NOT NULL,
  `message` varchar(4000) DEFAULT NULL,
  `creation_time` timestamp NULL DEFAULT NULL,
  PRIMARY KEY (`id`),
  KEY `TASK_ID_STATE_INDEX` (`task_id`,`state`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;
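Until the console side is working, the trace data can also be read directly from the database. The following is a rough sketch, using only plain JDBC, of querying the most recent rows of job_execution_log for one job and summarizing each row. The column names come from the table definition above. The class and method names are hypothetical, and treating a non-zero is_success as success is an assumption that should be checked against real data.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Timestamp;
import java.util.ArrayList;
import java.util.List;

// Hypothetical reader for illustration; not part of Elastic-Job or its console.
class ExecutionLogQuerySketch {

    static final String RECENT_SQL =
            "SELECT job_name, sharding_item, is_success, start_time, complete_time "
          + "FROM job_execution_log WHERE job_name = ? ORDER BY start_time DESC LIMIT ?";

    // Formats one row; assumes a non-zero is_success means the execution succeeded.
    static String describe(String jobName, int shardingItem, int isSuccess, Timestamp start, Timestamp complete) {
        String duration = (start == null || complete == null)
                ? "unknown"
                : (complete.getTime() - start.getTime()) + " ms";
        String outcome = isSuccess != 0 ? "success" : "failure";
        return jobName + " item=" + shardingItem + " " + outcome + " duration=" + duration;
    }

    // Reads the latest executions of a job through an already opened connection.
    static List<String> recent(Connection conn, String jobName, int limit) throws SQLException {
        List<String> rows = new ArrayList<>();
        try (PreparedStatement ps = conn.prepareStatement(RECENT_SQL)) {
            ps.setString(1, jobName);
            ps.setInt(2, limit);
            try (ResultSet rs = ps.executeQuery()) {
                while (rs.next()) {
                    rows.add(describe(rs.getString("job_name"), rs.getInt("sharding_item"),
                            rs.getInt("is_success"), rs.getTimestamp("start_time"),
                            rs.getTimestamp("complete_time")));
                }
            }
        }
        return rows;
    }

    public static void main(String[] args) {
        // Demonstrates the formatting only; no database connection is opened here.
        Timestamp start = Timestamp.valueOf("2019-05-23 15:19:30");
        Timestamp complete = Timestamp.valueOf("2019-05-23 15:19:31");
        System.out.println(describe("demoJob", 0, 1, start, complete));
    }
}
```

In the demo project, the Connection could be obtained from the elasticjobDataSource bean defined in section 2.4.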

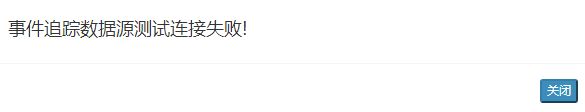

3.2 Adding the event-trace data source in the console TODO

Note: this step has kept failing so far. TODO

3.3 Viewing the records

IV. Elastic-job Series

- Elastic-job Series (1) -------- Setting Up the Esjob Console

- Elastic-job Series (2) -------- Simple-Type Jobs

- Elastic-job Series (3) -------- Console Job Event Tracing TODO