Python 层次聚类+K-means算法+可视化 数据挖掘

层次聚类:

import pandas as pd

import scipy.cluster.hierarchy as sch

from sklearn.cluster import AgglomerativeClustering

from sklearn.preprocessing import MinMaxScaler

from matplotlib import pyplot as plt

# 读取数据

data = pd.read_csv(r'C:/Users/24224/Desktop/countries/test2.csv',index_col=0)

# print(data.head())

df = MinMaxScaler().fit_transform(data)

# 建立模型

model = AgglomerativeClustering(n_clusters=4)

model.fit(df)

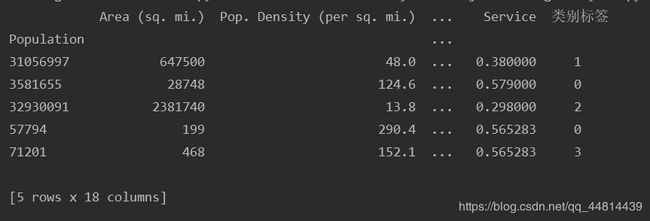

data['类别标签'] = model.labels_

print(data.head())

# 画图

ss = sch.linkage(df,method='ward')

sch.dendrogram(ss)

plt.show()

预处理,填充缺失值

# -*- coding: utf-8 -*-

#导入相应的包

import scipy

import scipy.cluster.hierarchy as sch

from scipy.cluster.vq import vq,kmeans,whiten

import numpy as np

import math

import matplotlib.pylab as plt

import pandas as pd

#导入pandas包

df= pd.read_csv("C:/Users/24224/Desktop/countries/countries of the world.csv")

df=df.drop(['Country','Region'],axis=1)

df = df.apply(pd.to_numeric, errors='coerce')

df = df.fillna(df.mean())

df.isnull()

"""

df['Net migration'].fillna(df['Net migration'].mean())

df['Infant mortality (per 1000 births)'].fillna(df['Infant mortality (per 1000 births)'].mean())

df['GDP ($ per capita)'].fillna(df['GDP ($ per capita)'].mean())

df['Literacy (%)'].fillna(df['Literacy (%)'].mean())

df['Phones (per 1000)'].fillna(df['Phones (per 1000)'].mean())

df['Arable (%)'].fillna(df['Arable (%)'].mean())

df['Crops (%)'].fillna(df['Crops (%)'].mean())

df['Other (%)'].fillna(df['Other (%)'].mean())

df['Climate'].fillna(df['Climate'].mean())

df['Birthrate'].fillna(df['Birthrate'].mean())

df['Deathrate'].fillna(df['Deathrate'].mean())

df['Agriculture'].fillna(df['Agriculture'].mean())

df['Industry'].fillna(df['Industry'].mean())

df['Service'].fillna(df['Service'].mean())"""

df.to_csv("C:/Users/24224/Desktop/countries/test2.csv",index=False,sep=',')

k-means聚类:

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

# 加载数据

def loadDataSet(fileName):

data = pd.read_csv(fileName,usecols=[17,6])

return data

# 欧氏距离计算

def distEclud(x, y):

return np.sqrt(np.sum((x - y) ** 2)) # 计算欧氏距离

# 为给定数据集构建一个包含K个随机质心的集合

def randCent(dataSet, k):

m, n = dataSet.shape

centroids = np.zeros((k, n))

for i in range(k):

index = int(np.random.uniform(0, m)) #

centroids[i, :] = dataSet[index, :]

return centroids

# k均值聚类

def KMeans(dataSet, k):

m = np.shape(dataSet)[0] # 行的数目

# 第一列存样本属于哪一簇,第二列存样本的到簇的中心点的误差

clusterAssment = np.mat(np.zeros((m, 2))) #生成矩阵

clusterChange = True

# 第1步 初始化centroids

centroids = randCent(dataSet, k)

while clusterChange: # while True

clusterChange = False

# 遍历所有的样本(行数)

for i in range(m):

minDist = 100000.0

minIndex = -1# 遍历所有的质心

# 第2步 找出最近的质心

for j in range(k):

# 计算该样本到质心的欧式距离

distance = distEclud(centroids[j, :], dataSet[i, :]) # minDist = 100000.0 minIndex = -1

if distance < minDist:

minDist = distance

minIndex = j #质心为j

# clusterAssment,第一列存样本属于哪一簇,第二列存样本的到簇的中心点的误差,

# 第 3 步:更新每一行样本所属的簇

if clusterAssment[i, 0] != minIndex: #clusterAssment = np.mat(np.zeros((m, 2))) #生成矩阵

clusterChange = True

clusterAssment[i, :] = minIndex, minDist ** 2

# 第 4 步:更新k个质心

for j in range(k):

pointsInCluster = dataSet[np.nonzero(clusterAssment[:, 0].A == j)[0]] # 获取簇类所有的点,非零元素

centroids[j, :] = np.mean(pointsInCluster, axis=0) # 对矩阵的行求均值

print("Congratulations,cluster complete!")

return centroids, clusterAssment

def showCluster(dataSet, k, centroids, clusterAssment):

m, n = dataSet.shape

if n != 2:

print("数据不是二维的")

return 1

mark = ['or', 'ob', 'og', 'ok', '^r', '+r', 'sr', 'dr', ', 'pr']

if k > len(mark):

print("k值太大了,不够标记了")

return 1

# 绘制所有的样本

for i in range(m):

markIndex = int(clusterAssment[i, 0])

plt.plot(dataSet[i, 0], dataSet[i, 1], mark[markIndex])

mark = ['Dr', 'Db', 'Dg', 'Dk', '^b', '+b', 'sb', 'db', ', 'pb']

# 绘制质心

for i in range(k):

plt.plot(centroids[i, 0], centroids[i, 1], mark[i])

plt.show()

dataSet = loadDataSet("C:/Users/24224/Desktop/countries/test2.csv")

k = 4

centroids, clusterAssment = KMeans(dataSet.values, k)

showCluster(dataSet.values, k, centroids, clusterAssment)