Getting Started with elastic-job: A Worked Example

- 说明

- 功能列表

- 任务分片

- 多任务类型

- 云原生

- 容错性

- 任务聚合

- 易用性

- 构建工具

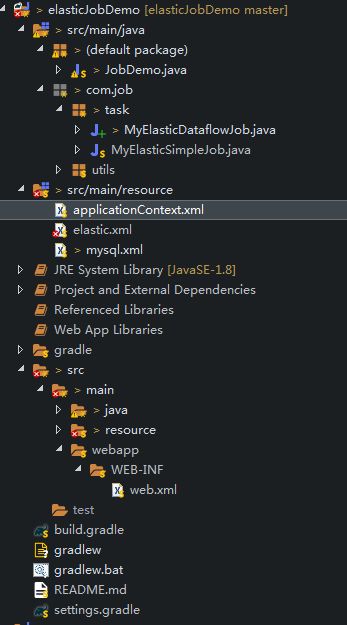

- 项目结构如下

- 引入依赖

- SimpleJob 简单作业

- DataFlowJob 数据流作业

- 测试以上两种作业

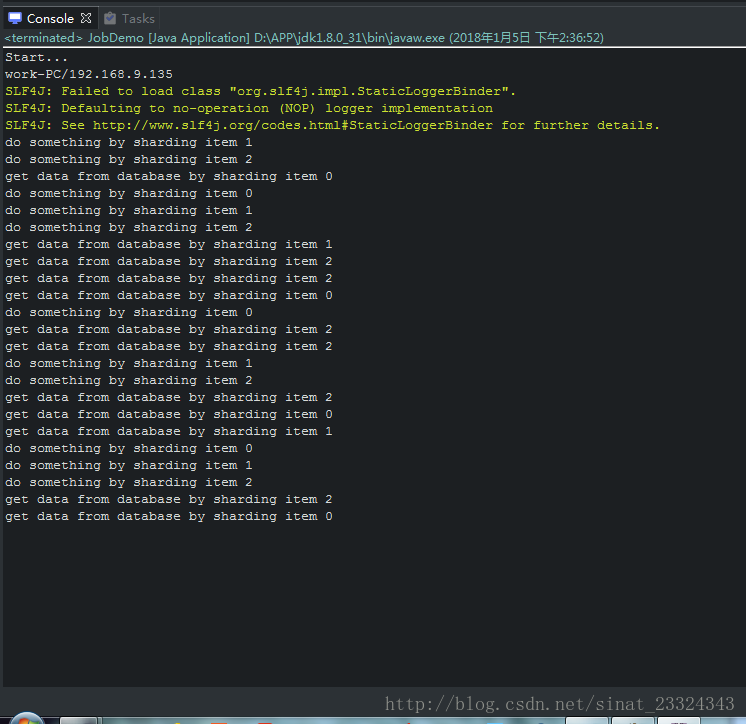

- 运行结果

- 创建elasticxml配置文件

- 配置datasource

- 创建applicationContextxml文件

- 配置webxml

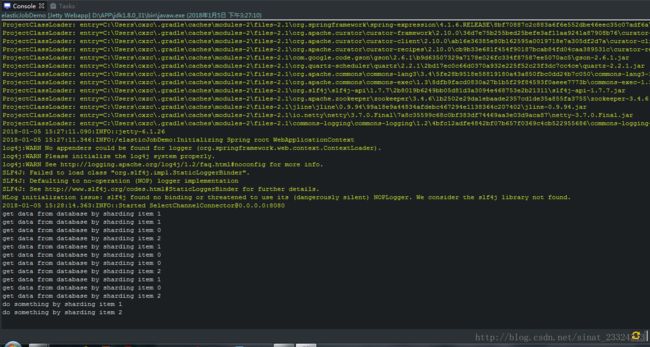

- 运作结果

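Overview

Elastic-Job is a distributed scheduling solution made up of two independent sub-projects, Elastic-Job-Lite and Elastic-Job-Cloud.

Elastic-Job-Lite is positioned as a lightweight, decentralized solution that provides distributed job coordination in the form of a jar. Elastic-Job-Cloud is built on a self-developed Mesos Framework and additionally provides resource management, application distribution, and process isolation.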

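Feature list

1. Job sharding

- Splits a whole job into multiple sub-tasks (sharding items)

- Scales processing capacity elastically as servers are added or removed

- Distributed coordination: servers going online or offline are detected and handled automatically

2. Multiple job types

- Time-driven jobs

- Data-driven jobs (TBD)

- Supports both resident (daemon) jobs and transient jobs

- Multi-language job support

3. Cloud native

- Integrates with scheduling platforms such as Mesos or Kubernetes

- Jobs do not depend on stateful components such as IP addresses, disks, or data

- Resource scheduling with allocation based on Netflix Fenzo

4. Fault tolerance

- Periodic self-diagnosis of failures with automatic repair

- Guarantees that each sharding item of a distributed job runs on only one instance

- Supports failover and re-triggering of misfired jobs

5. Job aggregation

- Jobs of the same kind are aggregated onto the same executor and handled together

- Saves system resources and initialization overhead

- Dynamically allocates additional resources to newly assigned jobs

6. Ease of use

- A full-featured operations console

- Tracing of job execution history

- One-click dump of registry center data for backup and debugging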

For the related concepts, see the official website: http://elasticjob.io/index_zh.html

Now let's build a small example.
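To make the job sharding idea from the feature list more concrete, here is a minimal sketch in plain Java of how sharding items could be split evenly across servers. It does not use elastic-job itself: the class `ShardingSketch`, the method `allocate`, and the server names are hypothetical, and the even-split rule (leftover items go to the first servers) is an assumption modeled on the idea of an average allocation strategy, not the library's actual code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration only; not part of elastic-job.
class ShardingSketch {

    // Splits sharding items 0..shardingTotalCount-1 evenly across the servers.
    // Leftover items are handed out one by one, starting with the first server.
    static Map<String, List<Integer>> allocate(List<String> servers, int shardingTotalCount) {
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        if (servers.isEmpty()) {
            return result;
        }
        int perServer = shardingTotalCount / servers.size();
        int index = 0;
        for (String server : servers) {
            List<Integer> items = new ArrayList<>();
            for (int i = index * perServer; i < (index + 1) * perServer; i++) {
                items.add(i);
            }
            result.put(server, items);
            index++;
        }
        int remainder = shardingTotalCount % servers.size();
        int firstLeftover = perServer * servers.size();
        index = 0;
        for (List<Integer> items : result.values()) {
            if (index < remainder) {
                items.add(firstLeftover + index);
            }
            index++;
        }
        return result;
    }

    public static void main(String[] args) {
        // Three servers share 10 sharding items.
        System.out.println(allocate(Arrays.asList("server-a", "server-b", "server-c"), 10));
        // One server goes offline: the same 10 items are re-split across two servers.
        System.out.println(allocate(Arrays.asList("server-a", "server-b"), 10));
    }
}
```

With three servers and 10 items, the sketch gives server-a the items [0, 1, 2, 9], server-b [3, 4, 5], and server-c [6, 7, 8]. With two servers, each one takes five items. Re-running the allocation when the server list changes is what lets capacity follow the number of servers.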

Build tool

Gradle

Project structure

Adding dependencies

In the build.gradle file:

    // elastic-job
    [group: 'com.dangdang', name: 'elastic-job-lite-core', version: '2.1.5'],
    [group: 'com.dangdang', name: 'elastic-job-lite-spring', version: '2.1.5']

SimpleJob: simple job

    package com.job.task;

    import com.dangdang.ddframe.job.api.ShardingContext;
    import com.dangdang.ddframe.job.api.simple.SimpleJob;

    public class MyElasticSimpleJob implements SimpleJob {

        // Called once per sharding item assigned to this instance on each trigger.
        @Override
        public void execute(ShardingContext context) {
            switch (context.getShardingItem()) {
                case 0:
                    System.out.println("do something by sharding item 0");
                    break;
                case 1:
                    System.out.println("do something by sharding item 1");
                    break;
                case 2:
                    System.out.println("do something by sharding item 2");
                    break;
                // case n: ...
                default:
                    break;
            }
        }
    }

DataflowJob: dataflow job

    package com.job.task;

    import java.util.ArrayList;
    import java.util.List;

    import com.dangdang.ddframe.job.api.ShardingContext;
    import com.dangdang.ddframe.job.api.dataflow.DataflowJob;

    public class MyElasticDataflowJob implements DataflowJob<String> {

        // Returns the data to process for the given sharding item.
        @Override
        public List<String> fetchData(ShardingContext context) {
            switch (context.getShardingItem()) {
                case 0:
                    // get data from database by sharding item 0
                    List<String> data1 = new ArrayList<>();
                    data1.add("get data from database by sharding item 0");
                    return data1;
                case 1:
                    // get data from database by sharding item 1
                    List<String> data2 = new ArrayList<>();
                    data2.add("get data from database by sharding item 1");
                    return data2;
                case 2:
                    // get data from database by sharding item 2
                    List<String> data3 = new ArrayList<>();
                    data3.add("get data from database by sharding item 2");
                    return data3;
                // case n: ...
                default:
                    // No data for the remaining sharding items.
                    return null;
            }
        }

        // Processes the data returned by fetchData for the same sharding item.
        @Override
        public void processData(ShardingContext shardingContext, List<String> data) {
            int count = 0;
            // process data
            // ...
            for (String string : data) {
                count++;
                System.out.println(string);
                // Stop once more than 10 entries have been printed.
                if (count > 10) {
                    return;
                }
            }
        }
    }

Testing the two jobs

    import java.net.InetAddress;
    import java.net.UnknownHostException;

    import com.dangdang.ddframe.job.config.JobCoreConfiguration;
    import com.dangdang.ddframe.job.config.dataflow.DataflowJobConfiguration;
    import com.dangdang.ddframe.job.config.simple.SimpleJobConfiguration;
    import com.dangdang.ddframe.job.lite.api.JobScheduler;
    import com.dangdang.ddframe.job.lite.config.LiteJobConfiguration;
    import com.dangdang.ddframe.job.reg.base.CoordinatorRegistryCenter;
    import com.dangdang.ddframe.job.reg.zookeeper.ZookeeperConfiguration;
    import com.dangdang.ddframe.job.reg.zookeeper.ZookeeperRegistryCenter;
    import com.job.task.MyElasticDataflowJob;
    import com.job.task.MyElasticSimpleJob;

    public class JobDemo {

        public static void main(String[] args) throws UnknownHostException {
            System.out.println("Start...");
            System.out.println(InetAddress.getLocalHost());
            // Both jobs share one registry center (a single ZooKeeper connection).
            CoordinatorRegistryCenter regCenter = createRegistryCenter();
            new JobScheduler(regCenter, createSimpleJobConfiguration()).init();
            new JobScheduler(regCenter, createDataflowJobConfiguration()).init();
        }

        // Connects to a local ZooKeeper and uses "new-elastic-job-demo" as the namespace.
        private static CoordinatorRegistryCenter createRegistryCenter() {
            CoordinatorRegistryCenter regCenter = new ZookeeperRegistryCenter(
                    new ZookeeperConfiguration("127.0.0.1:2181", "new-elastic-job-demo"));
            regCenter.init();
            return regCenter;
        }

        private static LiteJobConfiguration createSimpleJobConfiguration() {
            // Core job configuration: job name, cron (every 15 seconds), sharding total count.
            JobCoreConfiguration simpleCoreConfig = JobCoreConfiguration.newBuilder("SimpleJobDemo", "0/15 * * * * ?", 10).build();
            // SIMPLE job type configuration.
            SimpleJobConfiguration simpleJobConfig = new SimpleJobConfiguration(simpleCoreConfig, MyElasticSimpleJob.class.getCanonicalName());
            // Lite job root configuration.
            return LiteJobConfiguration.newBuilder(simpleJobConfig).build();
        }

        private static LiteJobConfiguration createDataflowJobConfiguration() {
            // Core job configuration: job name, cron (every 30 seconds), sharding total count.
            JobCoreConfiguration dataflowCoreConfig = JobCoreConfiguration.newBuilder("DataflowJob", "0/30 * * * * ?", 10).build();
            // DATAFLOW job type configuration; the last argument (true) enables streaming processing.
            DataflowJobConfiguration dataflowJobConfig = new DataflowJobConfiguration(dataflowCoreConfig, MyElasticDataflowJob.class.getCanonicalName(), true);
            // Lite job root configuration.
            return LiteJobConfiguration.newBuilder(dataflowJobConfig).build();
        }
    }
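The `true` passed as the third argument to `DataflowJobConfiguration` in JobDemo turns on streaming processing. As a rough mental model, and assuming streaming means "keep fetching and processing until `fetchData` returns null or an empty list", the loop can be sketched in plain Java as below. `StreamingSketch` and `runStreaming` are hypothetical names used only for illustration; this is not the library's executor.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Collections;
import java.util.Deque;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Supplier;

// Hypothetical illustration only; not part of elastic-job.
class StreamingSketch {

    // Repeats fetch -> process until fetch yields null or an empty list.
    // Returns the number of batches that were processed.
    static <T> int runStreaming(Supplier<List<T>> fetchData, Consumer<List<T>> processData) {
        int batches = 0;
        List<T> data = fetchData.get();
        while (data != null && !data.isEmpty()) {
            processData.accept(data);
            batches++;
            data = fetchData.get();
        }
        return batches;
    }

    public static void main(String[] args) {
        // Two pending batches stand in for unprocessed database rows.
        Deque<List<String>> pending = new ArrayDeque<>();
        pending.add(Arrays.asList("row-1", "row-2"));
        pending.add(Arrays.asList("row-3"));

        Supplier<List<String>> fetch =
                () -> pending.isEmpty() ? Collections.<String>emptyList() : pending.poll();
        int batches = runStreaming(fetch, batch -> System.out.println("processing " + batch));
        System.out.println("batches processed: " + batches);
    }
}
```

Under this assumption, a `fetchData` that always returns the same non-empty list would keep the loop running, so a real job would normally mark rows as processed so that later fetches eventually come back empty.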

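Run result

Next, we implement both job types through configuration files.

Creating the elastic.xml configuration file

Set the elastic-job parameters through a configuration file.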
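The elastic.xml in this section sets `sharding-item-parameters="0=A,1=B,2=C"`, which pairs each sharding item number with a parameter value. Inside a job, the value for the current item should be available through `ShardingContext.getShardingParameter()`. To show what the `item=parameter` format encodes, here is a small plain-Java sketch that parses such a string into a map; `ShardingParametersSketch` and `parse` are hypothetical helpers, not library code.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical illustration only; not part of elastic-job.
class ShardingParametersSketch {

    // Parses a string such as "0=A,1=B,2=C" into {0=A, 1=B, 2=C}.
    static Map<Integer, String> parse(String shardingItemParameters) {
        Map<Integer, String> result = new LinkedHashMap<>();
        if (shardingItemParameters == null || shardingItemParameters.trim().isEmpty()) {
            return result;
        }
        for (String pair : shardingItemParameters.split(",")) {
            String[] keyValue = pair.trim().split("=", 2);
            if (keyValue.length != 2) {
                throw new IllegalArgumentException("Expected item=parameter but got: " + pair);
            }
            result.put(Integer.parseInt(keyValue[0].trim()), keyValue[1].trim());
        }
        return result;
    }

    public static void main(String[] args) {
        Map<Integer, String> parameters = parse("0=A,1=B,2=C");
        // The instance running sharding item 1 would work with parameter "B".
        System.out.println(parameters.get(1));
    }
}
```

A typical use is to map each item to a region, table suffix, or similar key, so that a job can select its slice of data by parameter instead of hard-coding item numbers in a `switch`.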

    <beans xmlns="http://www.springframework.org/schema/beans"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xmlns:reg="http://www.dangdang.com/schema/ddframe/reg"
        xmlns:job="http://www.dangdang.com/schema/ddframe/job"
        xsi:schemaLocation="http://www.springframework.org/schema/beans
            http://www.springframework.org/schema/beans/spring-beans.xsd
            http://www.dangdang.com/schema/ddframe/reg
            http://www.dangdang.com/schema/ddframe/reg/reg.xsd
            http://www.dangdang.com/schema/ddframe/job
            http://www.dangdang.com/schema/ddframe/job/job.xsd">

        <!-- ZooKeeper registry center -->
        <reg:zookeeper id="regCenter" server-lists="192.168.6.175:12181"
            namespace="elastic-job" base-sleep-time-milliseconds="1000"
            max-sleep-time-milliseconds="3000" max-retries="3" />

        <!-- Simple job: runs every 30 seconds with 3 sharding items -->
        <job:simple id="JobSimpleJob" class="com.job.task.MyElasticSimpleJob"
            registry-center-ref="regCenter" cron="0/30 * * * * ?"
            sharding-total-count="3" sharding-item-parameters="0=A,1=B,2=C" />

        <!-- Dataflow job: runs every 10 seconds with 3 sharding items -->
        <job:dataflow id="JobDataflow" class="com.job.task.MyElasticDataflowJob"
            registry-center-ref="regCenter" cron="0/10 * * * * ?" sharding-total-count="3"
            sharding-item-parameters="0=a,1=b,2=c"
            job-sharding-strategy-class="com.dangdang.ddframe.job.lite.api.strategy.impl.AverageAllocationJobShardingStrategy"
            job-parameter="100" streaming-process="true" reconcile-interval-minutes="10"
            overwrite="true" event-trace-rdb-data-source="dataSource" />
    </beans>

Configuring the datasource

    <?xml version="1.0" encoding="UTF-8"?>
    <beans xmlns="http://www.springframework.org/schema/beans"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xmlns:util="http://www.springframework.org/schema/util"
        xmlns:tx="http://www.springframework.org/schema/tx"
        xsi:schemaLocation="http://www.springframework.org/schema/beans
            http://www.springframework.org/schema/beans/spring-beans.xsd
            http://www.springframework.org/schema/util
            http://www.springframework.org/schema/util/spring-util.xsd
            http://www.springframework.org/schema/tx
            http://www.springframework.org/schema/tx/spring-tx.xsd">

        <bean id="dataSource" class="com.mchange.v2.c3p0.ComboPooledDataSource"
            destroy-method="close">
            <property name="driverClass" value="com.mysql.jdbc.Driver" />
            <property name="jdbcUrl" value="jdbc:mysql://127.0.0.1:3306/for_test?useUnicode=yes&amp;characterEncoding=UTF-8" />
            <property name="user" value="admin" />
            <property name="password" value="super" />
            <property name="minPoolSize" value="3" />
            <property name="maxPoolSize" value="20" />
            <property name="acquireIncrement" value="1" />
            <property name="testConnectionOnCheckin" value="true" />
            <property name="maxIdleTimeExcessConnections" value="240" />
            <property name="idleConnectionTestPeriod" value="300" />
        </bean>
    </beans>

Creating the applicationContext.xml file

Integrate elastic-job with Spring.

    <beans xmlns="http://www.springframework.org/schema/beans"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xmlns:context="http://www.springframework.org/schema/context"
        xmlns:task="http://www.springframework.org/schema/task"
        xsi:schemaLocation="http://www.springframework.org/schema/beans
            http://www.springframework.org/schema/beans/spring-beans-3.2.xsd
            http://www.springframework.org/schema/context
            http://www.springframework.org/schema/context/spring-context.xsd
            http://www.springframework.org/schema/task
            http://www.springframework.org/schema/task/spring-task.xsd">

        <task:scheduler id="taskScheduler" pool-size="10" />
        <task:executor id="taskExecutor" />
        <task:annotation-driven executor="taskExecutor" scheduler="taskScheduler" />

        <import resource="elastic.xml" />
        <import resource="mysql.xml" />
    </beans>

Configuring web.xml

    <web-app version="2.5" xmlns="http://java.sun.com/xml/ns/javaee"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-app_2_5.xsd">

        <display-name>elastic-job</display-name>

        <context-param>
            <param-name>contextConfigLocation</param-name>
            <param-value>classpath:applicationContext.xml</param-value>
        </context-param>

        <listener>
            <listener-class>
                org.springframework.web.context.ContextLoaderListener
            </listener-class>
        </listener>
    </web-app>

Run result

Code download: https://coding.net/u/liaiyomia/p/elasticJobDemo/git