TensorFlow + AlexNet + MNIST: Notes on the Pitfalls

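Recently, while helping my wife debug, I also picked up some TensorFlow. A good share of my experiments went into working out how to import data with tf.data.Dataset. Below are short notes on one demo: implementing AlexNet in TensorFlow, with MNIST as the data.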

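Because of network conditions in China, reading the MNIST dataset directly with TensorFlow's own functions is slow, so I chose to import it from keras.datasets instead. As a result, later training cannot call mnist.train.next_batch(batch_size) directly to generate MNIST training batches. I therefore use tf.data.Dataset to convert the loaded NumPy data into the format TensorFlow needs.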

The data-loading code is as follows:

    import cv2
    import numpy as np
    from keras.datasets import mnist
    from keras.utils import to_categorical

    def load_mnist(image_size):
        (x_train, y_train), (x_test, y_test) = mnist.load_data()
        # resize each image, then expand the single gray channel to 3 channels
        train_image = [cv2.cvtColor(cv2.resize(img, (image_size, image_size)), cv2.COLOR_GRAY2BGR) for img in x_train]
        test_image = [cv2.cvtColor(cv2.resize(img, (image_size, image_size)), cv2.COLOR_GRAY2BGR) for img in x_test]
        # 'f' is NumPy's type code for float32
        train_image = np.asarray(train_image, 'f')
        test_image = np.asarray(test_image, 'f')
        # one-hot encode the labels
        train_label = to_categorical(y_train)
        test_label = to_categorical(y_test)
        print('finish loading data!')
        return train_image, train_label, test_image, test_label

The load_mnist function reads the MNIST data, converts the single channel to 3 channels (using an OpenCV function), and converts the labels to one-hot encoding.
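To make the two conversions concrete, here is a dependency-free sketch of their effect on tiny inputs. `one_hot` and `gray_to_3ch` are hypothetical helpers written only for illustration; they mimic what `to_categorical` and `cv2.COLOR_GRAY2BGR` do to the data and are not the library implementations.

```python
def one_hot(labels, num_classes):
    """Return one row per label, with 1.0 at the label's index and 0.0 elsewhere."""
    rows = []
    for label in labels:
        row = [0.0] * num_classes
        row[label] = 1.0
        rows.append(row)
    return rows


def gray_to_3ch(image):
    """Copy every grayscale pixel into three identical channels."""
    return [[[pixel, pixel, pixel] for pixel in row] for row in image]


# Two labels drawn from 4 classes, and a 2x2 grayscale image.
encoded = one_hot([3, 0], num_classes=4)
stacked = gray_to_3ch([[0, 255], [128, 64]])
```

Each label becomes a vector with a single 1.0, and each pixel becomes three equal values, which is why the first convolution kernel in the network takes 3 input channels.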

Next, initialize the network. The network structure is reproduced from my wife's blog.

First, define a few layer types:

    import tensorflow as tf

    # define layers
    def conv(x, kernel, strides, b):
        # convolution + bias + ReLU
        return tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(x, kernel, strides, padding='SAME'), b))

    def max_pooling(x, kernel, strides):
        return tf.nn.max_pool(x, kernel, strides, padding='VALID')

    def fc(x, w, b):
        # fully connected layer + ReLU
        return tf.nn.relu(tf.add(tf.matmul(x, w), b))

Next, define the initial network weights:

    # define variables
    # n_output (the number of classes, 10 for MNIST) must be defined before this block
    weights = {
        'wc1': tf.Variable(tf.random_normal([11, 11, 3, 96], dtype=tf.float32, stddev=0.1), name='weights1'),
        'wc2': tf.Variable(tf.random_normal([5, 5, 96, 256], dtype=tf.float32, stddev=0.1), name='weights2'),
        'wc3': tf.Variable(tf.random_normal([3, 3, 256, 384], dtype=tf.float32, stddev=0.1), name='weights3'),
        'wc4': tf.Variable(tf.random_normal([3, 3, 384, 384], dtype=tf.float32, stddev=0.1), name='weights4'),
        'wc5': tf.Variable(tf.random_normal([3, 3, 384, 256], dtype=tf.float32, stddev=0.1), name='weights5'),
        'wd1': tf.Variable(tf.random_normal([6*6*256, 4096], dtype=tf.float32, stddev=0.1), name='weights_fc1'),
        'wd2': tf.Variable(tf.random_normal([4096, 1000], dtype=tf.float32, stddev=0.1), name='weights_fc2'),
        'wd3': tf.Variable(tf.random_normal([1000, n_output], dtype=tf.float32, stddev=0.1), name='weights_fc3'),
    }

    bias = {
        'bc1': tf.Variable(tf.zeros([96]), name='bias1'),
        'bc2': tf.Variable(tf.zeros([256]), name='bias2'),
        'bc3': tf.Variable(tf.zeros([384]), name='bias3'),
        'bc4': tf.Variable(tf.zeros([384]), name='bias4'),
        'bc5': tf.Variable(tf.zeros([256]), name='bias5'),
        'bd1': tf.Variable(tf.zeros([4096]), name='bias_fc1'),
        'bd2': tf.Variable(tf.zeros([1000]), name='bias_fc2'),
        'bd3': tf.Variable(tf.zeros([n_output]), name='bias_fc3'),
    }

    # strides for the conv layers (sc*) and pooling layers (sp*), in NHWC order
    strides = {
        'sc1': [1, 4, 4, 1],
        'sc2': [1, 1, 1, 1],
        'sc3': [1, 1, 1, 1],
        'sc4': [1, 1, 1, 1],
        'sc5': [1, 1, 1, 1],
        'sp1': [1, 2, 2, 1],
        'sp2': [1, 2, 2, 1],
        'sp3': [1, 2, 2, 1]
    }

    # pooling window sizes, in NHWC order
    pooling_size = {
        'kp1': [1, 3, 3, 1],
        'kp2': [1, 3, 3, 1],
        'kp3': [1, 3, 3, 1]
    }

Then build the network:

    # print_layer is called below but is not shown in the original post;
    # this minimal version is an assumption that prints each tensor's name and shape
    def print_layer(t):
        print(t.op.name, t.get_shape().as_list())

    # build model
    def alexnet(inputs, weights, bias, strides, pooling_size, keep_prob):
        with tf.name_scope('conv1'):
            conv1 = conv(inputs, weights['wc1'], strides['sc1'], bias['bc1'])
            print_layer(conv1)
        with tf.name_scope('pool1'):
            pool1 = max_pooling(conv1, pooling_size['kp1'], strides['sp1'])
            print_layer(pool1)
        with tf.name_scope('conv2'):
            conv2 = conv(pool1, weights['wc2'], strides['sc2'], bias['bc2'])
            print_layer(conv2)
        with tf.name_scope('pool2'):
            pool2 = max_pooling(conv2, pooling_size['kp2'], strides['sp2'])
            print_layer(pool2)
        with tf.name_scope('conv3'):
            conv3 = conv(pool2, weights['wc3'], strides['sc3'], bias['bc3'])
            print_layer(conv3)
        with tf.name_scope('conv4'):
            conv4 = conv(conv3, weights['wc4'], strides['sc4'], bias['bc4'])
            print_layer(conv4)
        with tf.name_scope('conv5'):
            conv5 = conv(conv4, weights['wc5'], strides['sc5'], bias['bc5'])
            print_layer(conv5)
        with tf.name_scope('pool3'):
            pool3 = max_pooling(conv5, pooling_size['kp3'], strides['sp3'])
            print_layer(pool3)
        # flatten the 6x6x256 feature map for the fully connected layers
        flatten = tf.reshape(pool3, [-1, 6*6*256])
        with tf.name_scope('fc1'):
            fc1 = fc(flatten, weights['wd1'], bias['bd1'])
            fc1_drop = tf.nn.dropout(fc1, keep_prob)
            print_layer(fc1_drop)
        with tf.name_scope('fc2'):
            fc2 = fc(fc1_drop, weights['wd2'], bias['bd2'])
            fc2_drop = tf.nn.dropout(fc2, keep_prob)
            print_layer(fc2_drop)
        with tf.name_scope('fc3'):
            # use fc2_drop here; the original passed fc2, which left the second dropout unused
            outputs = tf.add(tf.matmul(fc2_drop, weights['wd3']), bias['bd3'])
            print_layer(outputs)
        return outputs

Next, convert the loaded NumPy data into a tf.data.Dataset. The data is large, and embedding it in the graph as constants would exceed TensorFlow's 2 GB limit on the serialized graph, so the arrays are fed through placeholders and read with an initializable iterator. The reading code is as follows:

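    # placeholders with the same dtype and shape as the NumPy arrays
    x_dataset = tf.placeholder(train_image.dtype, train_image.shape)
    y_dataset = tf.placeholder(train_label.dtype, train_label.shape)
    # repeat() makes the dataset loop indefinitely; batch() groups batch_size samples
    train_dataset = tf.data.Dataset.from_tensor_slices((x_dataset, y_dataset)).repeat().batch(batch_size)
    train_iterator = train_dataset.make_initializable_iterator()
    features, labels = train_iterator.get_next()

Here, tf.placeholder stands in for the arrays until the iterator is initialized, and tf.data.Dataset.from_tensor_slices builds the dataset from them. So that the code can run for multiple epochs, repeat() is used to make the dataset span multiple epochs (see the official TensorFlow documentation for details).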
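The order of repeat() and batch() affects where the batch boundaries fall. The sketch below imitates that order in plain Python, without TensorFlow; `repeat_then_batch` is a hypothetical name used only for this illustration, and the five-element list stands in for the training set.

```python
from itertools import cycle, islice


def repeat_then_batch(samples, batch_size):
    """Yield batches forever, cycling through samples in the order repeat() then batch()."""
    stream = cycle(samples)  # endless pass over the data, like repeat()
    while True:
        # take the next batch_size items from the endless stream, like batch()
        yield list(islice(stream, batch_size))


batches = repeat_then_batch([0, 1, 2, 3, 4], batch_size=2)
first_four = [next(batches) for _ in range(4)]
print(first_four)  # [[0, 1], [2, 3], [4, 0], [1, 2]]
```

Because repetition happens before batching, the third batch straddles the end of one pass and the start of the next, and every batch has the full size. Samples also arrive in the same order on every pass, since the pipeline above contains no shuffle step.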

Then define the objective function, choose the optimizer, and set up the accuracy computation.

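    keep_prob = tf.placeholder(tf.float32)
    pred = alexnet(features, weights, bias, strides, pooling_size, keep_prob)
    # pred holds unnormalized logits; the softmax is applied inside the loss op
    loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=pred, labels=labels))
    # lr is the learning rate, defined elsewhere
    train_step = tf.train.AdamOptimizer(learning_rate=lr).minimize(loss)
    # a prediction is correct when its argmax matches the argmax of the one-hot label
    correct = tf.equal(tf.argmax(labels, 1), tf.argmax(pred, 1))
    accuracy = tf.reduce_mean(tf.cast(correct, tf.float32))

With that, training can begin. The training code is as follows: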

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

sess.run(train_iterator.initializer, feed_dict={x_dataset: train_image, y_dataset: train_label})

for epoch in range(epochs):

_, loss_value, acc = sess.run([train_step, loss, accuracy], feed_dict = {keep_prob: dropout_rate})

if (epoch+1)%100 == 0:

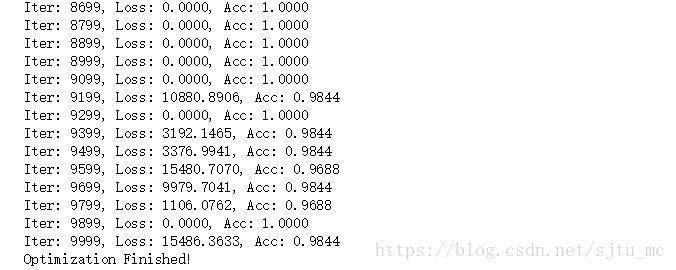

print("Iter: {}, Loss: {:.4f}, Acc: {:.4f}".format(epoch, loss_value, acc))

print ("Optimization Finished!")
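As a sanity check on the 6*6*256 flatten size used in alexnet, the spatial size of each feature map can be worked out by hand. The post never states the value passed as image_size, so the inputs 227 and 224 below are assumptions (the sizes usually quoted for AlexNet); `same_out`, `valid_out` and `alexnet_feature_size` are hypothetical helpers for this sketch. The formulas are TensorFlow's rules for 'SAME' and 'VALID' padding.

```python
import math


def same_out(size, stride):
    """Output size with 'SAME' padding: ceil(size / stride)."""
    return math.ceil(size / stride)


def valid_out(size, kernel, stride):
    """Output size with 'VALID' padding: floor((size - kernel) / stride) + 1."""
    return (size - kernel) // stride + 1


def alexnet_feature_size(image_size):
    """Spatial size of pool3 for the strides and pooling windows defined in the post."""
    size = same_out(image_size, 4)  # conv1: SAME, stride 4
    size = valid_out(size, 3, 2)    # pool1: 3x3 window, stride 2
    size = same_out(size, 1)        # conv2: SAME, stride 1
    size = valid_out(size, 3, 2)    # pool2: 3x3 window, stride 2
    size = same_out(size, 1)        # conv3 to conv5: SAME, stride 1, size unchanged
    size = valid_out(size, 3, 2)    # pool3: 3x3 window, stride 2
    return size


size_227 = alexnet_feature_size(227)
size_224 = alexnet_feature_size(224)
print(size_227, size_224)  # 6 6
```

Both assumed input sizes end in a 6x6 map, which matches the reshape to 6*6*256. A different image_size would need a matching change to the flatten size and to the first dimension of weights['wd1'].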