[Deep Learning] AlexNet: Network Analysis and Code Implementation

Introduction

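AlexNet won the 2012 ImageNet competition (ILSVRC 2012). It was proposed by Alex Krizhevsky, together with Ilya Sutskever and their advisor Geoffrey Hinton, and is named after Alex. AlexNet marked the start of the deep learning boom and sparked researchers' enthusiasm for the field. Although stronger networks such as VGGNet, GoogLeNet, and ResNet appeared later, AlexNet's place in the history of deep learning is secure, so this landmark work is worth studying in some detail.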

AlexNet paper: ImageNet Classification with Deep Convolutional Neural Networks (NIPS 2012)

Network Architecture

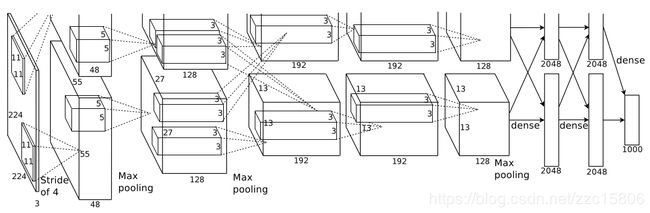

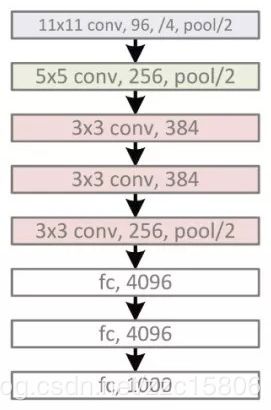

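The first figure above is the architecture diagram from the paper. Because the authors trained on two GPUs, the diagram has two branches. To make the structure easier to follow, the second figure shows a simplified, single-branch version.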

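AlexNet has 5 convolutional layers and 3 fully connected layers. Max pooling follows the 1st, 2nd, and 5th convolutional layers, and LRN is applied after the 1st and 2nd convolutional layers, before their pooling.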
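The spatial sizes implied by this layer list can be checked with simple arithmetic. The plain-Python sketch below is only an illustration (the helpers `out_size` and `trace_shapes` are hypothetical, not from any library) and assumes the kernel sizes, strides, and padding used in the Keras code of this post: "valid" padding for conv1 and the pooling layers, "same" padding for conv2 to conv5. LRN layers are left out because they do not change the shape.

```python
def out_size(n, kernel, stride, padding):
    """Output length along one spatial axis for a conv or pooling layer."""
    if padding == "same":
        # Keras "same" padding: ceil(n / stride)
        return -(-n // stride)
    # "valid" padding: no zero padding
    return (n - kernel) // stride + 1


# (name, kernel, stride, padding, output channels or None for pooling)
LAYERS = [
    ("conv1", 11, 4, "valid", 96),
    ("pool1", 3, 2, "valid", None),
    ("conv2", 5, 1, "same", 256),
    ("pool2", 3, 2, "valid", None),
    ("conv3", 3, 1, "same", 384),
    ("conv4", 3, 1, "same", 384),
    ("conv5", 3, 1, "same", 256),
    ("pool3", 3, 2, "valid", None),
]


def trace_shapes(input_size, in_channels=3):
    """Return (layer name, height/width, channels) after each layer."""
    n, channels = input_size, in_channels
    shapes = []
    for name, kernel, stride, padding, out_channels in LAYERS:
        n = out_size(n, kernel, stride, padding)
        if out_channels is not None:  # pooling keeps the channel count
            channels = out_channels
        shapes.append((name, n, channels))
    return shapes


for size in (227, 224):
    shapes = trace_shapes(size)
    _, n, c = shapes[-1]
    print(size, shapes, "flatten:", n * n * c)
```

Under these assumptions a 227×227 input yields the 55×55 conv1 output shown in the paper's figure and a 6×6×256 (9216-value) tensor before the fully connected layers, while a 224×224 input yields 54×54 and 5×5×256.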

Main Contributions

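1. ReLU replaces Sigmoid as the activation function. Because ReLU does not saturate for positive inputs, it alleviates the vanishing-gradient problem that Sigmoid suffers from in deeper networks, and it also makes training considerably faster.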

2. Dropout is applied to the first two fully connected layers to reduce overfitting.

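3. Overlapping max pooling is used, meaning the pooling stride is smaller than the pooling window (AlexNet uses 3×3 windows with stride 2). The paper reports that, compared with non-overlapping pooling, this lowers the top-1 and top-5 error rates by 0.4% and 0.3% and makes the model slightly harder to overfit. Earlier CNNs commonly used average pooling; max pooling avoids the blurring effect of averaging.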

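4. The LRN layer is introduced. LRN (Local Response Normalization) creates competition among neurons in neighboring channels: units with large responses become relatively larger while neighbors with small responses are suppressed, which improves generalization. (VGGNet does not use LRN; its authors report that LRN brought no improvement on ILSVRC while increasing memory consumption and computation time. LRN itself has no trainable parameters. Of course, one result should not be over-generalized.)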

5. CUDA is used to train the deep convolutional network on GPUs (two GTX 580 GPUs in the paper).

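6. Data augmentation. Random cropping, horizontal flipping, and PCA-based perturbation of the RGB intensities reduce overfitting and improve generalization.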
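To see why a saturating activation hurts deep networks, here is a toy Python sketch (not from the paper) that multiplies activation derivatives across layers at a fixed pre-activation, deliberately ignoring the weights. The Sigmoid derivative is at most 0.25, so the product shrinks geometrically with depth, while ReLU passes a derivative of 1 for any positive input. The helper names are hypothetical.

```python
import math


def sigmoid_grad(x):
    """Derivative of the logistic sigmoid; its maximum is 0.25 at x = 0."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def relu_grad(x):
    """Derivative of ReLU (taken as 0 at x = 0)."""
    return 1.0 if x > 0 else 0.0


def chained_grad(grad_fn, x, depth):
    """Product of activation derivatives over `depth` layers, all evaluated
    at the same pre-activation x; weights are ignored on purpose."""
    g = 1.0
    for _ in range(depth):
        g *= grad_fn(x)
    return g


for depth in (1, 5, 10):
    print(depth, chained_grad(sigmoid_grad, 0.0, depth), chained_grad(relu_grad, 1.0, depth))
```

For 10 layers the Sigmoid product at x = 0 is 0.25^10, about 9.5e-7, whereas the ReLU product stays at 1. Real gradients also depend on the weights, so this only illustrates the trend.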
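Dropout itself fits in a few lines. The plain-Python sketch below uses inverted dropout, which is what the Keras `Dropout` layer does: during training each unit is zeroed with probability `rate` and the survivors are scaled by 1/(1 - rate), and at inference the input passes through unchanged. The paper instead left activations unscaled during training and multiplied the outputs by 0.5 at test time; both keep the expected activation the same. The `dropout` function is a hypothetical helper.

```python
import random


def dropout(values, rate=0.5, training=True, rng=random):
    """Inverted dropout on a list of activations.

    Training: zero each unit with probability `rate` and scale survivors by
    1 / (1 - rate). Inference: return the activations unchanged.
    """
    if not training or rate == 0.0:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


activations = [1.0] * 8
print(dropout(activations, rng=random.Random(0)))  # a mix of 0.0 and 2.0
print(dropout(activations, training=False))        # unchanged
```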
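Overlapping pooling is easiest to see on a tiny example. The plain-Python sketch below (the `max_pool2d` helper is hypothetical) applies "valid" max pooling to a 5×5 grid whose entries are `5*row + col`, once with AlexNet's 3×3 window and stride 2 and once with a non-overlapping 2×2 window and stride 2.

```python
def max_pool2d(grid, size, stride):
    """Max pooling with "valid" padding (no zero padding) on a 2-D list."""
    height, width = len(grid), len(grid[0])
    pooled = []
    for top in range(0, height - size + 1, stride):
        row = []
        for left in range(0, width - size + 1, stride):
            window = [grid[r][c]
                      for r in range(top, top + size)
                      for c in range(left, left + size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


grid = [[5 * r + c for c in range(5)] for r in range(5)]
print(max_pool2d(grid, size=3, stride=2))  # overlapping: [[12, 14], [22, 24]]
print(max_pool2d(grid, size=2, stride=2))  # non-overlapping: [[6, 8], [16, 18]]
```

With 3×3 windows and stride 2, row 2 and column 2 each fall into two windows and every input value is covered; with 2×2 windows and stride 2, the last row and column of this odd-sized grid are never pooled.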
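The LRN formula from the paper normalizes each activation a_i at a given spatial position by its n neighboring channels: b_i = a_i / (k + α Σ_j a_j²)^β, where j runs from max(0, i - n/2) to min(N - 1, i + n/2) (n/2 rounded down) and N is the number of channels. Below is a plain-Python sketch for a single pixel with the paper's defaults k = 2, n = 5, α = 1e-4, β = 0.75; the `lrn_pixel` helper is hypothetical. Clipping the window at the channel boundaries gives the same result as the zero padding used by the Keras `LRN` function in this post.

```python
def lrn_pixel(activations, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Local response normalization across the channels of one pixel.

    `activations` is the list of channel values a_0 .. a_{N-1} at a single
    spatial position; the defaults follow the AlexNet paper.
    """
    num_channels = len(activations)
    half = n // 2
    normalized = []
    for i, a in enumerate(activations):
        # Window of neighboring channels, clipped at the channel boundaries.
        lo = max(0, i - half)
        hi = min(num_channels - 1, i + half)
        sq_sum = sum(activations[j] ** 2 for j in range(lo, hi + 1))
        normalized.append(a / (k + alpha * sq_sum) ** beta)
    return normalized


quiet = lrn_pixel([1.0, 0.0, 1.0])
loud = lrn_pixel([1.0, 100.0, 1.0])
print(quiet[0], loud[0])  # channel 0 shrinks when its neighbor is large
```

Giving channel 0 a strongly active neighbor lowers its normalized value, which is the competition between channels described in item 4.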
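The paper's augmentation extracts random 224×224 patches from 256×256 images and randomly mirrors them horizontally. A dependency-free sketch of those two steps on images stored as nested Python lists follows; the helper names and the small 8×8 example image are assumptions for illustration, and the PCA color perturbation is not shown.

```python
import random


def random_crop(image, crop_h, crop_w, rng=random):
    """Cut a random crop_h x crop_w patch out of an image stored as rows."""
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - crop_h)  # randint includes both endpoints
    left = rng.randint(0, width - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def random_hflip(image, p=0.5, rng=random):
    """Mirror the image left to right with probability p."""
    if rng.random() < p:
        return [row[::-1] for row in image]
    return image


def augment(image, crop_h, crop_w, rng=random):
    """Random crop followed by a random horizontal flip."""
    return random_hflip(random_crop(image, crop_h, crop_w, rng), rng=rng)


# Hypothetical 8x8 single-channel image standing in for a 256x256 photo.
image = [[8 * r + c for c in range(8)] for r in range(8)]
patch = augment(image, 6, 6, rng=random.Random(0))
for row in patch:
    print(row)
```

Because every call produces a different crop and flip, the network sees many slightly different versions of each training image.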

Code Implementation

This post implements AlexNet with Keras.

```python
from keras.layers import Input
from keras.layers import Conv2D, MaxPool2D, Dense, Flatten, Dropout, Lambda
from keras.models import Model
from keras import optimizers
from keras.utils import plot_model
from keras import backend as K

# Written against the Keras 2.x API with the TensorFlow backend;
# Keras 3 removed most of keras.backend, including spatial_2d_padding.


def LRN(alpha=1e-4, k=2, beta=0.75, n=5):
    """
    LRN for cross-channel normalization in the original AlexNet.
    Default parameters follow the original paper:
        b_i = a_i / (k + alpha * sum of a_j^2 over n neighboring channels) ** beta
    """
    def f(X):
        ch = K.int_shape(X)[-1]  # number of channels (channels_last input)
        half = n // 2
        square = K.square(X)
        # Treating the NHWC tensor as channels_first makes the second padding
        # pair apply to the last axis, i.e. it zero-pads the channel axis by
        # `half` on both sides.
        extra_channels = K.spatial_2d_padding(
            square, ((0, 0), (half, half)), data_format='channels_first')
        scale = k
        # Add the squared activations of the n neighboring channels.
        for i in range(n):
            scale += alpha * extra_channels[:, :, :, i:i + ch]
        scale = scale ** beta
        return X / scale

    # LRN does not change the tensor shape.
    return Lambda(f, output_shape=lambda input_shape: input_shape)


def alexnet(input_shape=(227, 227, 3), nclass=1000):
    """
    Build an AlexNet model using Keras with the TensorFlow backend.
    :param input_shape: input shape of the network, default (227, 227, 3);
        a 227x227 input gives the 55x55 conv1 output shown in the paper
    :param nclass: number of classes (output size of the network), default 1000
    :return: compiled AlexNet model
    """
    input_ = Input(shape=input_shape)

    # conv1 -> LRN -> overlapping max pooling
    x = Conv2D(96, kernel_size=(11, 11), strides=(4, 4), activation='relu')(input_)
    x = LRN()(x)
    x = MaxPool2D(pool_size=(3, 3), strides=(2, 2))(x)

    # conv2 -> LRN -> overlapping max pooling
    x = Conv2D(256, kernel_size=(5, 5), strides=(1, 1), activation='relu', padding='same')(x)
    x = LRN()(x)
    x = MaxPool2D(pool_size=(3, 3), strides=(2, 2))(x)

    # conv3, conv4, conv5 -> overlapping max pooling (no LRN)
    x = Conv2D(384, kernel_size=(3, 3), strides=(1, 1), activation='relu', padding='same')(x)
    x = Conv2D(384, kernel_size=(3, 3), strides=(1, 1), activation='relu', padding='same')(x)
    x = Conv2D(256, kernel_size=(3, 3), strides=(1, 1), activation='relu', padding='same')(x)
    x = MaxPool2D(pool_size=(3, 3), strides=(2, 2))(x)

    # Fully connected layers; dropout after the first two
    x = Flatten()(x)
    x = Dense(4096, activation='relu')(x)
    x = Dropout(0.5)(x)
    x = Dense(4096, activation='relu')(x)
    x = Dropout(0.5)(x)
    output_ = Dense(nclass, activation='softmax')(x)

    model = Model(inputs=input_, outputs=output_)
    model.summary()

    # SGD with Nesterov momentum (`learning_rate` needs Keras 2.3 or later)
    opti_sgd = optimizers.SGD(learning_rate=0.01, momentum=0.9, nesterov=True)
    model.compile(loss='categorical_crossentropy', optimizer=opti_sgd, metrics=['accuracy'])
    return model


if __name__ == '__main__':
    model = alexnet()
    # Save a diagram of the model (requires pydot and Graphviz).
    plot_model(model, 'Alexnet.png', show_shapes=True)
```
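`model.summary()` reports a parameter count for every layer. As a framework-free cross-check, the plain-Python sketch below (helper names are hypothetical) recomputes the trainable parameters for the layer widths in the code above, given the spatial size of the last pooling output. It assumes a single branch with no grouped convolutions.

```python
def conv_params(kernel, in_ch, out_ch):
    """Weights plus biases of a Conv2D layer with a square kernel."""
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(in_units, out_units):
    """Weights plus biases of a Dense layer."""
    return in_units * out_units + out_units


def alexnet_params(final_map_size, nclass=1000):
    """Trainable parameters of the single-branch AlexNet model.

    LRN (Lambda), pooling, Flatten and Dropout layers have no trainable
    parameters, so only Conv2D and Dense layers are counted.
    """
    convs = [(11, 3, 96), (5, 96, 256), (3, 256, 384), (3, 384, 384), (3, 384, 256)]
    total = sum(conv_params(k, c_in, c_out) for k, c_in, c_out in convs)
    flat = final_map_size * final_map_size * 256  # size after Flatten
    total += dense_params(flat, 4096)
    total += dense_params(4096, 4096)
    total += dense_params(4096, nclass)
    return total


print(alexnet_params(6))  # 227x227 input, 6x6x256 before Flatten: 62378344
print(alexnet_params(5))  # 224x224 input, 5x5x256 before Flatten: 50844008
```

The input size mostly affects the first fully connected layer, which alone holds about 37.8M of the roughly 62.4M parameters at 227×227. The paper quotes about 60 million parameters for its two-GPU network, in which some convolutional layers only connect to feature maps on the same GPU, so a single-branch count comes out somewhat higher.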