A Brief Analysis of the Binder Communication Flow

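A certain legendary programmer once said: Read The Fucking Source Code. Every programmer should know that line… The best way to learn something is to read its source code, because under the source there are no secrets. So when studying the Binder mechanism I chose to start from the source code, and the process was rather dry…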

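The Binder mechanism has four important roles: Client, Server, ServiceManager and the Binder driver. Put simply, services register themselves with ServiceManager, and a Client then obtains the service it wants through ServiceManager. I plan to cover the following points: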

1. How is ServiceManager started?

2. How is a service added?

3. How is a service obtained?

Before that, let's look at the key classes involved in Binder communication.

Adding a Service

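Framework layer: IServiceManager is an interface that mainly provides methods such as addService and getService. ServiceManager obtains an IServiceManager object through its getIServiceManager method. Internally this calls ServiceManagerNative.asInterface, which returns a ServiceManagerProxy object, and ServiceManagerProxy implements the IServiceManager interface.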

private static IServiceManager getIServiceManager() {

if (sServiceManager != null) {

return sServiceManager;

}

// Find the service manager

sServiceManager = ServiceManagerNative

.asInterface(Binder.allowBlocking(BinderInternal.getContextObject()));

return sServiceManager;

}

BinderInternal.getContextObject calls into native code through JNI and returns a BinderProxy object. BinderProxy implements the IBinder interface, and this BinderProxy is exactly what ends up in ServiceManagerProxy's mRemote field.

static public IServiceManager asInterface(IBinder obj)

{

if (obj == null) {

return null;

}

IServiceManager in =

(IServiceManager)obj.queryLocalInterface(descriptor);

if (in != null) {

return in;

}

return new ServiceManagerProxy(obj);

}

public ServiceManagerProxy(IBinder remote) {

mRemote = remote;

}

So calling ServiceManager.addService actually calls ServiceManagerProxy.addService. Here is its implementation:

public void addService(String name, IBinder service, boolean allowIsolated, int dumpPriority)

throws RemoteException {

Parcel data = Parcel.obtain();

Parcel reply = Parcel.obtain();

data.writeInterfaceToken(IServiceManager.descriptor);

data.writeString(name);

data.writeStrongBinder(service);

data.writeInt(allowIsolated ? 1 : 0);

data.writeInt(dumpPriority);

mRemote.transact(ADD_SERVICE_TRANSACTION, data, reply, 0);

reply.recycle();

data.recycle();

}

Here mRemote is the BinderProxy object just mentioned. The key line is mRemote.transact, and here is its implementation:

public boolean transact(int code, Parcel data, Parcel reply, int flags) throws RemoteException {

Binder.checkParcel(this, code, data, "Unreasonably large binder buffer");

if (mWarnOnBlocking && ((flags & FLAG_ONEWAY) == 0)) {

// For now, avoid spamming the log by disabling after we've logged

// about this interface at least once

mWarnOnBlocking = false;

Log.w(Binder.TAG, "Outgoing transactions from this process must be FLAG_ONEWAY",

new Throwable());

}

final boolean tracingEnabled = Binder.isTracingEnabled();

if (tracingEnabled) {

final Throwable tr = new Throwable();

Binder.getTransactionTracker().addTrace(tr);

StackTraceElement stackTraceElement = tr.getStackTrace()[1];

Trace.traceBegin(Trace.TRACE_TAG_ALWAYS,

stackTraceElement.getClassName() + "." + stackTraceElement.getMethodName());

}

try {

return transactNative(code, data, reply, flags);

} finally {

if (tracingEnabled) {

Trace.traceEnd(Trace.TRACE_TAG_ALWAYS);

}

}

}

From here transactNative is called. It is a native method implemented in the native layer, so the core of Binder communication lives in the native layer and the kernel.

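This involves JNI calls, so let's first look at how these JNI methods are registered. When the Android system starts, AndroidRuntime calls startReg to register all the JNI methods in one pass: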

int AndroidRuntime::startReg(JNIEnv* env)

{

ATRACE_NAME("RegisterAndroidNatives");

/*

* This hook causes all future threads created in this process to be

* attached to the JavaVM. (This needs to go away in favor of JNI

* Attach calls.)

*/

androidSetCreateThreadFunc((android_create_thread_fn) javaCreateThreadEtc);

ALOGV("--- registering native functions ---\n");

/*

* Every "register" function calls one or more things that return

* a local reference (e.g. FindClass). Because we haven't really

* started the VM yet, they're all getting stored in the base frame

* and never released. Use Push/Pop to manage the storage.

*/

env->PushLocalFrame(200);

if (register_jni_procs(gRegJNI, NELEM(gRegJNI), env) < 0) {

env->PopLocalFrame(NULL);

return -1;

}

env->PopLocalFrame(NULL);

//createJavaThread("fubar", quickTest, (void*) "hello");

return 0;

}

gRegJNI is an array of all the JNI registration functions to be called. The Binder-related entries are mainly:

REG_JNI(register_android_os_Binder),

REG_JNI(register_android_os_Parcel),

These are two elements of the gRegJNI array. register_android_os_Binder and register_android_os_Parcel are implemented in android_util_Binder.cpp and android_os_Parcel.cpp respectively.

First, the register_android_os_Binder function:

int register_android_os_Binder(JNIEnv* env)

{

if (int_register_android_os_Binder(env) < 0)

return -1;

if (int_register_android_os_BinderInternal(env) < 0)

return -1;

if (int_register_android_os_BinderProxy(env) < 0)

return -1;

jclass clazz = FindClassOrDie(env, "android/util/Log");

gLogOffsets.mClass = MakeGlobalRefOrDie(env, clazz);

gLogOffsets.mLogE = GetStaticMethodIDOrDie(env, clazz, "e",

"(Ljava/lang/String;Ljava/lang/String;Ljava/lang/Throwable;)I");

clazz = FindClassOrDie(env, "android/os/ParcelFileDescriptor");

gParcelFileDescriptorOffsets.mClass = MakeGlobalRefOrDie(env, clazz);

gParcelFileDescriptorOffsets.mConstructor = GetMethodIDOrDie(env, clazz, "<init>",

"(Ljava/io/FileDescriptor;)V");

clazz = FindClassOrDie(env, "android/os/StrictMode");

gStrictModeCallbackOffsets.mClass = MakeGlobalRefOrDie(env, clazz);

gStrictModeCallbackOffsets.mCallback = GetStaticMethodIDOrDie(env, clazz,

"onBinderStrictModePolicyChange", "(I)V");

clazz = FindClassOrDie(env, "java/lang/Thread");

gThreadDispatchOffsets.mClass = MakeGlobalRefOrDie(env, clazz);

gThreadDispatchOffsets.mDispatchUncaughtException = GetMethodIDOrDie(env, clazz,

"dispatchUncaughtException", "(Ljava/lang/Throwable;)V");

gThreadDispatchOffsets.mCurrentThread = GetStaticMethodIDOrDie(env, clazz, "currentThread",

"()Ljava/lang/Thread;");

return 0;

}

This function mainly registers three framework classes, Binder, BinderInternal and BinderProxy, through int_register_android_os_Binder, int_register_android_os_BinderInternal and int_register_android_os_BinderProxy respectively. Let's look at each in turn.

int_register_android_os_Binder registers Binder:

static int int_register_android_os_Binder(JNIEnv* env)

{

jclass clazz = FindClassOrDie(env, kBinderPathName);

gBinderOffsets.mClass = MakeGlobalRefOrDie(env, clazz);

gBinderOffsets.mExecTransact = GetMethodIDOrDie(env, clazz, "execTransact", "(IJJI)Z");

gBinderOffsets.mObject = GetFieldIDOrDie(env, clazz, "mObject", "J");

return RegisterMethodsOrDie(

env, kBinderPathName,

gBinderMethods, NELEM(gBinderMethods));

}

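Note the two structures here:

1. gBinderOffsets stores the IDs of the corresponding Java class's fields and methods (here execTransact and mObject), which gives the JNI layer a channel for accessing the Java layer.

2. gBinderMethods is an array that maps each Java native method one-to-one to a JNI function.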

The registered methods, gBinderMethods, in source:

static const JNINativeMethod gBinderMethods[] = {

/* name, signature, funcPtr */

{ "getCallingPid", "()I", (void*)android_os_Binder_getCallingPid },

{ "getCallingUid", "()I", (void*)android_os_Binder_getCallingUid },

{ "clearCallingIdentity", "()J", (void*)android_os_Binder_clearCallingIdentity },

{ "restoreCallingIdentity", "(J)V", (void*)android_os_Binder_restoreCallingIdentity },

{ "setThreadStrictModePolicy", "(I)V", (void*)android_os_Binder_setThreadStrictModePolicy },

{ "getThreadStrictModePolicy", "()I", (void*)android_os_Binder_getThreadStrictModePolicy },

{ "flushPendingCommands", "()V", (void*)android_os_Binder_flushPendingCommands },

{ "getNativeBBinderHolder", "()J", (void*)android_os_Binder_getNativeBBinderHolder },

{ "getNativeFinalizer", "()J", (void*)android_os_Binder_getNativeFinalizer },

{ "blockUntilThreadAvailable", "()V", (void*)android_os_Binder_blockUntilThreadAvailable }

};

The method to focus on here is getNativeBBinderHolder. It will come up later, and we'll analyze it then.

The other two functions are similar.

int_register_android_os_BinderInternal registers BinderInternal:

static int int_register_android_os_BinderInternal(JNIEnv* env)

{

jclass clazz = FindClassOrDie(env, kBinderInternalPathName);

gBinderInternalOffsets.mClass = MakeGlobalRefOrDie(env, clazz);

gBinderInternalOffsets.mForceGc = GetStaticMethodIDOrDie(env, clazz, "forceBinderGc", "()V");

gBinderInternalOffsets.mProxyLimitCallback = GetStaticMethodIDOrDie(env, clazz, "binderProxyLimitCallbackFromNative", "(I)V");

jclass SparseIntArrayClass = FindClassOrDie(env, "android/util/SparseIntArray");

gSparseIntArrayOffsets.classObject = MakeGlobalRefOrDie(env, SparseIntArrayClass);

gSparseIntArrayOffsets.constructor = GetMethodIDOrDie(env, gSparseIntArrayOffsets.classObject,

"<init>", "()V");

gSparseIntArrayOffsets.put = GetMethodIDOrDie(env, gSparseIntArrayOffsets.classObject, "put",

"(II)V");

BpBinder::setLimitCallback(android_os_BinderInternal_proxyLimitcallback);

return RegisterMethodsOrDie(

env, kBinderInternalPathName,

gBinderInternalMethods, NELEM(gBinderInternalMethods));

}

The registered methods, gBinderInternalMethods, in source:

static const JNINativeMethod gBinderInternalMethods[] = {

/* name, signature, funcPtr */

{ "getContextObject", "()Landroid/os/IBinder;", (void*)android_os_BinderInternal_getContextObject },

{ "joinThreadPool", "()V", (void*)android_os_BinderInternal_joinThreadPool },

{ "disableBackgroundScheduling", "(Z)V", (void*)android_os_BinderInternal_disableBackgroundScheduling },

{ "setMaxThreads", "(I)V", (void*)android_os_BinderInternal_setMaxThreads },

{ "handleGc", "()V", (void*)android_os_BinderInternal_handleGc },

{ "nSetBinderProxyCountEnabled", "(Z)V", (void*)android_os_BinderInternal_setBinderProxyCountEnabled },

{ "nGetBinderProxyPerUidCounts", "()Landroid/util/SparseIntArray;", (void*)android_os_BinderInternal_getBinderProxyPerUidCounts },

{ "nGetBinderProxyCount", "(I)I", (void*)android_os_BinderInternal_getBinderProxyCount },

{ "nSetBinderProxyCountWatermarks", "(II)V", (void*)android_os_BinderInternal_setBinderProxyCountWatermarks}

};

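Let's look at getContextObject first. We used it earlier when obtaining the ServiceManager object from the framework layer: sServiceManager = ServiceManagerNative.asInterface(Binder.allowBlocking(BinderInternal.getContextObject())); The native method getContextObject corresponds to the function android_os_BinderInternal_getContextObject registered in the native layer. We'll look at its implementation later and skip it for now…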

Finally, BinderProxy is registered by int_register_android_os_BinderProxy:

static int int_register_android_os_BinderProxy(JNIEnv* env)

{

jclass clazz = FindClassOrDie(env, "java/lang/Error");

gErrorOffsets.mClass = MakeGlobalRefOrDie(env, clazz);

clazz = FindClassOrDie(env, kBinderProxyPathName);

gBinderProxyOffsets.mClass = MakeGlobalRefOrDie(env, clazz);

gBinderProxyOffsets.mGetInstance = GetStaticMethodIDOrDie(env, clazz, "getInstance",

"(JJ)Landroid/os/BinderProxy;");

gBinderProxyOffsets.mSendDeathNotice = GetStaticMethodIDOrDie(env, clazz, "sendDeathNotice",

"(Landroid/os/IBinder$DeathRecipient;)V");

gBinderProxyOffsets.mDumpProxyDebugInfo = GetStaticMethodIDOrDie(env, clazz, "dumpProxyDebugInfo",

"()V");

gBinderProxyOffsets.mNativeData = GetFieldIDOrDie(env, clazz, "mNativeData", "J");

clazz = FindClassOrDie(env, "java/lang/Class");

gClassOffsets.mGetName = GetMethodIDOrDie(env, clazz, "getName", "()Ljava/lang/String;");

return RegisterMethodsOrDie(

env, kBinderProxyPathName,

gBinderProxyMethods, NELEM(gBinderProxyMethods));

}

The registered methods, gBinderProxyMethods, in source:

static const JNINativeMethod gBinderProxyMethods[] = {

/* name, signature, funcPtr */

{"pingBinder", "()Z", (void*)android_os_BinderProxy_pingBinder},

{"isBinderAlive", "()Z", (void*)android_os_BinderProxy_isBinderAlive},

{"getInterfaceDescriptor", "()Ljava/lang/String;", (void*)android_os_BinderProxy_getInterfaceDescriptor},

{"transactNative", "(ILandroid/os/Parcel;Landroid/os/Parcel;I)Z", (void*)android_os_BinderProxy_transact},

{"linkToDeath", "(Landroid/os/IBinder$DeathRecipient;I)V", (void*)android_os_BinderProxy_linkToDeath},

{"unlinkToDeath", "(Landroid/os/IBinder$DeathRecipient;I)Z", (void*)android_os_BinderProxy_unlinkToDeath},

{"getNativeFinalizer", "()J", (void*)android_os_BinderProxy_getNativeFinalizer},

};

The method to focus on here is transactNative, which will come up later…

Now back to the line that obtains the sServiceManager object:

sServiceManager = ServiceManagerNative.asInterface(Binder.allowBlocking(BinderInternal.getContextObject())); Let's see how getContextObject is implemented. As mentioned above, it is a native method implemented by the native function android_os_BinderInternal_getContextObject:

static jobject android_os_BinderInternal_getContextObject(JNIEnv* env, jobject clazz)

{

sp<IBinder> b = ProcessState::self()->getContextObject(NULL);

return javaObjectForIBinder(env, b);

}

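Conclusion first: ProcessState::self()->getContextObject(NULL) returns a BpBinder object created with handle 0 (new BpBinder(0)), which is then passed to javaObjectForIBinder. As the name suggests, this function takes the C++ BpBinder object and returns the corresponding Java object, a BinderProxy. Its implementation: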

jobject javaObjectForIBinder(JNIEnv* env, const sp<IBinder>& val)

{

if (val == NULL) return NULL;

if (val->checkSubclass(&gBinderOffsets)) {

// It's a JavaBBinder created by ibinderForJavaObject. Already has Java object.

jobject object = static_cast<JavaBBinder*>(val.get())->object();

LOGDEATH("objectForBinder %p: it's our own %p!\n", val.get(), object);

return object;

}

// For the rest of the function we will hold this lock, to serialize

// looking/creation/destruction of Java proxies for native Binder proxies.

AutoMutex _l(gProxyLock);

BinderProxyNativeData* nativeData = gNativeDataCache;

if (nativeData == nullptr) {

nativeData = new BinderProxyNativeData();

}

// gNativeDataCache is now logically empty.

jobject object = env->CallStaticObjectMethod(gBinderProxyOffsets.mClass,

gBinderProxyOffsets.mGetInstance, (jlong) nativeData, (jlong) val.get());

if (env->ExceptionCheck()) {

// In the exception case, getInstance still took ownership of nativeData.

gNativeDataCache = nullptr;

return NULL;

}

BinderProxyNativeData* actualNativeData = getBPNativeData(env, object);

if (actualNativeData == nativeData) {

// New BinderProxy; we still have exclusive access.

nativeData->mOrgue = new DeathRecipientList;

nativeData->mObject = val;

gNativeDataCache = nullptr;

++gNumProxies;

if (gNumProxies >= gProxiesWarned + PROXY_WARN_INTERVAL) {

ALOGW("Unexpectedly many live BinderProxies: %d\n", gNumProxies);

gProxiesWarned = gNumProxies;

}

} else {

// nativeData wasn't used. Reuse it the next time.

gNativeDataCache = nativeData;

}

return object;

}

On the first call, val->checkSubclass returns false (a BpBinder is not a JavaBBinder), so execution continues. Since gNativeDataCache is still empty, a new BinderProxyNativeData object, nativeData, is allocated. Then jobject object = env->CallStaticObjectMethod(gBinderProxyOffsets.mClass, gBinderProxyOffsets.mGetInstance, (jlong) nativeData, (jlong) val.get()); creates a BinderProxy object.

In CallStaticObjectMethod, the first argument is the class (here BinderProxy) and the second is the method ID. mGetInstance was initialized by gBinderProxyOffsets.mGetInstance = GetStaticMethodIDOrDie(env, clazz, "getInstance", "(JJ)Landroid/os/BinderProxy;"); so it refers to BinderProxy's getInstance method. Here is the Java-side implementation of BinderProxy.getInstance:

private static BinderProxy getInstance(long nativeData, long iBinder) {

BinderProxy result;

try {

result = sProxyMap.get(iBinder);

if (result != null) {

return result;

}

result = new BinderProxy(nativeData);

} catch (Throwable e) {

// We're throwing an exception (probably OOME); don't drop nativeData.

NativeAllocationRegistry.applyFreeFunction(NoImagePreloadHolder.sNativeFinalizer,

nativeData);

throw e;

}

NoImagePreloadHolder.sRegistry.registerNativeAllocation(result, nativeData);

// The registry now owns nativeData, even if registration threw an exception.

sProxyMap.set(iBinder, result);

return result;

}

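Here a new BinderProxy object is created directly, with a nativeData object passed in. That object is the third argument of CallStaticObjectMethod, i.e. the nativeData created in the native layer above. Next, getBPNativeData returns actualNativeData. getBPNativeData simply reads the Java field mNativeData, which holds the argument we just passed to the constructor, i.e. the native nativeData variable.

So on this first call the condition if (actualNativeData == nativeData) holds, and val, the BpBinder object, is saved in nativeData's mObject. This mObject will be used later. If sProxyMap already contained a proxy for this BpBinder, getInstance would return that proxy instead, the condition would fail, and nativeData would be kept in gNativeDataCache for reuse.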

BinderProxyNativeData* getBPNativeData(JNIEnv* env, jobject obj) {

return (BinderProxyNativeData *) env->GetLongField(obj, gBinderProxyOffsets.mNativeData);

}

gBinderProxyOffsets.mNativeData is initialized when BinderProxy is registered: gBinderProxyOffsets.mNativeData = GetFieldIDOrDie(env, clazz, "mNativeData", "J");

A quick summary so far:

ServiceManager.getIServiceManager in the framework layer returns a ServiceManagerProxy object. Its mRemote member, passed in through the constructor, is the BinderProxy object. On the native side, a BpBinder object is created and saved in the mObject field of BinderProxyNativeData.

Back to the main thread of the story: after several layers of calls, the framework's ServiceManager.addService ends up in BinderProxy.transact. That method calls transactNative, a native method implemented by android_os_BinderProxy_transact in android_util_Binder.cpp:

static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,

jint code, jobject dataObj, jobject replyObj, jint flags) // throws RemoteException

{

if (dataObj == NULL) {

jniThrowNullPointerException(env, NULL);

return JNI_FALSE;

}

Parcel* data = parcelForJavaObject(env, dataObj);

if (data == NULL) {

return JNI_FALSE;

}

Parcel* reply = parcelForJavaObject(env, replyObj);

if (reply == NULL && replyObj != NULL) {

return JNI_FALSE;

}

IBinder* target = getBPNativeData(env, obj)->mObject.get();

if (target == NULL) {

jniThrowException(env, "java/lang/IllegalStateException", "Binder has been finalized!");

return JNI_FALSE;

}

ALOGV("Java code calling transact on %p in Java object %p with code %" PRId32 "\n",

target, obj, code);

bool time_binder_calls;

int64_t start_millis;

if (kEnableBinderSample) {

// Only log the binder call duration for things on the Java-level main thread.

// But if we don't

time_binder_calls = should_time_binder_calls();

if (time_binder_calls) {

start_millis = uptimeMillis();

}

}

//printf("Transact from Java code to %p sending: ", target); data->print();

status_t err = target->transact(code, *data, reply, flags);

//if (reply) printf("Transact from Java code to %p received: ", target); reply->print();

if (kEnableBinderSample) {

if (time_binder_calls) {

conditionally_log_binder_call(start_millis, target, code);

}

}

if (err == NO_ERROR) {

return JNI_TRUE;

} else if (err == UNKNOWN_TRANSACTION) {

return JNI_FALSE;

}

signalExceptionForError(env, obj, err, true /*canThrowRemoteException*/, data->dataSize());

return JNI_FALSE;

}

Note the line IBinder* target = getBPNativeData(env, obj)->mObject.get(); It retrieves the BpBinder object saved earlier in mObject, after which status_t err = target->transact(code, *data, reply, flags); is executed. Following along, here is BpBinder::transact:

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

// Once a binder has died, it will never come back to life.

if (mAlive) {

status_t status = IPCThreadState::self()->transact(

mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}

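As you can see, BpBinder actually hands the work to the IPCThreadState class, the core of Binder communication in the native layer. When the BpBinder was created earlier, the argument 0 was passed in. That value is the mHandle in status_t status = IPCThreadState::self()->transact(mHandle, code, data, reply, flags); Handle 0 is the reference to the remote ServiceManager service, and code here is ADD_SERVICE_TRANSACTION.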

Next, let's see how the data is actually transferred, starting with the implementation of IPCThreadState:

IPCThreadState* IPCThreadState::self()

{

if (gHaveTLS) {

restart:

const pthread_key_t k = gTLS;

IPCThreadState* st = (IPCThreadState*)pthread_getspecific(k);

if (st) return st;

return new IPCThreadState;

}

...... (some code omitted) ......

pthread_mutex_unlock(&gTLSMutex);

goto restart;

}

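self() returns the current thread's IPCThreadState object stored in thread-local storage (gTLS), creating it the first time a thread calls it. The constructor: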

IPCThreadState::IPCThreadState()

: mProcess(ProcessState::self()),

mStrictModePolicy(0),

mLastTransactionBinderFlags(0)

{

pthread_setspecific(gTLS, this);

clearCaller();

mIn.setDataCapacity(256);

mOut.setDataCapacity(256);

}

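The constructor initializes mIn and mOut, the Parcel containers used to receive data from and send data to the driver, each with an initial capacity of 256 bytes.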

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

status_t err;

flags |= TF_ACCEPT_FDS;

IF_LOG_TRANSACTIONS() {

TextOutput::Bundle _b(alog);

alog << "BC_TRANSACTION thr " << (void*)pthread_self() << " / hand "

<< handle << " / code " << TypeCode(code) << ": "

<< indent << data << dedent << endl;

}

LOG_ONEWAY(">>>> SEND from pid %d uid %d %s", getpid(), getuid(),

(flags & TF_ONE_WAY) == 0 ? "READ REPLY" : "ONE WAY");

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, nullptr);

if (err != NO_ERROR) {

if (reply) reply->setError(err);

return (mLastError = err);

}

if ((flags & TF_ONE_WAY) == 0) {

#if 0

if (code == 4) { // relayout

ALOGI(">>>>>> CALLING transaction 4");

} else {

ALOGI(">>>>>> CALLING transaction %d", code);

}

#endif

if (reply) {

err = waitForResponse(reply);

} else {

Parcel fakeReply;

err = waitForResponse(&fakeReply);

}

#if 0

if (code == 4) { // relayout

ALOGI("<<<<<< RETURNING transaction 4");

} else {

ALOGI("<<<<<< RETURNING transaction %d", code);

}

#endif

IF_LOG_TRANSACTIONS() {

TextOutput::Bundle _b(alog);

alog << "BR_REPLY thr " << (void*)pthread_self() << " / hand "

<< handle << ": ";

if (reply) alog << indent << *reply << dedent << endl;

else alog << "(none requested)" << endl;

}

} else {

err = waitForResponse(nullptr, nullptr);

}

return err;

}

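First, the argument values:

- handle is 0, the reference to ServiceManager.
- code is ADD_SERVICE_TRANSACTION.
- flags is 0 as passed down from Java; transact then ORs in TF_ACCEPT_FDS.
- data is the data to be sent.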

This function does two main things:

1. Packs the data to be sent: writeTransactionData

2. Waits for the reply: waitForResponse

Here is writeTransactionData:

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,

int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

{

binder_transaction_data tr;

tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */

tr.target.handle = handle;

tr.code = code;

tr.flags = binderFlags;

tr.cookie = 0;

tr.sender_pid = 0;

tr.sender_euid = 0;

const status_t err = data.errorCheck();

if (err == NO_ERROR) {

tr.data_size = data.ipcDataSize();

tr.data.ptr.buffer = data.ipcData();

tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);

tr.data.ptr.offsets = data.ipcObjects();

} else if (statusBuffer) {

tr.flags |= TF_STATUS_CODE;

*statusBuffer = err;

tr.data_size = sizeof(status_t);

tr.data.ptr.buffer = reinterpret_cast<uintptr_t>(statusBuffer);

tr.offsets_size = 0;

tr.data.ptr.offsets = 0;

} else {

return (mLastError = err);

}

mOut.writeInt32(cmd);

mOut.write(&tr, sizeof(tr));

return NO_ERROR;

}

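All the data to be sent is packed into a binder_transaction_data struct, which points at the Parcel's buffer rather than copying it. The cmd written here is BC_TRANSACTION, and both the cmd and the struct are written into mOut. Next, waitForResponse: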

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err;

while (1) {

if ((err=talkWithDriver()) < NO_ERROR) break;

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

cmd = (uint32_t)mIn.readInt32();

IF_LOG_COMMANDS() {

alog << "Processing waitForResponse Command: "

<< getReturnString(cmd) << endl;

}

switch (cmd) {

case BR_TRANSACTION_COMPLETE:

if (!reply && !acquireResult) goto finish;

break;

case BR_DEAD_REPLY:

err = DEAD_OBJECT;

goto finish;

case BR_FAILED_REPLY:

err = FAILED_TRANSACTION;

goto finish;

case BR_ACQUIRE_RESULT:

{

ALOG_ASSERT(acquireResult != NULL, "Unexpected brACQUIRE_RESULT");

const int32_t result = mIn.readInt32();

if (!acquireResult) continue;

*acquireResult = result ? NO_ERROR : INVALID_OPERATION;

}

goto finish;

case BR_REPLY:

{

binder_transaction_data tr;

err = mIn.read(&tr, sizeof(tr));

ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");

if (err != NO_ERROR) goto finish;

if (reply) {

if ((tr.flags & TF_STATUS_CODE) == 0) {

reply->ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t),

freeBuffer, this);

} else {

err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);

freeBuffer(nullptr,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t), this);

}

} else {

freeBuffer(nullptr,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t), this);

continue;

}

}

goto finish;

default:

err = executeCommand(cmd);

if (err != NO_ERROR) goto finish;

break;

}

}

finish:

if (err != NO_ERROR) {

if (acquireResult) *acquireResult = err;

if (reply) reply->setError(err);

mLastError = err;

}

return err;

}

waitForResponse in turn calls talkWithDriver to interact with the binder driver in the kernel. Here is talkWithDriver:

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

if (mProcess->mDriverFD <= 0) {

return -EBADF;

}

binder_write_read bwr;

// Is the read buffer empty?

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

// We don't want to write anything if we are still reading

// from data left in the input buffer and the caller

// has requested to read the next data.

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

bwr.write_size = outAvail;

bwr.write_buffer = (uintptr_t)mOut.data();

// This is what we'll read.

if (doReceive && needRead) {

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (uintptr_t)mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

IF_LOG_COMMANDS() {

TextOutput::Bundle _b(alog);

if (outAvail != 0) {

alog << "Sending commands to driver: " << indent;

const void* cmds = (const void*)bwr.write_buffer;

const void* end = ((const uint8_t*)cmds)+bwr.write_size;

alog << HexDump(cmds, bwr.write_size) << endl;

while (cmds < end) cmds = printCommand(alog, cmds);

alog << dedent;

}

alog << "Size of receive buffer: " << bwr.read_size

<< ", needRead: " << needRead << ", doReceive: " << doReceive << endl;

}

// Return immediately if there is nothing to do.

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

IF_LOG_COMMANDS() {

alog << "About to read/write, write size = " << mOut.dataSize() << endl;

}

#if defined(__ANDROID__)

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

#else

err = INVALID_OPERATION;

#endif

if (mProcess->mDriverFD <= 0) {

err = -EBADF;

}

IF_LOG_COMMANDS() {

alog << "Finished read/write, write size = " << mOut.dataSize() << endl;

}

} while (err == -EINTR);

IF_LOG_COMMANDS() {

alog << "Our err: " << (void*)(intptr_t)err << ", write consumed: "

<< bwr.write_consumed << " (of " << mOut.dataSize()

<< "), read consumed: " << bwr.read_consumed << endl;

}

if (err >= NO_ERROR) {

if (bwr.write_consumed > 0) {

if (bwr.write_consumed < mOut.dataSize())

mOut.remove(0, bwr.write_consumed);

else {

mOut.setDataSize(0);

processPostWriteDerefs();

}

}

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

IF_LOG_COMMANDS() {

TextOutput::Bundle _b(alog);

alog << "Remaining data size: " << mOut.dataSize() << endl;

alog << "Received commands from driver: " << indent;

const void* cmds = mIn.data();

const void* end = mIn.data() + mIn.dataSize();

alog << HexDump(cmds, mIn.dataSize()) << endl;

while (cmds < end) cmds = printReturnCommand(alog, cmds);

alog << dedent;

}

return NO_ERROR;

}

return err;

}

Here doReceive is true. When the argument is omitted it defaults to true, as declared in IPCThreadState.h:

status_t talkWithDriver(bool doReceive=true);

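needRead is also true, from const bool needRead = mIn.dataPosition() >= mIn.dataSize(); dataPosition is the current read position and dataSize is the end of the valid data. When there is no unread data the two values are equal, so needRead is true. It then follows from const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0; that outAvail equals mOut.dataSize().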

Next, ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) is called, which enters the kernel's binder_ioctl function through the system call.

Source path of this function (I read the code based on 8.1): kernel-4.4\drivers\android\binder.c

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

/*pr_info("binder_ioctl: %d:%d %x %lx\n",

proc->pid, current->pid, cmd, arg);*/

binder_selftest_alloc(&proc->alloc);

trace_binder_ioctl(cmd, arg);

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

goto err_unlocked;

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

case BINDER_WRITE_READ:

ret = binder_ioctl_write_read(filp, cmd, arg, thread);

if (ret)

goto err;

break;

case BINDER_SET_MAX_THREADS: {

int max_threads;

if (copy_from_user(&max_threads, ubuf,

sizeof(max_threads))) {

ret = -EINVAL;

goto err;

}

binder_inner_proc_lock(proc);

proc->max_threads = max_threads;

binder_inner_proc_unlock(proc);

break;

}

case BINDER_SET_CONTEXT_MGR_EXT: {

struct flat_binder_object fbo;

if (copy_from_user(&fbo, ubuf, sizeof(fbo))) {

ret = -EINVAL;

goto err;

}

ret = binder_ioctl_set_ctx_mgr(filp, &fbo);

if (ret)

goto err;

break;

}

case BINDER_SET_CONTEXT_MGR:

ret = binder_ioctl_set_ctx_mgr(filp, NULL);

if (ret)

goto err;

break;

case BINDER_THREAD_EXIT:

binder_debug(BINDER_DEBUG_THREADS, "%d:%d exit\n",

proc->pid, thread->pid);

binder_thread_release(proc, thread);

thread = NULL;

break;

case BINDER_VERSION: {

struct binder_version __user *ver = ubuf;

if (size != sizeof(struct binder_version)) {

ret = -EINVAL;

goto err;

}

if (put_user(BINDER_CURRENT_PROTOCOL_VERSION,

&ver->protocol_version)) {

ret = -EINVAL;

goto err;

}

break;

}

case BINDER_GET_NODE_DEBUG_INFO: {

struct binder_node_debug_info info;

if (copy_from_user(&info, ubuf, sizeof(info))) {

ret = -EFAULT;

goto err;

}

ret = binder_ioctl_get_node_debug_info(proc, &info);

if (ret < 0)

goto err;

if (copy_to_user(ubuf, &info, sizeof(info))) {

ret = -EFAULT;

goto err;

}

break;

}

default:

ret = -EINVAL;

goto err;

}

ret = 0;

err:

if (thread)

thread->looper_need_return = false;

wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret && ret != -ERESTARTSYS)

pr_info("%d:%d ioctl %x %lx returned %d\n", proc->pid, current->pid, cmd, arg, ret);

err_unlocked:

trace_binder_ioctl_done(ret);

return ret;

}

In our scenario cmd is BINDER_WRITE_READ, so binder_ioctl_write_read is called:

static int binder_ioctl_write_read(struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

{

int ret = 0;

struct binder_proc *proc = filp->private_data;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

struct binder_write_read bwr;

if (size != sizeof(struct binder_write_read)) {

ret = -EINVAL;

goto out;

}

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

binder_debug(BINDER_DEBUG_READ_WRITE,

"%d:%d write %lld at %016llx, read %lld at %016llx\n",

proc->pid, thread->pid,

(u64)bwr.write_size, (u64)bwr.write_buffer,

(u64)bwr.read_size, (u64)bwr.read_buffer);

if (bwr.write_size > 0) {

ret = binder_thread_write(proc, thread,

bwr.write_buffer,

bwr.write_size,

&bwr.write_consumed);

trace_binder_write_done(ret);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

if (bwr.read_size > 0) {

ret = binder_thread_read(proc, thread, bwr.read_buffer,

bwr.read_size,

&bwr.read_consumed,

filp->f_flags & O_NONBLOCK);

trace_binder_read_done(ret);

binder_inner_proc_lock(proc);

if (!binder_worklist_empty_ilocked(&proc->todo))

binder_wakeup_proc_ilocked(proc);

binder_inner_proc_unlock(proc);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

binder_debug(BINDER_DEBUG_READ_WRITE,

"%d:%d wrote %lld of %lld, read return %lld of %lld\n",

proc->pid, thread->pid,

(u64)bwr.write_consumed, (u64)bwr.write_size,

(u64)bwr.read_consumed, (u64)bwr.read_size);

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

out:

return ret;

}

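copy_from_user copies the user-space binder_write_read struct into the local bwr. As explained above, bwr.write_size is greater than 0 (it equals mOut.dataSize()), so binder_thread_write is entered: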

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

struct binder_context *context = proc->context;

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error.cmd == BR_OK) {

int ret;

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

trace_binder_command(cmd);

if (_IOC_NR(cmd) < ARRAY_SIZE(binder_stats.bc)) {

atomic_inc(&binder_stats.bc[_IOC_NR(cmd)]);

atomic_inc(&proc->stats.bc[_IOC_NR(cmd)]);

atomic_inc(&thread->stats.bc[_IOC_NR(cmd)]);

}

switch (cmd) {

..... (a big chunk of code omitted; there is just too much of it) .....

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

if (copy_from_user(&tr, ptr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr,

cmd == BC_REPLY, 0);

break;

}

.....a great deal more code omitted.....

default:

pr_err("%d:%d unknown command %d\n",

proc->pid, thread->pid, cmd);

return -EINVAL;

}

*consumed = ptr - buffer;

}

return 0;

}
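The command loop of binder_thread_write treats the write buffer as a packed stream: a 32-bit command followed by that command's payload, with *consumed recording how far processing got. The sketch below models that loop in plain C under stated assumptions. CMD_*, struct txn_sketch, put_cmd and parse_cmds are hypothetical. memcpy stands in for get_user/copy_from_user, and the explicit bounds checks replace the fault handling that copy_from_user provides in the kernel.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command codes; the real BC_* values are ioctl-style encodings
 * from the binder UAPI header and are not reproduced here. */
enum { CMD_TRANSACTION = 1, CMD_REPLY = 2, CMD_NOOP = 3 };

/* Stand-in for struct binder_transaction_data (only two fields kept). */
struct txn_sketch {
    uint32_t code;
    uint32_t data_size;
};

/* Append a 32-bit command word plus an optional payload at offset len;
 * returns the new length. The caller guarantees the buffer is big enough. */
static size_t put_cmd(uint8_t *buf, size_t len, uint32_t cmd, const void *payload, size_t n)
{
    memcpy(buf + len, &cmd, sizeof(cmd));
    len += sizeof(cmd);
    if (n > 0) {
        memcpy(buf + len, payload, n);
        len += n;
    }
    return len;
}

/* Walks the stream like binder_thread_write: read a command, advance, copy the
 * payload for transaction commands, and store progress in *consumed after each
 * command. Returns the number of transactions seen, or -1 on an unknown or
 * truncated command (the driver returns -EINVAL / -EFAULT in those cases). */
static int parse_cmds(const uint8_t *buf, size_t size, size_t *consumed)
{
    const uint8_t *ptr = buf + *consumed;
    const uint8_t *end = buf + size;
    int txns = 0;

    while (ptr < end) {
        uint32_t cmd;

        if ((size_t)(end - ptr) < sizeof(cmd))
            return -1;
        memcpy(&cmd, ptr, sizeof(cmd));      /* get_user(cmd, ptr) */
        ptr += sizeof(cmd);

        switch (cmd) {
        case CMD_TRANSACTION:
        case CMD_REPLY: {
            struct txn_sketch tr;

            if ((size_t)(end - ptr) < sizeof(tr))
                return -1;
            memcpy(&tr, ptr, sizeof(tr));    /* copy_from_user(&tr, ptr, ...) */
            ptr += sizeof(tr);
            txns++;                          /* binder_transaction() would run here */
            break;
        }
        case CMD_NOOP:
            break;
        default:
            return -1;
        }
        *consumed = (size_t)(ptr - buf);
    }
    return txns;
}
```

Because *consumed is updated after every complete command, a failure part-way through leaves it pointing just past the last command that was fully handled.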

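This function is very long; here we only need to look at the case where cmd is BC_TRANSACTION. get_user(cmd, (uint32_t __user *)ptr) reads the command that was passed in, which here is BC_TRANSACTION. The payload that follows is then copied into the local variable tr with copy_from_user, and binder_transaction is called: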

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply,

binder_size_t extra_buffers_size)

{

int ret;

struct binder_transaction *t;

struct binder_work *tcomplete;

binder_size_t *offp, *off_end, *off_start;

binder_size_t off_min;

u8 *sg_bufp, *sg_buf_end;

struct binder_proc *target_proc = NULL;

struct binder_thread *target_thread = NULL;

struct binder_node *target_node = NULL;

struct binder_transaction *in_reply_to = NULL;

struct binder_transaction_log_entry *e;

uint32_t return_error = 0;

uint32_t return_error_param = 0;

uint32_t return_error_line = 0;

struct binder_buffer_object *last_fixup_obj = NULL;

binder_size_t last_fixup_min_off = 0;

struct binder_context *context = proc->context;

int t_debug_id = atomic_inc_return(&binder_last_id);

char *secctx = NULL;

u32 secctx_sz = 0;

....some logging-related code omitted (this is also where the transaction-log entry e used below is set up).......

if (reply) {

binder_inner_proc_lock(proc);

in_reply_to = thread->transaction_stack;

if (in_reply_to == NULL) {

binder_inner_proc_unlock(proc);

binder_user_error("%d:%d got reply transaction with no transaction stack\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

return_error_param = -EPROTO;

return_error_line = __LINE__;

goto err_empty_call_stack;

}

if (in_reply_to->to_thread != thread) {

spin_lock(&in_reply_to->lock);

binder_user_error("%d:%d got reply transaction with bad transaction stack, transaction %d has target %d:%d\n",

proc->pid, thread->pid, in_reply_to->debug_id,

in_reply_to->to_proc ?

in_reply_to->to_proc->pid : 0,

in_reply_to->to_thread ?

in_reply_to->to_thread->pid : 0);

spin_unlock(&in_reply_to->lock);

binder_inner_proc_unlock(proc);

return_error = BR_FAILED_REPLY;

return_error_param = -EPROTO;

return_error_line = __LINE__;

in_reply_to = NULL;

goto err_bad_call_stack;

}

thread->transaction_stack = in_reply_to->to_parent;

binder_inner_proc_unlock(proc);

target_thread = binder_get_txn_from_and_acq_inner(in_reply_to);

if (target_thread == NULL) {

return_error = BR_DEAD_REPLY;

return_error_line = __LINE__;

goto err_dead_binder;

}

if (target_thread->transaction_stack != in_reply_to) {

binder_user_error("%d:%d got reply transaction with bad target transaction stack %d, expected %d\n",

proc->pid, thread->pid,

target_thread->transaction_stack ?

target_thread->transaction_stack->debug_id : 0,

in_reply_to->debug_id);

binder_inner_proc_unlock(target_thread->proc);

return_error = BR_FAILED_REPLY;

return_error_param = -EPROTO;

return_error_line = __LINE__;

in_reply_to = NULL;

target_thread = NULL;

goto err_dead_binder;

}

target_proc = target_thread->proc;

target_proc->tmp_ref++;

binder_inner_proc_unlock(target_thread->proc);

} else {

if (tr->target.handle) {

struct binder_ref *ref;

/*

* There must already be a strong ref

* on this node. If so, do a strong

* increment on the node to ensure it

* stays alive until the transaction is

* done.

*/

binder_proc_lock(proc);

ref = binder_get_ref_olocked(proc, tr->target.handle,

true);

if (ref) {

target_node = binder_get_node_refs_for_txn(

ref->node, &target_proc,

&return_error);

} else {

binder_user_error("%d:%d got transaction to invalid handle\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

}

binder_proc_unlock(proc);

} else {

mutex_lock(&context->context_mgr_node_lock);

target_node = context->binder_context_mgr_node;

if (target_node)

target_node = binder_get_node_refs_for_txn(

target_node, &target_proc,

&return_error);

else

return_error = BR_DEAD_REPLY;

mutex_unlock(&context->context_mgr_node_lock);

}

if (!target_node) {

/*

* return_error is set above

*/

return_error_param = -EINVAL;

return_error_line = __LINE__;

goto err_dead_binder;

}

e->to_node = target_node->debug_id;

if (security_binder_transaction(proc->tsk,

target_proc->tsk) < 0) {

return_error = BR_FAILED_REPLY;

return_error_param = -EPERM;

return_error_line = __LINE__;

goto err_invalid_target_handle;

}

binder_inner_proc_lock(proc);

if (!(tr->flags & TF_ONE_WAY) && thread->transaction_stack) {

struct binder_transaction *tmp;

tmp = thread->transaction_stack;

if (tmp->to_thread != thread) {

spin_lock(&tmp->lock);

binder_user_error("%d:%d got new transaction with bad transaction stack, transaction %d has target %d:%d\n",

proc->pid, thread->pid, tmp->debug_id,

tmp->to_proc ? tmp->to_proc->pid : 0,

tmp->to_thread ?

tmp->to_thread->pid : 0);

spin_unlock(&tmp->lock);

binder_inner_proc_unlock(proc);

return_error = BR_FAILED_REPLY;

return_error_param = -EPROTO;

return_error_line = __LINE__;

goto err_bad_call_stack;

}

while (tmp) {

struct binder_thread *from;

spin_lock(&tmp->lock);

from = tmp->from;

if (from && from->proc == target_proc) {

atomic_inc(&from->tmp_ref);

target_thread = from;

spin_unlock(&tmp->lock);

break;

}

spin_unlock(&tmp->lock);

tmp = tmp->from_parent;

}

}

binder_inner_proc_unlock(proc);

}

if (target_thread)

e->to_thread = target_thread->pid;

e->to_proc = target_proc->pid;

/* TODO: reuse incoming transaction for reply */

t = kzalloc(sizeof(*t), GFP_KERNEL);

if (t == NULL) {

return_error = BR_FAILED_REPLY;

return_error_param = -ENOMEM;

return_error_line = __LINE__;

goto err_alloc_t_failed;

}

binder_stats_created(BINDER_STAT_TRANSACTION);

spin_lock_init(&t->lock);

tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);

if (tcomplete == NULL) {

return_error = BR_FAILED_REPLY;

return_error_param = -ENOMEM;

return_error_line = __LINE__;

goto err_alloc_tcomplete_failed;

}

binder_stats_created(BINDER_STAT_TRANSACTION_COMPLETE);

t->debug_id = t_debug_id;

if (reply)

binder_debug(BINDER_DEBUG_TRANSACTION,

"%d:%d BC_REPLY %d -> %d:%d, data %016llx-%016llx size %lld-%lld-%lld\n",

proc->pid, thread->pid, t->debug_id,

target_proc->pid, target_thread->pid,

(u64)tr->data.ptr.buffer,

(u64)tr->data.ptr.offsets,

(u64)tr->data_size, (u64)tr->offsets_size,

(u64)extra_buffers_size);

else

binder_debug(BINDER_DEBUG_TRANSACTION,

"%d:%d BC_TRANSACTION %d -> %d - node %d, data %016llx-%016llx size %lld-%lld-%lld\n",

proc->pid, thread->pid, t->debug_id,

target_proc->pid, target_node->debug_id,

(u64)tr->data.ptr.buffer,

(u64)tr->data.ptr.offsets,

(u64)tr->data_size, (u64)tr->offsets_size,

(u64)extra_buffers_size);

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread;

else

t->from = NULL;

t->sender_euid = task_euid(proc->tsk);

t->to_proc = target_proc;

t->to_thread = target_thread;

t->code = tr->code;

t->flags = tr->flags;

if (!(t->flags & TF_ONE_WAY) &&

binder_supported_policy(current->policy)) {

/* Inherit supported policies for synchronous transactions */

t->priority.sched_policy = current->policy;

t->priority.prio = current->normal_prio;

} else {

/* Otherwise, fall back to the default priority */

t->priority = target_proc->default_priority;

}

if (target_node && target_node->txn_security_ctx) {

u32 secid;

security_task_getsecid(proc->tsk, &secid);

ret = security_secid_to_secctx(secid, &secctx, &secctx_sz);

if (ret) {

return_error = BR_FAILED_REPLY;

return_error_param = ret;

return_error_line = __LINE__;

goto err_get_secctx_failed;

}

extra_buffers_size += ALIGN(secctx_sz, sizeof(u64));

}

trace_binder_transaction(reply, t, target_node);

t->buffer = binder_alloc_new_buf(&target_proc->alloc, tr->data_size,

tr->offsets_size, extra_buffers_size,

!reply && (t->flags & TF_ONE_WAY));

if (IS_ERR(t->buffer)) {

/*

* -ESRCH indicates VMA cleared. The target is dying.

*/

return_error_param = PTR_ERR(t->buffer);

return_error = return_error_param == -ESRCH ?

BR_DEAD_REPLY : BR_FAILED_REPLY;

return_error_line = __LINE__;

t->buffer = NULL;

goto err_binder_alloc_buf_failed;

}

if (secctx) {

size_t buf_offset = ALIGN(tr->data_size, sizeof(void *)) +

ALIGN(tr->offsets_size, sizeof(void *)) +

ALIGN(extra_buffers_size, sizeof(void *)) -

ALIGN(secctx_sz, sizeof(u64));

char *kptr = t->buffer->data + buf_offset;

t->security_ctx = (uintptr_t)kptr +

binder_alloc_get_user_buffer_offset(&target_proc->alloc);

memcpy(kptr, secctx, secctx_sz);

security_release_secctx(secctx, secctx_sz);

secctx = NULL;

}

t->buffer->debug_id = t->debug_id;

t->buffer->transaction = t;

t->buffer->target_node = target_node;

trace_binder_transaction_alloc_buf(t->buffer);

off_start = (binder_size_t *)(t->buffer->data +

ALIGN(tr->data_size, sizeof(void *)));

offp = off_start;

if (copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)

tr->data.ptr.buffer, tr->data_size)) {

binder_user_error("%d:%d got transaction with invalid data ptr\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

return_error_param = -EFAULT;

return_error_line = __LINE__;

goto err_copy_data_failed;

}

if (copy_from_user(offp, (const void __user *)(uintptr_t)

tr->data.ptr.offsets, tr->offsets_size)) {

binder_user_error("%d:%d got transaction with invalid offsets ptr\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

return_error_param = -EFAULT;

return_error_line = __LINE__;

goto err_copy_data_failed;

}

if (!IS_ALIGNED(tr->offsets_size, sizeof(binder_size_t))) {

binder_user_error("%d:%d got transaction with invalid offsets size, %lld\n",

proc->pid, thread->pid, (u64)tr->offsets_size);

return_error = BR_FAILED_REPLY;

return_error_param = -EINVAL;

return_error_line = __LINE__;

goto err_bad_offset;

}

if (!IS_ALIGNED(extra_buffers_size, sizeof(u64))) {

binder_user_error("%d:%d got transaction with unaligned buffers size, %lld\n",

proc->pid, thread->pid,

(u64)extra_buffers_size);

return_error = BR_FAILED_REPLY;

return_error_param = -EINVAL;

return_error_line = __LINE__;

goto err_bad_offset;

}

off_end = (void *)off_start + tr->offsets_size;

sg_bufp = (u8 *)(PTR_ALIGN(off_end, sizeof(void *)));

sg_buf_end = sg_bufp + extra_buffers_size;

off_min = 0;

for (; offp < off_end; offp++) {

struct binder_object_header *hdr;

size_t object_size = binder_validate_object(t->buffer, *offp);

if (object_size == 0 || *offp < off_min) {

binder_user_error("%d:%d got transaction with invalid offset (%lld, min %lld max %lld) or object.\n",

proc->pid, thread->pid, (u64)*offp,

(u64)off_min,

(u64)t->buffer->data_size);

return_error = BR_FAILED_REPLY;

return_error_param = -EINVAL;

return_error_line = __LINE__;

goto err_bad_offset;

}

hdr = (struct binder_object_header *)(t->buffer->data + *offp);

off_min = *offp + object_size;

switch (hdr->type) {

case BINDER_TYPE_BINDER:

case BINDER_TYPE_WEAK_BINDER: {

struct flat_binder_object *fp;

fp = to_flat_binder_object(hdr);

ret = binder_translate_binder(fp, t, thread);

if (ret < 0) {

return_error = BR_FAILED_REPLY;

return_error_param = ret;

return_error_line = __LINE__;

goto err_translate_failed;

}

} break;

case BINDER_TYPE_HANDLE:

case BINDER_TYPE_WEAK_HANDLE: {

struct flat_binder_object *fp;

fp = to_flat_binder_object(hdr);

ret = binder_translate_handle(fp, t, thread);

if (ret < 0) {

return_error = BR_FAILED_REPLY;

return_error_param = ret;

return_error_line = __LINE__;

goto err_translate_failed;

}

} break;

case BINDER_TYPE_FD: {

struct binder_fd_object *fp = to_binder_fd_object(hdr);

int target_fd = binder_translate_fd(fp->fd, t, thread,

in_reply_to);

if (target_fd < 0) {

return_error = BR_FAILED_REPLY;

return_error_param = target_fd;

return_error_line = __LINE__;

goto err_translate_failed;

}

fp->pad_binder = 0;

fp->fd = target_fd;

} break;

case BINDER_TYPE_FDA: {

struct binder_fd_array_object *fda =

to_binder_fd_array_object(hdr);

struct binder_buffer_object *parent =

binder_validate_ptr(t->buffer, fda->parent,

off_start,

offp - off_start);

if (!parent) {

binder_user_error("%d:%d got transaction with invalid parent offset or type\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

return_error_param = -EINVAL;

return_error_line = __LINE__;

goto err_bad_parent;

}

if (!binder_validate_fixup(t->buffer, off_start,

parent, fda->parent_offset,

last_fixup_obj,

last_fixup_min_off)) {

binder_user_error("%d:%d got transaction with out-of-order buffer fixup\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

return_error_param = -EINVAL;

return_error_line = __LINE__;

goto err_bad_parent;

}

ret = binder_translate_fd_array(fda, parent, t, thread,

in_reply_to);

if (ret < 0) {

return_error = BR_FAILED_REPLY;

return_error_param = ret;

return_error_line = __LINE__;

goto err_translate_failed;

}

last_fixup_obj = parent;

last_fixup_min_off =

fda->parent_offset + sizeof(u32) * fda->num_fds;

} break;

case BINDER_TYPE_PTR: {

struct binder_buffer_object *bp =

to_binder_buffer_object(hdr);

size_t buf_left = sg_buf_end - sg_bufp;

if (bp->length > buf_left) {

binder_user_error("%d:%d got transaction with too large buffer\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

return_error_param = -EINVAL;

return_error_line = __LINE__;

goto err_bad_offset;

}

if (copy_from_user(sg_bufp,

(const void __user *)(uintptr_t)

bp->buffer, bp->length)) {

binder_user_error("%d:%d got transaction with invalid offsets ptr\n",

proc->pid, thread->pid);

return_error_param = -EFAULT;

return_error = BR_FAILED_REPLY;

return_error_line = __LINE__;

goto err_copy_data_failed;

}

/* Fixup buffer pointer to target proc address space */

bp->buffer = (uintptr_t)sg_bufp +

binder_alloc_get_user_buffer_offset(

&target_proc->alloc);

sg_bufp += ALIGN(bp->length, sizeof(u64));

ret = binder_fixup_parent(t, thread, bp, off_start,

offp - off_start,

last_fixup_obj,

last_fixup_min_off);

if (ret < 0) {

return_error = BR_FAILED_REPLY;

return_error_param = ret;

return_error_line = __LINE__;

goto err_translate_failed;

}

last_fixup_obj = bp;

last_fixup_min_off = 0;

} break;

default:

binder_user_error("%d:%d got transaction with invalid object type, %x\n",

proc->pid, thread->pid, hdr->type);

return_error = BR_FAILED_REPLY;

return_error_param = -EINVAL;

return_error_line = __LINE__;

goto err_bad_object_type;

}

}

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

binder_enqueue_work(proc, tcomplete, &thread->todo);

t->work.type = BINDER_WORK_TRANSACTION;

if (reply) {

binder_inner_proc_lock(target_proc);

if (target_thread->is_dead) {

binder_inner_proc_unlock(target_proc);

goto err_dead_proc_or_thread;

}

BUG_ON(t->buffer->async_transaction != 0);

binder_pop_transaction_ilocked(target_thread, in_reply_to);

binder_enqueue_work_ilocked(&t->work, &target_thread->todo);

binder_inner_proc_unlock(target_proc);

wake_up_interruptible_sync(&target_thread->wait);

binder_restore_priority(current, in_reply_to->saved_priority);

binder_free_transaction(in_reply_to);

} else if (!(t->flags & TF_ONE_WAY)) {

BUG_ON(t->buffer->async_transaction != 0);

binder_inner_proc_lock(proc);

t->need_reply = 1;

t->from_parent = thread->transaction_stack;

thread->transaction_stack = t;

binder_inner_proc_unlock(proc);

if (!binder_proc_transaction(t, target_proc, target_thread)) {

binder_inner_proc_lock(proc);

binder_pop_transaction_ilocked(thread, t);

binder_inner_proc_unlock(proc);

goto err_dead_proc_or_thread;

}

} else {

BUG_ON(target_node == NULL);

BUG_ON(t->buffer->async_transaction != 1);

if (!binder_proc_transaction(t, target_proc, NULL))

goto err_dead_proc_or_thread;

}

if (target_thread)

binder_thread_dec_tmpref(target_thread);

binder_proc_dec_tmpref(target_proc);

if (target_node)

binder_dec_node_tmpref(target_node);

/*

* write barrier to synchronize with initialization

* of log entry

*/

smp_wmb();

WRITE_ONCE(e->debug_id_done, t_debug_id);

return;

err_dead_proc_or_thread:

return_error = BR_DEAD_REPLY;

return_error_line = __LINE__;

binder_dequeue_work(proc, tcomplete);

err_translate_failed:

err_bad_object_type:

err_bad_offset:

err_bad_parent:

err_copy_data_failed:

trace_binder_transaction_failed_buffer_release(t->buffer);

binder_transaction_buffer_release(target_proc, t->buffer, offp);

if (target_node)

binder_dec_node_tmpref(target_node);

target_node = NULL;

t->buffer->transaction = NULL;

binder_alloc_free_buf(&target_proc->alloc, t->buffer);

err_binder_alloc_buf_failed:

if (secctx)

security_release_secctx(secctx, secctx_sz);

err_get_secctx_failed:

kfree(tcomplete);

binder_stats_deleted(BINDER_STAT_TRANSACTION_COMPLETE);

err_alloc_tcomplete_failed:

kfree(t);

binder_stats_deleted(BINDER_STAT_TRANSACTION);

err_alloc_t_failed:

err_bad_call_stack:

err_empty_call_stack:

err_dead_binder:

err_invalid_target_handle:

if (target_thread)

binder_thread_dec_tmpref(target_thread);

if (target_proc)

binder_proc_dec_tmpref(target_proc);

if (target_node) {

binder_dec_node(target_node, 1, 0);

binder_dec_node_tmpref(target_node);

}

binder_debug(BINDER_DEBUG_FAILED_TRANSACTION,

"%d:%d transaction failed %d/%d, size %lld-%lld line %d\n",

proc->pid, thread->pid, return_error, return_error_param,

(u64)tr->data_size, (u64)tr->offsets_size,

return_error_line);

{

struct binder_transaction_log_entry *fe;

e->return_error = return_error;

e->return_error_param = return_error_param;

e->return_error_line = return_error_line;

fe = binder_transaction_log_add(&binder_transaction_log_failed);

*fe = *e;

/*

* write barrier to synchronize with initialization

* of log entry

*/

smp_wmb();

WRITE_ONCE(e->debug_id_done, t_debug_id);

WRITE_ONCE(fe->debug_id_done, t_debug_id);

}

BUG_ON(thread->return_error.cmd != BR_OK);

if (in_reply_to) {

binder_restore_priority(current, in_reply_to->saved_priority);

thread->return_error.cmd = BR_TRANSACTION_COMPLETE;

binder_enqueue_work(thread->proc,

&thread->return_error.work,

&thread->todo);

binder_send_failed_reply(in_reply_to, return_error);

} else {

thread->return_error.cmd = return_error;

binder_enqueue_work(thread->proc,

&thread->return_error.work,

&thread->todo);

}

}
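Both the reply path at the top of binder_transaction and the synchronous-call path near the bottom maintain a per-thread transaction_stack. The sketch below models only that bookkeeping. The structs txn_sk and thread_sk and all helper names are hypothetical; the field names from, to_thread, from_parent and to_parent mirror struct binder_transaction. Locking, priorities and buffers are left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

struct thread_sk;

/* Only the stack-linking fields of struct binder_transaction are modeled. */
struct txn_sk {
    struct thread_sk *from;       /* sending thread (set for sync calls) */
    struct thread_sk *to_thread;  /* thread that received the call */
    struct txn_sk *from_parent;   /* older entry on the sender's stack */
    struct txn_sk *to_parent;     /* older entry on the receiver's stack */
};

struct thread_sk {
    struct txn_sk *transaction_stack;
};

/* Sender side of a synchronous BC_TRANSACTION (the !TF_ONE_WAY branch). */
static void push_on_sender(struct thread_sk *sender, struct txn_sk *t)
{
    t->from = sender;
    t->from_parent = sender->transaction_stack;
    sender->transaction_stack = t;
}

/* Receiver side, done in binder_thread_read when BR_TRANSACTION is delivered. */
static void push_on_receiver(struct thread_sk *receiver, struct txn_sk *t)
{
    t->to_parent = receiver->transaction_stack;
    t->to_thread = receiver;
    receiver->transaction_stack = t;
}

/* Start of BC_REPLY: the call being answered must be on top of the replier's
 * stack and must have been delivered to this thread. Pops it and returns the
 * thread that gets the reply; NULL is where the driver fails with
 * BR_FAILED_REPLY / -EPROTO. */
static struct thread_sk *begin_reply(struct thread_sk *replier, struct txn_sk **in_reply_to)
{
    struct txn_sk *t = replier->transaction_stack;

    if (t == NULL || t->to_thread != replier)
        return NULL;
    replier->transaction_stack = t->to_parent;
    *in_reply_to = t;
    return t->from;
}

/* Delivering the reply also pops the original sender's stack
 * (binder_pop_transaction_ilocked in the reply branch). */
static bool pop_sender(struct thread_sk *sender, struct txn_sk *t)
{
    if (sender->transaction_stack != t)
        return false;   /* "bad target transaction stack" in the driver */
    sender->transaction_stack = t->from_parent;
    return true;
}
```

The same transaction object sits on two stacks at once, linked through from_parent on the sender and to_parent on the receiver. This is how the driver knows which thread a reply must go back to.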

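This function is long, but let's look at the key parts. First, the reply argument passed in is 0 and tr->target.handle is 0 (handle 0 always refers to the context manager), so target_node = context->binder_context_mgr_node, i.e. ServiceManager's node. Next, binder_get_node_refs_for_txn sets target_proc to target_node->proc and takes references on the node. Its implementation: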

static struct binder_node *binder_get_node_refs_for_txn(

struct binder_node *node,

struct binder_proc **procp,

uint32_t *error)

{

struct binder_node *target_node = NULL;

binder_node_inner_lock(node);

if (node->proc) {

target_node = node;

binder_inc_node_nilocked(node, 1, 0, NULL);

binder_inc_node_tmpref_ilocked(node);

node->proc->tmp_ref++;

*procp = node->proc;

} else

*error = BR_DEAD_REPLY;

binder_node_inner_unlock(node);

return target_node;

}
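The target lookup reduces to a small function. Handle 0 goes straight to the context manager node, and any other handle is looked up in the sender's own reference table. binder_get_node_refs_for_txn only succeeds while the node's process is alive. This is a sketch under simplifying assumptions: the structs, the RES_* codes and the linear table scan are hypothetical. The kernel uses red-black trees, and it also returns target_proc and increments its tmp_ref, which is omitted here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum { RES_OK, RES_FAILED_REPLY, RES_DEAD_REPLY };

struct node_sketch {
    int proc_alive;    /* node->proc != NULL in the driver */
    int strong_refs;
    int tmp_refs;
};

struct ref_sketch {
    uint32_t desc;     /* the handle value seen by the owning process */
    struct node_sketch *node;
};

struct proc_sketch {
    struct ref_sketch refs[8];
    size_t nrefs;
};

/* Like binder_get_node_refs_for_txn: fail if the node's process is gone,
 * otherwise pin the node for the duration of the transaction. */
static struct node_sketch *get_node_for_txn(struct node_sketch *n, int *err)
{
    if (!n->proc_alive) {
        *err = RES_DEAD_REPLY;
        return NULL;
    }
    n->strong_refs++;  /* binder_inc_node_nilocked(node, 1, 0, NULL) */
    n->tmp_refs++;     /* binder_inc_node_tmpref_ilocked(node) */
    return n;
}

/* Non-reply target resolution in binder_transaction. */
static struct node_sketch *resolve_target(struct proc_sketch *p, uint32_t handle,
                                          struct node_sketch *ctx_mgr, int *err)
{
    *err = RES_OK;
    if (handle == 0) {                       /* always the context manager */
        if (ctx_mgr == NULL) {
            *err = RES_DEAD_REPLY;
            return NULL;
        }
        return get_node_for_txn(ctx_mgr, err);
    }
    for (size_t i = 0; i < p->nrefs; i++)    /* sender's own handle table */
        if (p->refs[i].desc == handle)
            return get_node_for_txn(p->refs[i].node, err);
    *err = RES_FAILED_REPLY;                 /* "transaction to invalid handle" */
    return NULL;
}
```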

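Further down, the transaction t (a struct binder_transaction) and the work item tcomplete (a struct binder_work) are allocated and initialized. Then a buffer for t, that is, for the data being transferred, is allocated in the target process (here, ServiceManager):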

t->buffer = binder_alloc_new_buf(&target_proc->alloc, tr->data_size,

tr->offsets_size, extra_buffers_size,

!reply && (t->flags & TF_ONE_WAY));

This buffer is carved out of the region that the target process mapped with mmap when it opened and initialized the binder driver.
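The layout of that buffer can be checked with a little arithmetic. binder_transaction places the data first, then the offsets array, then any extra scatter-gather buffers, with each region pointer-aligned. It also converts kernel addresses into the target's user addresses by adding a fixed offset. In this sketch ALIGN_UP, struct layout_sketch, buffer_layout and to_target_user_addr are hypothetical helpers. The user_offset parameter plays the role of binder_alloc_get_user_buffer_offset in this kernel version. Because the same pages are visible to the kernel and to the target process, a single copy_from_user into t->buffer is enough for the target to read the data.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Round x up to a multiple of a (a must be a power of two), like ALIGN(). */
#define ALIGN_UP(x, a) (((x) + ((a) - 1)) & ~((size_t)(a) - 1))

/* Byte offsets of the regions inside one transaction buffer. */
struct layout_sketch {
    size_t data_off, offsets_off, extra_off, total;
};

/* Mirrors the arithmetic around off_start and sg_bufp in binder_transaction. */
static struct layout_sketch buffer_layout(size_t data_size, size_t offsets_size, size_t extra_size)
{
    struct layout_sketch l;

    l.data_off = 0;
    l.offsets_off = ALIGN_UP(data_size, sizeof(void *));
    l.extra_off = l.offsets_off + ALIGN_UP(offsets_size, sizeof(void *));
    l.total = l.extra_off + ALIGN_UP(extra_size, sizeof(void *));
    return l;
}

/* Kernel address -> address the target process sees for the same byte. */
static uintptr_t to_target_user_addr(uintptr_t kernel_addr, ptrdiff_t user_offset)
{
    return kernel_addr + (uintptr_t)user_offset;
}
```

For example, 13 bytes of data on a 64-bit build put the offsets array at byte 16.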

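Next, the user data is copied into t->buffer with copy_from_user. When adding a service, what we actually pass is a local binder entity. It is flattened into a flat_binder_object structure whose type is BINDER_TYPE_BINDER. The following for loop walks the offsets array, picks out each binder object in the payload and processes it; for this object it calls binder_translate_binder: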

static int binder_translate_binder(struct flat_binder_object *fp,

struct binder_transaction *t,

struct binder_thread *thread)

{

struct binder_node *node;

struct binder_proc *proc = thread->proc;

struct binder_proc *target_proc = t->to_proc;

struct binder_ref_data rdata;

int ret = 0;

node = binder_get_node(proc, fp->binder);

if (!node) {

node = binder_new_node(proc, fp);

if (!node)

return -ENOMEM;

}

if (fp->cookie != node->cookie) {

binder_user_error("%d:%d sending u%016llx node %d, cookie mismatch %016llx != %016llx\n",

proc->pid, thread->pid, (u64)fp->binder,

node->debug_id, (u64)fp->cookie,

(u64)node->cookie);

ret = -EINVAL;

goto done;

}

if (security_binder_transfer_binder(proc->tsk, target_proc->tsk)) {

ret = -EPERM;

goto done;

}

ret = binder_inc_ref_for_node(target_proc, node,

fp->hdr.type == BINDER_TYPE_BINDER,

&thread->todo, &rdata);

if (ret)

goto done;

if (fp->hdr.type == BINDER_TYPE_BINDER)

fp->hdr.type = BINDER_TYPE_HANDLE;

else

fp->hdr.type = BINDER_TYPE_WEAK_HANDLE;

fp->binder = 0;

fp->handle = rdata.desc;

fp->cookie = 0;

trace_binder_transaction_node_to_ref(t, node, &rdata);

binder_debug(BINDER_DEBUG_TRANSACTION,

" node %d u%016llx -> ref %d desc %d\n",

node->debug_id, (u64)node->ptr,

rdata.debug_id, rdata.desc);

done:

binder_put_node(node);

return ret;

}
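Two steps of this translation can be sketched separately: rewriting the flat object from a local binder into a handle, and choosing the handle number in the receiving process. The struct, the T_* tags and both helpers are hypothetical. pick_desc reflects my understanding of how binder_get_ref_for_node_olocked (not shown in this article) assigns descriptors. Under that understanding, 0 is reserved for the context manager, and otherwise the smallest unused value starting from 1 is chosen. Treat it as an approximation.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical tags; the real BINDER_TYPE_* values are packed four-character
 * codes from the binder UAPI header. */
enum { T_BINDER, T_WEAK_BINDER, T_HANDLE, T_WEAK_HANDLE };

/* Stand-in for struct flat_binder_object. In the kernel, binder and handle
 * share a union; they are separate fields here for clarity. */
struct flat_obj_sketch {
    int type;
    uint64_t binder;   /* sender-local address */
    uint32_t handle;   /* receiver-side handle */
    uint64_t cookie;   /* sender-local object pointer */
};

/* The rewrite done at the end of binder_translate_binder, given the desc of
 * the reference just created in the target process. */
static void translate_to_handle(struct flat_obj_sketch *fp, uint32_t desc)
{
    fp->type = (fp->type == T_BINDER) ? T_HANDLE : T_WEAK_HANDLE;
    fp->binder = 0;
    fp->handle = desc;
    fp->cookie = 0;   /* sender-local pointers mean nothing to the receiver */
}

/* Approximate descriptor choice: 0 only for the context manager, otherwise the
 * smallest value >= 1 not already used. used[] is sorted ascending. */
static uint32_t pick_desc(const uint32_t *used, size_t n, int is_ctx_mgr)
{
    uint32_t desc = is_ctx_mgr ? 0 : 1;

    for (size_t i = 0; i < n; i++) {
        if (used[i] > desc)
            break;
        desc = used[i] + 1;
    }
    return desc;
}
```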

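Internally, it calls binder_inc_ref_for_node to obtain a reference to the binder entity in the target process, creating one if necessary, and to increment its reference count: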

static int binder_inc_ref_for_node(struct binder_proc *proc,

struct binder_node *node,

bool strong,

struct list_head *target_list,

struct binder_ref_data *rdata)

{

struct binder_ref *ref;

struct binder_ref *new_ref = NULL;

int ret = 0;

binder_proc_lock(proc);

ref = binder_get_ref_for_node_olocked(proc, node, NULL);

if (!ref) {

binder_proc_unlock(proc);

new_ref = kzalloc(sizeof(*ref), GFP_KERNEL);

if (!new_ref)

return -ENOMEM;

binder_proc_lock(proc);

ref = binder_get_ref_for_node_olocked(proc, node, new_ref);

}

ret = binder_inc_ref_olocked(ref, strong, target_list);

*rdata = ref->data;

binder_proc_unlock(proc);

if (new_ref && ref != new_ref)

/*

* Another thread created the ref first so

* free the one we allocated

*/

kfree(new_ref);

return ret;

}
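binder_inc_ref_for_node follows a common kernel pattern. It looks up the reference under the lock. If nothing is found, it drops the lock to allocate, because a GFP_KERNEL allocation may sleep and that is not allowed while holding the spinlock behind binder_proc_lock. It then retakes the lock and looks up again, inserting the new object only if no other thread got there first. Below is a user-space sketch with a pthread mutex. The table, ref_sk, race_hook and insert_first are hypothetical. race_hook exists only so the lost-race path can be exercised deterministically.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

struct ref_sk {
    int node_id;
    int strong;
};

struct table_sk {
    pthread_mutex_t lock;       /* plays the role of binder_proc_lock() */
    struct ref_sk *slots[16];   /* toy table indexed by node_id (< 16) */
};

/* Test-only hook run while the lock is dropped, to simulate another thread
 * inserting the same reference first. Hypothetical, not part of binder. */
static void (*race_hook)(struct table_sk *, int);

/* Like binder_get_ref_for_node_olocked: return the existing ref, or insert
 * new_ref (if given) when none exists. Caller holds t->lock. */
static struct ref_sk *get_ref_locked(struct table_sk *t, int node_id, struct ref_sk *new_ref)
{
    if (t->slots[node_id] != NULL)
        return t->slots[node_id];
    if (new_ref != NULL) {
        new_ref->node_id = node_id;
        t->slots[node_id] = new_ref;
    }
    return new_ref;
}

/* Same shape as binder_inc_ref_for_node; returns the new strong count,
 * or -1 if allocation fails. */
static int inc_ref_for_node(struct table_sk *t, int node_id)
{
    struct ref_sk *ref, *new_ref = NULL;
    int count;

    pthread_mutex_lock(&t->lock);
    ref = get_ref_locked(t, node_id, NULL);
    if (ref == NULL) {
        pthread_mutex_unlock(&t->lock);   /* allocate without the lock held */
        new_ref = calloc(1, sizeof(*new_ref));
        if (new_ref == NULL)
            return -1;
        if (race_hook != NULL)
            race_hook(t, node_id);
        pthread_mutex_lock(&t->lock);
        ref = get_ref_locked(t, node_id, new_ref);  /* re-check, maybe insert */
    }
    count = ++ref->strong;
    pthread_mutex_unlock(&t->lock);

    if (new_ref != NULL && ref != new_ref)
        free(new_ref);                    /* another thread won the race */
    return count;
}

/* Hook implementation: insert a fresh ref as if another thread got there first. */
static void insert_first(struct table_sk *t, int node_id)
{
    pthread_mutex_lock(&t->lock);
    get_ref_locked(t, node_id, calloc(1, sizeof(struct ref_sk)));
    pthread_mutex_unlock(&t->lock);
}
```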

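binder_get_ref_for_node_olocked looks up the target process's reference to this node and, if none exists yet, inserts the newly allocated new_ref. The reference's data is then copied into rdata. Back in binder_translate_binder, fp->hdr.type is changed to BINDER_TYPE_HANDLE, and: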

fp->binder = 0;

fp->handle = rdata.desc; /* the handle becomes the rdata.desc just produced */

fp->cookie = 0;

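This binder object is being sent to the target process (ServiceManager), which can refer to this Binder entity only through its handle. Back in binder_transaction, the tcomplete work item is queued on the current thread's todo list, with type: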

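tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE; Continuing down: addService is a synchronous call (flags is 0, so TF_ONE_WAY is not set), so the else if (!(t->flags & TF_ONE_WAY)) branch runs. t is marked need_reply and pushed onto the current thread's transaction_stack. Then binder_proc_transaction(t, target_proc, target_thread) is called (target_thread is normally NULL here), and this is what actually enqueues t: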

static bool binder_proc_transaction(struct binder_transaction *t,

struct binder_proc *proc,

struct binder_thread *thread)

{

struct list_head *target_list = NULL;

struct binder_node *node = t->buffer->target_node;

struct binder_priority node_prio;

bool oneway = !!(t->flags & TF_ONE_WAY);

bool wakeup = true;

BUG_ON(!node);

binder_node_lock(node);

node_prio.prio = node->min_priority;

node_prio.sched_policy = node->sched_policy;

if (oneway) {

BUG_ON(thread);

if (node->has_async_transaction) {

target_list = &node->async_todo;

wakeup = false;

} else {

node->has_async_transaction = 1;

}

}

binder_inner_proc_lock(proc);

if (proc->is_dead || (thread && thread->is_dead)) {

binder_inner_proc_unlock(proc);

binder_node_unlock(node);

return false;

}

if (!thread && !target_list)

thread = binder_select_thread_ilocked(proc);

if (thread) {

target_list = &thread->todo;

binder_transaction_priority(thread->task, t, node_prio,

node->inherit_rt);

} else if (!target_list) {

target_list = &proc->todo;

} else {

BUG_ON(target_list != &node->async_todo);

}

binder_enqueue_work_ilocked(&t->work, target_list);

if (wakeup)

binder_wakeup_thread_ilocked(proc, thread, !oneway /* sync */);

binder_inner_proc_unlock(proc);

binder_node_unlock(node);

return true;

}
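Without the locking, the queue-selection logic of binder_proc_transaction reduces to a small decision function. This is a sketch: struct node_sk, enum target_list and pick_target_list are hypothetical names. have_thread combines two cases: a target thread was passed in, or binder_select_thread_ilocked finds an idle one.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

enum target_list { TL_THREAD_TODO, TL_PROC_TODO, TL_NODE_ASYNC_TODO };

struct node_sk {
    bool has_async_transaction;
};

/* Decision logic of binder_proc_transaction. Returns false if the target is
 * dead; otherwise stores the chosen queue in *out and whether a thread is
 * woken in *wakeup. */
static bool pick_target_list(struct node_sk *node, bool oneway, bool proc_dead,
                             bool have_thread, enum target_list *out, bool *wakeup)
{
    bool async_queue = false;

    *wakeup = true;
    if (oneway) {
        if (node->has_async_transaction) {
            async_queue = true;     /* an async txn is already in flight */
            *wakeup = false;
        } else {
            node->has_async_transaction = true;
        }
    }
    if (proc_dead)
        return false;
    if (async_queue)
        *out = TL_NODE_ASYNC_TODO;  /* parked until the earlier one is freed */
    else if (have_thread)
        *out = TL_THREAD_TODO;
    else
        *out = TL_PROC_TODO;
    return true;
}
```

The design keeps at most one oneway transaction per node active; later ones wait on node->async_todo without waking anyone.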

binder_enqueue_work_ilocked(&t->work, target_list) puts t, whose t->work.type is BINDER_WORK_TRANSACTION, on the target's queue (ServiceManager's), and binder_wakeup_thread_ilocked then wakes the target process. Meanwhile, the current thread still has something to deal with: the tcomplete work item that was just added to its thread->todo queue. Back in binder_ioctl_write_read, bwr.read_size > 0, so binder_thread_read is executed:

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

retry:

binder_inner_proc_lock(proc);

wait_for_proc_work = binder_available_for_proc_work_ilocked(thread);

binder_inner_proc_unlock(proc);

thread->looper |= BINDER_LOOPER_STATE_WAITING;

trace_binder_wait_for_work(wait_for_proc_work,

!!thread->transaction_stack,

!binder_worklist_empty(proc, &thread->todo));

if (wait_for_proc_work) {

if (!(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |

BINDER_LOOPER_STATE_ENTERED))) {

binder_user_error("%d:%d ERROR: Thread waiting for process work before calling BC_REGISTER_LOOPER or BC_ENTER_LOOPER (state %x)\n",

proc->pid, thread->pid, thread->looper);

wait_event_interruptible(binder_user_error_wait,

binder_stop_on_user_error < 2);

}

binder_restore_priority(current, proc->default_priority);

}

if (non_block) {

if (!binder_has_work(thread, wait_for_proc_work))

ret = -EAGAIN;

} else {

ret = binder_wait_for_work(thread, wait_for_proc_work);

}

thread->looper &= ~BINDER_LOOPER_STATE_WAITING;

if (ret)

return ret;

while (1) {

uint32_t cmd;

struct binder_transaction_data_secctx tr;

struct binder_transaction_data *trd = &tr.transaction_data;

struct binder_work *w = NULL;

struct list_head *list = NULL;

struct binder_transaction *t = NULL;

struct binder_thread *t_from;

size_t trsize = sizeof(*trd);

binder_inner_proc_lock(proc);

if (!binder_worklist_empty_ilocked(&thread->todo))

list = &thread->todo;

else if (!binder_worklist_empty_ilocked(&proc->todo) &&

wait_for_proc_work)

list = &proc->todo;

else {

binder_inner_proc_unlock(proc);

/* no data added */

if (ptr - buffer == 4 && !thread->looper_need_return)

goto retry;

break;

}

if (end - ptr < sizeof(tr) + 4) {

binder_inner_proc_unlock(proc);

break;

}

w = binder_dequeue_work_head_ilocked(list);

switch (w->type) {

case BINDER_WORK_TRANSACTION: {

binder_inner_proc_unlock(proc);

t = container_of(w, struct binder_transaction, work);

} break;

case BINDER_WORK_RETURN_ERROR: {

struct binder_error *e = container_of(

w, struct binder_error, work);

WARN_ON(e->cmd == BR_OK);

binder_inner_proc_unlock(proc);

if (put_user(e->cmd, (uint32_t __user *)ptr))

return -EFAULT;

e->cmd = BR_OK;

ptr += sizeof(uint32_t);

binder_stat_br(proc, thread, cmd);

} break;

case BINDER_WORK_TRANSACTION_COMPLETE: {

binder_inner_proc_unlock(proc);

cmd = BR_TRANSACTION_COMPLETE;

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

binder_stat_br(proc, thread, cmd);

binder_debug(BINDER_DEBUG_TRANSACTION_COMPLETE,

"%d:%d BR_TRANSACTION_COMPLETE\n",

proc->pid, thread->pid);

kfree(w);

binder_stats_deleted(BINDER_STAT_TRANSACTION_COMPLETE);

} break;

case BINDER_WORK_NODE: {

struct binder_node *node = container_of(w, struct binder_node, work);

int strong, weak;

binder_uintptr_t node_ptr = node->ptr;

binder_uintptr_t node_cookie = node->cookie;

int node_debug_id = node->debug_id;

int has_weak_ref;

int has_strong_ref;

void __user *orig_ptr = ptr;

BUG_ON(proc != node->proc);

strong = node->internal_strong_refs ||

node->local_strong_refs;

weak = !hlist_empty(&node->refs) ||

node->local_weak_refs ||

node->tmp_refs || strong;

has_strong_ref = node->has_strong_ref;

has_weak_ref = node->has_weak_ref;

if (weak && !has_weak_ref) {

node->has_weak_ref = 1;

node->pending_weak_ref = 1;

node->local_weak_refs++;

}

if (strong && !has_strong_ref) {

node->has_strong_ref = 1;

node->pending_strong_ref = 1;

node->local_strong_refs++;

}

if (!strong && has_strong_ref)

node->has_strong_ref = 0;

if (!weak && has_weak_ref)

node->has_weak_ref = 0;

if (!weak && !strong) {

binder_debug(BINDER_DEBUG_INTERNAL_REFS,

"%d:%d node %d u%016llx c%016llx deleted\n",

proc->pid, thread->pid,

node_debug_id,

(u64)node_ptr,

(u64)node_cookie);

rb_erase(&node->rb_node, &proc->nodes);

binder_inner_proc_unlock(proc);

binder_node_lock(node);

/*

* Acquire the node lock before freeing the

* node to serialize with other threads that

* may have been holding the node lock while

* decrementing this node (avoids race where

* this thread frees while the other thread

* is unlocking the node after the final

* decrement)

*/

binder_node_unlock(node);

binder_free_node(node);

} else

binder_inner_proc_unlock(proc);

if (weak && !has_weak_ref)

ret = binder_put_node_cmd(

proc, thread, &ptr, node_ptr,

node_cookie, node_debug_id,

BR_INCREFS, "BR_INCREFS");

if (!ret && strong && !has_strong_ref)

ret = binder_put_node_cmd(

proc, thread, &ptr, node_ptr,

node_cookie, node_debug_id,

BR_ACQUIRE, "BR_ACQUIRE");

if (!ret && !strong && has_strong_ref)

ret = binder_put_node_cmd(

proc, thread, &ptr, node_ptr,

node_cookie, node_debug_id,

BR_RELEASE, "BR_RELEASE");

if (!ret && !weak && has_weak_ref)

ret = binder_put_node_cmd(

proc, thread, &ptr, node_ptr,

node_cookie, node_debug_id,

BR_DECREFS, "BR_DECREFS");

if (orig_ptr == ptr)

binder_debug(BINDER_DEBUG_INTERNAL_REFS,

"%d:%d node %d u%016llx c%016llx state unchanged\n",

proc->pid, thread->pid,

node_debug_id,

(u64)node_ptr,

(u64)node_cookie);

if (ret)

return ret;

} break;

case BINDER_WORK_DEAD_BINDER:

case BINDER_WORK_DEAD_BINDER_AND_CLEAR:

case BINDER_WORK_CLEAR_DEATH_NOTIFICATION: {

struct binder_ref_death *death;

uint32_t cmd;

binder_uintptr_t cookie;

death = container_of(w, struct binder_ref_death, work);

if (w->type == BINDER_WORK_CLEAR_DEATH_NOTIFICATION)

cmd = BR_CLEAR_DEATH_NOTIFICATION_DONE;

else

cmd = BR_DEAD_BINDER;

cookie = death->cookie;

binder_debug(BINDER_DEBUG_DEATH_NOTIFICATION,

"%d:%d %s %016llx\n",

proc->pid, thread->pid,

cmd == BR_DEAD_BINDER ?

"BR_DEAD_BINDER" :

"BR_CLEAR_DEATH_NOTIFICATION_DONE",

(u64)cookie);

if (w->type == BINDER_WORK_CLEAR_DEATH_NOTIFICATION) {

binder_inner_proc_unlock(proc);

kfree(death);

binder_stats_deleted(BINDER_STAT_DEATH);

} else {

binder_enqueue_work_ilocked(

w, &proc->delivered_death);

binder_inner_proc_unlock(proc);

}

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

if (put_user(cookie,

(binder_uintptr_t __user *)ptr))

return -EFAULT;

ptr += sizeof(binder_uintptr_t);

binder_stat_br(proc, thread, cmd);

if (cmd == BR_DEAD_BINDER)

goto done; /* DEAD_BINDER notifications can cause transactions */

} break;

}

		if (!t)
			continue;

		BUG_ON(t->buffer == NULL);
		if (t->buffer->target_node) {
			struct binder_node *target_node = t->buffer->target_node;
			struct binder_priority node_prio;

			trd->target.ptr = target_node->ptr;
			trd->cookie = target_node->cookie;
			node_prio.sched_policy = target_node->sched_policy;
			node_prio.prio = target_node->min_priority;
			binder_transaction_priority(current, t, node_prio,
						    target_node->inherit_rt);
			cmd = BR_TRANSACTION;
		} else {
			trd->target.ptr = 0;
			trd->cookie = 0;
			cmd = BR_REPLY;
		}
		trd->code = t->code;
		trd->flags = t->flags;
		trd->sender_euid = from_kuid(current_user_ns(), t->sender_euid);

		t_from = binder_get_txn_from(t);
		if (t_from) {
			struct task_struct *sender = t_from->proc->tsk;

			trd->sender_pid =
				task_tgid_nr_ns(sender,
						task_active_pid_ns(current));
		} else {
			trd->sender_pid = 0;
		}

		trd->data_size = t->buffer->data_size;
		trd->offsets_size = t->buffer->offsets_size;
		trd->data.ptr.buffer = (binder_uintptr_t)
			((uintptr_t)t->buffer->data +
			binder_alloc_get_user_buffer_offset(&proc->alloc));
		trd->data.ptr.offsets = trd->data.ptr.buffer +
					ALIGN(t->buffer->data_size,
					    sizeof(void *));

		tr.secctx = t->security_ctx;
		if (t->security_ctx) {
			cmd = BR_TRANSACTION_SEC_CTX;
			trsize = sizeof(tr);
		}
		if (put_user(cmd, (uint32_t __user *)ptr)) {
			if (t_from)
				binder_thread_dec_tmpref(t_from);

			binder_cleanup_transaction(t, "put_user failed",
						   BR_FAILED_REPLY);

			return -EFAULT;
		}
		ptr += sizeof(uint32_t);
		if (copy_to_user(ptr, &tr, trsize)) {
			if (t_from)
				binder_thread_dec_tmpref(t_from);

			binder_cleanup_transaction(t, "copy_to_user failed",
						   BR_FAILED_REPLY);

			return -EFAULT;
		}
		ptr += trsize;

		trace_binder_transaction_received(t);
		binder_stat_br(proc, thread, cmd);
		binder_debug(BINDER_DEBUG_TRANSACTION,
			     "%d:%d %s %d %d:%d, cmd %d size %zd-%zd ptr %016llx-%016llx\n",
			     proc->pid, thread->pid,
			     (cmd == BR_TRANSACTION) ? "BR_TRANSACTION" :
				(cmd == BR_TRANSACTION_SEC_CTX) ?
				     "BR_TRANSACTION_SEC_CTX" : "BR_REPLY",
			     t->debug_id, t_from ? t_from->proc->pid : 0,
			     t_from ? t_from->pid : 0, cmd,
			     t->buffer->data_size, t->buffer->offsets_size,
			     (u64)trd->data.ptr.buffer,
			     (u64)trd->data.ptr.offsets);

		if (t_from)
			binder_thread_dec_tmpref(t_from);
		t->buffer->allow_user_free = 1;
		if (cmd != BR_REPLY && !(t->flags & TF_ONE_WAY)) {
			binder_inner_proc_lock(thread->proc);
			t->to_parent = thread->transaction_stack;
			t->to_thread = thread;
			thread->transaction_stack = t;
			binder_inner_proc_unlock(thread->proc);
		} else {
			binder_free_transaction(t);
		}
		break;
	}

done:

	*consumed = ptr - buffer;
	binder_inner_proc_lock(proc);
	if (proc->requested_threads == 0 &&
	    list_empty(&thread->proc->waiting_threads) &&
	    proc->requested_threads_started < proc->max_threads &&
	    (thread->looper & (BINDER_LOOPER_STATE_REGISTERED |
	     BINDER_LOOPER_STATE_ENTERED)) /* the user-space code fails to */
	     /*spawn a new thread if we leave this out */) {
		proc->requested_threads++;
		binder_inner_proc_unlock(proc);
		binder_debug(BINDER_DEBUG_THREADS,
			     "%d:%d BR_SPAWN_LOOPER\n",
			     proc->pid, thread->pid);
		if (put_user(BR_SPAWN_LOOPER, (uint32_t __user *)buffer))
			return -EFAULT;
		binder_stat_br(proc, thread, BR_SPAWN_LOOPER);
	} else
		binder_inner_proc_unlock(proc);
	return 0;
}
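The listing above repeats one pattern several times:
- put_user() writes a 32-bit command at ptr;
- ptr advances past it;
- at the end, *consumed = ptr - buffer tells user space how many bytes are valid.

Below is a minimal user-space sketch of that pattern. It makes a few assumptions. A plain byte array stands in for the __user buffer. The command values (SKETCH_BR_NOOP, SKETCH_BR_TRANSACTION_COMPLETE) are made up and are not the real BR_* encodings. put_cmd and fill_read_buffer are hypothetical helpers, not driver functions. The sketch shows how a single read can end up holding BR_NOOP followed by BR_TRANSACTION_COMPLETE, which is the situation walked through next.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Made-up command values for illustration only; the real BR_* codes are
 * ioctl-style encodings defined in the binder UAPI header. */
enum {
    SKETCH_BR_NOOP = 1,
    SKETCH_BR_TRANSACTION_COMPLETE = 2
};

/* Mirrors buffer / ptr / end in binder_thread_read, minus the __user space. */
struct read_cursor {
    uint8_t *buffer; /* start of the read buffer supplied by user space */
    uint8_t *ptr;    /* next byte to write */
    uint8_t *end;    /* one past the last usable byte */
};

/* Like put_user(cmd, ptr); ptr += sizeof(uint32_t);
 * returns -1 (instead of -EFAULT) when the command does not fit. */
static int put_cmd(struct read_cursor *c, uint32_t cmd)
{
    if ((size_t)(c->end - c->ptr) < sizeof(cmd))
        return -1;
    memcpy(c->ptr, &cmd, sizeof(cmd));
    c->ptr += sizeof(cmd);
    return 0;
}

/* Writes a leading NOOP when nothing has been consumed yet, then one
 * command per queued work item, and returns the new consumed count
 * (the driver's *consumed = ptr - buffer). */
static size_t fill_read_buffer(uint8_t *buf, size_t size, size_t consumed,
                               const uint32_t *work_cmds, size_t n_work)
{
    struct read_cursor c = { buf, buf + consumed, buf + size };

    if (consumed == 0 && put_cmd(&c, SKETCH_BR_NOOP) != 0)
        return consumed;
    for (size_t i = 0; i < n_work; i++) {
        if (put_cmd(&c, work_cmds[i]) != 0)
            break; /* buffer full: stop, the remaining items stay queued */
    }
    return (size_t)(c.ptr - c.buffer);
}
```

With a 64-byte buffer and one queued transaction-complete item, fill_read_buffer reports 8 consumed bytes: the NOOP followed by the completion command.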

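binder_thread_read first writes a BR_NOOP command into the user-space read buffer. Then, in the while loop, thread->todo is not empty, so the following code runs: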

if (!binder_worklist_empty_ilocked(&thread->todo))
	list = &thread->todo;
/* ..... some code omitted ..... */
w = binder_dequeue_work_head_ilocked(list);
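The rule binder_thread_read uses to pick the list to dequeue from is worth spelling out, because the same check comes back when ServiceManager wakes up later in this walkthrough. thread->todo is always served first. proc->todo is consulted only when wait_for_proc_work is true. Here is a sketch of that selection. struct worklist and pick_worklist are hypothetical stand-ins that track only a length, not the driver's real list types.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Simplified stand-in for a binder worklist: only its length matters here. */
struct worklist { size_t count; };

/* Mirrors the selection in binder_thread_read: the thread's own todo list
 * wins; the process-wide list is used only if the thread may take process
 * work. Returns NULL when there is nothing to deliver ("no data added"). */
static struct worklist *pick_worklist(struct worklist *thread_todo,
                                      struct worklist *proc_todo,
                                      bool wait_for_proc_work)
{
    if (thread_todo->count > 0)
        return thread_todo;
    if (proc_todo->count > 0 && wait_for_proc_work)
        return proc_todo;
    return NULL;
}
```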

Here w->type is BINDER_WORK_TRANSACTION_COMPLETE, so the driver also writes a BR_TRANSACTION_COMPLETE to user space. Two commands have now been written in total. Control then returns to talkWithDriver in user space:

if (err >= NO_ERROR) {
    if (bwr.write_consumed > 0) {
        if (bwr.write_consumed < mOut.dataSize())
            mOut.remove(0, bwr.write_consumed);
        else {
            mOut.setDataSize(0);
            processPostWriteDerefs();
        }
    }
    if (bwr.read_consumed > 0) {
        mIn.setDataSize(bwr.read_consumed);
        mIn.setDataPosition(0);
    }
    // ...
}
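The bookkeeping in this branch can be modeled with a plain byte queue. This is a simplified sketch built on a few assumptions:
- struct byte_parcel and after_write_read are hypothetical stand-ins for Parcel and this branch;
- mOut.remove() is modeled as shifting the unsent tail to the front;
- processPostWriteDerefs() is left out.

I am not claiming the real Parcel implements partial removal this way.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for a Parcel used as a byte queue. */
struct byte_parcel {
    unsigned char data[256];
    size_t size;     /* dataSize() */
    size_t position; /* dataPosition() */
};

/* Models the post-ioctl bookkeeping in talkWithDriver:
 * - drop the bytes the driver consumed from the front of mOut;
 * - expose exactly read_consumed bytes of mIn and rewind to its start. */
static void after_write_read(struct byte_parcel *out, size_t write_consumed,
                             struct byte_parcel *in, size_t read_consumed)
{
    if (write_consumed > 0) {
        if (write_consumed < out->size) {
            /* models mOut.remove(0, write_consumed) */
            memmove(out->data, out->data + write_consumed,
                    out->size - write_consumed);
            out->size -= write_consumed;
        } else {
            out->size = 0; /* mOut.setDataSize(0) */
        }
    }
    if (read_consumed > 0) {
        in->size = read_consumed; /* mIn.setDataSize(read_consumed) */
        in->position = 0;         /* mIn.setDataPosition(0) */
    }
}
```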

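This removes the data the driver has already consumed from mOut and sets mIn's size to the amount of data just read. Control then returns to the caller, waitForResponse: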

cmd = (uint32_t)mIn.readInt32();

This reads the first command, BR_NOOP, which does nothing. The loop then calls talkWithDriver again:

const bool needRead = mIn.dataPosition() >= mIn.dataSize();
const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;
bwr.write_size = outAvail;
bwr.write_buffer = (uintptr_t)mOut.data();

// This is what we'll read.
if (doReceive && needRead) {
    bwr.read_size = mIn.dataCapacity();
    bwr.read_buffer = (uintptr_t)mIn.data();
} else {
    bwr.read_size = 0;
    bwr.read_buffer = 0;
}

IF_LOG_COMMANDS() {
    TextOutput::Bundle _b(alog);
    if (outAvail != 0) {
        alog << "Sending commands to driver: " << indent;
        const void* cmds = (const void*)bwr.write_buffer;
        const void* end = ((const uint8_t*)cmds)+bwr.write_size;
        alog << HexDump(cmds, bwr.write_size) << endl;
        while (cmds < end) cmds = printCommand(alog, cmds);
        alog << dedent;
    }
    alog << "Size of receive buffer: " << bwr.read_size
        << ", needRead: " << needRead << ", doReceive: " << doReceive << endl;
}

// Return immediately if there is nothing to do.
if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;
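The size computation above boils down to a small pure function, which makes it easy to check the two situations the walkthrough runs into next. Below is a sketch in which compute_bwr_sizes and struct bwr_sizes are hypothetical names and plain size_t values stand in for the mIn/mOut Parcels.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Sizes talkWithDriver would place into binder_write_read. */
struct bwr_sizes { size_t write_size; size_t read_size; };

/* Mirrors the computation at the top of talkWithDriver:
 * - needRead is true only when every byte already in mIn has been parsed;
 * - outgoing commands are sent only if we are not receiving, or if we also
 *   need fresh input (so leftover input is always drained first);
 * - the read buffer is offered only when receiving and input is needed. */
static struct bwr_sizes compute_bwr_sizes(size_t in_pos, size_t in_size,
                                          size_t in_capacity, size_t out_size,
                                          bool do_receive)
{
    const bool need_read = in_pos >= in_size;
    const size_t out_avail = (!do_receive || need_read) ? out_size : 0;
    struct bwr_sizes s;

    s.write_size = out_avail;
    s.read_size = (do_receive && need_read) ? in_capacity : 0;
    return s;
}
```

Suppose mIn still holds an unread BR_TRANSACTION_COMPLETE (position 4 of 8 bytes) and mOut is empty. Then both sizes come out 0, so talkWithDriver returns without calling ioctl. Once mIn is fully parsed, only the read size is non-zero.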

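At this point needRead is false, because mIn still holds the second command, BR_TRANSACTION_COMPLETE. With doReceive true and needRead false, outAvail is 0. So both bwr.write_size and bwr.read_size are 0, and the function returns immediately. Back in waitForResponse, the next command, BR_TRANSACTION_COMPLETE, is read: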

switch (cmd) {
case BR_TRANSACTION_COMPLETE:
    if (!reply && !acquireResult) goto finish;
    break;
......
}
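The per-command decision waitForResponse makes can be sketched as follows. Only the three commands discussed here are modeled, and the enum values are hypothetical tags, not the real BR_* codes. has_reply and has_acquire_result stand for the reply and acquireResult pointers being non-NULL. run_until_finish is a hypothetical helper: it returns how many commands were handled before the loop could finish, or 0 when waitForResponse would have to call talkWithDriver again.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical command tags; not the real BR_* encodings. */
enum sketch_cmd { CMD_NOOP, CMD_TRANSACTION_COMPLETE, CMD_REPLY };

/* Returns true if waitForResponse can stop after handling this command. */
static bool finishes(enum sketch_cmd cmd, bool has_reply, bool has_acquire_result)
{
    switch (cmd) {
    case CMD_TRANSACTION_COMPLETE:
        /* A call that expects no result is done once the driver accepted it;
         * a synchronous call must keep waiting for the reply. */
        return !has_reply && !has_acquire_result;
    case CMD_REPLY:
        return true; /* the reply data has arrived */
    case CMD_NOOP:
    default:
        return false; /* nothing to do; loop back into talkWithDriver */
    }
}

static size_t run_until_finish(const enum sketch_cmd *cmds, size_t n,
                               bool has_reply, bool has_acquire_result)
{
    for (size_t i = 0; i < n; i++)
        if (finishes(cmds[i], has_reply, has_acquire_result))
            return i + 1; /* commands handled, including the last one */
    return 0; /* not finished: another blocking read is needed */
}
```

For addService, reply is non-NULL, so the first read (NOOP, TRANSACTION_COMPLETE) does not finish the loop, and only a later read carrying the reply does.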

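Because reply is not NULL (addService is a synchronous call), BR_TRANSACTION_COMPLETE only breaks out of the switch, and the loop enters talkWithDriver once more. This time needRead is true. Since mOut was already cleared, outAvail is still 0, so bwr.write_size is 0 and bwr.read_size is greater than 0. talkWithDriver therefore calls: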

ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr)

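which lands in the binder_ioctl function of the Binder driver. Because bwr.write_size is 0 and bwr.read_size is not, the write step is skipped this time and the driver goes straight into binder_thread_read. The relevant part of the code is: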

wait_for_proc_work = binder_available_for_proc_work_ilocked(thread);
binder_inner_proc_unlock(proc);

thread->looper |= BINDER_LOOPER_STATE_WAITING;

trace_binder_wait_for_work(wait_for_proc_work,
			   !!thread->transaction_stack,
			   !binder_worklist_empty(proc, &thread->todo));
if (wait_for_proc_work) {
	if (!(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |
				BINDER_LOOPER_STATE_ENTERED))) {
		binder_user_error("%d:%d ERROR: Thread waiting for process work before calling BC_REGISTER_LOOPER or BC_ENTER_LOOPER (state %x)\n",
			proc->pid, thread->pid, thread->looper);
		wait_event_interruptible(binder_user_error_wait,
					 binder_stop_on_user_error < 2);
	}
	binder_restore_priority(current, proc->default_priority);
}
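wait_for_proc_work decides whether a thread may take work from proc->todo. As far as I can tell from the driver version quoted here, binder_available_for_proc_work_ilocked() returns true only for a thread that meets three conditions:
- nothing is on its transaction_stack;
- its thread->todo is empty;
- it has registered as a looper.

Below is a sketch of that predicate. The flag values, struct thread_state and available_for_proc_work are hypothetical simplifications, not the driver's definitions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical flag values standing in for BINDER_LOOPER_STATE_*. */
enum {
    LOOPER_REGISTERED = 0x01,
    LOOPER_ENTERED    = 0x02
};

struct thread_state {
    const void *transaction_stack; /* non-NULL while a sync call is in flight */
    size_t todo_count;             /* items queued on thread->todo */
    unsigned looper;               /* looper state flags */
};

/* A thread may take process-wide work only if it has no transaction in
 * flight, nothing queued on its own todo list, and has registered as a
 * looper (BC_ENTER_LOOPER / BC_REGISTER_LOOPER). */
static bool available_for_proc_work(const struct thread_state *t)
{
    return t->transaction_stack == NULL &&
           t->todo_count == 0 &&
           (t->looper & (LOOPER_REGISTERED | LOOPER_ENTERED)) != 0;
}
```

Under this rule, a client thread that has just sent a synchronous transaction (non-NULL transaction_stack) is not available for process work. An idle looper thread, such as ServiceManager's main thread, is.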

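Here wait_for_proc_work is actually false. The calling thread has just pushed its synchronous transaction onto thread->transaction_stack, and binder_available_for_proc_work_ilocked() only returns true for a looper thread with no transaction in flight and an empty thread->todo. The wait_event_interruptible() call above is only the error path for a thread that waits for process work without having sent BC_REGISTER_LOOPER or BC_ENTER_LOOPER. The client thread actually goes to sleep a little further down, in binder_wait_for_work(), waiting on its own thread->todo until ServiceManager's reply wakes it up.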

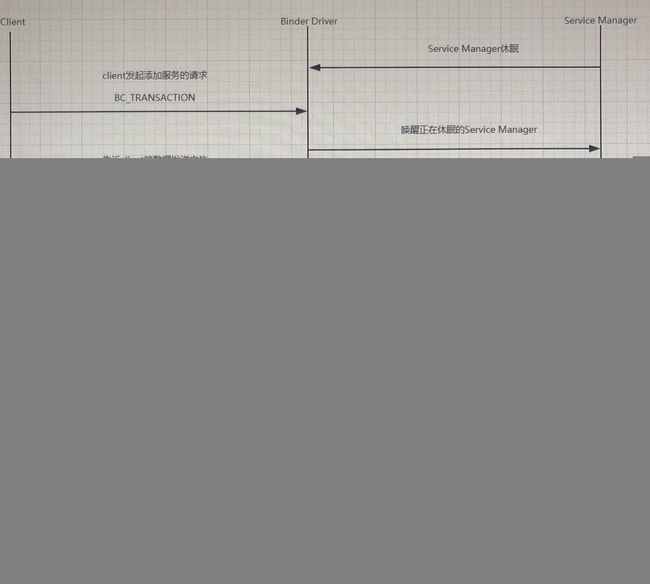

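Now let's go back to how ServiceManager is woken up. To state the conclusion first: ServiceManager was asleep at this point. Once it is woken as described above, it continues executing binder_thread_read. The relevant code is: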

if (!binder_worklist_empty_ilocked(&thread->todo))
	list = &thread->todo;
else if (!binder_worklist_empty_ilocked(&proc->todo) &&
	   wait_for_proc_work)
	list = &proc->todo;
else {
	binder_inner_proc_unlock(proc);

	/* no data added */