Learning Kubernetes: Building the Guestbook System



Setting up the master node

Deployment environment

- Two Ubuntu 16.04 virtual machines

- The USTC mirror (a mirror inside mainland China) as the package source

Install the Docker environment (https://blog.iwnweb.com/环境/Installing-docker-environment/)

sudo mkdir /etc/docker

sudo mkdir -p /data/docker

Install the packages that let apt use repositories over HTTPS

sudo apt-get install -y apt-transport-https ca-certificates curl software-properties-common

Add Docker's official GPG key

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

Add the stable docker-ce repository and the Kubernetes repository

sudo add-apt-repository "deb [arch=amd64] https://mirrors.ustc.edu.cn/docker-ce/linux/$(. /etc/os-release; echo "$ID") $(lsb_release -cs) stable"

echo "deb [arch=amd64] https://mirrors.ustc.edu.cn/kubernetes/apt kubernetes-xenial main" | sudo tee -a /etc/apt/sources.list

sudo apt-get update

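⚠ apt-get update may fail with a GPG error (NO_PUBKEY). The fix below follows this article: https://blog.csdn.net/suresand/article/details/82321453

Note: E084DAB9 is the last eight hex digits of the key ID given in the NO_PUBKEY message. Use the ID from your own error.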

gpg --keyserver keyserver.ubuntu.com --recv-keys E084DAB9

gpg --export --armor E084DAB9 | sudo apt-key add -

sudo apt-get update
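If apt reports several missing keys, you can extract the IDs from its output instead of copying them by hand. Below is a minimal sketch. It assumes apt prints each missing key as `NO_PUBKEY <hex key ID>`. `missing_key_ids` is a hypothetical helper name. It keeps the last eight hex digits to match the short ID form used above.

```shell
# Hypothetical helper: read `apt-get update` output on stdin and print the
# last eight hex digits of every key ID reported as NO_PUBKEY, without repeats.
missing_key_ids() {
  grep -oE 'NO_PUBKEY [0-9A-F]+' |
    awk '{ print substr($2, length($2) - 7) }' |
    sort -u
}

# Usage sketch (same gpg/apt-key steps as above, once per missing key):
#   sudo apt-get update 2>&1 | missing_key_ids | while read -r key; do
#     gpg --keyserver keyserver.ubuntu.com --recv-keys "$key"
#     gpg --export --armor "$key" | sudo apt-key add -
#   done
```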

Install docker-ce

- Install docker-ce version 18.06.0

sudo apt-cache madison docker-ce  # list the available docker-ce versions

sudo apt install docker-ce=18.06.0~ce~3-0~ubuntu -y

- Verify that docker-ce is installed correctly by running the hello-world image

sudo docker run hello-world

sudo docker version

sudo docker images

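- Add the current user to the docker group (this takes effect after you log out and back in)

sudo groupadd docker  # create the docker group (it may already exist)

sudo gpasswd -a "$USER" docker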
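The kubeadm init output further below warns that Docker 18.09.5 is not a validated version (the latest validated one is 18.06). This suggests docker-ce was upgraded after the pinned install. A small check like the following sketch can catch that. `docker_version_ok` is a hypothetical helper. Treating only the 18.06 line as acceptable is an assumption taken from that warning. `apt-mark hold` keeps apt from upgrading the package later.

```shell
# Hypothetical helper: succeed only for Docker versions in the 18.06 line,
# the latest version kubeadm v1.13 reports as validated (per its init warning).
docker_version_ok() {
  # $1 is a version string such as "18.06.0-ce" or "18.09.5"
  case "$1" in
    18.06.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage sketch on a host with a running Docker daemon:
#   v=$(sudo docker version --format '{{.Server.Version}}')
#   docker_version_ok "$v" || echo "Docker $v is not 18.06.x"
#   sudo apt-mark hold docker-ce   # stop apt upgrades from replacing it
```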

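Install kubeadm, kubelet and kubectl

kubeadm: the command-line tool that bootstraps a Kubernetes cluster.

kubelet: the component that runs on every machine in the cluster. It carries out operations such as starting pods and containers.

kubectl: the command-line tool for operating a running cluster.

- Download kube_apt_key.gpg from https://raw.githubusercontent.com/EagleChen/kubernetes_init/master/kube_apt_key.gpg into the current working directory, then add it: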

wget https://raw.githubusercontent.com/EagleChen/kubernetes_init/master/kube_apt_key.gpg

cat kube_apt_key.gpg | sudo apt-key add -

sudo apt-get update

- List the kubeadm versions available in the mirror

sudo apt-cache madison kubeadm

- Install kubeadm, kubelet and kubectl

sudo apt-get install -y kubernetes-cni=0.6.0-00 kubelet=1.13.4-00 kubeadm=1.13.4-00 kubectl=1.13.4-00 --allow-unauthenticated

⚠ kubernetes-cni must be pinned as well. Without it, apt fails with: kubelet : Depends: kubernetes-cni (= 0.6.0) but 0.7.5-00 is to be installed
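To keep kubelet, kubeadm and kubectl on the same release, you can generate the pinned package list from one version string. This is a sketch using a hypothetical helper, `k8s_pkg_args`. The `-00` Debian revision suffix is assumed to match the mirror, as in the command above.

```shell
# Hypothetical helper: print a pinned apt package list so that kubelet,
# kubeadm and kubectl are always installed at the same Kubernetes version.
k8s_pkg_args() {
  local k8s_version=$1   # e.g. 1.13.4
  local cni_version=$2   # e.g. 0.6.0
  local pkg
  printf 'kubernetes-cni=%s-00' "$cni_version"
  for pkg in kubelet kubeadm kubectl; do
    printf ' %s=%s-00' "$pkg" "$k8s_version"
  done
  printf '\n'
}

# Usage sketch: install, then hold the packages so `apt-get upgrade` skips them
#   sudo apt-get install -y $(k8s_pkg_args 1.13.4 0.6.0) --allow-unauthenticated
#   sudo apt-mark hold kubernetes-cni kubelet kubeadm kubectl
```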

- List the images required by this kubeadm version, then pull them

sudo kubeadm config images list

sudo vim pull-images.sh

Contents of pull-images.sh:

#!/bin/sh

set -e

echo ""

echo "=========================================================="

echo "Pull Kubernetes v1.13.4 Images from aliyuncs.com ......"

echo "=========================================================="

echo ""

MY_REGISTRY=registry.cn-hangzhou.aliyuncs.com/openthings

## Pull the images from the mirror

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-apiserver:v1.13.4

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-controller-manager:v1.13.4

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-scheduler:v1.13.4

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-proxy:v1.13.4

docker pull ${MY_REGISTRY}/k8s-gcr-io-etcd:3.2.24

docker pull ${MY_REGISTRY}/k8s-gcr-io-pause:3.1

docker pull ${MY_REGISTRY}/k8s-gcr-io-coredns:1.2.6

## Retag them with the k8s.gcr.io names that kubeadm expects

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-apiserver:v1.13.4 k8s.gcr.io/kube-apiserver:v1.13.4

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-scheduler:v1.13.4 k8s.gcr.io/kube-scheduler:v1.13.4

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-controller-manager:v1.13.4 k8s.gcr.io/kube-controller-manager:v1.13.4

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-proxy:v1.13.4 k8s.gcr.io/kube-proxy:v1.13.4

docker tag ${MY_REGISTRY}/k8s-gcr-io-etcd:3.2.24 k8s.gcr.io/etcd:3.2.24

docker tag ${MY_REGISTRY}/k8s-gcr-io-pause:3.1 k8s.gcr.io/pause:3.1

docker tag ${MY_REGISTRY}/k8s-gcr-io-coredns:1.2.6 k8s.gcr.io/coredns:1.2.6

echo ""

echo "=========================================================="

echo "Pull Kubernetes v1.13.4 Images FINISHED."

echo "into registry.cn-hangzhou.aliyuncs.com/openthings, "

echo " by openthings@https://my.oschina.net/u/2306127."

echo "=========================================================="

echo ""

- Run the script to pull and retag the images, then check them:

sudo sh pull-images.sh

sudo docker images
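pull-images.sh repeats the same pull-and-tag pair for every image, so it can also be written as a loop. This sketch keeps the mirror and image list from the script. `K8S_IMAGES` and `pull_and_tag` are hypothetical names. Adjust the list to whatever `kubeadm config images list` prints for your version.

```shell
# Mirror and image list copied from pull-images.sh above.
MY_REGISTRY=registry.cn-hangzhou.aliyuncs.com/openthings
K8S_IMAGES="kube-apiserver:v1.13.4 kube-controller-manager:v1.13.4
kube-scheduler:v1.13.4 kube-proxy:v1.13.4 etcd:3.2.24 pause:3.1 coredns:1.2.6"

# Hypothetical helper: pull each image from the mirror (where it is named
# k8s-gcr-io-<image>) and retag it as k8s.gcr.io/<image> for kubeadm.
pull_and_tag() {
  local img
  for img in $K8S_IMAGES; do
    docker pull "${MY_REGISTRY}/k8s-gcr-io-${img}" || return 1
    docker tag "${MY_REGISTRY}/k8s-gcr-io-${img}" "k8s.gcr.io/${img}" || return 1
  done
}

# Usage sketch: save this plus a final `pull_and_tag` line to a file, run it
# with `sudo sh <file>`, then check the result with `sudo docker images`.
```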

Disable swap

Swap must be disabled for the kubelet to work properly:

sudo swapoff -a

On some operating systems, traffic bypasses iptables and is routed incorrectly. Make sure net.bridge.bridge-nf-call-iptables is set to 1 in the sysctl configuration (run this on all nodes):

sudo sysctl net.bridge.bridge-nf-call-iptables=1
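Both settings above only last until the next reboot. Below is a sketch of one way to persist them. It assumes a standard /etc/fstab layout, with the filesystem type in the third field. `comment_out_swap` is a hypothetical helper, and the file name k8s.conf in the usage comments is an arbitrary choice. The br_netfilter module must be loaded for the bridge-nf-call-iptables key to exist.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style
# file (filesystem type "swap" in field 3) so swap stays off after a reboot.
comment_out_swap() {
  local fstab=${1:-/etc/fstab}
  local tmp status=0
  tmp=$(mktemp) || return 1
  # Skip lines that are already comments; prefix '#' to active swap lines.
  awk '!/^[ \t]*#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$fstab" > "$tmp" &&
    cat "$tmp" > "$fstab" || status=1   # overwrite in place, keeping permissions
  rm -f "$tmp"
  return "$status"
}

# Usage sketch (run as root; k8s.conf is an arbitrary file name):
#   comment_out_swap /etc/fstab
#   modprobe br_netfilter
#   echo br_netfilter > /etc/modules-load.d/k8s.conf
#   echo 'net.bridge.bridge-nf-call-iptables = 1' > /etc/sysctl.d/k8s.conf
#   sysctl --system
```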

Edit the kubelet's systemd drop-in file, then reload systemd and start the kubelet:

sudo vi /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

sudo systemctl daemon-reload

sudo systemctl start kubelet

Contents of 10-kubeadm.conf:

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

#This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

#This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use

#the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.

EnvironmentFile=-/etc/sysconfig/kubelet

#Environment="KUBELET_EXTRA_ARGS=--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"

Environment="KUBELET_SYSTEM_PODS_ARGS=--pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true --fail-swap-on=false"

#Environment="KUBELET_NETWORK_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/ --cni-bin-dir=/opt/cni/bin"

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS $KUBELET_SYSTEM_PODS_ARGS

Initialize the master node

sudo kubeadm init --ignore-preflight-errors=Swap --pod-network-cidr 10.244.0.0/16

Check the kubelet status on the master node:

sudo systemctl status kubelet

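The kubelet may now report: Unable to update cni config: No networks found in /etc/cni/net.d. (This message also appears normally until a pod network such as flannel has been deployed.)

Following this post, http://windgreen.me/2018/05/16/unable-to-update-cni-config-no-networks-found-in-etccninet-d/ , check the /etc/cni/net.d/ directory. If it is empty, pull the flannel:v0.10.0-amd64 image: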

sudo docker pull quay.io/coreos/flannel:v0.10.0-amd64

Then run everything again, starting from kubeadm reset:

sudo kubeadm reset

sudo systemctl daemon-reload

sudo systemctl start kubelet

sudo kubeadm init --ignore-preflight-errors=Swap --pod-network-cidr 10.244.0.0/16

sudo systemctl status kubelet

The output of kubeadm init looks like this:

I0704 19:27:52.713123 53823 version.go:94] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

I0704 19:27:52.713345 53823 version.go:95] falling back to the local client version: v1.13.4

[init] Using Kubernetes version: v1.13.4

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 18.09.5. Latest validated version: 18.06

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [ubuntu localhost] and IPs [10.2.25.129 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [ubuntu localhost] and IPs [10.2.25.129 127.0.0.1 ::1]

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [ubuntu kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.2.25.129]

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 30.503715 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.13" in namespace kube-system with the configuration for the kubelets in the cluster

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "ubuntu" as an annotation

[mark-control-plane] Marking the node ubuntu as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node ubuntu as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: nfp5k8.5v2hmi9wer1ztoc8

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 10.2.25.129:6443 --token nfp5k8.5v2hmi9wer1ztoc8 --discovery-token-ca-cert-hash sha256:16876d1d873c287b89ca14d46aaef41f7fb50f4742b8d590d3ad3cc29e299b4a

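If kubectl fails with "The connection to the server 172.16.196.2:8443 was refused - did you specify the right host or port?", the three steps below were skipped. They are required because they give the regular user a copy of the admin kubeconfig: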

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

If port 10250 and other ports are already in use, init fails with:

I0704 16:47:07.124473 93279 version.go:94] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

I0704 16:47:07.124652 93279 version.go:95] falling back to the local client version: v1.13.4

[init] Using Kubernetes version: v1.13.4

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 18.09.5. Latest validated version: 18.06

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Port-6443]: Port 6443 is in use

[ERROR Port-10251]: Port 10251 is in use

[ERROR Port-10252]: Port 10252 is in use

[ERROR FileAvailable--etc-kubernetes-manifests-kube-apiserver.yaml]: /etc/kubernetes/manifests/kube-apiserver.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-controller-manager.yaml]: /etc/kubernetes/manifests/kube-controller-manager.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-scheduler.yaml]: /etc/kubernetes/manifests/kube-scheduler.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-etcd.yaml]: /etc/kubernetes/manifests/etcd.yaml already exists

[ERROR Port-10250]: Port 10250 is in use

[ERROR Port-2379]: Port 2379 is in use

[ERROR Port-2380]: Port 2380 is in use

[ERROR DirAvailable--var-lib-etcd]: /var/lib/etcd is not empty

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

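The errors about existing manifests and a non-empty /var/lib/etcd mean that an earlier kubeadm init left its components behind. Running sudo kubeadm reset, as in the retry sequence above, removes them. If a port is held by some other process, find its PID and kill it: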

sudo netstat -antup

sudo kill -s 9 [PID]
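You can script picking the PID out of the full netstat listing. This sketch assumes the column layout of `netstat -ltnp`: the local address is in column 4 and the PID/program is in column 7. `listening_pids` is a hypothetical helper.

```shell
# Hypothetical helper: read `netstat -ltnp` output on stdin and print the PIDs
# listening on TCP port $1 (column 4 = local address, column 7 = PID/program).
listening_pids() {
  awk -v port="$1" '$4 ~ (":" port "$") {
    split($7, a, "/")
    if (a[1] ~ /^[0-9]+$/) print a[1]   # skip "-" when the PID is hidden
  }' | sort -u
}

# Usage sketch: kill whatever still holds the kubelet port
#   sudo netstat -ltnp | listening_pids 10250 | xargs -r sudo kill -9
```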

Deploy the flannel network

sudo kubectl create -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Check the pod status (both commands give similar output; -o wide adds the IP and NODE columns):

sudo kubectl get pods --all-namespaces

sudo kubectl get pod -n kube-system -o wide

Output of the second command:

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-86c58d9df4-ctz4h 1/1 Running 0 5m57s 10.244.0.14 ubuntu

coredns-86c58d9df4-dpk6h 1/1 Running 0 5m56s 10.244.0.13 ubuntu

etcd-ubuntu 1/1 Running 0 5m16s 10.2.25.129 ubuntu

kube-apiserver-ubuntu 1/1 Running 0 5m14s 10.2.25.129 ubuntu

kube-controller-manager-ubuntu 1/1 Running 0 5m1s 10.2.25.129 ubuntu

kube-flannel-ds-amd64-65675 1/1 Running 0 5m27s 10.2.25.129 ubuntu

kube-proxy-cffw7 1/1 Running 0 5m56s 10.2.25.129 ubuntu

kube-scheduler-ubuntu 1/1 Running 0 5m2s 10.2.25.129 ubuntu


If coredns is stuck in CrashLoopBackOff, as shown here:

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-86c58d9df4-9nbpf 0/1 CrashLoopBackOff 1 3m18s 10.244.0.7 ubuntu

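then delete /etc/resolv.conf and create a new file with the same name containing the lines below. A common cause is a resolv.conf that lists a local resolver such as 127.0.1.1. Inside the CoreDNS pod, that address points back at CoreDNS itself, so its loop check detects a forwarding loop and the process exits.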

nameserver 8.8.8.8

nameserver 8.8.4.4
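Before replacing /etc/resolv.conf, it can help to check whether the file lists a loopback resolver. A loopback resolver is one common trigger for the CoreDNS crash loop. This is a sketch using a hypothetical helper, `loopback_nameservers`. It assumes the usual `nameserver <address>` line format.

```shell
# Hypothetical helper: print nameserver addresses in a resolv.conf-style file
# that are loopback addresses (127.x.x.x or ::1).
loopback_nameservers() {
  awk '$1 == "nameserver" && ($2 ~ /^127\./ || $2 == "::1") { print $2 }' \
    "${1:-/etc/resolv.conf}"
}

# Usage sketch: only rewrite resolv.conf if a loopback resolver is listed
#   [ -n "$(loopback_nameservers)" ] && echo "loopback resolver found"
```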


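Setting up the worker node

On the node, run the same steps as on the master up to, but not including, kubeadm init.

Add an environment variable to 10-kubeadm.conf that points the kubelet at the pause image on the Aliyun mirror: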

sudo sed -i "s,ExecStart=$,Environment=\"KUBELET_EXTRA_ARGS=--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1\"\nExecStart=,g" /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

sudo systemctl daemon-reload

sudo systemctl restart kubelet

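Join the node to the cluster as root, using the command printed by kubeadm init on the master. The bootstrap token expires after 24 hours by default. If it has expired, run sudo kubeadm token create --print-join-command on the master to get a new join command.

sudo kubeadm join 10.2.25.129:6443 --token nfp5k8.5v2hmi9wer1ztoc8 --discovery-token-ca-cert-hash sha256:16876d1d873c287b89ca14d46aaef41f7fb50f4742b8d590d3ad3cc29e299b4a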

Check the node status (on the master):

sudo kubectl get nodes

Output:

NAME STATUS ROLES AGE VERSION

sxq-virtual-machine Ready 2d2h v1.13.4

ubuntu Ready master 2d2h v1.13.4
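When scripting the join, you can poll the STATUS column until every node reports Ready. This is a sketch using a hypothetical helper, `not_ready_nodes`. It assumes the default `kubectl get nodes` column order shown above, with NAME first and STATUS second.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# names of nodes whose STATUS column is anything other than exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage sketch on the master: poll every 5 s until all nodes are Ready
# (no timeout here; an empty or failed kubectl call also ends the loop)
#   while [ -n "$(sudo kubectl get nodes | not_ready_nodes)" ]; do sleep 5; done
```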

Building the Guestbook system (from the book 《Kubernetes权威指南》, "The Definitive Guide to Kubernetes")

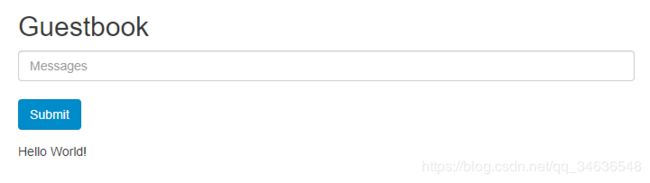

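The system is a distributed web application built on PHP and Redis. The PHP frontend website queries and adds messages by talking to Redis on the backend.

- redis-master: the Redis service that the frontend writes messages to.

- guestbook-redis-slave: the Redis service that the frontend reads messages from. Its data is kept in sync with redis-master.

- guestbook-php-frontend: the PHP web service that displays the messages on a web page.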

Create the RC and Service (svc) files

frontend-controller.yaml:

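- For a set request (submitting a message), the frontend connects to the redis-master service to write the data. It obtains the virtual IP of redis-master from environment variables.

- For a get request, the frontend connects to the redis-slave service to read the data.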

apiVersion: v1
kind: ReplicationController
metadata:
  name: frontend
  labels:
    name: frontend
spec:
  replicas: 3
  selector:
    name: frontend
  template:
    metadata:
      labels:
        name: frontend
    spec:
      containers:
      - name: frontend
        image: registry.fjhb.cn/guestbook-php-frontend  # container image name
        env:
        - name: GET_HOSTS_FROM
          value: env
        ports:
        - containerPort: 80

frontend-service.yaml:

This file sets nodePort, which maps the Service to a port that can be reached from outside the cluster. External clients can then access the service at NodeIP:NodePort.

apiVersion: v1
kind: Service
metadata:
  name: frontend
  labels:
    name: frontend
spec:
  type: NodePort
  ports:
  - port: 80
    nodePort: 30011
  selector:
    name: frontend
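By default, the API server only accepts nodePort values in the range 30000-32767, as set by its --service-node-port-range flag. A Service with a value outside that range is rejected when it is created. Here is a small sketch of that check. `valid_node_port` is a hypothetical helper. A cluster started with a custom range would need different bounds.

```shell
# Hypothetical helper: succeed if $1 is a port number inside the API server's
# default --service-node-port-range of 30000-32767.
valid_node_port() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Usage sketch: once the frontend pods are running, the guestbook should answer
# on any node's IP at that port, e.g. the master from the init output above:
#   valid_node_port 30011 && curl http://10.2.25.129:30011/
```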

Redis master runs one instance, which handles writes (adding messages).

Redis's own replication mechanism keeps the data on the master and slaves in sync.

redis-master-controller.yaml:

apiVersion: v1
kind: ReplicationController
metadata:
  name: redis-master
  labels:
    name: redis-master
spec:
  replicas: 1
  selector:
    name: redis-master
  template:
    metadata:
      labels:
        name: redis-master  # must match the name in spec.selector
    spec:
      containers:
      - name: master
        image: docker.io/kubeguide/redis-master
        ports:
        - containerPort: 6379

redis-master-service.yaml:

apiVersion: v1
kind: Service
metadata:
  name: redis-master
  labels:
    name: redis-master
spec:
  ports:
  - port: 6379
    targetPort: 6379
  selector:
    name: redis-master

Redis slave runs two instances, which handle reads (reading messages).

redis-slave-controller.yaml:

apiVersion: v1
kind: ReplicationController
metadata:
  name: redis-slave
  labels:
    name: redis-slave
spec:
  replicas: 2
  selector:
    name: redis-slave
  template:
    metadata:
      labels:
        name: redis-slave
    spec:
      containers:
      - name: slave
        image: kubeguide/guestbook-redis-slave
        env:
        - name: GET_HOSTS_FROM
          value: env
        ports:
        - containerPort: 6379

redis-slave-service.yaml:

apiVersion: v1
kind: Service
metadata:
  name: redis-slave
  labels:
    name: redis-slave
spec:
  ports:
  - port: 6379
  selector:
    name: redis-slave

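Create the resources in the Kubernetes cluster. With GET_HOSTS_FROM=env, pods read service addresses from environment variables. Kubernetes injects these variables only for Services that already exist when a pod is created. So the redis-master Service must exist before the slave pods, and both Redis Services must exist before the frontend pods: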

sudo kubectl create -f kubernetes/redis-master-controller.yaml

sudo kubectl create -f kubernetes/redis-master-service.yaml

sudo kubectl create -f kubernetes/redis-slave-controller.yaml

sudo kubectl create -f kubernetes/redis-slave-service.yaml

sudo kubectl create -f kubernetes/frontend-controller.yaml

sudo kubectl create -f kubernetes/frontend-service.yaml
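You can also run the create commands as a loop that keeps the order fixed and stops at the first failure. This sketch assumes the YAML files sit in a kubernetes/ directory, as in the commands above. `deploy_guestbook` is a hypothetical name.

```shell
# Hypothetical helper: create the Guestbook objects in a fixed order and stop
# at the first failure. The redis-master Service is created before the slave
# pods, and both Redis Services before the frontend pods, since those pods
# read the service addresses from environment variables.
deploy_guestbook() {
  local dir=${1:-kubernetes}
  local f
  for f in redis-master-controller redis-master-service \
           redis-slave-controller redis-slave-service \
           frontend-controller frontend-service; do
    kubectl create -f "$dir/$f.yaml" || return 1
  done
}

# Usage sketch: deploy_guestbook kubernetes
```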

List the RCs and Services you just created:

sudo kubectl get rc

sudo kubectl get svc

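Check the pod status

The RC appends a short random suffix to the name set by the user for each Pod, so the instances can be told apart. At first, pods show Pending for a while: each one has to be scheduled onto a node, and the node has to pull its image.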

sudo kubectl get pods

NAME READY STATUS RESTARTS AGE

frontend-5fhst 1/1 Running 0 30h

frontend-cw8fg 1/1 Running 0 30h

frontend-dks6n 1/1 Running 0 30h

redis-master-5dlsp 1/1 Running 0 30h

redis-slave-24rfm 1/1 Running 0 30h

redis-slave-6jfsd 1/1 Running 0 30h

If a pod is not running properly, inspect its state and recent events with:

sudo kubectl describe pod [pod_name]
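When there are many pods, you can pick out the ones that need `kubectl describe` automatically. This is a sketch using a hypothetical helper, `unhealthy_pods`. It assumes plain `kubectl get pods` output, without --all-namespaces, which would add a NAMESPACE column first.

```shell
# Hypothetical helper: read plain `kubectl get pods` output on stdin and print
# pods whose STATUS is not Running or whose READY count is incomplete (0/1).
unhealthy_pods() {
  awk 'NR > 1 {
    split($2, r, "/")
    if ($3 != "Running" || r[1] != r[2]) print $1
  }'
}

# Usage sketch: describe every problem pod in the default namespace
#   sudo kubectl get pods | unhealthy_pods | xargs -r -n1 sudo kubectl describe pod
```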