Installing Kubernetes 1.13.0 with kubeadm (CentOS 7.3, online installation of docker, kubeadm, kubectl, kubelet, dashboard)

Reference: Installing Kubernetes 1.10.1 with kubeadm (CentOS 7.3, offline installation of docker, kubeadm, kubectl, kubelet, dashboard)

Link: https://pan.baidu.com/s/1b65YnQvQcmNtNflJvzvifQ Password: di5a

Environment: three CentOS 7.3 virtual machines

10.10.31.202 k8s-master

10.10.31.203 k8s-node1

10.10.31.204 k8s-node2

### Be sure to configure the hosts file

# Configure hosts

cat > /etc/hosts << EOF

127.0.0.1 localhost

10.10.31.202 k8s-master

10.10.31.203 k8s-node1

10.10.31.204 k8s-node2

EOF

Environment setup:

1. The system is CentOS 7.3

# uname -a shows the kernel version

[root@test01 /]# uname -a

Linux test01.szy.local 3.10.0-514.el7.x86_64 #1 SMP Tue Nov 22 16:42:41 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux

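2. Disable the firewall, SELinux, and swap (on all nodes)

setenforce 0 # temporarily puts SELinux into permissive mode; the setting is lost after a reboot

swapoff -a # kubelet needs swap turned off to run correctly

systemctl stop firewalld

systemctl disable firewalld # disable the firewall

Output: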

[root@szy-k8s-master ~]# systemctl stop firewalld && systemctl disable firewalld

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

Removed symlink /etc/systemd/system/basic.target.wants/firewalld.service.

[root@szy-k8s-master ~]# swapoff -a

[root@szy-k8s-master ~]# setenforce 0

[root@szy-k8s-master ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config # make the change permanent; requires a reboot

[root@szy-k8s-master ~]# /usr/sbin/sestatus # check the SELinux status

SELinux status: enabled

SELinuxfs mount: /sys/fs/selinux

SELinux root directory: /etc/selinux

Loaded policy name: targeted

Current mode: permissive

Mode from config file: disabled

Policy MLS status: enabled

Policy deny_unknown status: allowed

Max kernel policy version: 28
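Like setenforce 0, swapoff -a only lasts until the next reboot. The sketch below shows one way to keep swap off permanently by commenting out the swap entries in an fstab-style file. The function name comment_out_swap is made up for this illustration, and the demo runs on a throwaway sample file rather than the real /etc/fstab.

```shell
#!/bin/sh
# Sketch: comment out every active swap entry in an fstab-style file.
# comment_out_swap is a hypothetical helper name, not part of any tool.

comment_out_swap() {
  # $1: path to the fstab-style file to edit in place
  fstab="$1"
  tmp="$(mktemp)"
  # Prefix uncommented lines that have a whitespace-delimited "swap" field with "#".
  sed -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab" > "$tmp" \
    && cat "$tmp" > "$fstab"
  rm -f "$tmp"
}

# Demo on a sample file that mimics a typical CentOS 7 fstab.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
/dev/mapper/centos-root /    xfs  defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
EOF
comment_out_swap "$sample"
cat "$sample"
rm -f "$sample"
```

On a real node, back up /etc/fstab first and then call comment_out_swap /etc/fstab as root.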

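Component installation:

1. Installing docker. This step often goes wrong, for example when the docker version is not compatible with the system.

Install docker on every node, either with yum as shown here or from the docker-packages.tar file.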

yum install -y docker

systemctl enable docker && systemctl start docker

I used the second method:

Link: https://pan.baidu.com/s/1nV_lOOJhNJpqGBq9heNWug Password: zkfr

tar -xvf docker-packages.tar

cd docker-packages

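rpm -Uvh *.rpm # or: yum localinstall -y *.rpm

docker version # check the version once the installation has finished

Note: if the docker installation fails, reinstall as follows:

yum remove docker

yum remove docker-selinux

If that does not remove everything, do the following:

1. # List the docker packages that are still installed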

[root@szy-k8s-node2 docker-packages]# rpm -qa|grep docker

docker-common-1.13.1-63.git94f4240.el7.centos.x86_64

docker-client-1.13.1-63.git94f4240.el7.centos.x86_64

2. Remove each of them

yum -y remove docker-common-1.13.1-63.git94f4240.el7.centos.x86_64

yum -y remove docker-client-1.13.1-63.git94f4240.el7.centos.x86_64

3. Delete the docker images and data

rm -rf /var/lib/docker

Then install docker again.

Run docker info and note the Cgroup Driver:

Cgroup Driver: systemd

docker and kubelet must use the same cgroup driver. If docker is not using systemd, run:

# I also configured a private registry address (Harbor)

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://XXXX.mirror.aliyuncs.com"],

"insecure-registries" : ["10.10.31.205"],

"exec-opts": ["native.cgroupdriver=systemd"],

"iptables": false

}

EOF

systemctl daemon-reload && systemctl restart docker

# Configure networking

# Configure IPVS

yum install ipset ipvsadm conntrack-tools.x86_64 expect -y

cat > /etc/sysconfig/modules/ipvs.modules << EOF
#!/bin/bash
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_fo ip_vs_sh ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack_ipv4"
for kernel_module in \${ipvs_modules}; do
  /sbin/modinfo -F filename \${kernel_module} > /dev/null 2>&1
  if [ \$? -eq 0 ]; then
    /sbin/modprobe \${kernel_module}
  fi
done
EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

echo "bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs" >> /etc/rc.d/rc.local

chmod +x /etc/rc.d/rc.local

# Configure kernel parameters

sed -i 7,9s/0/1/g /usr/lib/sysctl.d/00-system.conf

echo 'net.ipv4.ip_forward = 1' >> /usr/lib/sysctl.d/00-system.conf

echo 'vm.swappiness = 0' >> /usr/lib/sysctl.d/00-system.conf

sysctl --system

sysctl -p /usr/lib/sysctl.d/00-system.conf

Aliyun repository for the kubelet, kubeadm, and kubectl packages

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

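# Install the kubernetes binaries

yum install -y kubelet kubeadm kubectl

# Configure the cgroup driver

I use systemd.

Replace the address 10.10.31.205/k8s/pause:3.1 with the name of your own image.

On every Kubernetes node, set kubelet to use the same cgroup driver as dockerd (systemd in my case); otherwise kubelet fails to start.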

DOCKER_CGROUPS=$(docker info | grep 'Cgroup' | cut -d' ' -f3)

echo $DOCKER_CGROUPS
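The settings described above end up in /etc/sysconfig/kubelet. The following is a minimal sketch of what that file might contain, not the author's exact file: it assumes the KUBELET_EXTRA_ARGS variable that the kubeadm RPM packages read, together with the kubelet flags --cgroup-driver and --pod-infra-container-image. The helper name render_kubelet_sysconfig is made up for this example.

```shell
#!/bin/sh
# Sketch: render the one-line content of /etc/sysconfig/kubelet.
# render_kubelet_sysconfig is a hypothetical helper name.

render_kubelet_sysconfig() {
  # $1: cgroup driver reported by docker (systemd or cgroupfs)
  # $2: pause image in your own registry
  printf 'KUBELET_EXTRA_ARGS="--cgroup-driver=%s --pod-infra-container-image=%s"\n' "$1" "$2"
}

# Demo: print the line for the values used in this article.
render_kubelet_sysconfig systemd 10.10.31.205/k8s/pause:3.1
```

On a node, the output would be redirected into the file, for example render_kubelet_sysconfig "$DOCKER_CGROUPS" 10.10.31.205/k8s/pause:3.1 > /etc/sysconfig/kubelet, followed by systemctl daemon-reload and systemctl enable kubelet.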

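cat > /etc/sysconfig/kubelet <

### Import the images

Run this on every node.

Link: https://pan.baidu.com/s/1b65YnQvQcmNtNflJvzvifQ Password: di5a

Retag the images at this point if you need to; I keep mine in a private registry.

docker load -i k8s1.13.0.tar

# There are 9 images in total: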

sai:~ ws$ docker images | grep 10.10.31.205/k8s/

10.10.31.205/k8s/kube-proxy v1.13.0 8fa56d18961f 6 days ago 80.2MB

10.10.31.205/k8s/kube-apiserver v1.13.0 f1ff9b7e3d6e 6 days ago 181MB

10.10.31.205/k8s/kube-controller-manager v1.13.0 d82530ead066 6 days ago 146MB

10.10.31.205/k8s/kube-scheduler v1.13.0 9508b7d8008d 6 days ago 79.6MB

10.10.31.205/k8s/coredns 1.2.6 f59dcacceff4 5 weeks ago 40MB

10.10.31.205/k8s/etcd 3.2.24 3cab8e1b9802 2 months ago 220MB

10.10.31.205/k8s/kubernetes-dashboard-amd64 v1.10.0 0dab2435c100 3 months ago 122MB

10.10.31.205/k8s/flannel v0.10.0-amd64 f0fad859c909 10 months ago 44.6MB

10.10.31.205/k8s/pause 3.1 da86e6ba6ca1 11 months ago 742kB
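If your registry differs from 10.10.31.205/k8s, the retagging mentioned above can be scripted. This is a sketch under assumptions: NEW_PREFIX is a hypothetical registry path, and the helper names retarget_image and retag_all are invented for the example. Only the name mapping runs in the demo; retag_all needs a running docker daemon and is left for you to call.

```shell
#!/bin/sh
# Sketch: retag the loaded images for a different registry.
OLD_PREFIX="10.10.31.205/k8s"
NEW_PREFIX="registry.example.com/k8s"   # hypothetical; replace with your registry

retarget_image() {
  # Print image name $1 with OLD_PREFIX swapped for NEW_PREFIX.
  rest="${1#"$OLD_PREFIX"}"
  printf '%s\n' "${NEW_PREFIX}${rest}"
}

retag_all() {
  # Tag every local image under OLD_PREFIX with its NEW_PREFIX name.
  docker images --format '{{.Repository}}:{{.Tag}}' | grep "^${OLD_PREFIX}/" |
  while read -r img; do
    new="$(retarget_image "$img")"
    docker tag "$img" "$new"
    echo "tagged $img as $new"
  done
}

# Demo: show the mapping for one image without touching docker.
retarget_image "10.10.31.205/k8s/pause:3.1"
```

After retag_all, the new names can be pushed with docker push if the other nodes should pull them from the registry.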

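Deploy the master node with kubeadm init (run on the master node only)

This uses the simplest and quickest deployment option. The etcd, API server, controller-manager, and scheduler services all run as containers on the master. etcd is a single instance without certificates, and its data is mounted at /var/lib/etcd on the master node.

When running init, take care to specify the version and the pod network range.

# Specifying the version is optional. For the network, choose a range that does not conflict with your hosts. Mine are on a 10.x network, so I deploy the pods on 192.168.0.0/16.

kubeadm init --pod-network-cidr=192.168.0.0/16

## For reference: # kubeadm init --kubernetes-version=v1.13.0 --pod-network-cidr=10.244.0.0/16

Write down the join command from the output; the worker nodes need it later to join the cluster. Then run the commands from the prompt to save the kubeconfig: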

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Allow pods to run on the master

kubectl taint nodes --all node-role.kubernetes.io/master-

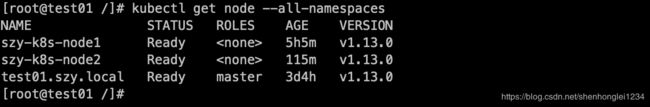

kubectl get node now shows the master node. It is NotReady because the network plugin has not been deployed yet.

[root@szy-k8s-master k8s]# kubectl get node

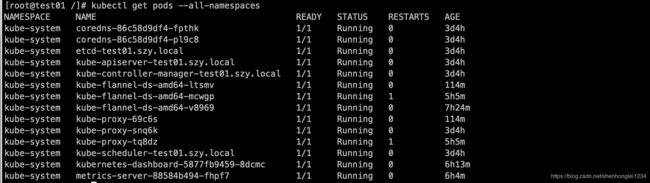

[root@szy-k8s-master k8s]# kubectl get pod --all-namespaces

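Installing flannel

Link: https://pan.baidu.com/s/140RcYA71VwWnfWE0aARhqA Password: y9eu

Note: apart from the image name, nothing else needs to be changed.

image: 10.10.31.205/k8s/flannel:v0.10.0-amd64 must be changed to the address of your own private registry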

cat > network.yaml << EOF

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "192.168.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: 10.10.31.205/k8s/flannel:v0.10.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: 10.10.31.205/k8s/flannel:v0.10.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

EOF
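Since the image name is the only thing that has to change in network.yaml, the edit can be scripted instead of done by hand. The sketch below is one possible approach: the helper name rewrite_registry and the registry registry.example.com/k8s are assumptions made for illustration, and the demo runs on a throwaway file.

```shell
#!/bin/sh
# Sketch: rewrite the image registry prefix in a manifest before applying it.
# rewrite_registry is a hypothetical helper name.

rewrite_registry() {
  # $1: manifest file, $2: old registry prefix, $3: new registry prefix
  file="$1"
  # Escape regex metacharacters (such as the dots of an IP address) in the old prefix.
  old_re="$(printf '%s' "$2" | sed 's/[][\.*^$|]/\\&/g')"
  tmp="$(mktemp)"
  # "|" is the sed delimiter, so the new prefix must not contain "|", "&" or "\".
  sed "s|${old_re}|$3|g" "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Demo on a throwaway file that holds one image line from the manifest.
demo="$(mktemp)"
echo 'image: 10.10.31.205/k8s/flannel:v0.10.0-amd64' > "$demo"
rewrite_registry "$demo" '10.10.31.205/k8s' 'registry.example.com/k8s'
cat "$demo"
rm -f "$demo"
```

For the real file, the call would be rewrite_registry network.yaml '10.10.31.205/k8s' followed by your own registry prefix. The same helper works for dashboard.yaml.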

kubectl apply -f network.yaml

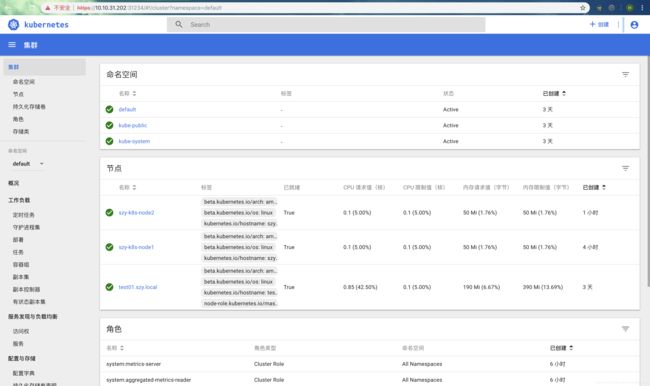

Installing Dashboard

Link: https://pan.baidu.com/s/140RcYA71VwWnfWE0aARhqA Password: y9eu

nodePort: 31234. After a successful installation, the dashboard is reachable over HTTPS at a node's host name on this port.

#Dashboard

cat > dashboard.yaml << EOF

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---
# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: 10.10.31.205/k8s/kubernetes-dashboard-amd64:v1.10.0
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---
# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 31234
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort

EOF

kubectl apply -f dashboard.yaml

Creating a login token

# Create the login token

cat > admin-user-role-binding.yaml << EOF

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile

EOF

kubectl apply -f admin-user-role-binding.yaml

Getting the token

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin | awk '{print $1}')

Q&A:

Problem 1: the firewall was not disabled. Check this carefully during installation.

Problem 2: on CentOS 7, setting bridge-nf-call-ip6tables fails with No such file or directory

Solution:

[root@localhost ~]# modprobe br_netfilter

[root@localhost ~]# ls /proc/sys/net/bridge

bridge-nf-call-arptables bridge-nf-filter-pppoe-tagged

bridge-nf-call-ip6tables bridge-nf-filter-vlan-tagged

bridge-nf-call-iptables bridge-nf-pass-vlan-input-dev

[root@localhost ~]# sysctl -p

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
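modprobe br_netfilter does not survive a reboot, so the error can come back. Below is a minimal sketch of one way to make the fix persistent, assuming systemd loads modules listed under /etc/modules-load.d at boot and sysctl --system reads /etc/sysctl.d. The file names br_netfilter.conf and k8s.conf and the helper write_bridge_netfilter_conf are choices made for this example. The demo writes into a temporary directory instead of the real /etc.

```shell
#!/bin/sh
# Sketch: persist the br_netfilter module and the bridge sysctl settings.
# write_bridge_netfilter_conf is a hypothetical helper name.

write_bridge_netfilter_conf() {
  # $1: filesystem root to write under; pass "/" on a real host
  root="${1%/}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # Ask systemd to load br_netfilter at boot.
  echo br_netfilter > "$root/etc/modules-load.d/br_netfilter.conf"
  # Same two settings that sysctl -p reports above.
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Demo: write into a temporary directory and show the result.
demo_root="$(mktemp -d)"
write_bridge_netfilter_conf "$demo_root"
cat "$demo_root/etc/modules-load.d/br_netfilter.conf"
cat "$demo_root/etc/sysctl.d/k8s.conf"
rm -rf "$demo_root"
```

On a real node, run write_bridge_netfilter_conf / as root, then modprobe br_netfilter and sysctl --system to apply the settings without rebooting.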

Problem 3: unable to fetch the kubeadm-config ConfigMap: failed to get config map: Unauthorized

# [preflight] Running pre-flight checks

# [WARNING SystemVerification]: this Docker version is not on the list of validated versions: 18.04.0-ce. Latest validated version: 18.06

# [WARNING Hostname]: hostname "szy-k8s-node1" could not be reached

# [WARNING Hostname]: hostname "szy-k8s-node1": lookup szy-k8s-node1 on 10.10.10.21:53: server misbehaving

# [discovery] Trying to connect to API Server "10.10.31.202:6443"

# [discovery] Created cluster-info discovery client, requesting info from "https://10.10.31.202:6443"

# [discovery] Requesting info from "https://10.10.31.202:6443" again to validate TLS against the pinned public key

# [discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "10.10.31.202:6443"

# [discovery] Successfully established connection with API Server "10.10.31.202:6443"

# [join] Reading configuration from the cluster...

# [join] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

# unable to fetch the kubeadm-config ConfigMap: failed to get config map: Unauthorized

# kubeadm token create

# kubeadm token list

Solution: the token has expired. Tokens are valid for 24 hours, so generate a new one.

# Generate a new token

[root@test01 /]# kubeadm token create

u7w3un.e781f1n6sq0ocfmo

[root@test01 /]# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

jjcku9.1o65bpdk4oqhj9g8 19h 2018-12-11T16:05:11+08:00 authentication,signing system:bootstrappers:kubeadm:default-node-token

spxuf3.2rikngiu4k8gfk98 2018-12-08T16:53:12+08:00 authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

u7w3un.e781f1n6sq0ocfmo 23h 2018-12-11T20:58:32+08:00 authentication,signing system:bootstrappers:kubeadm:default-node-token
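With a fresh token, the worker node can run kubeadm join again. The sketch below shows how the join command is put together, assuming the API server endpoint 10.10.31.202:6443 from the log above. The helper names build_join_command and ca_cert_hash are made up for this example, and the hash in the demo is a placeholder, not a real value. Running kubeadm token create --print-join-command on the master prints a complete join command in one step.

```shell
#!/bin/sh
# Sketch: assemble a kubeadm join command from its parts.
# build_join_command and ca_cert_hash are hypothetical helper names.

build_join_command() {
  # $1: API server endpoint, $2: bootstrap token, $3: CA public key hash (hex)
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' "$1" "$2" "$3"
}

ca_cert_hash() {
  # $1: cluster CA certificate, /etc/kubernetes/pki/ca.crt on a kubeadm master
  openssl x509 -pubkey -in "$1" | openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex | sed 's/^.* //'
}

# Demo with the token created above and a placeholder instead of a real hash.
build_join_command 10.10.31.202:6443 u7w3un.e781f1n6sq0ocfmo 'HASH_PLACEHOLDER'
```

On the master, the real hash would come from ca_cert_hash /etc/kubernetes/pki/ca.crt.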

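Problem 4: on every Kubernetes node, set kubelet to use systemd so that dockerd and kubelet use the same cgroup driver; otherwise kubelet fails to start

By default kubelet uses cgroup-driver=cgroupfs; change it to cgroup-driver=systemd

# Configure the cgroup driver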

DOCKER_CGROUPS=$(docker info | grep 'Cgroup' | cut -d' ' -f3)

echo $DOCKER_CGROUPS

cat > /etc/sysconfig/kubelet <